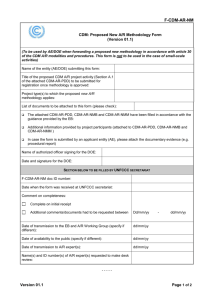

ESnet - Office of Research & Economic Development

Cyberinfrastructure and Networks: The Advanced Networks and Services Underpinning the Large-Scale Science of DOE’s Office of Science

William E. Johnston
ESnet Manager and Senior Scientist
Lawrence Berkeley National Laboratory

ESnet Provides Global High-Speed Internet Connectivity for DOE Facilities and Collaborators (ca. Summer, 2005)

[Figure: map of the ESnet IP core (Packet over SONET optical ring and hubs), the ESnet Science Data Network (SDN) core, the connected sites, and the peering points.]

• International connections shown: Japan (SINet), Australia (AARNet), Canada (CA*net4), Taiwan (TANet2, ASCC), Singaren, France, GLORIAD (Russia, China), Korea (Kreonet2), Netherlands, Russia (BINP), CERN (USLHCnet, CERN+DOE funded), GEANT (France, Germany, Italy, UK, etc.)
• Peering and exchange points shown: MREN, StarTap, MAE-E, Equinix, PAIX-PA
• Sites and hubs labeled on the map: LIGO, PNNL, MIT, BNL, JGI, LBNL, NERSC, SLAC, TWC, QWEST ATM, LLNL, SNLL, FNAL, ANL, AMES Lab, DC Offices, PPPL, YUCCA MT, OSC GTN NNSA, KCP, OSTI, LANL, ARM, GA, JLAB, SNLA, ORNL, ORAU, NOAA, SRS, Allied Signal
• 42 end user sites
  o Office of Science sponsored (22)
  o NNSA sponsored (12)
  o Joint sponsored (3)
  o Other sponsored (NSF LIGO, NOAA)
  o Laboratory sponsored (6)
• Legend, nodes: commercial and R&E peering points; ESnet core hubs; high-speed peering points with Internet2/Abilene
• Legend, links: International (high speed); 10 Gb/s SDN core; 10 Gb/s IP core; 2.5 Gb/s IP core; MAN rings (≥ 10 Gb/s); OC12 ATM (622 Mb/s); OC12 / GigEthernet; OC3 (155 Mb/s); 45 Mb/s and less

DOE Office of Science Drivers for Networking

• The role of ESnet is to provide networking for the Office of Science Labs and their collaborators
• The large-scale science that is the mission of the Office of Science is dependent on networks for
  o Sharing of massive amounts of data
  o Supporting thousands of collaborators world-wide
  o Distributed data processing
  o Distributed simulation, visualization, and computational steering
  o Distributed data management
• These issues were explored in two Office of Science workshops that formulated networking requirements to meet the needs of the science programs (see refs.)
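To give a rough feel for what the link classes in the map legend mean for "sharing of massive amounts of data", the following sketch computes the ideal time needed to move a dataset over several of the listed line rates. The 1 TB dataset size, the use of decimal units, and the function name are illustrative assumptions, not figures from the slides; real transfers take longer because of protocol overhead and competing traffic.

```python
# Nominal line rates in bits per second, taken from the map legend.
LINK_RATES_BPS = {
    "45 Mb/s": 45e6,
    "OC3 (155 Mb/s)": 155e6,
    "OC12 (622 Mb/s)": 622e6,
    "2.5 Gb/s IP core": 2.5e9,
    "10 Gb/s core": 10e9,
}


def ideal_transfer_hours(size_bytes: float, rate_bps: float) -> float:
    """Lower bound on transfer time: payload bits divided by the line rate."""
    return size_bytes * 8 / rate_bps / 3600


if __name__ == "__main__":
    one_terabyte = 1e12  # hypothetical dataset size (decimal TB)
    for name, rate in LINK_RATES_BPS.items():
        hours = ideal_transfer_hours(one_terabyte, rate)
        print(f"{name:>18}: {hours:8.2f} h")
```

Under these assumptions the same terabyte that occupies a 45 Mb/s tail circuit for about two days crosses a 10 Gb/s path in well under an hour, which is the gap the later slides on site access bandwidth are concerned with.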
CERN / LHC High Energy Physics Data Provides One of Science’s Most Challenging Data Management Problems
(CMS is one of several experiments at LHC)

[Figure: tiered LHC data distribution model. Courtesy Harvey Newman, CalTech.]

• CERN LHC CMS detector: 15m X 15m X 22m, 12,500 tons, $700M
• Tiers shown
  o Online System
  o Tier 0 +1: CERN (event simulation, event reconstruction)
  o Tier 1: regional centers (German Regional Center, French Regional Center, Italian Center, FermiLab USA Regional Center)
  o Tier 2: Tier2 Centers (analysis)
  o Tier 3: Institutes (~0.25 TIPS, physics data cache)
  o Tier 4: Workstations
• Data rates shown between tiers, from the detector outward: ~PByte/sec, ~100 MBytes/sec, 2.5-40 Gbits/sec, ~0.6-2.5 Gbps, ~0.6-2.5 Gbps, 100 - 1000 Mbits/sec
• 2000 physicists in 31 countries are involved in this 20-year experiment in which DOE is a major player.
• Grid infrastructure spread over the US and Europe coordinates the data analysis

LHC Networking

• This picture represents the MONARC model, a hierarchical, bulk data transfer model
• Still accurate for Tier 0 (CERN) to Tier 1 (experiment data centers) data movement
• Probably not accurate for the Tier 2 (analysis) sites

Example: Complicated Workflow - Many Sites

[Figure: workflow spanning many sites.]

Distributed Workflow

• Distributed / Grid based workflow systems involve many interacting computing and storage elements that rely on “smooth” inter-element communication for effective operation
• The new LHC Grid based data analysis model will involve networks connecting dozens of sites and thousands of systems for each analysis “center”

Example: Multidisciplinary Simulation

[Figure: coupled climate and ecosystem model components. Courtesy Gordon Bonan, NCAR: Ecological Climatology: Concepts and Applications. Cambridge University Press, Cambridge, 2002.]
[Figure labels: processes grouped by time scale (Minutes-To-Hours, Days-To-Weeks, Years-To-Centuries), covering climate, chemistry, biogeophysics, biogeochemistry, microclimate and canopy physiology, phenology, hydrology, ecosystems, watersheds, and the hydrologic cycle.]

A “complete” approach to climate modeling involves many interacting models and data that are provided by different groups at different locations (Tim Killeen, NCAR)

Distributed Multidisciplinary Simulation

• Distributed multidisciplinary simulation involves integrating computing elements at several remote locations
  o Requires co-scheduling of computing, data storage, and network elements
  o Also Quality of Service (e.g. bandwidth guarantees)
  o There is not a lot of experience with this scenario yet, but it is coming (e.g. the new Office of Science supercomputing facility at Oak Ridge National Lab has a distributed computing elements model)

Projected Science Requirements for Networking

| Science Areas considered in the Workshop [1] (not including Nuclear Physics and Supercomputing) | Today End2End Throughput | 5 years End2End Documented Throughput Requirements | 5-10 Years End2End Estimated Throughput Requirements | Remarks |
|---|---|---|---|---|
| High Energy Physics | 0.5 Gb/s | 100 Gb/s | 1000 Gb/s | high bulk throughput with deadlines (Grid based analysis systems require QoS) |
| Climate (Data & Computation) | 0.5 Gb/s | 160-200 Gb/s | N x 1000 Gb/s | high bulk throughput |
| SNS NanoScience | Not yet started | 1 Gb/s | 1000 Gb/s | remote control and time critical throughput (QoS) |
| Fusion Energy | 0.066 Gb/s (500 MB/s burst) | 0.198 Gb/s (500MB/ 20 sec. burst) | N x 1000 Gb/s | time critical throughput (QoS) |
| Astrophysics | 0.013 Gb/s (1 TBy/week) | N*N multicast | 1000 Gb/s | computational steering and collaborations |
| Genomics Data & Computation | 0.091 Gb/s (1 TBy/day) | 100s of users | 1000 Gb/s | high throughput and steering |

Observed Drivers for the Evolution of ESnet

ESnet is currently transporting about 530 Terabytes/mo., and this volume is increasing exponentially.

[Figure: ESnet Monthly Accepted Traffic, Feb., 1990 to May, 2005. Vertical axis: TBytes/Month, 0 to 600.]

Who Generates ESnet Traffic?

ESnet Inter-Sector Traffic Summary, Jan 03 / Feb 04 / Nov 04

[Figure: traffic flows between the DOE sites, ESnet, and the peering points (commercial; R&E, mostly universities; international, almost entirely R&E sites). Legend: green = traffic coming into ESnet; blue = traffic leaving ESnet; a third style marks traffic between ESnet sites; % = of total ingress or egress traffic. Percentage labels on the figure, in the order they appear in the extracted text: 72/68/62% (DOE sites), 21/14/10%, ~25/19/13%, 14/12/9%, 17/10/14%, 10/13/16%, 9/26/25%, 4/6/13%, 53/49/50% (DOE collaborator traffic, inc. data).]

• DOE is a net supplier of data because DOE facilities are used by universities and commercial entities, as well as by DOE researchers
• Note
  o more than 90% of the ESnet traffic is OSC traffic
  o less than 20% of the traffic is inter-Lab

A Small Number of Science Users Account for a Significant Fraction of all ESnet Traffic

ESnet Top 100 Host-to-Host Flows, Feb., 2005

[Figure: bar chart of the top 100 flows. Vertical axis: TBytes/Month, 0 to 12.]

• Flow classes
  o Class 1: DOE Lab-International R&E
  o Class 2: Lab-U.S. (domestic) R&E
  o Class 3: Lab-Lab (domestic)
  o Class 4: Lab-Comm. (domestic)
• Total ESnet traffic Feb., 2005 = 323 TBy in approx. 6,000,000,000 flows
• Top 100 flows = 84 TBy
• All other flows: < 0.28 TBy/month each
• Notes
  1) This data does not include intra-Lab (LAN) traffic (ESnet ends at the Lab border routers, so science traffic on the Lab LANs is invisible to ESnet)
  2) Some Labs have private links that are not part of ESnet; that traffic is not represented here.

Source and Destination of the Top 30 Flows, Feb. 2005

[Figure: bar chart of the top 30 flows in Terabytes/Month (0 to 12), grouped as DOE Lab-International R&E, Lab-U.S. R&E (domestic), Lab-Lab (domestic), and Lab-Comm. (domestic). The assignment of flows to groups cannot be recovered from the extracted text.]

Endpoint pairs, in the order the labels appear:

• SLAC (US) and RAL (UK)
• Fermilab (US) and WestGrid (CA)
• SLAC (US) and IN2P3 (FR)
• LIGO (US) and Caltech (US)
• SLAC (US) and Karlsruhe (DE)
• LLNL (US) and NCAR (US)
• SLAC (US) and INFN CNAF (IT)
• Fermilab (US) and MIT (US)
• Fermilab (US) and SDSC (US)
• Fermilab (US) and Johns Hopkins
• Fermilab (US) and Karlsruhe (DE)
• IN2P3 (FR) and Fermilab (US)
• LBNL (US) and U. Wisc. (US)
• Fermilab (US) and U. Texas, Austin (US)
• BNL (US) and LLNL (US) (four flows)
• Fermilab (US) and UC Davis (US)
• Qwest (US) and ESnet (US)
• Fermilab (US) and U. Toronto (CA)
• CERN (CH) and BNL (US)
• NERSC (US) and LBNL (US) (five flows)
• DOE/GTN (US) and JLab (US)
• U. Toronto (CA) and Fermilab (US)
• CERN (CH) and Fermilab (US)

Observed Drivers for ESnet Evolution

• The observed combination of
  o exponential growth in ESnet traffic, and
  o large science data flows becoming a significant fraction of all ESnet traffic
  shows that the projections of the science community are reasonable and are being realized
• The current predominance of international traffic is due to high-energy physics
  o However, all of the LHC US tier-2 data analysis centers are at US universities
  o As the tier-2 centers come on-line, the DOE Lab to US university traffic will increase substantially
• High energy physics is several years ahead of the other science disciplines in data generation
  o Several other disciplines and facilities (e.g. climate modeling and the supercomputer centers) will contribute comparable amounts of additional traffic in the next few years

DOE Science Requirements for Networking

1) Network bandwidth must increase substantially, not just in the backbone but all the way to the sites and the attached computing and storage systems
2) A highly reliable network is critical for science: when large-scale experiments depend on the network for success, the network must not fail
3) There must be network services that can guarantee various forms of quality-of-service (e.g., bandwidth guarantees) and provide traffic isolation
4) A production, extremely reliable, IP network with Internet services must support Lab operations and the process of small and medium scale science

ESnet’s Place in U. S. and International Science

• ESnet and Abilene together provide most of the nation’s transit networking for basic science
  o Abilene provides national transit networking for most of the US universities by interconnecting the regional networks (mostly via the GigaPoPs)
  o ESnet provides national transit networking for the DOE Labs
• ESnet differs from Internet2/Abilene in that
  o Abilene interconnects regional R&E networks; it does not connect sites or provide commercial peering
  o ESnet serves the role of a tier 1 ISP for the DOE Labs
    - Provides site connectivity
    - Provides full commercial peering so that the Labs have full Internet access

ESnet and GEANT

• GEANT plays a role in Europe similar to Abilene and ESnet in the US: it interconnects the European National Research and Education Networks, to which the European R&E sites connect
• GEANT currently carries essentially all ESnet traffic to Europe (LHC use of LHCnet to CERN is still ramping up)

Ensuring High Bandwidth, Cross Domain Flows

• ESnet and Abilene have recently established high-speed interconnects and cross-network routing
• Goal is that DOE Lab ↔ Univ. connectivity should be as good as Lab ↔ Lab and Univ. ↔ Univ.
• Constant monitoring is the key
  o US LHC Tier 2 sites need to be incorporated
• The Abilene-ESnet-GEANT joint monitoring infrastructure is expected to become operational over the next several months (by mid-fall, 2005)

Monitoring DOE Lab ↔ University Connectivity

• Current monitor infrastructure (red & green) and target infrastructure
• Uniform distribution around ESnet and around Abilene
• All US LHC tier-2 sites will be added as monitors

[Figure: map of the ESnet and Abilene monitoring points. Labels: AsiaPac, SEA, CERN, Europe, Japan, LBNL, FNAL, BNL, OSU, SDSC, CHI, NYC, DEN, SNV, DC, KC, IND, LA, NCS*, SDG, ALB, ELP, HOU, ATL. Legend: DOE Labs w/ monitors; Universities w/ monitors; Initial site monitors; network hubs; high-speed cross connects ESnet ↔ Internet2/Abilene (scheduled for FY05); ESnet; Abilene; * = intermittent.]

One Way Packet Delays Provide a Fair Bit of Information

[Figure: plots of one-way packet delay between sites, annotated as follows.]

• Normal: fixed delay from one site to another that is primarily a function of geographic separation
• The result of a congested tail circuit to FNAL
• The result of problems with the monitoring system at CERN, not the network

Strategy For The Evolution of ESnet

A three part strategy for the evolution of ESnet

1) Metropolitan Area Network (MAN) rings to provide
   - dual site connectivity for reliability
   - much higher site-to-core bandwidth
   - support for both production IP and circuit-based traffic
2) A Science Data Network (SDN) core for
   - provisioned, guaranteed bandwidth circuits to support large, high-speed science data flows
   - very high total bandwidth
   - multiply connecting MAN rings for protection against hub failure
   - alternate path for production IP traffic
3) A High-reliability IP core (e.g. the current ESnet core) to address
   - general science requirements
   - Lab operational requirements
   - Backup for the SDN core
   - vehicle for science services

Strategy For The Evolution of ESnet: Two Core Networks and Metro. Area Rings

[Figure: map of the two core networks and the metropolitan area rings, with international connections to CERN, Asia-Pacific, GEANT (Europe), and Australia; the Science Data Network (SDN) core uses NLR circuits.]
[Figure labels: Sunnyvale, New York, Washington, DC, LA, Albuquerque, San Diego, IP Core. Legend: IP core hubs; SDN/NLR hubs; Primary DOE Labs; New hubs; Metropolitan Area Rings; Production IP core (10-20 Gbps); Science Data Network core (30-50 Gbps); Metropolitan Area Networks (20+ Gbps); Lab supplied (10+ Gbps); International connections (10-40 Gbps).]

ESnet MAN Architecture (e.g. Chicago)

[Figure: MAN ring built from switches managing multiple lambdas, with 2-4 x 10 Gbps channels. Core routers (T320) attach the ring to the ESnet SDN core and the ESnet production IP core; R&E peerings and international peerings enter via Starlight and Qwest. The sites shown (ANL, FNAL) each have site equipment, a site gateway router, a Site LAN, and a monitor. Services carried: ESnet managed λ / circuit services; ESnet managed λ / circuit services tunneled through the IP backbone; ESnet production IP service; ESnet management and monitoring.]

First Two Steps in the Evolution of ESnet

1) The SF Bay Area MAN will provide to the five OSC Bay Area sites
   o Very high speed site access: 20 Gb/s
   o Fully redundant site access
2) The first two segments of the second national 10 Gb/s core, the Science Data Network, are San Diego to Sunnyvale to Seattle

ESnet SF Bay Area MAN Ring (Sept., 2005)

• 2 λs (2 X 10 Gb/s channels) in a ring configuration, and delivered as 10 GigEther circuits
  - 10-50X current site bandwidth
• Dual site connection (independent “east” and “west” connections) to each site
• Will be used as a 10 Gb/s production IP ring and 2 X 10 Gb/s paths (for circuit services) to each site
• Qwest contract signed for two lambdas 2/2005 with options on two more
• Project completion date is 9/2005

[Figure: SF Bay Area ring of 10 Gb/s optical channels (λ1 production IP, λ2 SDN/circuits, λ3 future, λ4 future) connecting the Joint Genome Institute, NERSC, LBNL, LLNL, SNLL, SLAC, and NASA Ames. External connections: SDN to Seattle (NLR), SDN to San Diego, IP core to Chicago (Qwest), IP core to El Paso, DOE Ultra Science Net (research net). Legend: Level 3 hub; Qwest / ESnet hub; ESnet MAN ring (Qwest circuits); ESnet hubs and sites.]

SF Bay Area MAN - Typical Site Configuration

[Figure: site ESnet 6509 switch attached to the SF BA MAN on the West (λ1 and λ2) and East (λ1 and λ2) sides, and to the Site LAN.]

• Max. of 2x10G connections on any line card to avoid switch limitations
• Traffic on the ring side
  o 0-10 Gb/s drop-off IP traffic
  o 0-20 Gb/s VLAN traffic
  o 0-10 Gb/s pass-through VLAN traffic
  o 0-10 Gb/s pass-through IP traffic
• Connections to the Site LAN
  o nx1GE or 10GE IP
  o 1 or 2 x 10 GE (provisioned circuits via VLANS)
• Line cards
  o 24 x 1 GE line cards
  o 4 x 10 GE line cards (using 2 ports max. per card)

Evolution of ESnet - Step One: SF Bay Area MAN and West Coast SDN

[Figure: map as in the two-core strategy slide (CERN, Asia-Pacific, GEANT (Europe), Australia; Science Data Network Core (SDN) on NLR circuits; IP Core on Qwest; hubs at Sunnyvale, New York, Washington, DC, LA, San Diego, Albuquerque, and El Paso). Legend: IP core hubs; SDN/NLR hubs; Primary DOE Labs; New hubs; Metropolitan Area Rings; Production IP core; Science Data Network core; Metropolitan Area Networks; Lab supplied; International connections; in service by Sept., 2005; planned.]

ESnet Goal - 2009/2010

• 10 Gbps enterprise IP traffic
• 40-60 Gbps circuit based transport

[Figure: map of the planned network. Labels include hubs SEA, CHI, SNV, NYC, DEN, and DC; connections to Europe, CERN, Japan, AsiaPac, and Australia; Metropolitan Area Rings; and the ESnet Science Data Network (2nd Core, 30-50 Gbps, National Lambda Rail).]
[Figure labels: hubs ALB, SDG, ATL, and ELP; ESnet IP Core (≥10 Gbps). Legend: ESnet hubs; New ESnet hubs; Metropolitan Area Rings; Major DOE Office of Science Sites; High-speed cross connects with Internet2/Abilene; Production IP ESnet core; Science Data Network core; Lab supplied; Major international. Link capacities shown: 10Gb/s, 10Gb/s, 30Gb/s, 40Gb/s.]

Near-Term Needs for LHC Networking

• The data movement requirements of the several experiments at the CERN/LHC are considerable
• Original MONARC model (CY2000; Models of Networked Analysis at Regional Centres for LHC Experiments; Harvey Newman’s slide, above) predicted
  o Initial need for 10 Gb/s dedicated bandwidth for LHC startup (2007) to each of the US Tier 1 Data Centers
    - By 2010 the number is expected to be 20-40 Gb/s per Center
  o Initial need for 1 Gb/s from the Tier 1 Centers to each of the associated Tier 2 centers
• However, with the LHC commitment to Grid based data analysis systems, the expected bandwidth and network service requirements for the Tier 2 centers are much greater than in the MONARC bulk data movement model
  o MONARC still probably holds for the Tier 0 (CERN) to Tier 1 transfers
  o For widely distributed Grid workflow systems QoS is considered essential
    - Without a smooth flow of data between workflow nodes the overall system would likely be very inefficient due to stalling the computing and storage elements
• Both high bandwidth and QoS network services must be addressed for LHC data analysis

Proposed LHC high-level architecture

[Figure: proposed LHC high-level architecture. Source: LHC Network Operations Working Group, LHC Computing Grid Project.]

Near-Term Needs for North American LHC Networking

• Primary data paths from LHC Tier 0 to Tier 1 Centers will be dedicated 10 Gb/s circuits
• Backup paths must be provided
  o About a day’s worth of data can be buffered at CERN
  o However, unless both the network and the analysis systems are over-provisioned it may not be possible to catch up even when the network is restored
• Three level backup strategy
  o Primary: Dedicated 10G circuits provided by CERN and DOE
  o Secondary: Preemptable 10G circuits (e.g. ESnet’s SDN, NSF’s IRNC links, GLIF, CA*net4)
  o Tertiary: Assignable QoS bandwidth on the production networks (ESnet, Abilene, GEANT, CA*net4)

Proposed LHC high-level architecture

[Figure labels: Tier0; Tier1s; Tier2s; L3 Backbones. Legend: Main connection; Backup connection.]

LHC Networking and ESnet, Abilene, and GEANT

• USLHCnet (CERN+DOE funded) supports US participation in the LHC experiments
  o Dedicated high bandwidth circuits from CERN to the U.S. transfer LHC data to the US Tier 1 data centers (FNAL and BNL)
• ESnet is responsible for getting the data from the trans-Atlantic connection points for the European circuits (Chicago and NYC) to the Tier 1 sites
  o ESnet is also responsible for providing backup paths from the trans-Atlantic connection points to the Tier 1 sites
• Abilene is responsible for getting data from ESnet to the Tier 2 sites
• The new ESnet architecture (Science Data Network) is intended to accommodate the anticipated 20-40 Gb/s from LHC to US (both US tier 1 centers are on ESnet)

ESnet Lambda Infrastructure and LHC T0-T1 Networking

[Figure: map of the ESnet and NLR infrastructure. Labels: CANARIE, TRIUMF, Vancouver, Seattle, Toronto, Boise, BNL, Clev, New York, Denver, KC, Pitts, FNAL, Wash DC, Sunnyvale, Chicago, Raleigh, Phoenix, Albuq., Tulsa, San Diego, Atlanta, Dallas, Jacksonville, El Paso, Las Cruces, LA, San Ant., Houston, Pensacola, Baton Rouge, GEANT-1, GEANT-2, CERN-1, CERN-2, CERN-3. Legend: NLR PoPs; ESnet IP core hubs; ESnet SDN/NLR hubs; Tier 1 Centers; Cross connects with Internet2/Abilene; New hubs; ESnet Production IP core (10-20 Gbps); ESnet Science Data Network core (10G/link) (incremental upgrades, 2007-2010); Other NLR links; CERN/DOE supplied (10G/link); International IP connections (10G/link).]

Abilene* and LHC Tier 2, Near-Term Networking

[Figure: map with the same cities, hubs, and international connections as on the preceding slide.]
[Figure legend: NLR PoPs; ESnet IP core hubs; ESnet SDN/NLR hubs; Tier 1 Centers; Cross connects with Internet2/Abilene; USLHCnet nodes; New hubs; < 10G connections to Abilene; 10G connections to USLHC or ESnet; ESnet Production IP core (10-20 Gbps); ESnet Science Data Network core (10G/link) (incremental upgrades, 2007-2010); Other NLR links; CERN/DOE supplied (10G/link); International IP connections (10G/link).]

Atlas Tier 2 Centers
• University of Texas at Arlington
• University of Oklahoma Norman
• University of New Mexico Albuquerque
• Langston University
• University of Chicago
• Indiana University Bloomington
• Boston University
• Harvard University
• University of Michigan

CMS Tier 2 Centers
• MIT
• University of Florida at Gainesville
• University of Nebraska at Lincoln
• University of Wisconsin at Madison
• Caltech
• Purdue University
• University of California San Diego

QoS - New Network Service

• New network services are critical for ESnet to meet the needs of large-scale science like the LHC
• Most important new network service is dynamically provisioned virtual circuits that provide
  o Traffic isolation - will enable the use of high-performance, non-standard transport mechanisms that cannot co-exist with commodity TCP based transport (see, e.g., Tom Dunigan’s compendium http://www.csm.ornl.gov/~dunigan/netperf/netlinks.html )
  o Guaranteed bandwidth - the only way that we have currently to address deadline scheduling, e.g. where fixed amounts of data have to reach sites on a fixed schedule in order that the processing does not fall behind far enough so that it could never catch up; very important for experiment data analysis

OSCARS: Guaranteed Bandwidth Service

• Must accommodate networks that are shared resources
  o Multiple QoS paths
  o Guaranteed minimum level of service for best effort traffic
  o Allocation management
    - There will be hundreds of contenders with different science priorities
• Virtual circuits must be set up end-to-end across ESnet, Abilene, and GEANT, as well as the campuses
  o There are many issues that are poorly understood
  o To ensure compatibility the work is a collaboration with the other major science R&E networks
    - code is being jointly developed with Internet2's Bandwidth Reservation for User Work (BRUW) project, part of the Abilene HOPI (Hybrid Optical-Packet Infrastructure) project
    - Close cooperation with the GEANT virtual circuit project (“lightpaths”, Joint Research Activity 3 project)
• To address all of the issues is complex
  - There are many potential restriction points
  - There are many users that would like priority service, which must be rationed

[Figure: path between user system1 at site A and user system2 at site B. Components shown: authorization, policer (two), shaper, resource manager (three), bandwidth broker, allocation manager.]

Between ESnet, Abilene, GEANT, and the connected regional R&E networks, there will be dozens of lambdas in production networks that are shared between thousands of users who want to use virtual circuits.
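The OSCARS requirements above (deadline-driven guaranteed bandwidth, a guaranteed minimum for best effort traffic, and rationing among contenders with different priorities) can be illustrated with a small admission-control sketch. This is not the OSCARS design or code: the class names, the single-link model, the priority ordering, and all numbers are hypothetical and chosen only to make the arithmetic concrete.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    user: str
    priority: int       # hypothetical: larger means higher science priority
    data_bytes: float   # fixed amount of data that must arrive
    deadline_s: float   # time available to deliver it

    @property
    def required_bps(self) -> float:
        """Minimum sustained rate that still meets the deadline."""
        return self.data_bytes * 8 / self.deadline_s


@dataclass
class Link:
    capacity_bps: float
    best_effort_floor_bps: float  # bandwidth never handed out to circuits
    granted: dict = field(default_factory=dict)

    @property
    def reservable_bps(self) -> float:
        """Capacity left for circuits after the floor and existing grants."""
        used = sum(self.granted.values())
        return self.capacity_bps - self.best_effort_floor_bps - used

    def admit(self, req: Request) -> bool:
        """Grant the request only if it fits in the remaining pool."""
        if req.required_bps <= self.reservable_bps:
            self.granted[req.user] = req.required_bps
            return True
        return False


def allocate(link: Link, requests: list[Request]) -> dict[str, bool]:
    """Consider contenders in priority order (ties keep submission order)."""
    ordered = sorted(requests, key=lambda r: -r.priority)
    return {r.user: link.admit(r) for r in ordered}


if __name__ == "__main__":
    # Hypothetical 10 Gb/s lambda with 2 Gb/s held back for best effort IP.
    link = Link(capacity_bps=10e9, best_effort_floor_bps=2e9)
    requests = [
        Request("climate", priority=1, data_bytes=20e12, deadline_s=8 * 3600),
        Request("hep", priority=2, data_bytes=20e12, deadline_s=8 * 3600),
    ]
    print(allocate(link, requests))
```

In this made-up case each request needs about 5.6 Gb/s to move 20 TB in eight hours, so only the higher-priority one fits beside the best effort floor. A real service would also have to handle reservations in time, multiple links along an end-to-end path, and the policy questions the slides describe as poorly understood.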
[Figure labels, shared-lambda diagram: US R&E environment; similar situation in Europe.]

Federated Trust Services

• Remote, multi-institutional, identity authentication is critical for distributed, collaborative science in order to permit sharing computing and data resources, and other Grid services
• Managing cross site trust agreements among many organizations is crucial for authentication in collaborative environments
  o ESnet assists in negotiating and managing the cross-site, cross-organization, and international trust relationships to provide policies that are tailored to collaborative science
• The form of the ESnet trust services is driven entirely by the requirements of the science community and direct input from the science community

ESnet Public Key Infrastructure

• ESnet provides Public Key Infrastructure and X.509 identity certificates that are the basis of secure, cross-site authentication of people and Grid systems
• The characteristics and policy of the several PKI certificate issuing authorities are driven by the science community, and policy oversight (the Policy Management Authority, PMA) is provided by the science community + ESnet staff
• These services (www.doegrids.org) provide
  o Several Certification Authorities (CA) with different uses and policies that issue certificates after validating certificate requests against policy
• This service was the basis of the first routine sharing of HEP computing resources between US and Europe
• Root CA is kept off-line in a vault
• Subordinate CAs are kept in locked, alarmed racks in an access controlled machine room and have dedicated firewalls
• CAs with different policies as required by the science community
  o DOEGrids CA has a policy tailored to accommodate international science collaboration
  o NERSC CA policy integrates CA and certificate issuance with NIM (NERSC user accounts management services)
  o FusionGrid CA supports the FusionGrid roaming authentication and authorization services, providing complete key lifecycle management

[Figure: CA hierarchy with the ESnet root CA above the DOEGrids CA, NERSC CA, FusionGrid CA, and further CAs.]

DOEGrids CA (one of several CAs) Usage Statistics

[Figure: number of certificates or requests per month, Jan-03 to Jul-05, vertical axis 0 to 7500. Series: User Certificates; Service Certificates; Expired(+revoked) Certificates; Total Certificates Issued; Total Cert Requests. Production service began in June 2003.]

• User Certificates: 1999
• Host & Service Certificates: 3461
• Total No. of Certificates: 5479
• Total No. of Requests: 7006
• ESnet SSL Server CA Certificates: 38
• DOEGrids CA 2 CA Certificates (NERSC): 15
• FusionGRID CA certificates: 76

* Report as of Jun 15, 2005

DOEGrids CA Usage - Virtual Organization Breakdown

DOEGrids CA Statistics (5479)

• ANL 3.5%
• DOESG 0.3%
• ESG 0.8%
• ESnet 0.4%
• FusionGRID 4.8%
• iVDGL 18.8%
• *Others 41.2%
• LBNL 1.2%
• NERSC 3.2%
• NCC-EPA 0.1%
• LCG 0.6%
• ORNL 0.6%
• PNNL 0.4%
• FNAL 8.9%
• PPDG 15.2%

“Other” is mostly auto renewal certs (via the Replacement Certificate interface), which do not provide VO information

*DOE-NSF collab.
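As a quick consistency check on the Virtual Organization breakdown, the percentages can be summed and converted into approximate certificate counts using the stated total of 5479. The counts below are derived estimates, not numbers reported on the slide, and the function and variable names are illustrative only.

```python
TOTAL_CERTIFICATES = 5479  # "DOEGrids CA Statistics (5479)"

# Percent share per virtual organization, as listed on the slide.
VO_SHARE_PERCENT = {
    "ANL": 3.5, "DOESG": 0.3, "ESG": 0.8, "ESnet": 0.4, "FusionGRID": 4.8,
    "iVDGL": 18.8, "Others": 41.2, "LBNL": 1.2, "NERSC": 3.2, "NCC-EPA": 0.1,
    "LCG": 0.6, "ORNL": 0.6, "PNNL": 0.4, "FNAL": 8.9, "PPDG": 15.2,
}


def approximate_counts(shares: dict, total: int) -> dict:
    """Convert percent shares into rounded (hence approximate) counts."""
    return {vo: round(total * pct / 100) for vo, pct in shares.items()}


if __name__ == "__main__":
    print(f"shares sum to {sum(VO_SHARE_PERCENT.values()):.1f}%")
    counts = approximate_counts(VO_SHARE_PERCENT, TOTAL_CERTIFICATES)
    for vo, n in sorted(counts.items(), key=lambda kv: -kv[1]):
        print(f"{vo:>10}: ~{n}")
```

Because the slide percentages are rounded to one decimal place, the derived counts will not necessarily add up to exactly 5479.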
North American Policy Management Authority

• The Americas Grid, Policy Management Authority
• An important step toward regularizing the management of trust in the international science community
• Driven by European requirements for a single Grid Certificate Authority policy representing scientific/research communities in the Americas
• Investigate Cross-signing and CA Hierarchies support for the science community
• Investigate alternative authentication services
• Peer with the other Grid Regional Policy Management Authorities (PMA)
  o European Grid PMA [www.eugridpma.org]
  o Asian Pacific Grid PMA [www.apgridpma.org]
• Started in Fall 2004 [www.TAGPMA.org]
• Founding members
  o DOEGrids (ESnet)
  o Fermi National Accelerator Laboratory
  o SLAC
  o TeraGrid (NSF)
  o CANARIE (Canadian national R&E network)

References - DOE Network Related Planning Workshops

1) High Performance Network Planning Workshop, August 2002
   http://www.doecollaboratory.org/meetings/hpnpw
2) DOE Science Networking Roadmap Meeting, June 2003
   http://www.es.net/hypertext/welcome/pr/Roadmap/index.html
3) DOE Workshop on Ultra High-Speed Transport Protocols and Network Provisioning for Large-Scale Science Applications, April 2003
   http://www.csm.ornl.gov/ghpn/wk2003
4) Science Case for Large Scale Simulation, June 2003
   http://www.pnl.gov/scales/
5) Workshop on the Road Map for the Revitalization of High End Computing, June 2003
   http://www.cra.org/Activities/workshops/nitrd
   http://www.sc.doe.gov/ascr/20040510_hecrtf.pdf (public report)
6) ASCR Strategic Planning Workshop, July 2003
   http://www.fp-mcs.anl.gov/ascr-july03spw
7) Planning Workshops-Office of Science Data-Management Strategy, March & May 2004
   http://www-conf.slac.stanford.edu/dmw2004