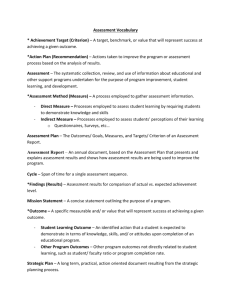

Common Industry Format Usability Testing Checklist


CIF Usability Test Detailed Method Outline
Dilip Chetan, author
Sam Rajkumar, author
Velynda Prakhantree, reviewer, group lead
Amy Chen, reviewer
Somnath Lokesh, reviewer
March 2006
Applications User Experience

Overview
• What is a CIF Usability Test?
• Features of a CIF Test
• When to conduct CIF
• When not to conduct CIF
• Pros & Cons
• Metrics & Definitions
• Investment
• Methodology
• Data Analysis
• Reporting
• Follow up
• Appendix & Resources

What is a CIF Usability Test?
• A formal, lab-based test that benchmarks the usability of a product using performance and subjective data
• Goal: to measure user performance on a set of core tasks in order to predict how usable the product will be for real customers. Users should be domain experts but should not be experienced with the application.
• The name CIF, or Common Industry Format, refers to the standardized format for reporting usability test findings that is used across the software industry
• CIF was developed by a group of more than 300 software organizations led by NIST
• CIF became an ANSI standard in December 2001 (ANSI/NCITS 354-2001)

Features of a CIF Test
• Always tested on beta or live released code.
  Code must be stable enough to collect meaningful timing data.
• Conducted in a controlled environment, almost always in a usability lab
• Users should not be experienced with the application being tested
• Number of users: 10 or more (8 is the absolute minimum)
• Deliverables:
  - Quick findings presentation
  - Report in standard CIF format

When to conduct a CIF Test
• When your focus is on gauging the overall usability of a product (live beta or released code) rather than on probing users' thought processes
• When you want to create a standardized benchmark for comparing future versions of the product, or possibly similar products
• When you have sufficient time: this is a time-intensive activity that takes at least 3 months, typically longer

When NOT to conduct a CIF Test
• When the goals are to get some measures of user performance across participants while keeping the ability to probe the user's thought process as needed during tasks
• When you are not concerned with measuring performance
• When more than one participant is providing feedback on the prototype at the same time
• When there is a desire (and resources) to rapidly iterate through designs in a short time
• When your primary questions do not require a task-based method
• When there is a desire to elicit feedback from users experienced with the application (a demo/interview is most common, but other methods may be used)
• When the product is not sufficiently interactive and needs to be driven by the test administrator
Alternative methods: User Evaluation, Formative Assessment, RITE (Rapid Iterative Testing and Evaluation)

Pros
• Provides quantitative measures of software usability, including time on task
• Allows for benchmarking and future comparisons
• Uses an industry-standard format for reporting findings
• Is a highly structured method of conducting the test, giving the most experimental control for drawing conclusions across participants

Cons
• High investment of time and resources
• Because time on task is measured, the UE cannot probe the participant during the task to get richer feedback
• Feedback about usability issues can only be incorporated into future releases
• Not a means of gathering feedback from users experienced with the application

Required Quantitative Metrics
1. Time on task
2. Number of errors per participant per task
   - Any click or action off the successful path to task completion is an error
   - There may be more than one successful path
3. Number of accesses to help documentation (online or other help sources), when available
4. Number of assists per participant per task
   - Any help or guidance offered by the moderator is an assist. See the “When to Assist Guidelines” document.
   - Users are specifically instructed to “try everything they can” to complete tasks, and are told to say “I'm stuck!” once they have exhausted all ideas about how to continue. See the “Introductory Script”.
5. Unassisted task completion rate (the participant completes the task with 0 assists and within the timeout criterion)
6. Assisted completion rate (the participant completes the task with 2 or fewer assists and within the timeout criterion)

Required Qualitative Metrics
• SUMI (Software Usability Measurement Inventory): a standardized tool for measuring user perceptions of usability.
  See Appendix 1 (Introduction to SUMI) for more details.
• Oracle “4 Universal Scales”: a 4-item Likert scale measuring how “Easy to Use,” “Attractive,” “Useful,” and “Clear and Understandable” users perceive the product to be

Investment
| Activity | Time* |
|---|---|
| Kick-off with product team and proposal (example) | ½ week |
| Secure testable environment | 3-8 weeks |
| Agree on user profile and create screener | 1 week |
| Prepare task script/scenarios** | 1-3 weeks |
| Seed necessary data for tasks | 1 week |
| Recruit participants | 3 weeks |
| Pilot test with 2-3 UX or product team members | 1 week |
| Conduct benchmark session with expert user | 1 day |
| Conduct CIF test | 1-3 weeks |
| Preliminary data analysis | 1 week |
| Give quick findings presentation to key team members | 1 day |
| Finish analysis and give complete findings to entire product team | 1-2 weeks |
| Publish final report | 1-2 weeks |

Notes
• Total time investment averages 3-6 months from kickoff to publishing a report.
• Securing a testable environment is often the biggest variable.
• Securing appropriate participants can also take much longer than anticipated, depending on the user profile.
* Times for steps will vary for each test. Some steps may occur in parallel.
** For non-self-service applications, a 5-10 minute introductory video is also required.

Methodology – 1
• The general methodology involves the following steps:
  - Define a target population
  - Prepare a task list, scenario, and test script
  - Recruit and schedule participants
  - Determine expert times and compute the timeout criterion
  - Conduct pilot testing
  - Conduct tests and gather data
  - Analyze the data
  - Prepare a list of issues and recommendations
  - Prepare the report

Methodology – Benchmark Times and Timeout Criterion: Definitions
• Benchmark Time typically refers to the time taken by an expert user to complete a task.
• Another possible way to arrive at a benchmark time is to use the Product Development team's or the customer's expectation of a reasonable time to complete a task.
• Timeout Criterion refers to the maximum reasonable time for a user to complete a task. The task is regarded as a failure if the user exceeds the timeout criterion.

Methodology – Timeout Criterion
• Methods for arriving at the Timeout Criterion:
  1. Multiplier approach (see the next two slides for details):
     Timeout Criterion = Benchmark Time (expert time) × multiplier (between 2 and 5)
  or
  2. The Product Management/Development team's expectation of the reasonable maximum time for a first-time user to complete a task. For example, “Product install must be completed in X minutes.”
  or
  3. A combination of the two approaches above, applied to different tasks

Methodology – Timeout Criterion
• Multiplier approach: deciding on the multiplier
  - The multiplier should be a number mutually agreed upon by the UX team and the product team
  - Typically it is based on the complexity and/or length of the tasks
  - The multiplier can be the same for all tasks or differ across tasks. For example, if the tasks vary in complexity or length, a multiplier of 3 can be applied to some tasks and a multiplier of 5 to others.
  - For example, use a higher multiplier when a task or set of tasks is short compared to the other tasks:
    Tasks under a minute in length: use a multiplier of 5
    Tasks over a minute: use a multiplier of 3
  - Historically, UEs have used a multiplier of 3 for most CIF tests

Methodology – Timeout Criterion
• Multiplier approach: process
  - Again, the goal is to arrive at a reasonable maximum time for a first-time user to complete the tasks. The task is regarded as a failure if the user exceeds the timeout criterion.
  - Have an expert complete the tasks as efficiently as possible under controlled conditions in the test environment
  - The expert is someone who is familiar with the application, typically a Product Developer or Product Manager
  - For each task, multiply the expert time by a mutually agreed-upon factor between 2 and 5 to arrive at the timeout criterion

Example
| Task | Description | Benchmark Time (hh:mm:ss) | Multiplier | Timeout Criterion (hh:mm:ss) |
|---|---|---|---|---|
| A | Define an authorized delegate | 00:00:25 | 5 | 00:02:05 |
| B | Create an Expense Report | 00:08:40 | 3 | 00:26:00 |
| C | Update and submit a saved Expense Report | 00:01:18 | 3 | 00:03:54 |

Quantitative Data Analysis
• Mean performance measures across all tasks and participants
  - Assisted Task Completion Rate
  - Unassisted Task Completion Rate
  - Mean Total Task Time
  - Mean Total Errors
  - Mean Total Assists

Mean performance measures across all tasks and participants
| Assisted Task Completion Rate (%) | Unassisted Task Completion Rate (%) | Task Time (hh:mm:ss) | Number of Errors | Number of Assists | Number of Help Accesses |
|---|---|---|---|---|---|
| 62.50 | 54.69 | 01:04:23 | 2.33 | 0.84 | 2.50 |

Quantitative Data Analysis (continued)
• Performance results by task across all participants (example table is shown in the following slide)
  - Assisted Task Completion Rate
  - Unassisted Task Completion Rate
  - Task Time
  - Errors
  - Assists
• Descriptive statistics
  - The above results are reported using the following descriptive statistics: Mean, SD, Standard error, Min and Max

Example – Results by Task across all Participants
Performance Result for Task x by participant
| Participant | Assisted Task Completion Rate (%) | Unassisted Task Completion Rate (%) | Total Task Time (h:mm:ss) | Total Errors | Assists | Help Accesses |
|---|---|---|---|---|---|---|
| 1 | 100 | 100 | 0:00:11 | 0 | 0 | 0 |
| … | | | | | | |
| 10 | 100 | 100 | 0:00:25 | 0 | 0 | 0 |
| Benchmark+ | 100 | 100 | 0:00:10 | 0 | 0 | 0 |
| Timeout Criterion++ | NA | NA | 0:00:30 | NA | NA | NA |
| Mean* | 100.00 | 100.00 | 0:00:20 | 0.00 | 0.00 | 0.00 |
| Standard Dev* | 0.00 | 0.00 | 0:00:07 | 0.00 | 0.00 | 0.00 |
| Standard Error* | 0.00 | 0.00 | 0:00:03 | 0.00 | 0.00 | 0.00 |
| Min* | 100.00 | 100.00 | 0:00:10 | 0 | 0 | 0 |
| Max* | 100.00 | 100.00 | 0:00:31 | 0 | 0 | 0 |
+ The benchmark time is for reference only. Benchmark refers to the performance of an expert user.
++ The timeout criterion is the benchmark time multiplied by a factor of 3.
* Values were calculated by aggregating across test participants only.

Quantitative Data Analysis (continued)
• Performance results by participant across all tasks (example in the following slide)
  - Assisted Task Completion Rate
  - Unassisted Task Completion Rate
  - Task Time
  - Errors
  - Assists
• Descriptive statistics
  - Mean, SD, Standard error, Min and Max

Example – Results by Participant across all Tasks
Summary performance results across all tasks by participant
| Participant | Assisted Task Completion Rate (%) | Unassisted Task Completion Rate (%) | Total Task Time (h:mm:ss) | Total Errors | Total Assists | Total Help Accesses |
|---|---|---|---|---|---|---|
| 1 | 57.14 | 64.29 | 1:09:15 | 30 | 15 | 3 |
| … | | | | | | |
| n | 42.67 | 57.90 | 0:55:07 | 22 | 9 | 12 |
| Benchmark+ | 100 | 100 | 0:20:00 | 0 | 0 | 0 |
| Timeout Criterion++ | NA | NA | 1:00:00 | NA | NA | NA |
| Mean* | 60.71 | 70.54 | 0:55:20 | 24 | 5 | 7 |
| Standard Dev* | 6.77 | 7.10 | 0:12:00 | 12.98 | 5.81 | 1.32 |
| Standard Error* | 3.56 | 3.76 | 0:04:35 | 3.21 | 1.67 | 1.01 |
| Min* | 32.87 | 45 | 0:38:55 | 12 | 7 | 3 |
| Max* | 95 | 100.00 | 1:25:56 | 32 | 17 | 12 |
+ The benchmark time is for reference only. Benchmark refers to the performance of an expert user.
++ The timeout criterion is the benchmark time multiplied by a factor of 3.
* Values were calculated by aggregating across test participants only.
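
The timeout and scoring rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' tooling: the helper names (`parse_hms`, `format_hms`, `timeout_criterion`, `completion_rates`, `describe`) and the `Attempt` record are hypothetical, and the use of the sample (n-1) standard deviation is an assumption, since the deck does not say which SD formula its example tables use. It applies the multiplier rule, the unassisted/assisted completion definitions from the Required Quantitative Metrics list, and the Mean/SD/Standard error/Min/Max summary rows.

```python
import math
import statistics
from dataclasses import dataclass


def parse_hms(text: str) -> int:
    """Convert an 'hh:mm:ss' (or 'h:mm:ss') string to whole seconds."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def format_hms(total_seconds: int) -> str:
    """Convert whole seconds back to an 'hh:mm:ss' string."""
    hours, rest = divmod(int(total_seconds), 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


def timeout_criterion(benchmark: str, multiplier: int) -> str:
    """Timeout Criterion = Benchmark Time (expert time) x multiplier (2 to 5)."""
    if not 2 <= multiplier <= 5:
        raise ValueError("multiplier should be between 2 and 5")
    return format_hms(parse_hms(benchmark) * multiplier)


@dataclass
class Attempt:
    """One participant's attempt at one task (hypothetical record layout)."""
    completed: bool  # participant reached the task goal
    seconds: int     # time on task, in seconds
    assists: int     # number of moderator assists given


def completion_rates(attempts: list[Attempt], timeout_s: int,
                     max_assists: int = 2) -> tuple[float, float]:
    """Return (unassisted %, assisted %) for one set of task attempts.

    Unassisted: completed with 0 assists and within the timeout criterion.
    Assisted: completed with at most `max_assists` assists and within the
    timeout criterion, so unassisted completions count toward both rates.
    """
    if not attempts:
        raise ValueError("no attempts to score")
    in_time = [a for a in attempts if a.completed and a.seconds <= timeout_s]
    unassisted = sum(1 for a in in_time if a.assists == 0)
    assisted = sum(1 for a in in_time if a.assists <= max_assists)
    n = len(attempts)
    return 100.0 * unassisted / n, 100.0 * assisted / n


def describe(values: list[float]) -> dict[str, float]:
    """Mean, SD (sample, n-1), standard error, min and max across participants."""
    sd = statistics.stdev(values) if len(values) > 1 else 0.0
    return {
        "mean": statistics.mean(values),
        "sd": sd,
        "se": sd / math.sqrt(len(values)),
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    # Tasks A, B and C from the example timeout table.
    for task, bench, mult in [("A", "00:00:25", 5), ("B", "00:08:40", 3), ("C", "00:01:18", 3)]:
        print(task, timeout_criterion(bench, mult))  # A 00:02:05, B 00:26:00, C 00:03:54
```

With the example benchmarks, this reproduces the timeout column of the example table. Whether SD uses n or n-1 is a choice to settle before reporting; `statistics.pstdev` gives the population version.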

Qualitative Data Analysis – SUMI
• Example for SUMI
[Figure: SUMI profile graph on a 0-100 scale showing the median (Medn) and the upper (Ucl) and lower (Lcl) confidence limits for the Global, Efficiency, Affect, Helpfulness, Control, and Learnability scales]
This graph shows medians (the middle score when the scores are arranged in numerical order) and the upper (Ucl) and lower (Lcl) confidence limits. The confidence limits represent the range within which the theoretical true score lies 95% of the time for this sample of users.
Excerpt from Oracle Enterprise Manager 10g Grid Control Release 1 (10.1.0.3), Out of the Box Experience (OOBE) in Red Hat Enterprise Linux 3.0. Tested by: Arin Bhowmick, Darcy Menard, March 2005

Qualitative Data Analysis – Oracle Universal Scales
• Example for Oracle Universal Scales: Time and Labor
[Figure: bar chart of mean ratings (scale 1.00-7.00) on the four scales easy to use, attractive, useful, and clear & understandable; the bars range from 4.13 to 4.88]
Excerpt from Oracle Time and Labor 11.5.10. Tested by: Frank Y. Guo and Sajitha Narayan, December 2004-March 2005

Qualitative Data Analysis – Table of Usability Issues and Design Recommendations
| # | Issue | Priority / # Users Affected | Design Recommendation | Status / Bug # |
|---|---|---|---|---|
| 1 | Eight users needed to be assisted to see and go to the Tracking subtab. | HIGH 8/10 | Incorporate tracking functionality into what is currently termed the Workbench. Remove the Tracking subtab. | Accepted, #37654897 |
| 2 | Four users requested that the system automatically re-price the delivery when a new order was added. | MEDIUM 4/10 | Incorporate automatic repricing. | Not Accepted. Technology does not support. |
| 3 | Two users did not remember to click the Audit and Payment button, and needed assistance. | LOW 2/10 | More design discussion is needed for the entire Audit and Payment section. | Pending Design Discussion |

Report Sections
• Executive Summary
• Product Description
• Test Objectives
• Methods
  - Participants (user profile and screener)
  - Context of product use in the test
  - Task order
  - Test facility
  - Participants' computing environment
  - Test administrator tools
  - Experimental design
  - Procedure
  - Participant general instructions
  - Participant task instructions
  - Usability metrics: Effectiveness, Efficiency, and Satisfaction

Report Sections (continued)
• Results
  - Data Analysis
    • Data Scoring
    • Data Reduction
    • Statistical Analysis
  - Presentation of Results
    • Performance Results
    • Satisfaction Results
    • SUMI Results
  - Design Issues
    • What worked
    • What did not work
  - Table of usability issues and design recommendations
• Appendices

Reporting
• Usability issues reporting
  - The body of the report (in the Results section) will include complete descriptions of the most significant issues, along with screenshots and callouts as needed to describe the issues adequately
  - A separate table (see the example Table of Usability Issues and Design Recommendations) will include ALL the issues and design recommendations, with a column for tracking the status of each recommendation going forward
• Additional screenshots/callouts (as needed) may be included after this table and referenced within the table

Follow-up
• Results presentation to the product team
  - Commonly, a “Quick Findings” presentation is given to key team members within a week of testing.
  - A complete findings presentation to all team members may follow after 1-2 weeks.
• Logging bugs for the agreed-upon usability recommendations is required
  - Ideally, a member of the product team agrees to do this before the test is run (negotiable with the team; the UE may also file bugs if desired)
  - Bug logging instructions document
• Send the final report to the product team, including director level and above
• Publish the final report to the Apps UX website and the UI lab doc drop

Appendix – Introduction to SUMI
• The SUMI questionnaire is a professionally produced, standardized tool for measuring users' perceptions of the usability of commercial software, based on their hands-on exposure to the software. SUMI output scores are standard scores that follow a normal distribution with the mean set at 50 and the standard deviation at 10. Thus, scores can range from 0 to 100, but the majority (about 68%) of SUMI scores will lie between 40 and 60. On any of the SUMI scales, products scoring below 50 are considered substandard for the software industry. For a score of 40, only 16% of software scores are worse; for a score of 60, only 16% of software scores are better.
• The SUMI questionnaire results are displayed as 6 dimensions plotted in a profile graph: global, efficiency, affect, helpfulness, control, and learnability. The global scale measures the usability of the software as a whole and is a weighted combination of the other dimensions. Efficiency measures the degree to which the software enables users to get their work done. Affect measures how likeable and enjoyable it is to interact with the software. Helpfulness measures how well the software assists users, particularly when they encounter errors. Control measures how well the software lets users make it do what they want or expect. Learnability measures how easy it is to master the software, including the ability to grasp new functions and features.
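
The percentile statements in the SUMI appendix follow directly from the normal model it describes (mean 50, SD 10). As a minimal sketch that assumes only that model (the function name `percent_scoring_lower` is hypothetical, and official SUMI scoring relies on its own published norms), Python's standard `statistics.NormalDist` converts a scale score into the expected share of software scoring lower. It also shows that about 68% of scores fall between 40 and 60.

```python
from statistics import NormalDist

# SUMI scale scores are modeled as standard scores: mean 50, SD 10.
SUMI_NORM = NormalDist(mu=50, sigma=10)


def percent_scoring_lower(score: float) -> float:
    """Percentage of software expected to score below `score` under the normal model."""
    return 100.0 * SUMI_NORM.cdf(score)


if __name__ == "__main__":
    for score in (40, 50, 60):
        print(score, round(percent_scoring_lower(score), 1))
    # 40 -> 15.9, 50 -> 50.0, 60 -> 84.1 (so only about 16% score above 60)
    share_40_to_60 = SUMI_NORM.cdf(60) - SUMI_NORM.cdf(40)
    print(round(100 * share_40_to_60, 1))  # 68.3
```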

Resources
• CIF Testing Process
• CIF Report Template (in progress)
• Sample Report 1
• Sample Report 2, including Help access (old format)
• Introductory Script
• Introductory Video Script
• When To Assist document (under review)
• Oracle Universal Scales
• SUMI (scroll down to the SUMI information section)
• CIF FAQs (in progress)