Uplift Education

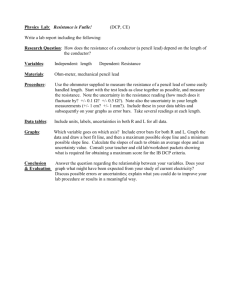

» In order to create a valid experiment, the experimenter can change only ONE variable at a time.
» The variable that is changed by the experimenter is called the INDEPENDENT variable.
» The variable that changes as a result of the independent variable is called the DEPENDENT variable.
» In physics there is no CONTROL variable; instead there are CONTROLLED variables. A controlled variable is kept the same throughout the experiment because it also affects the dependent variable being tested, and would therefore affect the outcome of the experiment.

Your knowledge and grade in physics are related to the amount of quality time you spend studying.
independent variable: ?
dependent variable: ?

» If you are measuring the amount of bacteria over time, what are the independent and dependent variables?

Dependent variable on the y-axis! Independent variable on the x-axis!

The graph shown here is OK. Why only OK? The graph shows us the overall trend (average value). In science we care about BOTH the average value and some measure of the variation (uncertainty) in the data. We use the synonymous terms uncertainty, error, or deviation to represent the variation in measured data.

Data nearly always have uncertainty. There are many causes of uncertainty (different results when doing the same measurements), including:

Instrument uncertainty
No measuring instrument is fully precise. Each instrument has an inherent amount of uncertainty in its measurement. Even the most precise measuring device cannot give the actual value, because that would require an infinitely precise instrument.

Uncontrolled variables
It is nearly impossible to fully control all variables. Uncontrolled or poorly controlled variables will cause variation in your results. For example, changes in wind speed and direction would affect the results of a projectile-launching experiment.
Sampling from a population
Sometimes you are measuring a small number of individuals from a larger population whose members differ in some trait. This is very common in biology but is NOT usually relevant to physics.

Random and systematic errors
» In ANY science, we care about the variation in the data nearly as much as about the overall outcome or trend. Why?
» Because the variation gives us some (NOT perfect) idea of how close our measurement is to the TRUE value.
» Variation also limits our ability to draw a conclusion about an overall trend or difference in our data.

For example, our measurements of the size of 100 maple leaves might be summarized by reporting a typical value and a range of variation. Such data can be shown in a plot with "error bars". For example, if 100 maple leaves were collected from three different sites (parking lots, prairie, and the woods), we can display typical values and ranges of variation. As you can see, there is a huge deviation from the mean value for the parking-lot location, so it is not really possible to distinguish between the parking-lot and prairie locations.

The uncertainty answers the question, "How well does the measurement represent the value of the quantity being measured?" In general:

Measurement = (mean value ± uncertainty) unit of measurement

[Figure: a mean value with the uncertainty marked on either side of it, along an axis of measurement values]

Uncertainty (of measurement) defines a range of values that represent the measurement result at a given confidence. Unfortunately, there is no general rule for determining the uncertainty.
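Because there is no single rule, it can help to see how much two common choices differ on the same numbers. The next paragraphs describe both: the standard deviation (used where a sample represents a population) and half the range (used in physics). The minimal Python sketch below applies each to the six lamp resistances from the worked example in section 3B. It assumes Python's standard `statistics` module; `half_range` is a hypothetical helper name, and `statistics.stdev` is the sample (n − 1) standard deviation, which is one common choice among several.

```python
from statistics import mean, stdev

# Lamp resistances in ohms, copied from the worked example in section 3B.
resistances = [609, 666, 639, 661, 654, 628]


def half_range(values):
    """Absolute uncertainty as defined in this handout: (x_max - x_min) / 2."""
    return (max(values) - min(values)) / 2


x_avg = mean(resistances)
dx_half_range = half_range(resistances)
# statistics.stdev is the SAMPLE standard deviation (divides by n - 1).
dx_stdev = stdev(resistances)

print(f"mean               = {x_avg:.1f} ohm")
print(f"range / 2          = {dx_half_range:.1f} ohm")
print(f"standard deviation = {dx_stdev:.1f} ohm")
```

On these six readings the half-range (28.5 Ω) is somewhat larger than the sample standard deviation (about 22 Ω), so range/2 is the more cautious estimate here. That will not hold for every data set: with only two readings, for example, the standard deviation is the larger of the two.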
If the STANDARD DEVIATION is taken as the uncertainty, then

Measurement = (mean value ± standard deviation)

indicates approximately a 68% confidence interval (for roughly normally distributed data). This is usually done in fields where a sample represents the whole population (biology, sociology, psychology …).

In PHYSICS and chemistry we are not sampling a population, so the uncertainty is taken as the absolute uncertainty:

$$\Delta x = \frac{\text{range}}{2}, \qquad \text{range of the measurements} = x_{max} - x_{min}$$

For example, the result (20.1 ± 0.1) cm communicates that the person making the measurement believes the value to be closest to 20.1 cm, but it could be anywhere between 20.0 cm and 20.2 cm.

A major part of any lab is evaluating the strength of your data, identifying the possible sources of error, and suggesting improvements or further lines of inquiry for the future.

DO NOT ANSWER: “I think my data are good because I worked hard and didn’t mess up. I could do better if I had more time and better equipment.”

Instead:
» Use your uncertainties to comment meaningfully on whether or not you can really draw a conclusion or find a trend.
» Think carefully about sources of error (often these are poorly controlled variables that are affecting your results, but sometimes your whole approach may be flawed).
» If your uncertainties are really large, explain why and suggest how to reduce them in the future.
» If you obtained the expected results, think of a follow-up question you could study. If the results were surprising, think of a way to figure out why you got those results.

Human error (messing up) is not a valid type of experimental error, so the term “HUMAN ERROR” should NEVER be used in a lab report! Instead, the two acceptable types of experimental error explored in physics labs are systematic and random error.

LABS ARE NOT MEANT TO BE FUN, OR EASY, OR TO BOOST YOUR GRADES. (Though I do hope you enjoy them, and I always hope you earn a good grade!)
We do labs so that you learn how to investigate a problem and how to analyze data. By 11th grade, you should know how to investigate a problem fairly well. One of our big focuses this year, then, is how to analyze data rigorously. (So use this presentation as a reference AND DO YOUR BEST WORK on the upcoming lab!)

3 A REPORTING A SINGLE MEASUREMENT – Instrument uncertainty

Generally, we report the measured value of something with a precision that does not go beyond the smallest graduation on the instrument (called the ‘least count’). We report the instrument uncertainty whenever we take a single measurement of something. If not stated otherwise by the manufacturer, these uncertainties are:
» Analog instrument (one with a scale): ½ of the smallest increment (precision)
» Digital instrument: the whole smallest increment

Uncertainties are given to 1 significant figure. For example, in L = (10.66 ± 0.05) cm the number of significant figures is 4: 3 known with certainty and one estimated.

The experimenter can decide that the uncertainty is different from the instrument uncertainty, provided some justification can be given. For example, mercury and alcohol thermometers are quite often not as accurate as their instrument uncertainty suggests. Likewise, the instrument uncertainty of a stopwatch stated by the manufacturer (usually 0.01 s) is certainly not the real uncertainty of a hand-timed measurement. That figure is ridiculous, since you could never, ever move your thumb that fast! It doesn’t take human reaction time into account. More realistic would be ± 0.3 s, if you can justify it.

Example: Distance of horizontal travel of a projectile launched at different angles.
Horizontal distance travelled (m, ± 1 cm) in five trials at each launch angle:

| Angle of launch (°, ± 5°) | Trial 1 | Trial 2 | Trial 3 | Trial 4 | Trial 5 |
|---|---|---|---|---|---|
| 20 | 6.2 | 6.6 | 6.7 | 6.2 | 6.4 |
| 40 | 9.9 | 9.6 | 9.5 | 10.1 | 9.8 |
| 60 | 8.7 | 9.1 | 8.6 | 8.3 | 8.6 |
| 80 | 3.1 | 3.5 | 3.7 | 3.2 | 3.3 |

When someone else reads this report, they will know the uncertainties used. If the uncertainty you use differs from the instrument uncertainty, justify it with a sentence like: “Although the measuring tape had an instrument uncertainty of 0.5 mm, due to the set-up of the experiment (….) the estimated uncertainty is 1 cm (reason).”

3 B REPORTING YOUR BEST ESTIMATE OF A MEASUREMENT – Absolute uncertainty

The best way to come up with a good measurement of something is to take several measurements and average them. Each individual measurement has uncertainty, but the uncertainty reported for your average value is different from the uncertainty of your instrument. The precision of the instrument is not the same as the uncertainty in the measurement (unless you take only one measurement).

Taking several measurements of something ($x$) leads to a distribution of values ($x_1, x_2, x_3, \dots$):

$$x_{avg} = \frac{\text{sum of all measurements}}{\text{number of measurements}} = \frac{\sum_{i=1}^{n} x_i}{n}$$

$$\text{range of the measurements} = \text{maximum value} - \text{minimum value} = x_{max} - x_{min}$$

$$\text{absolute uncertainty: } \Delta x = \frac{\text{range}}{2} = \frac{x_{max} - x_{min}}{2}$$

$$\text{fractional uncertainty} = \pm\frac{\Delta x}{x}, \qquad \text{percentage uncertainty} = \pm\frac{\Delta x}{x} \times 100\%$$

where $x$ is actually $x_{avg}$.

Measurement = average value ± absolute uncertainty (unit of measurement):

$$x = (x_{avg} \pm \Delta x)\ \text{units}$$

Give the uncertainty to one significant figure (rounding up if necessary), and round the average value to the same decimal place as the uncertainty.

In the IB Physics laboratory, you should take 5 measurements if you can manage it.

Example: Six students measure the resistance of a lamp. Their answers, in Ω, are: 609; 666; 639; 661; 654; 628. What should the students report as the resistance of the lamp?
Average resistance = 3857 Ω ÷ 6 ≈ 643 Ω
Range = largest − smallest resistance = 666 − 609 = 57 Ω
Absolute uncertainty = range ÷ 2 = 28.5 Ω ≈ 29 Ω, which to one significant figure is 30 Ω.
Because the uncertainty is in the tens place, the average is rounded to the tens place too, so the resistance of the lamp is reported as:
R = (640 ± 30) Ω
Fractional uncertainty ≈ ±0.05
Percentage uncertainty ≈ ±5%

This tells you immediately the maximum and minimum experimental values of a measurement.

When taking time measurements, the stated uncertainty cannot be unreasonably small: not smaller than 0.3 s, no matter what the range. For example, if the range is 0.1 s, the absolute uncertainty would be 0.05 s, which is highly unreasonable. You have to change it and explain your reasons.

When taking several measurements, it should be clear if one value has a large error. Do not be afraid to throw out any measurement that is clearly a mistake. You will never be penalized for this if you explain your rationale for doing so. In fact, if you have many measurements, it is permissible to throw out the maximum and minimum values.

Remember that the manner in which you report measured and calculated values is entirely up to you, as the experimenter. However, be realistic in your precision and be able to fully justify reported measurements and calculated values, showing all of your work in doing so.

4. DRAWING A GRAPH

In many cases, the best way to present and analyze data is to make a graph. A graph is a visual representation of two quantities that shows nicely how they are related. It is a visual display of quantitative information that allows us to recognize trends in data. Graphs also let you display uncertainties nicely.

When making graphs:
1. The independent variable is on the x-axis and the dependent variable is on the y-axis.
2. Every graph should have a title that is concise but descriptive, in the form ‘Graph of (dependent variable) vs. (independent variable)’.
3. The scales of the axes should suit the data ranges.
4. The axes should be labeled with the variable, units, and instrument uncertainties.
5. The data points should be clear.
6. Error bars should be shown correctly (drawn using a straight-edge). Error bars represent the uncertainty range.
7. Data points (average values ONLY!) should not be connected dot-to-dot. A line of best fit should be drawn instead. The best-fit line is not necessarily a straight line, and it should pass through all of the crosses formed by the error bars. Approximately the same number of data points should be above your line as below it.
8. Each point that does not fit the best-fit line should be identified. If you have an outlier, leave it as it is, discuss it, and then draw a second graph omitting the outlier and discuss it again.
9. Think about whether the origin should be included in your graph (what is the physical significance of that point?). Do not assume that the line should pass through the origin.

A nice way to show uncertainty in data is with error bars. These are bars in the x and y directions around each data point that show immediately how big or small the uncertainty is for that value.

Plotting a graph allows one to visualize all the readings at once. Ideally, all of the points should be plotted with their error bars. In principle, the size of the error bar could well be different for every single point, so each should be worked out individually. In practice, it would often take too much time to add all the correct error bars, so the following shortcut could be considered:
▪ Rather than working out error bars for each point, use the worst value and assume that all of the other error bars are the same. In the report, include an explanation such as: “Taking the highest uncertainty, we are reasonably sure that the result is ….”

You should discuss the experimental results at the end: what type of dependence you discovered, ….
For example, when graphing experimental data, you can see immediately whether you are dealing with random or systematic errors (if you can compare with theoretical or expected results).
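Returning to the default rule in section 3A for a single reading (half the smallest increment on an analog scale, the whole increment on a digital display) and the stopwatch exception, the choice can be captured in a few lines. This is a minimal Python sketch; `instrument_uncertainty` and `REACTION_TIME_ESTIMATE` are hypothetical names, and 0.3 s is the handout's suggested, justifiable estimate rather than a universal constant.

```python
# Hypothetical helper: default instrument uncertainty when the manufacturer
# states nothing else (rule from section 3A of this handout).
def instrument_uncertainty(smallest_increment, digital):
    """Analog (scale) instrument: half the smallest increment.
    Digital instrument: the whole smallest increment."""
    return smallest_increment if digital else smallest_increment / 2


# A ruler graduated in millimetres (0.1 cm) is analog: ± 0.05 cm,
# matching L = (10.66 ± 0.05) cm.
ruler = instrument_uncertainty(0.1, digital=False)

# A digital stopwatch reading to 0.01 s gives 0.01 s on paper, but that
# ignores reaction time, so keep whichever estimate is larger.
REACTION_TIME_ESTIMATE = 0.3  # seconds; the handout's suggested value
stopwatch = max(instrument_uncertainty(0.01, digital=True), REACTION_TIME_ESTIMATE)

print(f"ruler:     ± {ruler} cm")
print(f"stopwatch: ± {stopwatch} s")
```

Keeping the larger value with `max` reflects the handout's point that a stated uncertainty should never be unrealistically small; any such override still needs a written justification in the report.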
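The projectile table in section 3A can be processed the way sections 3B and 4 describe: average each row, take half the range as the absolute uncertainty, report the uncertainty to one significant figure with the average rounded to match, and use the worst (largest) uncertainty as a common error bar. This is a minimal Python sketch, assuming the table values are entered exactly as printed; `summarize` and `round_up_1sf` are hypothetical helper names, and always rounding the uncertainty up (rather than to the nearest digit) is a conservative choice, not the only convention.

```python
import math
from statistics import mean

# Horizontal distance (m) in five trials at each launch angle (degrees),
# copied from the projectile table in section 3A.
trials = {
    20: [6.2, 6.6, 6.7, 6.2, 6.4],
    40: [9.9, 9.6, 9.5, 10.1, 9.8],
    60: [8.7, 9.1, 8.6, 8.3, 8.6],
    80: [3.1, 3.5, 3.7, 3.2, 3.3],
}


def summarize(values):
    """Return (average, absolute uncertainty), with uncertainty = range / 2."""
    return mean(values), (max(values) - min(values)) / 2


def round_up_1sf(dx):
    """Round a positive uncertainty UP to one significant figure.

    Returns (rounded uncertainty, decimal places to report the value with).
    The inner round() removes float noise such as 3.0000000000000004,
    which would otherwise be rounded up to 4.
    """
    exponent = math.floor(math.log10(dx))
    digit = math.ceil(round(dx / 10.0 ** exponent, 9))
    if digit == 10:  # e.g. 0.95 rounds up to 1, one place to the left
        digit, exponent = 1, exponent + 1
    return digit * 10.0 ** exponent, max(0, -exponent)


summary = {angle: summarize(d) for angle, d in trials.items()}

for angle, (avg, dx) in summary.items():
    dx_rep, decimals = round_up_1sf(dx)
    percent = 100 * dx / avg  # percentage uncertainty, from the unrounded dx
    print(f"{angle:>2} deg: ({avg:.{decimals}f} ± {dx_rep:.{decimals}f}) m,"
          f" about {percent:.0f}%")

# Section 4 shortcut: use the worst (largest) uncertainty as the error bar
# for every point instead of working each one out individually.
worst = max(dx for _, dx in summary.values())
bar, bar_decimals = round_up_1sf(worst)
print(f"common error bar: ± {bar:.{bar_decimals}f} m")
```

Note that the spread between trials (a few tenths of a metre) is much larger than the ± 1 cm reading uncertainty in the table header, which is exactly the situation section 3B describes: the uncertainty of an average is not the instrument uncertainty.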