AskMike -- summary

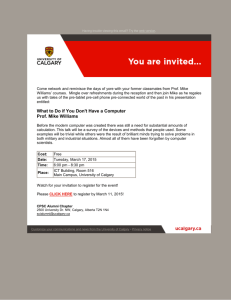
