ABSTRACT - Department of Math & Computer Science

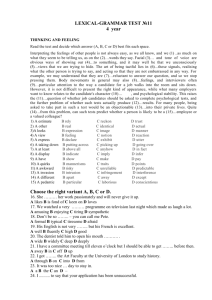

Intelligent Web Topics Search Using Early Detection and Data Analysis

Ching-Cheng Lee, Yixin Yang
Mathematics and Computer Science, California State University at Hayward
Hayward, California 94542

Abstract

Topic-specific search engines that offer users relevant topics as search results have recently been developed. However, these topic-specific search engines require intensive human effort to build and maintain. In addition, they visit many irrelevant pages. In our project, we propose a new approach for Web topic search. First, we ………...Example Format for Abstract…Here.….. THIS IS USED FOR EXAMPLE rance information such as appearance times and places for candidate topics. With these two techniques, we can reduce the number of crawls needed for candidate topics and the computing cost. Analysis of the results and comparisons with related research will be made to demonstrate the effectiveness of our approach.

1. Background

Search engines are widely used for information access on the World Wide Web. Today's conventional search engines are designed to crawl and index the web and to produce giant hyperlink databases. These search engines may return hundreds of links or more in response to a user's query, provided that the right keyword is used in the query. Despite this search power, these search engines lack the capability to find the relevant Web sites for a given specific topic. It is important to add capabilities to search engines that provide topic-related search.

Various topic-related search systems have recently been proposed. One approach is the topic-specific search engine MathSearch. This search engine offers higher quality search results, but it requires intensive human effort [12]. J. Cho, H. Garcia-Molina and L. Page proposed an efficient crawling scheme based on Uniform Resource Locator (URL) ordering [7]. Their scheme guides the web crawler to rank web pages on both content and link measures.
However, their system requires scanning the entire text of each web page and does not account for topic relevance. Another approach is the Focused Crawler [2], which utilizes both web link structure information and content similarity (based on document classification). The main drawback of this system is that it visits too many irrelevant pages and requires additional seed pages in order to add new topics.

Most recently, J. Yi and N. Sundaresan [6] proposed an effective web-mining algorithm called topic expansion to discover relevant topics during the search. This scheme avoids visiting unnecessary Web pages and scanning the whole text of a web page, and it does not need intensive human effort. The system mines the relevant topics as follows. First, it collects a large number of Web pages. Then it extracts words from the text contained inside HTML document tags. From the extracted words, the system selects those that are potentially relevant to the target topic. Finally, the system uses a formula and a relationship-based architecture for finding the relation between words to refine and return the relevant topics.

Although the topic expansion scheme is far better than other schemes, it has some drawbacks: it still needs much human involvement and multiple iterations of Web site crawling. The number of crawls depends on the number of given candidate topics. Additionally, if a new incoming word does not appear in the relationship-based architecture, it will not easily be detected even if it is highly relevant to the target topic.

In this project, we propose a new scheme that uses early detection and data analysis techniques for detecting and analyzing candidate topics. With these techniques, we can reduce the number of crawls for candidate topics and the computing cost, making the system more efficient.

2. The Research

2.1. System Architecture

Our system consists of the following six components: a web crawler, a page parser, a stop word filter, a candidate topic selector, a candidate topic filter, and a relevant term database, as illustrated in Figure 1.

Figure 1. System Architecture (components shown: Web pages, Web crawler, HTML parser, Stop word filter, Candidate topic selector, Candidate topic filter, Relevant term database)

The core parts of the system are the candidate topic selector and the candidate topic filter. The candidate topic selector performs early detection as words are extracted. The candidate topic filter analyzes the occurrence counts and places of candidate topics to eliminate false candidate topics and find brand new Internet words. By applying the early detection and data analysis methods in these two components, our system can reduce the number of crawls for candidate topics and the computing cost, thus making the system more efficient. In the following, we describe each of the components in more detail.

2.2. System Components

The system is implemented as follows:

Web Crawler: The Web Crawler crawls the World Wide Web, retrieving and processing a large number of web pages. Our web crawler is fast, memory-limited and highly flexible. In addition, it has two features not found in most web crawlers:

(1) It detects file types. If a hyperlink references big ZIP files, application files, or audio and video files, the web crawler detects this and skips these large files.

(2) It can avoid visiting irrelevant web pages. If our web crawler detects that a web page is not relevant to the target topic, it avoids retrieving most of the URLs in that web page.

Therefore, in our implementation, the web crawler is more efficient, and more robust in that it won't choke on unreadable file formats.
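The file-type check in feature (1) can be illustrated with a short sketch. This is a Python illustration under stated assumptions, not the authors' Java code: the list of skipped MIME prefixes and the function names `should_skip` and `should_skip_url` are hypothetical, since the paper names the file categories but not an exact list.

```python
import mimetypes
from typing import Optional

# Hypothetical skip list: the paper mentions ZIP, application, audio,
# video and image files, but does not enumerate exact MIME types.
SKIPPED_PREFIXES = ("application/", "audio/", "video/", "image/")

# XHTML is served under "application/" but is still a readable page.
ALLOWED_TYPES = ("application/xhtml+xml",)


def should_skip(content_type: Optional[str]) -> bool:
    """Return True when a resource of this content type should be skipped."""
    if content_type is None:
        return False  # unknown type: let the crawler try it
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";")[0].strip().lower()
    if media_type in ALLOWED_TYPES:
        return False
    return media_type.startswith(SKIPPED_PREFIXES)


def should_skip_url(url: str) -> bool:
    """Guess the type from the URL's file extension, with no network access."""
    guessed_type, _encoding = mimetypes.guess_type(url)
    return should_skip(guessed_type)
```

A real crawler would more likely apply `should_skip` to the Content-Type header returned by the server; the extension-based guess is only a cheap pre-check before any request is made.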
To determine file types, our system uses methods in the java.net package provided by the Java 2 Platform SE 1.3 to determine the type of the remote object. If the remote object is of a type such as ZIP files, application files, image files, etc., our web crawler skips these unreadable or too-large files. In this way, we get well-formatted HTML pages. Our web crawler then passes the web page to the page parser (other layers such as the stop words filter and candidate topic selector are invoked from then on). The candidate topic selector performs part of the early detection: if the system detects that a web page is obviously irrelevant to the target topic, our web crawler avoids retrieving most of the URLs in that web page, which makes our system more efficient.

HTML Parser: The HTML Parser parses the downloaded web pages and extracts metadata as text. In this project, we use anchor text as the metadata, because anchor text occurs most frequently in an HTML document and is the most reliable of the four kinds of hyperlink metadata [6]. The page parser is implemented as follows: when it receives a well-formed web document from the crawler, it parses the document for elements with names corresponding to the hyperlink elements (A for anchor tags, IMG for image tags, etc.) and extracts the attribute values.

Stop Words Filter: The Stop Words Filter gets the words from the HTML parser and filters out all common words and words that are obviously irrelevant to the target topic. Common words are words that are too popular or too generic, such as "welcome", "best", "a", "that", "is", "in", etc. The stop words filter can be target-specific and needs minimal human interference in the process of this topic-mining scheme. After this step, words are passed on to the candidate topic selector. All stop words are stored in a database table for easy access and easy update.
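The parsing and stop word steps described here can be sketched in a few lines. This is a minimal Python illustration, not the authors' Java implementation: the class `AnchorTextParser`, the function `candidate_words`, and the in-memory `STOP_WORDS` set (standing in for the stop words database table) are all hypothetical names, and only anchor text is handled.

```python
import re
from html.parser import HTMLParser

# Stand-in for the stop words table; these entries are the examples from the text.
STOP_WORDS = frozenset({"welcome", "best", "a", "that", "is", "in"})


class AnchorTextParser(HTMLParser):
    """Collects the text found between <a ...> and </a> tags."""

    def __init__(self):
        super().__init__()
        self._depth = 0          # greater than 0 while inside an anchor
        self._buffer = []        # text pieces of the anchor being read
        self.anchor_texts = []   # one string per completed anchor

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == "a" and self._depth > 0:
            self._depth -= 1
            if self._depth == 0:
                # Join the pieces and normalize whitespace.
                text = " ".join("".join(self._buffer).split())
                if text:
                    self.anchor_texts.append(text)
                self._buffer = []

    def handle_data(self, data):
        if self._depth > 0:
            self._buffer.append(data)


def candidate_words(html, stop_words=STOP_WORDS):
    """Return one list of non-stop words per anchor text in the page."""
    parser = AnchorTextParser()
    parser.feed(html)
    parser.close()
    result = []
    for text in parser.anchor_texts:
        words = [w for w in re.findall(r"[a-z0-9]+", text.lower())
                 if w not in stop_words]
        result.append(words)
    return result
```

Keeping the words grouped per anchor, rather than flattening them, preserves the information a later step needs to decide which words appeared together with the target topic.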
Users may update the stop words database in the middle of a mining iteration, and a set of common words can be reused for different target topics. The stop words filter checks every term; if a term is in the stop words database, it is disqualified as a candidate topic.

Candidate Topic Selector: The Candidate Topic Selector selects candidate topics with respect to the specified target topic. It performs early detection of candidate topics: every term that co-occurs with the target topic can be considered a candidate topic. The candidate topic selector is implemented as follows. It receives words from the stop words filter. For every word, it computes and updates the number of times the word occurred and the number of times the word co-occurred with the target topic. Every word (excluding the stop words) is kept in the database as a record; the records are used for the relevance computations in subsequent components. The same candidate selection is applied to all anchor text metadata in a page, and the system recursively performs the same procedure for all the crawled URLs. When a user chooses to stop selecting candidate topics, or the number of URLs crawled reaches a user-specified count, the candidate topic selector stops. Only the words that frequently co-occur with the target topic are finally deemed candidate topics. Users can also define a minimum co-occurrence count as a criterion for candidate topics: if a word's co-occurrence count is less than this minimum, the word cannot be considered a candidate topic.

Candidate Topic Filter: The Candidate Topic Filter is activated at the end of mining. It retrieves candidate topics from the database, and then uses a simplified yet powerful formula to compute the relevance of each candidate topic and decide whether to keep the candidate topic as a relevant word or not. By analyzing the candidate topics' occurrence information, relevant words can be detected. After this step, the detected relevant terms are stored in the relevant term database described below, for future use as a knowledge base for search engines. In our system, for each candidate topic, we use a simple but adequate formula to filter them:

$$r = \frac{co}{to} \times \frac{co}{MAXco} \geq c$$

In this formula, co is the number of times a candidate topic co-occurred with the target topic, to is the total number of occurrences of the candidate topic, MAXco is the maximum value of co among all candidate topics, r is the filtering metric, and c is the user-defined threshold.

Relevant Term Database: The Relevant Term Database contains the relevant topic data for the user-specified target topic. A database is created with three tables: candidate_topics, relevant_terms and stop_words. The stop_words table stores words that are too popular or obviously irrelevant to the target topic; the candidate_topics table stores words that come from extracted metadata and are potentially related to the target topic; the relevant_terms table holds the final results.

3. Experimentation

3.1. The Experiments

Our EXPERIMENT HERE FOR EXAMPLE -DOS Prompt application from any windows system such as Windows XP, Windows ME etc. JDK 1.4.1 and a Microsoft Access database are used as our development tools. To connect to the database, an ODBC data source must be registered with the system through the ODBC data sources. When the program starts, a Java GUI window pops up as in Figure 2. The user needs to enter a seed URL and the target topic, and then the program starts to run. The user may use any URL as the seed URL, but for best results, the user can apply some domain knowledge when choosing the seed URL.

Figure 2. The Java GUI Window

Our system has the following features, besides the simplified filtering algorithm:

- It detects the file types and handles them accordingly.
For example, if a hyperlink references ZIP files, application files, or audio or video files, the system detects this and skips these large files.
- It detects irrelevant pages and thus avoids visiting them. If our system detects that a web page is irrelevant to the target topic, that page's chance of getting visited is much lower than that of other seemingly relevant pages, which helps our system stay in the right mining direction.

Most URL or web page errors are handled gracefully by the system, such as a URL referring to an empty or removed page, or a server or client being down. This feature makes our system more robust.

We use the following criteria to evaluate the algorithm:

(1) The correctness of the algorithm should be evaluated by considering the number of false inclusions (irrelevant terms falsely considered relevant). False inclusions should be minimized.

(2) The quality of the algorithm should be evaluated by considering the number of relevant terms found versus the number of web pages crawled, while also taking into account the number of false inclusions.

3.2. Results and Comparisons

Table 1 is a summary of the mining results for the target topic "XML". By applying our scheme to the hyperlink metadata of EXAMPLR HERE ages, our system produced sets of relevant topics with good quality. In Table 1, "Actual relevant topics" means the relevant topics identified among the candidate topics by people (not by our application); this field is used to check our results. "Relevant topics by c=0.055" is the number of relevant topics detected by our application when run with c=0.055. False inclusion refers to irrelevant terms that are included in the set of relevant topics by our application. False exclusion refers to relevant topics that are not included in the set of relevant topics by the algorithm.
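To make the counting, filtering and evaluation steps concrete, here is a minimal Python sketch (not the authors' Java and database implementation). It rests on several assumptions that the text does not confirm: that the filtering metric has the form r = (co/to) × (co/MAXco), that a candidate is kept when r ≥ c, and that "co-occurrence" means appearing in the same anchor text as the target topic. All function names are hypothetical.

```python
from collections import Counter


def count_occurrences(anchor_word_lists, target):
    """Return (to, co): total and co-occurrence counts for every word.

    Assumption: a word co-occurs with the target when both appear
    in the same anchor text.
    """
    to, co = Counter(), Counter()
    for words in anchor_word_lists:
        has_target = target in words
        for word in words:
            if word == target:
                continue
            to[word] += 1
            if has_target:
                co[word] += 1
    return to, co


def relevant_terms(to, co, c):
    """Keep words whose metric r = (co/to) * (co/MAXco) reaches c (assumed form)."""
    max_co = max(co.values(), default=0)
    if max_co == 0:
        return set()  # nothing ever co-occurred with the target
    return {w for w in co if (co[w] / to[w]) * (co[w] / max_co) >= c}


def evaluate(found, actual):
    """Count false inclusions and false exclusions against a human-labeled set."""
    return {
        "false_inclusion": len(found - actual),
        "false_exclusion": len(actual - found),
    }
```

With this form of the metric, a word scores high only if it both appears mostly alongside the target (co/to near 1) and does so often relative to the strongest candidate (co/MAXco near 1), so raising c trades false inclusions for false exclusions.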
As seen from the results in Table 1, our mining scheme showed low false inclusion: for instance, our system produced 0 false inclusions in 51 relevant topics in the 4th iteration. An iteration is one run of the application followed by an update of the stop word database. In terms of the number of relevant terms vs. the number of web pages crawled, our system exhibits very good results and is more efficient than the topic expansion system, judging by that system's published experimental results. That system used 4 iterations with a total of 34,000 web pages crawled to get 49 relevant topics out of 54 actual relevant topics; our system crawled only 17,000 web pages to obtain 51 relevant topics out of 57 actual relevant topics in 4 iterations.

| | 1st Iteration | 2nd Iteration | 3rd Iteration | 4th Iteration |
|---|---|---|---|---|
| # of pages crawled | EXAMPLE HERE | EXAMPLE HERE | ……… | 7000 |
| Candidate topics | 141 | 167 | RESULT HERE | RESULT HERE |
| Actual relevant topics | 25 | 36 | 45 | 57 |
| Relevant topics by c=0.055 | EXAMPLE HERE | RESULT HERE | 46 | 51 |
| False inclusion (c=0.055) | RESULT HERE | RESULT HERE | RESULT HERE | 0 |
| False exclusion (c=0.055) | 9 | RESULT HERE | 5 | 6 |

Table 1. Experiment for candidate topic "XML"

Another improvement of our system is that users do not have to create a relationship-based architecture. Most other similar systems need a relationship-based hierarchy. In the topic expansion system, users have to expand the topic hierarchy after each iteration to help the next iteration get better results. In our system, after each iteration, users may choose to add more stop words (possibly target-specific) to the stop words table, but they do not have to understand the relationships between words very well.

One more thing to realize is that, in reality, there is no ultimate way of eliminating false exclusion (relevant terms in certain crawled pages falsely considered irrelevant by the algorithm).
Other research claimed to minimize false exclusion from candidate topics to final relevant topics. What they did was reduce the candidate topic set. However, the downside of their approach is the exclusion of many potentially relevant terms from the candidate topic set, so the completeness of the candidate set is greatly compromised. In our project, on the other hand, false exclusion is handled empirically by the filtering function, while maintaining the completeness of the candidate set.

4. Conclusions

Contrary to commercial search engines, which output as many results as possible, including irrelevant garbage pages, the philosophy of topic relevance search is to ensure the correctness of the relevance found while trying to achieve completeness. Since a huge number of web pages need to be crawled when mining for relevance, efficiency becomes a very important issue. In this project, we developed a new approach for relevant topic search and demonstrated that our system can efficiently mine topic-specific relevant words with a much smaller number of crawls. We used an early detection method to select candidate topics from the anchor text metadata of HTML documents. Our system analyzed the occurrence counts and locations of candidate topics in the filtering step, so that false candidate topics could be successfully eliminated and words relevant to the user-specified target could be found.

5. References

[1] B. Huberman, P. Pirolli, J. Pitkow, and R. Lukose. Strong regularities in world wide web surfing. Science, 280:95-97, Apr. 1998.

[2] S. Chakrabarti, M. van den Berg, and B. Dom. Focused crawling: A new approach to topic-specific web resource discovery. In Proc. of the 8th International World Wide Web Conference, Toronto, Canada, May 1999.

[3] A. Sugiura and O. Etzioni. Query routing for web search engines: Architecture and experiments. In Proc. of the 9th International World Wide Web Conference, Amsterdam, The Netherlands, May 2000.

[4] A. McCallum, K. Nigam, J. Rennie, and K. Seymore. Building domain-specific search engines with machine learning techniques. In AAAI Spring Symposium, 1999.

[5] S. Lawrence and L. Giles. Accessibility and distribution of information on the web. Nature, 400:107-109, July 1999.

[6] Jeonghee Yi and N. Sundaresan. Metadata Based Web Mining for Relevance. In IEEE 2000 International Database Engineering and Applications Symposium (IDEAS'00), Yokohama, Japan, September 18-20, 2000.

[7] J. Cho, H. Garcia-Molina, and L. Page. Efficient crawling through URL ordering. In Proc. of the 7th International World Wide Web Conference, Brisbane, Australia, Apr. 1998.

[8] K. Bharat and M. Henzinger. Improved algorithms for topic distillation in hyperlinked environments. In Proc. of the 21st International ACM SIGIR Conference, Melbourne, Australia, 1998.

[9] J. Kleinberg. Authoritative sources in a hyperlinked environment. In Proc. of the 9th ACM-SIAM Symposium on Discrete Algorithms.

[10] H. Chen, Y. Chung, M. Ramsey, and C. Yang. A smart itsy bitsy spider for the web. Journal of the American Society for Information Science, 49(7):604-618, 1998.

[11] S. Chakrabarti, B. Dom, P. Raghavan, S. Rajagopalan, D. Gibson, and J. Kleinberg. Automatic resource compilation by analyzing hyperlink structure and associated text. In Proc. of the 7th International World Wide Web Conference, Brisbane, Australia, Apr. 1998.

[12] http://www.maths.usyd.edu.au/MathSearch.html