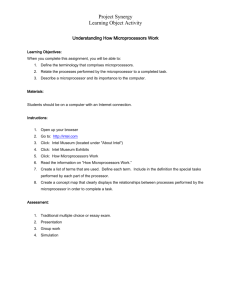

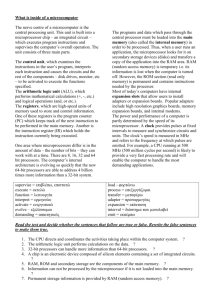

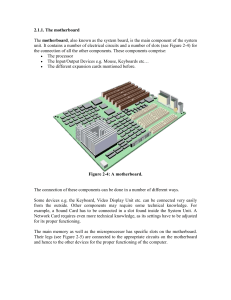

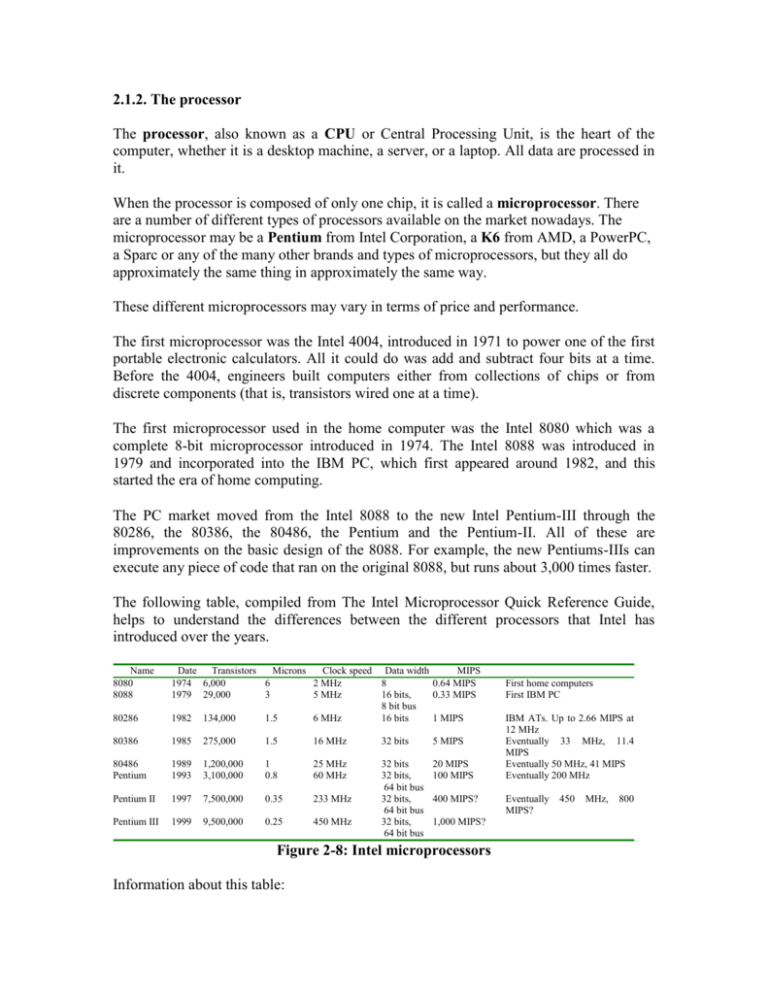

2.1.2. The processor

The processor, also known as the CPU (Central Processing Unit), is the heart of the computer, whether it is a desktop machine, a server, or a laptop. All data are processed in it. When the processor consists of a single chip, it is called a microprocessor. Many types of microprocessor are available on the market: a Pentium from Intel Corporation, a K6 from AMD, a PowerPC, a SPARC, or any of the many other brands and types. They vary in price and performance, but they all do approximately the same thing in approximately the same way.

The first microprocessor was the Intel 4004, introduced in 1971 to power one of the first portable electronic calculators. All it could do was add and subtract, four bits at a time. Before the 4004, engineers built computers either from collections of chips or from discrete components (that is, transistors wired one at a time).

The first microprocessor used in a home computer was the Intel 8080, a complete 8-bit microprocessor introduced in 1974. The Intel 8088 was introduced in 1979 and incorporated into the IBM PC, which first appeared in 1981, and this started the era of home computing. The PC market then moved from the Intel 8088 through the 80286, the 80386, the 80486, the Pentium and the Pentium II to the new Pentium III. All of these are improvements on the basic design of the 8088. For example, a Pentium III can execute any piece of code that ran on the original 8088, but runs it about 3,000 times faster.

The following table, compiled from The Intel Microprocessor Quick Reference Guide, summarises the differences between the processors that Intel has introduced over the years.
| Name | Date | Transistors | Microns | Clock speed | Data width | MIPS | Notes |
|---|---|---|---|---|---|---|---|
| 8080 | 1974 | 6,000 | 6 | 2 MHz | 8 bits | 0.64 | First home computers |
| 8088 | 1979 | 29,000 | 3 | 5 MHz | 16 bits, 8-bit bus | 0.33 | First IBM PC |
| 80286 | 1982 | 134,000 | 1.5 | 6 MHz | 16 bits | 1 | IBM ATs. Up to 2.66 MIPS at 12 MHz |
| 80386 | 1985 | 275,000 | 1.5 | 16 MHz | 32 bits | 5 | Eventually 33 MHz, 11.4 MIPS |
| 80486 | 1989 | 1,200,000 | 1 | 25 MHz | 32 bits | 20 | Eventually 50 MHz, 41 MIPS |
| Pentium | 1993 | 3,100,000 | 0.8 | 60 MHz | 32 bits, 64-bit bus | 100 | Eventually 200 MHz |
| Pentium II | 1997 | 7,500,000 | 0.35 | 233 MHz | 32 bits, 64-bit bus | 400? | Eventually 450 MHz, 800 MIPS? |
| Pentium III | 1999 | 9,500,000 | 0.25 | 450 MHz | 32 bits, 64-bit bus | 1,000? | |

Figure 2-8: Intel microprocessors

Information about this table:

- Date is the year in which the processor was first introduced. Many processors are re-introduced at higher clock speeds for many years after the original release date.
- Transistors is the number of transistors on the chip. The number of transistors on a single chip has risen steadily over the years.
- Microns is the width, in microns, of the smallest wire on the chip. For comparison, a human hair is about 100 microns thick. As the feature size on the chip goes down, the number of transistors rises.
- Clock speed is the maximum rate at which the chip can be clocked.
- Data width is the width of the ALU, that is, the number of bits it can operate on at once. An entry such as "16 bits, 8-bit bus" means that the ALU is 16 bits wide while the external data bus carries 8 bits at a time.
- MIPS stands for Millions of Instructions Per Second and is a rough measure of the performance of a CPU. Modern CPUs can do so many different things that MIPS ratings have lost much of their meaning, but this column still gives a general sense of the relative power of the CPUs.

2.1.2.1. The Chip

A chip is also called an integrated circuit. Generally it is a small, thin piece of silicon onto which the transistors have been etched. A chip might be as large as an inch on a side and can contain as many as 10 million transistors. Simple processors might consist of a few thousand transistors etched onto a chip just a few millimetres square.
Figure 2-9: The Pentium II chip

2.1.2.2. Inside a Microprocessor

The main components of the microprocessor are the Arithmetic and Logic Unit (ALU) and the Control Unit (CU). The CU directs electronic signals between the memory and the ALU, as well as between the CPU and I/O devices. The ALU performs arithmetic and comparison operations.

A microprocessor executes machine instructions that tell it what to do. Based on these instructions, a microprocessor does three basic things:

- Using its ALU, it can perform mathematical operations such as addition, subtraction, multiplication and division.
- It can move data from one memory location to another.
- It can make decisions and jump to a new set of instructions based on those decisions.

All the sophisticated things a microprocessor does can be decomposed into these three basic activities.

2.1.2.3. Microprocessor Instructions

Even a simple microprocessor has quite a large set of instructions that it can perform. The instructions are implemented as bit patterns, known as opcodes, each of which has a different meaning when loaded into the instruction register. Since humans are not good at remembering bit patterns, a set of short words, or mnemonics, is defined to represent the different bit patterns. This collection of words is called the assembly language of the processor. An assembler translates the words into their bit patterns very easily, and the output of the assembler is then placed in memory for the microprocessor to execute.

The instruction decoder turns each opcode into a set of signals that drive the different components inside the microprocessor. Consider the ADD instruction as an example:

- During the first clock cycle, the instruction is loaded.
- During the second clock cycle, the ADD instruction is decoded.
- During the third clock cycle, the program counter is incremented and the instruction is executed.

This is known as the Fetch-Execute cycle of the microprocessor.
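The assembler and the Fetch-Execute cycle described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the four mnemonics, their 4-bit opcodes, the single accumulator register and the fixed cost of three clock cycles per instruction are all hypothetical and do not correspond to any real Intel instruction set.

```python
# Hypothetical 8-bit instruction format: high 4 bits = opcode, low 4 bits = operand.
# The mnemonics and bit patterns below are invented for illustration only.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(source):
    """Translate mnemonics into their bit patterns, one instruction per line."""
    program = []
    for line in source.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        operand = int(parts[1]) if len(parts) > 1 else 0
        program.append((OPCODES[parts[0]] << 4) | operand)
    return program


def run(program, memory):
    """Execute the program; return (accumulator, clock cycles used)."""
    pc = 0       # program counter
    acc = 0      # accumulator register
    cycles = 0
    while True:
        instruction = program[pc]          # cycle 1: fetch the instruction
        opcode = instruction >> 4          # cycle 2: decode opcode and operand
        operand = instruction & 0x0F
        pc += 1                            # cycle 3: increment PC and execute
        cycles += 3                        # assumed fixed cost per instruction
        if opcode == OPCODES["LOAD"]:
            acc = memory[operand]
        elif opcode == OPCODES["ADD"]:
            acc += memory[operand]
        elif opcode == OPCODES["STORE"]:
            memory[operand] = acc
        elif opcode == OPCODES["HALT"]:
            return acc, cycles
        else:
            raise ValueError(f"unknown opcode {opcode:04b}")


memory = [2, 3, 0]
program = assemble("""
    LOAD 0
    ADD 1
    STORE 2
    HALT
""")
result, cycles = run(program, memory)
print(result, memory, cycles)
```

With the sample program, the value at memory address 0 is loaded, the value at address 1 is added to it, and the sum is stored at address 2. A real processor spends a different number of cycles on different instructions; the fixed count here only mirrors the three-step ADD example.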
Every instruction can be broken down into a sequence of operations like those of the ADD example. Some instructions take 2 or 3 clock cycles, while others take 5 or 6.

2.1.2.4. Trends in Microprocessor Design

The number of transistors available has a huge effect on the performance of a processor. More transistors allow a technique called pipelining. In a pipelined architecture, instruction execution overlaps: even though each instruction may take 5 clock cycles to execute, there can be 5 instructions in various stages of execution simultaneously, so that, in effect, one instruction completes every clock cycle.

Many modern processors have multiple instruction decoders, each with its own pipeline. This allows multiple instruction streams, which means that more than one instruction can be completed during each clock cycle. This technique is quite complex to implement, so it takes a lot of transistors.

The trend in processor design has been toward full 32-bit ALUs with fast built-in floating-point units and pipelined execution with multiple instruction streams. There has also been a tendency toward special instructions (such as the MMX instructions, designed for multimedia purposes) that make certain operations particularly efficient, and toward hardware virtual memory support and L1 caching on the processor chip. All of these trends push up the transistor count, leading to the multi-million-transistor microprocessors available today, which can execute about one billion instructions per second.

2.1.2.5. Data representation

Inside the computer, data are represented using the binary system. This system, or base, consists of only two digits: 0 and 1. In the computer these digits are represented by the states of electrical circuits: a low state stands for 0 and a high state for 1. One binary digit is known as a bit, and 8 bits make a byte. Any character can be represented by a combination of binary digits.
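As a small illustration of how a character maps to a combination of binary digits, the sketch below prints the 8-bit pattern of each character of a string and converts the patterns back. It assumes the ASCII code as the character encoding; the function names `to_bits` and `from_bits` are made up for this example.

```python
def to_bits(text):
    """Return the 8-bit binary pattern of each character (ASCII assumed)."""
    return [format(byte, "08b") for byte in text.encode("ascii")]


def from_bits(patterns):
    """Rebuild the text from a list of 8-bit binary patterns."""
    return bytes(int(p, 2) for p in patterns).decode("ascii")


patterns = to_bits("Hi")
print(patterns)             # ['01001000', '01101001']
print(from_bits(patterns))  # Hi
```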
A byte can represent up to 256 (2^8) different characters.

Memory capacity is expressed in the following units:

- The Kilobyte (KB) is 2^10 bytes (1,024 bytes), or roughly 1,000 bytes. The cache of a computer is typically expressed in KB.
- The Megabyte (MB) is 2^20 bytes (1,048,576 bytes), or roughly 1 million bytes. The RAM of a computer is typically expressed in MB nowadays.
- The Gigabyte (GB) is 2^30 bytes, or roughly 1 billion bytes. It is used to express hard disk capacity.

These examples only give an idea of the capacity of the different storage media in a computer; the size of any storage device can be expressed in any of these units.

There are a number of different binary coding systems:

- ASCII: The American Standard Code for Information Interchange is the main system used in microcomputers.
- EBCDIC: The Extended Binary Coded Decimal Interchange Code was developed by IBM and is used in large computers.
- Unicode: This was originally designed as a sixteen-bit code, able to represent up to 2^16 (65,536) different characters. It can support languages such as Chinese and Japanese, which have very large character sets.

The electrical signal corresponding to a character is generated when the corresponding key is pressed on the keyboard. Since there can be noise on the lines from the keyboard to the processor, the signal can be corrupted. A ninth bit, the parity bit, is therefore added before transmission to detect errors. There are two types of parity bit:

- Even parity bit: The bit is chosen so that the total number of 1s in the signal is even. A single-bit error is detected at reception, and in that case the signal has to be sent again.
- Odd parity bit: The bit is chosen so that the total number of 1s in the signal is odd.
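The parity scheme can be sketched in a few lines. This is an illustrative example, not a description of any particular keyboard interface: the function names are hypothetical, and placing the parity bit in the lowest position of the 9-bit word is an arbitrary assumption.

```python
def parity_bit(byte, even=True):
    """Return the parity bit that gives the 9-bit word an even (or odd) count of 1s."""
    ones = bin(byte).count("1")
    bit = ones % 2              # 1 only if the byte has an odd number of 1s
    return bit if even else 1 - bit


def add_parity(byte, even=True):
    """Append the parity bit as a ninth bit (assumed here to be the lowest bit)."""
    return (byte << 1) | parity_bit(byte, even)


def check_parity(word, even=True):
    """Return True if the received 9-bit word has the expected parity."""
    ones = bin(word).count("1")
    return (ones % 2 == 0) if even else (ones % 2 == 1)


word = add_parity(0b01000001)          # the letter 'A' with an even parity bit
print(check_parity(word))              # True: received intact
print(check_parity(word ^ 0b000000100))  # False: one bit flipped by noise
print(check_parity(word ^ 0b000000110))  # True: two flipped bits go unnoticed
```

The last line shows the limit of the scheme: a single parity bit detects an odd number of flipped bits, but an even number of errors leaves the parity unchanged and passes the check.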