Lecture 6 - Bangladesh University of Engineering and Technology

advertisement

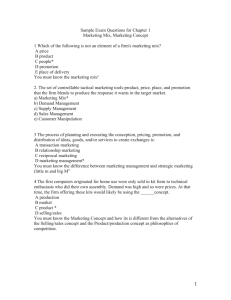

Institute of Information and Communication Technology (IICT) Bangladesh University of Engineering and Technology (BUET) Course: ICT-6010, Title: Software Engineering and Application Development Class handouts, Lecture-6 Software metrics and complexity measures 6.0 SOFTWARE MEASUREMENT Measurements in the physical world can be categorized in two ways: direct measures (e.g., the length of a bolt) and indirect measures (e.g., the 'quality' of bolts produced, measured by counting rejects).Software metrics can be categorized similarly. Direct measures of the software engineering process include cost and effort applied. Direct measures of the product include lines of code (LOC) produced, execution speed, memory size, and defects reported over some set period of time. Indirect measures of the product include functionality, quality, complexity, efficiency, reliability, maintainability, and: many other ‘attributes’. The cost and effort required to build software, the number of lines of code produced, and other direct measures are relatively easy to collect, as long as specific conventions for measurement are established in advance. However, the quality and functionality of software or its efficiency or maintainability are more difficult to assess and can be measured only indirectly. The software metrics domain can be partitioned into process, project, and product metrics. It is also noted that product metrics that are private to an individual are often combined to develop project metrics that are public to a software team. Project metrics are then consolidated to create process metrics that are public to the software organization as a whole. But how does all organization combine metrics that come from different individuals or projects. To illustrate, we consider a simple example. Individuals on two different project teams record and categorize all errors that they find during the software process, Individual measures are then combined to develop team measures. Team A found 342 errors during the software process prior to release. Team B found 184 errors. All other things being equal, which team is more effective in uncovering errors throughout the process? Because we do not know the size or complexity of the projects, we cannot answer this question. However, if the measures are normalized, it is possible to create software metrics that enable comparison to broader organizational averages. 6.1 PRODUCT AND PROCESS METRICS Internal and external characteristics may be of two different natures: either product or process. A product measure is a "snapshot" at a particular point in time. It can be a snapshot of the early design or of the code today, about which we can then derive some code metrics. It cannot therefore tell us anything about the movement from the first snapshot to the second. This occurs over a period of time. Trying to understand the process by taking only one (or possibly at best a small number) of instantaneous assessments is like trying to understand the life history of a frog only looking at the state of the system each winter. Variables such as staffing levels or effort, both as a function of time, may be used directly or may be included in prognostic models to forecast other characteristics. Process contains a time rate of change and requires a process model to fully understand what is happening. 1 Typically, as with quality assurance (QA) in manufacturing, instantaneous inspection can give only a limited insight. Not until process-based management techniques, such as total quality management (TQM), replace QA can a system be said to be fully controlled. Manufacturing insights have some applicability to software development, although the major difference between a highly repeatable and repeated mass manufacturing industry churning out identical widgets over many years and a software industry developing software that is never identical, even though there may be strong similarities between each accounting system, leads some authors to question the extent to which manufacturing statistical control (which underlies TQM, for instance) can be of use to software development Other process metrics include recording fault metrics (totals and fault densities) and change metrics (to record the number and nature of design and code changes). It is often argued that the complexity of a piece of code may be used to give a forecast of likely maintenance costs (e.g., Harrison et al., 1982), although such a connection is conceptually tenuous. Often extreme values of several metrics can more reasonably be used (Kitchenham and Linkman, 1990) to draw the developer's attention to a potential problem area. The software project manager has a variety of uses for software metrics, both for product and process (e.g., Weller, 1994). Responsibilities of a project manager range from applying (largely product metrics) to monitoring, controlling, innovating, planning, and predicting (Ince, 1990). Metrics are aimed at providing some type of quantitative information to aid in the decision-making process (e.g., Stark and Durst, 1994). They are thus "circumstantial evidence" that could be used in a decision support system (DSS), for example. The nature of software development precludes the discovery of "global verities." Second, we need to note that the decision-making process is, ideally, undertaken initially early in the project time frame. This provides a tension, since most metrics are most easily collected toward the end of the project. This leads to the attempt either to use measures of product (detailed design or code) as surrogates for process estimates or even estimates of these product measures for use in early life-cycle time and cost estimates. To estimate effort (process metric), a connection has traditionally been made to the overall "size" (product metric) of the system being developed, by means of an organization-specific value of team productivity. The use of size from the many product metrics available as input to the process model equation should not suggest that size is the "best" product measure but, rather, that it is one of the most obvious and easiest to measure, although unfortunately only available toward the end of the life cycle. Consequently, this has feed back to a focus in product metrics on estimating or measuring size, viewing size as in some way influenced by program modularity and structure (Figure in the next page). 2 However, a more thorough analysis of the product metric domain suggests that "complexity" and not size may be more relevant to modem software systems. Here complexity can be loosely defined as that characteristic of software that requires effort (resources) to design, understanding, or code. Such a loose definition is probably the best that can one or over, generic term complexity Fenton stresses the uselessness of this term, which has no agreed empirical or intuitive meaning and can therefore never be used as the basis or a measure. 6.1.1 PROCESS METRICS AND SOFTWARE PROCESS IMPROVEMENT The only rational way to improve any process is to measure specific attributes of the process, develop a set of meaningful metrics based on these attributes and then use the metrics to provide indicators that will lead to a strategy for improvement. But before we discuss software metrics and their impact on software process improvement, it is important to rote that process is only one of a number of "controllable factors in improving software quality and organizational performance [PAU94)." Referring to Figure in the next page, process sits at the center or a triangle connecting three factors that have a profound influence on software quality and organizational performance. The skill and motivation of people has been shown (BOE81] to be the single most influential factor in quality and performance. The complexity of the product can have a substantial impact on quality and team performance. The technology (i.e., the software engineering methods) that populates the process also has an impact. In addition, the process triangle exists within a circle of environmental conditions that include the development environment (e.g., CASE, tools), business conditions (e.g., deadlines, business rules), and customer characteristics (e.g., ease of communication). 3 We measure the efficacy of a software process indirectly .That is, we derive a set of metrics based on the outcomes that can be derived from the process. Outcomes include measures of errors uncovered before release of the software defects delivered to and reported by end-users, work products delivered (productivity), human effort expended, calendar time expanded, schedule conformance, and other measures. We also derive process metrics by measuring the characteristics of specific software engineering tasks, For example, we might measure the effort and time spent performing the umbrella activities and the generic software engineering activities. Grady (GRA921 argues that there are "private and public" uses for different types of process data. Because it is natural that individual software engineers might be sensitive tothe use of metrics collected on an Individual basis, these data should be private to the individual and serve as an indicator for the individual only. Examples of private metrics include defect rates (by individual), defect rates (by module), and errors found during development. Some process metrics are private to the software project team but public to all team members. Examples include defects reported for major software functions (that have been developed by a number of practitioners), errors found during formal technical reviews, and lines of code or function points per module and function. These data are reviewed by the team to uncover indicators that can improve team performance. Public metrics generally assimilate information that originally was private to individuals and teams. Project level defect rates (absolutely not attributed 10 an individual), effort, calendar times, and related data are collected and evaluated in an attempt to uncover indicators that can improve organizational process performance. Software process metrics can provide significant benefit as an organization works to improve its overall level of process maturity. However, like a metrics, these can be misused, creating more problems than they solve. Grady [GRA92} suggests a "software metrics etiquette" that is appropriate for both managers and practitioners as they institute a process metrics program: Use common sense and organizational sensitivity when interpreting metrics data. Provide regular feedback to the individuals and teams who collect measures and metrics. Don't use metrics to appraise individuals. 4 Work with practitioners and teams to set clear goals and metrics that will be used to achieve them. Never use metrics to threaten individuals or teams. Metrics data that indicate a problem area should not be considered "negative." These data are merely an indicator for process improvement. Don't obsess on a single metric to the exclusion of other important metrics. As an organization becomes more comfortable with the collection and use of process metrics. 6.2 COMPLEXITY The Macquarie Dictionary defines the common meaning of complexity as "the state or quality of being complex”, that is, "composed of interconnected parts" or "characterized by an involved combination of parts ...an obsessing notion." This definition is intuitively appealing. It recognizes that complexity is a feature of an object being studied, but that the level of perceived complexity is dependent upon the person studying it. For example, a person unfamiliar with an object may find it far more difficult to understand than someone who has studied the object many times before. However, the definition has two deficiencies as a technical definition upon which to base research. The first is that it ignores the dependency of complexity on the task being performed. As Ross Ashby (quoted in Klir. 1985) noted, ...although all would agree that the brain is complex and a bicycle simple, one has also to remember that to a butcher the brain of a sheep is simple while a bicycle, if studied exhaustively. … .may present a very great quantity of significant detail. The second problem with the definition is that it does not allow complexity to be operationalized as a measurable variable. The factors described as determining complexity are very general and therefore lack the specificity required for measurement It was probably with these problems in mind that Basili (1980) formulated his definition of "software complexity" as a measure of resources expended by a system [human or other] while interacting with a piece of software to perform a given task. Although this definition addresses the two deficiencies in the dictionary definition, it may be too broad. As the dictionary definition indicates, complexity is usually regarded as arising out of some characteristic of the code, on which the task or function is being performed and therefore does not include characteristics of the programmer that affect resource use independently of the code. A suitable compromise may be the definition proposed by Curtis (1979) and used throughout this book as an operational definition. He claimed that software complexity is a characteristic of the software interface which influences the resources another system will expend while interacting with the software. This definition is broad enough to include characteristics of the software that interact with characteristics of the system carrying out the task, in such a way as to increase the level of resource use. However, it is not so broad as to include arbitrary characteristics of the programmer that affect his or her ability generally. Software complexity research began in the 1970s around the same time as the push for structured programming was beginning. Both were result of an increasingly large number of poor experiences among practitioners with large systems, and as a consequence, the need for quality control for software becomes very strong. This quality control took two forms. First, it involved the development of new standard for programming, and, second, it required the development of 5 measure monitor the "complexity" of code being produced so as to provide benchmarks and to permit poor-quality sections to be modified or rewritten. The best known of the early approaches to complexity measurement were those focusing on structural complexity, such as McCabe (1976) and Halstead (1977). Despite criticisms of these metrics (e.g., Coulter, 1983; Shen et al, 1983; Evangelist, 1984; Nejmeh, 1988; Shepperd, 1988), they are probably still the best known and most widely used-sometimes, as Shepperd (1988) notes, beyond their limits of validity. At present there is no unified approach to software complexity research. Curtis (1979) examining the field lamented that "Rather than unite behind a common banner, we have attacked in all directions." He made a number of observations: There was an overabundance of metrics. Metricians defined complexity only after developing their metric. More time was spent developing predictors of complexity, where in fact more needed to be spent on developing criteria, such as maintainability, understandability, and operationalizing these criteria. For every positive validation there was a negative one. Zuse (1990)) sums up the situation as one in which "software complexity measurement is confusing and not satisfying to the user." More recently a number of authors have been reinforcing the notion that complexity is a higherlevel concept, involving people. Zuse (1990) states that “the term software complexity is a misnomer. The true meaning of the term software complexity is the difficulty to maintain, change and understand software. It deals with the psychological complexity of the programs” The definition of Sullivan (1975) that "Complexity denotes the degree of mental effort required for comprehension." 6.2.1 CLASSIFICATION OF COMPLEXITY When we consider the complexity an external attribute or characteristic of software, then it has three ‘flavor’: Computational, psychological and representational. The most important of these is psychological, which encompasses programmer characteristics, structural complexity and problem complexity. Programmer characteristics are hard to measure objectively and little work has been done to date on measure of problem complexity. 6 Problem complexity relates strongly to design and coding. Also functional complexity, this reflects the difficulties implicit in the problem space and is thus sometimes referred to as ‘difficulty’. The only measures are ordinal and subjective. It is often argued that problem complexity can not be controlled. The design and code complexity measures do not provide an absolute rating; rather they should be evaluated as relative to the problem complexity. 7 For example, a problem with a complexity of 1 unit, which gives rise to d design of complexity of 100 units, leads us to believe that this bad design. However, if a design of 100 units’ complexity models a problem of complexity 100 units, then we would perceive this as a good design. In addition, there are likely to be confounding factors derivable from the influence of programmer characteristics. The appropriate measures are therefore the ratios: 8 The aim would be to minimize the ratios Dp Cp, and CD where both of these variables have a lower bound of unity. While this is an important goal, operationalizing these measures awaits an objective measure for problem complexity and agreed definitions on composite measures of complexity for the total system at each stage of the software development life cycle. Despite these arguments, the software engineering literature is focused primarily on developing and validating structural complexity metrics (i.e., measures of internal characteristics Recently, many have realized that the influence of the human programmer and analyst is of crucial importance (Curtis, 1979; Davis, 1984; Kearney et al., 1986; Cant et al., 1992). A combination of this, the static (product) complexity and its relation to the problem complexity contribute to the overall psychological complexity. Mathematically, we thus identify three components to complexity human cognitive factors, H; problem complexity, P; and objective measures, which includes size, S, data structure, D, logical structure, L, internal cohesion, Ch, semantic cohesion, Cs' coupling, C. Complexity, X, is some (complicated) function of such objective measures and taking human cognitive factors into consideration, namely, X =X (H, P, S, D, L, Ch, Cs, C) Furthern1ore, the functional form of the above equation is likely to be different at different lifecycle stages. Thus, we should write Xi=Xi (H, P, S, D, L, Ch, Cs, C) ( i=l to j; ) Where there is j life-cycle phases identified in the chosen model. 9 6.2.2 COGNITIVE COMPLEXITY Cant et al. (1992, 1995) have proposed a theoretically based approach to complexity metrics by analyzing cognitive models for programmer comprehension of code and the coding process (including debugging and maintenance). This cognitive complexity model (CCM) can be described qualitatively in terms of a "landscape" model and is encapsulated quantitatively by a set of equations. The underlying rationale for the CCM is the recognition that programmers, in problem solving, use the two techniques of chunking and tracing concurrently and synergistically (e.g., Miller, 1956). In chunking, the software engineer divides up the code men- tally into logically related chunks, e.g., an iterative loop or decision block; in tracing, other related chunks are located. Having found that code, programmers will once again chunk in order to comprehend it. Conversely, when programmers are primarily tracing, they will need to chunk in order to understand the effect of the identified code fragments. For example, they may need to analyze an assignment statement in order to determine its effect, or they may need to analyze a control structure to understand how it interacts with the assignment statements contained in it, to create certain effects. If one includes the qualification that complexity is dependent on the task being performed, then the definition covers all the suggested ingredients of complexity. Therefore, the following definition will be adopted. The cognitive complexity of software refers to those characteristics of software that affect the level of resources used by a person performing a given task on it. We examine the complexity of software only in respect of tasks performed by programmers that involve analyzing code. The tasks for which this is appropriate are manifold, including maintaining, extending, and understanding code. Characteristics relevant to cognitive complexity is shown figure below: 6.2.3 COGNITIVE ARCHITECTURE OF THE MIND To understand the cognitive complexity of software fully, the comprehension process and the limits on the capacity of the human mind must first be understood. The mind is usually modeled by dividing it into activated (short-term) and long-term memory. The characteristics of short-term memory are that distraction causes forgetting of recently learned material after about 20-30 seconds (Tracz, 1979, Sutcliffe, 1989). Other simultaneous inputs or similar inputs impair recall; while 10 recall is improved by presenting both a word and a picture together (Sutcliffe, 1989). The limits on the language-based subcomponent of short- term memory are a relatively fast access time, a rapid loss of information unless refreshed (Sutcliffe, 1989), and a capacity of 7±2 chunks (Miller, 1956), although this capacity is reduced while performing difficult tasks (Kintsch, 1977). The rate of memory decay can be slowed down by mental rehearsal of the information, as we all know personally. Furthermore, the effective capacity of short-term memory is expanded by chunking, which involves abstracting recognized qualities from the information and storing the abstraction (in terms of a single symbol) instead of the complete information. Long-term memory has "virtually unlimited" capacity, and memory loss appears to be largely a problem of retrieval (Tracz, 1979, p. 26). However, the factors that determine recall ability appear to be similar to those for short-term memory. Thus, pictures presented together with associated text tend to be remembered better than text alone. Furthermore, memory recall is retarded by extraneous "noise" but is enhanced by structure, particularly when the structure or its components already exist in long-term memory (Tracz, 1979; Sutcliffe 1989, pp. 28-33). The structure of knowledge in long-term memory and the process of chunking involved in comprehension (a subtask of debugging and extending) are intimately related. 6.2.4 THE COGNITIVE PROCESSES IN COMPREHENSION Debate continues about the exact mental processes involved in debugging, ex- tending, or simply understanding a section of code. The generally held view is that programmers chunk (Miller, 1956; Shneiderman and Mayer, 1979; Badre, 1982; Davis, 1984; Ehrlich and Soloway, 1984), which involves recognizing sections of code and recording the presence of that section in short-term memory by means of a single reference or memory symbol. Chunking involves recognizing groups of statements (not necessarily sequential) and extracting from them information that is remembered as a single mental abstraction. These chunks are then further chunked together into larger chunks and so on, forming a multileveled, aggregated structure over several layers of abstraction (somewhat akin to a layered object model). However, there is also evidence that programmers perform a type of tracing. This involves scanning quickly through a program, either forward or backward, in order to identify relevant chunks. (For example, Weiser, 1981, and, later, Bieman and Ott, 1994, examined "slicing," which includes tracing backward to ascertain the determinants of a set of variables at a point in a program.) Tracing may be required to allow knowledge to be acquired about certain variables or functions that are scattered throughout a program. These two processes, of chunking and tracing, both have implications for software complexity. Furthermore, both processes are involved in the three programmer tasks of understanding, modifying, and debugging. Shneiderman and Mayer (1979) suggest that the chunking process involves utilizing two types of knowledge: semantic and syntactic. Semantic knowledge includes generic programming concepts from structures as basic as loops to more complex concepts such as sorting algorithms. This semantic knowledge is stored in a way which is relatively independent of specific programming languages. Furthermore, the information is systematically organized in a multileveled structure so that related concepts are aggregated into a single concept at a higher level of abstraction. Syntactic knowledge is specific to particular languages and allows semantic structures, implemented in those languages, to be recognized. By chunking, syntactic knowledge is used to convert the code into semantic knowledge, which is then stored as a multileveled representation of the program in memory. In a critique of this model, Gilmore and Green (1984) suggest that recognition of semantic constructs may, to some extent, be dependent upon syntactic aspects of its presentation. 11 Ehrlich and Soloway (1984) propose a more comprehensive model of chunking based on "control flow plans" and "variable plans"-a model adopted in this chapter. Control plans describe control flow structures, while variable plans rely on a range of features common to variables with particular roles. Examples of such roles include counter variables and variables in which user input is stored. 6.2.5 STRUCTURAL COMPLEXITY Structural complexity is one of three major components of psychological complexity (the others being programmer characteristics and problem complexity). Structural complexity is that portion of overall (psychological) complexity that can be represented and thus assessed objectively. It thus relates to the structure of the document, whether that document is the design document or the code itself. Structural complexity is thus measured by structural complexity metrics that offer assessment of a variety of internal attributes (also called internal characteristics). Structural metrics can be divided into intramodule (or just module) metrics and intermodule metrics (Kitchenham and Walker, 1986; Kitchenham and Linkman, 1990). Modularity metrics are focused at the individual module level (subprogram. class) and may be measures for: The internals of the module (procedural complexity) such as size, data structure, or logic structure (control flow complexity) or The external specification of the module (semantic complexity)-typical module cohesion as viewed externally to the module. Semantic complexity metrics evaluate whether an individual class really is an abstract data type in the sense of being complete and also coherent; that is, to be semantically cohesive, a class should contain everything that one would expect a class with those responsibilities to possess but no more. Intermodule metrics measure the structure of the interconnections between modules which comprise the system and thus characterize the system-level complexity. An Object Oriented (OO) system this gives some assessment of how loosely or how strongly individual classes are coupled together in the final system. Since such coupling is "designed into" the system, coupling metrics can be collected at the "design" stage. Procedural complexity metrics are perhaps best understood, although often assumed (inaccurately as we saw earlier) to give immediate information about external characteristics-for instance, Kolewe (1993) suggests that the inevitable consequence of unnecessary complexity is defective software. Maintaining a clear focus on what such procedural complexity metrics really measure (basically procedural complexity: an internal characteristic) is vital. That is not to say that they can- not be used to forecast other characteristics (internal or external), but forecasting is not the same scientific technique as measuring, and we must retain that difference clearly in mind as we use OO (and other) metrics. 6.2.6 TRADITIONAL PRODUCT METRICS FOR STRUCTURAL COMPLEXITY We stress that complexity, including structural complexity, is a concept that can be regarded as an external characteristic. This is thus part of the empirical relation system for which we then require a numerical relation system to form the substantial basis for a ‘metric’ or measure. Here, we shall discuss the different aspect of structural complexity. The terms complexity (a concept) and complexity metric (the measure) are not interchangeable, although we are sorry to say that this equivalence is used in much of the software metrics literature. We discuss, at a low level, the various possible measures without regard to whether they are prognostic of any external characteristics. Card and Glass (1990) argue that the overall sys- tem 12 complexity is a combination of intramodule complexity and intermodule complexity. They suggest that the system complexity, Cs, is given by Cs = CI + C M ……………(i) Where, CI is the intermodule complexity, and CM the intramodule complexity. Traditionally, intermodule complexity is equated to coupling in the form of subroutine CALLs or message passing and intramodule complexity to data complexity, which represents the number of I/O variables entering or leaving a module. Card and Glass (1990) suggest that the complexity components represented in equation (i) approximate Stevens et al.'s (1974) original concepts of "module complexity and cohesion.” Particularly for object-oriented systems, as can be seen in Figure below, there is a third element, included as part of CM by Card and Glass (1990)--- semantic cohesion-which is another form of module complexity. 13 If we specifically exclude cohesion from the measure of intramodule complexity, CM then we can modify equation (i) explicitly to take into account semantic cohesion, Ch Noting that increasing cohesion leads to a lower system complexity, we propose that Cs = CI + CM + (Chmax –Ch) ………….. (ii) Although this depends strongly on the scale used for Ch . (Chmax is the maximum cohesion possible for the module.) Thus we discriminate between: The objective (countable) measures such as number of I/O variables and number of control flow connections and The semantic measures needed to assess the cohesiveness of, module via the interface it presents to the rest of the system. Card and Glass's (1990) calculation of the metric for intramodule complexity (their data complexity) suggests that a module with excessive I/O is one with low cohesion. While this is grossly acceptable, it does not translate well into the object paradigm where cohesion is high, yet interconnections, via messages and services can also be high. Indeed, it could be argued that in object-oriented systems, there is minimal data connectivity such that this notation of data complexity at the intermodule level is virtually redundant. Finally, Card and Glass (1990) propose that a better comparative measure is the relative (per module) system complexity (Cs = Cs / n, where n is the number c modules in the system). This normalization allows us to compare systems of contrasting sizes. Since it gives a mean module value, it offers potential applicability t object-oriented systems as an average class metric. 6.2.6 INTERMODULE METRICS (FOR SYSTEM DESIGN COMPLEXITY) An easy measure of intermodule coupling is the fan-in/fan-out metric (Henry and Kafuca, 1981). Fan-in (strictly "informational fan-in") refers to the number of locations from which control is passed into the module (e.g., CALLs to the module being studied) plus the number of (global) data, and conversely, fan-out measures the number of other modules required plus the number of data structures that are updated by the module being studied. Since these couplings are determined in the analysis and design phases, such intermodule metrics are generally applicable across the life cycle. While there are many measures incorporating both fan-in and fan-out Card and Glass (1990) present evidence that only fan-outland its distribution between modules contribute significantly to systems design complexity. Thus, they propose In contrast, Henry and Kafura (1981) propose a family of information flow metrics: 1. fan-in x fan-out 2. (fan-in x fan-out)2 3. Ss x (fan-in x fan-out)2 14 Where, Ss is the module size in lines of code. These metrics of Henry and Kafura's have been widely utilized and were thoroughly tested by their developers with data from the UNIXTM operating system. Page-Jones (1992) recommends "eliminate any unnecessary connascence and then minimizing connascence across encapsulation boundaries by maximizing con- nascence within encapsulation boundaries." This is good advice for minimizing module complexity since it acknowledges that some complexity is necessary; it focuses on self-contained, semantically cohesive modules; and it reflects the need for low fan-out 6.3 MODULE METRICS (FOR PROCEDURAL COMPLEXITY) Of the five types of module metrics shown in the previous figure, size and logic structure ac- count for the majority of the literature on metrics: perhaps because of their perceived relationship to cost estimation and to the difficulties of maintenance, respectively and perhaps to their ease of collection. However, it should be reiterated that these metrics can, in general, be obtained only from code and are inappropriate for the analysis and design (i.e., the specification phase). Size metrics, notably, lines of code (LOC) or source lines of code (SLOC), and function points (FPs), and their variants will be discussed here. 6.3.1 SIZE METRICS: LINES OF CODE The use of source lines of code as a measure of software size is one of the oldest methods of measuring software. It was first used to measure size of programs written in fixed-format assembly languages. The use of SLOC as a metric began to be problematical when free-format assembly languages were introduced (Jones, 1986) since more than one logical statement could be writ- ten per line. The problems became greater with the advent of high-level languages that describe key abstractions in the problem domain (Booch, 1991) removing some accidental complexity (Brooks, 1975). Furthermore, lines of code can be interpreted differently for different programming languages. Jones (1986) lists 11 different counting methods, 6 at the program level and 5 at the project level. With the introduction of fourth-generation languages, still more difficulties were experienced in counting source lines (Verner and Tate, 1988,Verner, 1991). Size oriented software metrics are derived by normalizing quality and/or productivity measures by considering the size of the software that has been produced. If a software organization maintains simple records; a table of size-oriented measures. such as the one shown in Figure in the next page can be created. The table lists each software development project that has been completed over the past few years and corresponding measures for that project. Referring to the table entry (Figure) for project alpha: 12,100 lines of code were developed with 24 person-months of effort at a cost of $168,000. It should be noted that the effort and cost recorded in the table represent all software engineering activities (analysis, design, code, and test), not just coding further information for project alpha indicates that 365 pages of documentation were developed, 134 errors were recorded before the software was released, and 29 defects were encountered after release to the customer within the first year of operation. Three people worked on the development of software for project alpha. 15 In order to develop metrics that can be assimilated with similar metrics room other projects, we choose lines or code as our normalization value. From the rudimentary data contained in the table, a set or simple size-oriented metrics can be developed for each project: Errors per KLOC (thousand lines or code). Defects per KLOC. $ per LOC. Page or documentation per KLOC. In addition, other interesting metrics can be computed: Errors per person-month. LOC per person-month. $ per page or documentation. Size-oriented metrics are not universally accepted as the best way to measure the process or software development. Most or the controversy swirls around the use of lines or code as a key measure. Proponents or the LOC measure claim that LOC is an "artifact" or all software development projects that can be easily counted, that many existing software estimation models use LOC or KLOC as a key input, and that a large body or literature and data predicated on LOC already exists. On the other hand, opponents argue that LOC measures are programming language dependent, that they penalize well-designed but shorter programs, that they cannot easily accommodate nonprocedural languages, and that their use in estimation requires a level of detail that may be difficult to achieve (i.e., the planner must estimate the LOC to be produced long before analysis and design have been completed). 6.3.2 FUNCTION-ORIENTED METRICS: FUNCTION POINT Function-oriented software metrics use a measure of the functionality delivered by the application as a normalization value. Since 'functionality' cannot be measured directly, it must be derived indirectly using other direct measures. Function-oriented metrics were first proposed by Albrecht 16 [AL879J, who suggested a measure called the function point. Function points are derived using an empirical relationship based on countable (direct) measures of software's information domain and assessments of software complexity. Function point counting (Albrecht, 1979; Albrecht and Gaffney, 1983) is a technology-independent method of estimating system size without the use of lines of code. Function points have been found useful in estimating system size early in the development life cycle and are used for measuring productivity trends (Jones, 1986) from a description of users' specifications and requirements. They are probably the sole representative of size metrics not restricted to code. However, Keyes (1992), quoting Rubin, argues that they are unlikely to be useful to modern-day graphical user interface (GUI) developments since they cannot include concerns of color and motion. Albrecht argued that function points could be easily understood and evaluated even by nontechnical users. The concept has been extended more recently by Symons (1988) as the Mark II FP counting approach-a metric that the British government uses as the standard software productivity metric (Dreger, 1989). In FP application, system size is based on the total amount of information that is processed together with a complexity factor that influences the size of the final product. It is based on the following weighted items: Number of external inputs Number of external outputs Number of external inquiries Number of internal master files Number of external interfaces Function points are computed (IFP94) by completing the table shown in Figure below. Five information domain characteristics are determined and counts are provided in the appropriate table location. Once these data have been collected, a complexity value is associated with each count. Organizations that use function point methods develop criteria for determining whether a particular entry is simple, average, or complex. Nonetheless, the determination of complexity is somewhat subjective. To compute function points (FP) the following relationship is used: 17 FP = count total x [0.65 + 0.01 x (Fj)] ……………………. (ii) Where, count total is the sum of all FP entries obtained from Figure above The Fj ( j= 1 to 14) are complexity adjustment values based on responses to the following questions [ART85]: 1. Does the system require reliable backup and recovery? 2. Are data communications required? 3. Are there distributed processing functions? 4. Is performance critical? 5. While system run in an existing heavily utilized operational environment? 6. Does the system require on-line data entry'? 7. Does the on-line data entry require the input transaction to be built over multiple screens or operations? 8. Are the master files updated on-line? 9. Are the inputs outputs files or inquiries complex? 10. Is the internal processing complex? 11. Is the code designed to be reusable? I2. Are conversion and installation included in the design? 13. Is the system designed for multiple installations in different organizations? 14. Is the application designed lo facilitates change and ease of use by the user? Each of these questions is answered using a scale that ranges from 0 (not important or applicable) to 5 (absolutely essential). The constant values in Equation (ii) and the weighting factors that are applied to information domain counts are determined empirically. Once function points have been calculated, they are used in a manner analogous to LOC as a way to normalize measures for software productivity, quality, and. other attributes: Errors per FP. Defects per FP $ per FP. Pages of documentation per FP FP per person-month. Example The function point metric can be used effectively as a means for predicting the size of a system that will be derived from the analysis model. To illustrate the size of the FP metric in this context, we consider a simple analysis model representation, illustrated in Figure in the next page. Referring to the figure, a data flow diagram for a function within the SafeHome software is represented. The function manages user interaction, accepting a user password to activate or deactivate the, system, and allows inquiries on the status of security zones and various security sensors. The function displays a series of prompting messages and sends appropriate control signals to various components of the security system. 18 The data flow diagram is evaluated to determine the key measures required for computation of the function point metric: number of user inputs number of user outputs number of user inquiries number of files number of external interfaces Three user inputs---- password, panic button, and activate/deactivate---are shown in the .figure along with two inquires---zone inquiry and sensor inquiry. One file (system configuration file) is shown. Two user outputs (messages and sensor status) and four external interfaces (test sensor, zone setting, activate/deactivate, and a1arm alert) are also present. These data, along with the appropriate complexity, are shown in Figure below. The count tolal shown in Figure above must be adjusted using Equation (ii) FP = count total x [0.65.. 0.01 x (Fj)] 19 Where count total is the sum of all FP entries obtained from Figure SafeHome DFD above and Fj (j = 1 to 14) are "complexity adjustment values." For the purposes of this example, we assume that (Fj) is 46 (a moderately complex product). Therefore, FP = 50 x [0.65 + (0.01 x 46)) = 56 Based on the projected FP value derived from the analysis model, the project team can estimate the overall implemented size of the SafeHome user interaction function. Assume that past data indicates that one FP translates into 60 lines of code (an object-oriented language is to be used) and that 12 FPs are produced for each person-month of effort. These historical data provide the project manager with important planning information that is based on the analysis model rather than preliminary estimates. Assume further that past projects have found an average of three errors per function point during analysis and design reviews and four errors per function point during unit and integration testing. These data can help software engineers assess the completeness of their review and test activities. Advantages of function points Advantages of function points as seen by the users of this method are: Function point counts are independent of the technology (operating system, language and database), developer productivity and methodology used. Function points are simple to understand and can be used as a basis for discussion of the application system scope and requirements with the users. Function points are easy to count and require very little effort and practice Function points can be used to compare productivity of different groups and technologies. Function point counting method uses information gathered during requirements definition. 6.4 RECONCILING DIFFERENT METRICS APPROACHES The relationship between lines of code and function points depend upon the programming language that is used to implement the software and the quality of the design. A number of studies have attempted to relate FP and LOC measures. The following table provides rough estimates of the average number of lines of code required to build one function point in various programming languages: A review of these data indicates that one Lac of C++ provides approximately 1.6 times the "functionality" (on average) as one LOC of FORTRAN. Furthermore, one of a Visual Basic provides more than three times the functionality of a LOC for a conventional programming language. More detailed data on the relationship between FP and LOC are presented in and can be used to "backfire' (i.e., to compute the number of function points when the number of delivered Lac are known) existing programs to determine the FP measure for each. 20 LOC and FP measures are often used to derive productivity metrics. This invariably leads to a debate about the use of such data. Should the LOC/person-month (or FP/person-month) of one group be compared to similar data from another? Should managers appraise the performance of individuals by using these metrics? The answers to these questions is an emphatic “No”!. The reason for this response is that many factors influence productivity, making for “apples and oranges” comparisons that can be easily misinterpreted. Function points and LOC based metrics have been found to be relatively accurate predictors of software development effort and cost. However, in order to use LOC and FP for estimation, a historical baseline of information must be established. 6.5 METRICS FOR SOFTWARE QUALITY The overriding goal or software engineering is to produce a high-quality system, application. or product. To achieve the goal, software engineers must apply effective methods coupled with modern tools within the context or a mature software process. In addition, good software engineer (and good software engineering managers) must measure if high quality is to be realized. The quality of a system, application, or product is only as good as the requirements that describe the problem, the design that models the solution, the code that leads to an executable program, and the tests that exercise the software to uncover errors. A good software engineer uses measurement to assess the quality or the analysis and design models, the source code, and the test cases that have been created as the software is engineered. TO accomplish this real-time quality assessment, the engineer must use technical measures to evaluate quality in objective, rather than subjective ways. The project manager must also evaluate quality as the project progresses. Private metrics collected by individual software engineers are assimilated to provide project-level results. Although many quality measures can be collected, the primary thrust at the project level is to measure errors and defects. Metrics derived from these measures provide an indication of the effectiveness of individual and group software quality assurance and control activities. Metrics such as work product (e.g., requirements or design) errors per function point, errors uncovered per review hour, and errors uncovered per testing hour provide insight into the efficacy of each or the activities implied by the metric. Error data can also be used to compute the deject removal efficiency (DRE) for each process frame work activity. 6.5.1 MEASURING QUALITY Although there are many measures of software quality, correctness, maintainability, integrity, and usability provide useful indicators for the project team. Gilb [GIL88] suggests definitions and measures for each. Correctness: A program must operate correctly or it provides little value to its users. Correctness is the degree to which the software performs its required function; the most common measure for correctness is defects per KLOC, where a defect is defined as a verified lack of conformance to requirements. When considering the overall quality of a software product, defects are those problems reported by a user of the program alter the program has been released for general use. For quality assessment purposes, defects are counted over a standard period of time, typically one year. Maintainability: Software maintenance accounts for more effort than any other software engineering activity. Maintainability is the ease with which a program can be corrected if an error is encountered, adapted if its environment changes, or enhanced if the customer desires a change in requirements. There is no way lo measure maintainability directly; therefore, we must use indirect measures. A simple time-oriented metric is mean-time-to-change (MTTC), the time it takes to 21 analyze the change request, design an appropriate modification, implement the change, test it, and distribute the change to all users. On average, programs that are maintainable will have a lower MTTC (for equivalent types of changes) than programs that are not maintainable. Integrity: Software integrity has become increasingly important in the age of hackers and firewalls. This attribute measures a system's ability to withstand attacks (both accidental and intentional) to its security. Attacks can be made on all three components of software: programs, data, and documents. To measure integrity, two additional attributes must be defined: threat and security. Threat is the Probability (which can be estimated or derived from empirical evidence) that an attack of a specific type will occur within a given time. Security is the probability (which can be estimated or derived from empirical evidence) that the attack of a specific type will be repelled. The integrity of ( a system can then be defined as Integrity = summation [(1 -threat) x (1 -security)] Where, threat and security are summed over each type of attack. Usability: The catch phrase 'user-friendliness' has become ubiquitous in discussions or software products. If a program is not user-friendly, it is often doomed to failure, even if the functions that it performs are valuable. Usability is an attempt to quantify user-friendliness and can be measured in terms of four characteristics: The physical and or intellectual skill required to learn the system, The time required lo become moderately efficient in the use of the system, The net increase in productivity (over the approach that the system replaces) measured when the system is used by someone who is moderately efficient, and A subjective assessment (sometimes obtained through a questionnaire) of users attitudes toward the system.. The (our factors just described are only a sampling or those that have been proposed as measures (or software quality. 6.5.2 DEFECT REMOVAL EFFICIENCY A quality metric that provides benefit at both the project and process level is defect removal efficiency (DRE). In essence, DRE is a measure of tile filtering ability of quality assurance and control activities as they are applied throughout all process frame- work activities. When considered for a project as a whole, DRE is defined in the following manner: DRE = E/(E + D) Where, E is the number of errors found before delivery of the software to the end-user and D is the number of defects found after delivery. The ideal value for DRE is 1. That is no defects are found in the software. Realistically; D will be greater than 0, but the value of DRE can still approach 1. As E increases (for a given value of D), the overall value of DRE begins to approach 1. In fact, as E increases, it is likely that the final value of D will decrease (errors are filtered out before they become defects). If used as a metric that provides an indicator of the filtering ability of quality control and assurance activities, DRE 22 encourages a software project team to institute techniques for finding as many errors as possible before delivery. DRE can also be used within the project to assess a team's ability to find errors before they are passed to the next framework activity or software engineering task. For example, the requirements analysis task produces an analysis model that can be reviewed to find and correct errors. Those errors that are not found during the review of the analysis model are passed on to the design task (where they mayor may not be found). When used in this context we redefine DRE as DREi = Ei/(Ei+Ei+1) Where, Ei is the number of errors found during software engineering activity i and Ei+1 is the number of errors found during software engineering activity i+1 that are traceable to errors that were not discovered in software engineering activity i. A quality objective for a software team (or an individual software engineer) is to achieve DRE, which approaches 1. That is, errors should be filtered out before they are passed on to the next activity. 6.6 SUMMARY Measurement enables managers and practitioners to improve the software process; assist in the planning, tracking, and control of a software project; and assess the quality of the product (software) that is produced. Measures of specific attributes of the process, project, and product are used to compute software metrics. These metrics can be analyzed to provide indicators that guide management and technical actions. Process metrics enable an organization to take a strategic view by providing insight into the effectiveness of a software process. Project metrics are tactical. They enable a project manager to adapt project work now and technical approach in a real-time manner Both size- and function-oriented metrics are used throughout the industry. Size-oriented metrics use the line of code as a normalizing factor for other measures such as person-months or defects. The function point is derived from measures of the information domain and a subjective assessment of problem complexity. Software quality metrics, like productivity metrics, focus on the process, the project, and the product. By developing and analyzing a metrics baseline for quality, an organization can correct those areas of the software process that are the cause of software defects. Metrics are meaningful only if they have been examined for statistical validity. The control chart is a simple method 1or accomplishing this and at the same time examining the variation and location of metrics results. Measurement results in cultural change. Data collection metrics computation and metrics analysis are the three steps that must be implemented to begin a metrics program. In general, a goal-driven approach helps an organization focus on the right metrics for its business. By creating a metrics baseline----a database containing process and product measurements----software engineers and their managers can gain better insight into the work that they do and the product that they produce. 23