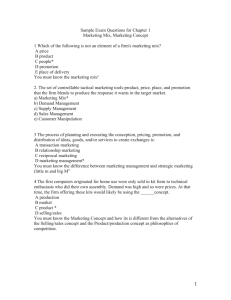

Evaluation parameter study for recommendation algorithms

Chhavi Rana, Department of Computer Science Engineering, University Institute of Engineering and Technology, MD University, Rohtak, Haryana, India. e-mail: chhavi1jan@yahoo.com

Sanjay Kumar Jain, Department of Computer Engineering, National Institute of Technology, Kurukshetra, 136119 Haryana, India. e-mail: skjnith@yahoo.com

Abstract: The enormous amount of Web data has made it difficult to use that data efficiently in recent years. A range of techniques has been applied to tackle this problem, most prominently personalization and recommender systems, which help users find relevant information on the Web. Most e-commerce websites apply such tools in one way or another. In the past decade, a large number of recommendation algorithms have been proposed; however, there has been comparatively little research on the criteria used to evaluate them. As a result, the traditional accuracy and classification metrics, which provide only a static view, are still used for evaluation. This paper studies how the evolution of user preferences over time can be captured in a recommender system through a new evaluation methodology that explicitly uses the time dimension. We also present the types of experimental setup that are generally used for recommender system evaluation. Furthermore, we discuss in detail the major accuracy metrics, as well as the metrics that go beyond accuracy, that have been researched in the past few years.

Keywords: Evolutionary; Clustering; Algorithm; Recommender Systems; Collaborative filtering; Data mining

I. INTRODUCTION

The evaluation of recommendation algorithms has focused on accuracy. Most research in this field concerns algorithms that reduce the error between predicted ratings and true ratings.
However, this way of testing recommendation algorithms ignores an important parameter: time [23, 25]. Real-world recommender systems are iteratively updated and reorganized as items are added and users provide ratings. The underlying rating dataset therefore grows, and any recommendations derived from it change over time. Experiments carried out on a fixed dataset cannot reflect this behavior of a real-world recommender system, and the effect of these changes on user preferences cannot be explored with any static method.

In the last decade there has been a large amount of research on recommender systems, most of it focused on implementing new recommendation algorithms. Researchers who develop a new algorithm usually compare its performance with a set of existing approaches by applying some evaluation metric, and many different metrics have been used to rank recommendation algorithms. No single algorithm can be expected to outperform all others in every setting, so evaluation has to be carried out with respect to particular parameters and goals. In this work, performance is evaluated with respect to temporal parameters. The main aim is to examine whether recommendation accuracy as well as scalability can be improved by adding a temporal dimension.

The experimental approaches generally used for evaluating recommendation algorithms fall into three categories: online experiments, user studies and offline experiments [21]. Online experiments can measure the real performance of a system, but their subjective nature and cost limit their use on a large scale. Offline experiments are more widespread, and therefore the major part of this paper describes the offline experimental setting in detail.
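The difference between a static offline split and a time-aware one can be illustrated with a short Python sketch. It assumes that each rating carries a timestamp; the `Rating` record and the functions `temporal_split` and `growing_windows` are hypothetical helpers for illustration, not the evaluation methodology proposed in this paper. Unlike a random split, a temporal split never trains on ratings made after the ones being predicted, and repeating it over a sequence of cutoffs mimics a rating dataset that grows over time.

```python
from dataclasses import dataclass
from typing import Iterator, List, Sequence, Tuple


@dataclass(frozen=True)
class Rating:
    user: str
    item: str
    value: float
    timestamp: int  # assumed field: time at which the rating was given


def temporal_split(ratings: Sequence[Rating], cutoff: int) -> Tuple[List[Rating], List[Rating]]:
    """Train on ratings made before `cutoff`, test on ratings made at or after it."""
    train = [r for r in ratings if r.timestamp < cutoff]
    test = [r for r in ratings if r.timestamp >= cutoff]
    return train, test


def growing_windows(
    ratings: Sequence[Rating], cutoffs: Sequence[int]
) -> Iterator[Tuple[int, List[Rating], List[Rating]]]:
    """For each cutoff, train on everything before it and test on the ratings
    that arrive before the next cutoff, so the training set grows over time."""
    ordered = sorted(cutoffs)
    for i, cutoff in enumerate(ordered):
        # The test window ends at the next cutoff; the last window is open-ended.
        end = ordered[i + 1] if i + 1 < len(ordered) else None
        train = [r for r in ratings if r.timestamp < cutoff]
        test = [
            r for r in ratings
            if r.timestamp >= cutoff and (end is None or r.timestamp < end)
        ]
        yield cutoff, train, test


if __name__ == "__main__":
    ratings = [
        Rating("u1", "i1", 4.0, 10),
        Rating("u1", "i2", 3.0, 20),
        Rating("u2", "i1", 5.0, 30),
        Rating("u2", "i3", 2.0, 40),
    ]
    for cutoff, train, test in growing_windows(ratings, [20, 35]):
        print(cutoff, len(train), len(test))  # prints "20 1 2", then "35 3 1"
```

Each cutoff yields one evaluation round; any of the metrics discussed in Section III could then be computed per round to observe how performance changes as the dataset grows.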
Several requirements must be met to ensure that the results of such experiments are statistically sound. The same evaluation approaches are also applied in related areas such as machine learning and information retrieval, which underlines their relevance to evaluating recommender systems [12, 32].

II. LITERATURE REVIEW

A wide range of algorithms has been applied in recommender systems to make their predictions accurate and scalable. These algorithms have been developed and improved over the past decade, to the point where a wide variety of algorithms exist for generating recommendations, and each approach has followers who assert that it is superior for some purpose. Identifying the best algorithm for a given purpose has proven challenging, because researchers disagree on which metrics should be used and on which attributes those metrics should be applied to. A dozen quantitative metrics and additional qualitative evaluation techniques are spread across the literature. Moreover, even if the metrics and their corresponding attributes are agreed upon, several other factors hamper the evaluation of recommender systems and their algorithms.

First, different algorithms may perform better or worse on different datasets. In addition, properties of the dataset, such as the rating scale and rating density, also affect the evaluation. Most recommendation algorithms have been tested on datasets that have many more users than items (e.g., the MovieLens dataset has 65,000 users and 5,000 movies). Such algorithms may be entirely unsuitable in a domain where there are many more items than users (e.g., an academic-reference recommender system, where there are many more research papers than users). The second factor that makes evaluation difficult is related to the objectives of the evaluation process.
Most evaluations focus on the “accuracy” of algorithms in predicting ratings. However, even early researchers accepted that properties other than accuracy have a bigger effect on user satisfaction and system performance. For example, Mobasher et al. [28] studied the extent to which recommendations cover the entire set of items, and McNee et al. [27] explored the novelty and serendipity of recommendations. Most researchers agree that the bottom-line measure of recommender system success should be user satisfaction, yet the measurement of user satisfaction varies between systems. In the e-commerce domain, user satisfaction is generally measured by the number of products purchased, but this measure cannot tell whether users actually liked the products after buying them. The ideal would be to ask users directly how satisfied they are, but this is not always feasible because users are often reluctant to provide such details. There is thus a significant challenge in balancing explicit feedback taken from users against implicit feedback derived from their navigation behavior, and it is very difficult to decide which combination of measures should be used in a comparative evaluation.

The majority of published empirical evaluations of recommender algorithms to date have focused on accuracy, even though this focus has been challenged by a few researchers in the past few years. The development and implementation of new evaluation metrics is growing into a full-fledged area of research [25, 30]. On the one hand, a few researchers have reviewed the traditional choices for evaluation. Herlocker et al. [21] analysed the key parameters that should be taken into account when evaluating collaborative filtering recommender systems: the user tasks, datasets, prediction quality metrics, prediction attributes and the user-based evaluation of the system as a whole.
In addition to reviewing the evaluation strategies used by prior researchers, they presented empirical results from an analysis of various accuracy metrics on one content domain, in which all the tested metrics collapsed roughly into three equivalence classes. Shani and Gunawardana [38] discussed how to compare recommenders on the basis of a set of properties that are relevant for a particular application; rather than benchmarking algorithms in absolute terms, they perform a comparative analysis in which a few algorithms are compared using some evaluation metric. Cremonesi et al. [10] focused on analyzing the performance of a recommendation algorithm on new data rather than on past data. They tried to complement the work of Herlocker et al. [21] with appropriate methods for partitioning the dataset and an integrated methodology for evaluating different algorithms on the same data.

On the other hand, a few researchers have tried to go beyond accuracy metrics. McNee et al. [27] argued informally that researchers should move beyond the conventional accuracy metrics and their associated experimental methodologies to get a true picture of real-world performance, and proposed new user-centric directions for evaluating recommender systems. Similarly, Ge et al. [14] focused on two crucial metrics in recommender system evaluation: coverage and serendipity. They analyzed the role of coverage and serendipity as indicators of recommendation quality and discussed novel ways of measuring and interpreting the obtained measurements. Olma et al. [29] proposed an evaluation framework that tries to extract the essential features of recommender systems. In this framework, the most essential feature is the objective of the recommender system, namely (i) choosing which items should be shown to the user and (ii) deciding when and how the recommendations should be shown. Based on this framework, they developed a new metric and compared it with other metrics. Krohn-Grimberghe et al. [18] focused on the requirements of business and research users and proposed a novel, extensible framework for the evaluation of recommender systems. The framework takes a multidimensional, user-driven approach based on interactive visual analysis, which allows queries to be refined and reshaped easily. Integrated operations such as drill-down and slice/dice enable the user to assess the performance of recommendations in terms of business criteria such as increase in revenue, as well as accuracy, prediction error, coverage and more. Schröder et al. [37] focused on the importance of clearly defining the objective of an evaluation and on how this objective affects the selection of an appropriate metric.

III. PERFORMANCE PARAMETER

As noted in Section II, the majority of published empirical evaluations of recommender algorithms to date have focused on accuracy, although the development of new evaluation metrics is growing into a full-fledged area of research [25, 30]. Accuracy metrics empirically measure the difference between the ratings predicted by the recommendation algorithm and the user's true ratings of preference. Researchers have a number of choices when selecting an accuracy metric. The list below covers the most prominent metrics, including accuracy metrics as well as some newly proposed ones, but it is certainly not exhaustive.

A. Accuracy metrics

The accuracy of recommendation algorithms has been evaluated in the research literature since 1994 [31], and a number of different metrics have been used in these published evaluations. The accuracy metrics described here are used in most recommender system experiments. Herlocker et al. [21] broadly classify them into three classes: predictive accuracy metrics, classification accuracy metrics and rank accuracy metrics.

1) Predictive Accuracy Metrics
Predictive accuracy metrics measure how close the recommender system's predicted ratings are to the true user ratings. They are generally used in application domains where predicted ratings are displayed to the user. For example, the Lastfm music recommender [8] predicts the number of stars that a user will give each song and displays that prediction to the user. Researchers who want to measure predictive accuracy compute the difference between the predicted rating and the true rating using a metric such as mean absolute error.

a) Mean Absolute Error and Related Metrics

Mean absolute error (MAE) measures the average absolute deviation between a predicted rating and the user's true rating. MAE, given in Eq. (1), has been used to evaluate recommender systems in several cases [8, 21, 39].

$$E = \frac{1}{N} \sum_{i=1}^{N} \left| p_i - r_i \right| \qquad (1)$$

where E is the MAE, p_i is the predicted rating, r_i is the true rating, and N is the total number of ratings.

Three variants of mean absolute error are mean squared error, root mean squared error and normalized mean absolute error. Mean squared error and root mean squared error square each error before summing, which places more emphasis on large errors. Normalized mean absolute error [17] is mean absolute error normalized with respect to the range of rating values; the normalization is intended to allow comparison between prediction runs on different datasets, although its usefulness for this purpose has not yet been investigated. A drawback of these metrics is that they are less suitable for tasks such as Find Good Items, where a ranked result is given to the user, who views only the top-ranking items. They are also less relevant when the granularity of the user's true preference is small.

2) Classification Accuracy Metrics

Classification metrics measure how often a recommendation algorithm makes correct or incorrect decisions about whether an item is good.
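Returning briefly to the predictive accuracy metrics of Section III.A.1: MAE and its three variants differ only in how the per-rating errors p_i - r_i are aggregated. The following minimal Python sketch uses hypothetical function names, assumes the predicted and true ratings arrive as two parallel lists, and takes NMAE to be MAE divided by (r_max - r_min), one common reading of "normalized with respect to the range of rating values".

```python
import math
from typing import List, Sequence


def _errors(predicted: Sequence[float], true: Sequence[float]) -> List[float]:
    """Per-rating errors p_i - r_i for two aligned, non-empty rating lists."""
    if len(predicted) != len(true) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return [p - r for p, r in zip(predicted, true)]


def mae(predicted: Sequence[float], true: Sequence[float]) -> float:
    """Eq. (1): mean of |p_i - r_i|."""
    errs = _errors(predicted, true)
    return sum(abs(e) for e in errs) / len(errs)


def mse(predicted: Sequence[float], true: Sequence[float]) -> float:
    """Mean squared error: squaring gives large errors more weight."""
    errs = _errors(predicted, true)
    return sum(e * e for e in errs) / len(errs)


def rmse(predicted: Sequence[float], true: Sequence[float]) -> float:
    """Root mean squared error, expressed back on the rating scale."""
    return math.sqrt(mse(predicted, true))


def nmae(predicted: Sequence[float], true: Sequence[float],
         r_min: float, r_max: float) -> float:
    """MAE divided by the range of possible rating values (assumed definition)."""
    return mae(predicted, true) / (r_max - r_min)
```

For predictions [4.0, 3.5, 2.0, 5.0] against true ratings [5, 3, 2, 3] on a 1-5 scale, `mae` gives 0.875, `mse` gives 1.3125 and `nmae` gives 0.21875, while `rmse` is about 1.146: the single error of 2 pulls RMSE well above MAE, which is the extra emphasis on large errors described above.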
These metrics are more suitable for applications such as Find Good Items, where users face a binary choice. When applied to nonsynthesized data in offline experiments, they can be challenged by data sparsity: a recommendation list may contain items that the user has not rated, and the way such items are treated can bias the evaluation. These metrics are also less relevant for domains in which the granularity of true preference is non-binary. Commonly used classification accuracy metrics include precision, recall and ROC.

a) Precision and Recall and Related Measures

Precision and recall are the most popular metrics for evaluating information retrieval systems [9], and they have also been used to evaluate recommender systems [3, 5, 33, 34]. Precision and recall are computed from a 2×2 table, such as the one shown in Table I. To calculate them, the item set is first divided into two classes: relevant and not relevant. The set is then further divided into two segments: the items that were returned to the user (selected/recommended) and the items that were not. Precision is defined as the ratio of relevant items selected to the number of items selected, as shown in Eq. (2):

$$P = \frac{N_{rs}}{N_s} \qquad (2)$$

where P is precision, N_rs is the number of relevant items selected, and N_s is the total number of items selected. Precision represents the probability that a selected item is relevant. Recall, shown in Eq. (3), is defined as the ratio of relevant items selected to the total number of relevant items available, and represents the probability that a relevant item will be selected:

$$R = \frac{N_{rs}}{N_r} \qquad (3)$$

where R is recall, N_rs is the number of relevant items selected, and N_r is the total number of relevant items available.

Figure 2. Relevant and irrelevant items

b) F1 Metric

The F1 metric combines precision and recall into a single measure, given in Eq. (4). F1 has been used to evaluate recommender systems by Sarwar et al. [33, 34].
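Precision and recall as defined in Eqs. (2) and (3), together with the F1 combination just introduced (the harmonic mean 2PR/(P + R)), can be computed from a recommended list and the set of items known to be relevant. In this minimal Python sketch the function name `precision_recall_f1` is hypothetical, the relevant set is assumed to be known in advance (for example, derived from held-out ratings), and returning 0.0 when a denominator is zero is an assumed convention.

```python
from typing import Iterable, Set, Tuple


def precision_recall_f1(recommended: Iterable[str],
                        relevant: Set[str]) -> Tuple[float, float, float]:
    selected = set(recommended)
    n_s = len(selected)               # N_s: items selected (recommended)
    n_r = len(relevant)               # N_r: relevant items available
    n_rs = len(selected & relevant)   # N_rs: relevant items that were selected
    # Items the user never rated are simply absent from `relevant`, so they
    # count as not relevant here: this is the sparsity bias noted in the text.
    precision = n_rs / n_s if n_s else 0.0   # Eq. (2)
    recall = n_rs / n_r if n_r else 0.0      # Eq. (3)
    # F1: harmonic mean of precision and recall, 2PR / (P + R)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, recommending ["a", "b", "c", "d"] when the relevant set is {"a", "c", "e"} gives N_rs = 2, N_s = 4 and N_r = 3, so P = 0.5, R = 2/3 and F1 = 4/7 (about 0.571).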
$$F_1 = \frac{2PR}{P + R} \qquad (4)$$

where P is precision and R is recall.

Another approach, taken by the TREC community, is mean average precision, which computes the average precision across several different levels of recall, or the average precision at the rank of each relevant document [19]. F1 and mean average precision may be appropriate if the underlying precision and recall measures on which they are based are themselves determined to be appropriate.

c) ROC Curves, Swets’ A Measure, and Related Metrics

The ROC metric [40] is an alternative method that measures the degree to which an information filtering system can successfully distinguish between signal (relevance) and noise. The ROC model assumes that every item is assigned a predicted level of relevance. Consequently, there are two distributions, shown in Figure 1 and Figure 2: one is the probability distribution of the predicted level of relevance (the x-axis) for items that are not relevant, and the other is the same distribution for items that are relevant. A system is better at differentiating relevant items from non-relevant items when these two distributions are clearly separated.

Figure 2. ROC Curves

3) Rank Accuracy Metrics

These metrics measure the ability of a recommendation algorithm to produce a recommended ordering of items that matches how the user would have ordered the same items. Ranking metrics are more appropriate for evaluating algorithms that create recommendation lists for the user, especially when user preferences are non-binary. When user preferences are binary (the user is concerned only with whether items are good enough, not with their order), rank accuracy metrics are generally not preferred. Other metrics include the Half-life Utility Metric as defined by Breese et al.
[8], a metric designed for tasks in which the user is presented with a ranked list of results and is unlikely to browse very deeply into it. Heckerman et al. [20] give another description of this metric, based on the assumption that most Internet users do not browse very deeply into the results returned by search engines. Another metric, the NDPM (normalized distance-based performance measure), was used to evaluate the accuracy of the FAB recommender system [2]. It was originally proposed by Yao [41], who developed NDPM theoretically using an approach from decision and measurement theory. Although the literature contains many other similar metrics, our focus is on the widely used ones, namely accuracy and classification metrics.

IV. CONCLUSION

In this paper we presented the types of experimental setup that are generally used for recommender system evaluation. We also reviewed the literature and listed the efforts of various researchers in compiling such approaches and developing new ones. An overview of the major accuracy metrics, and of metrics that go beyond accuracy as researched in the past few years, was also given. We also proposed a new methodology that is particularly suited to the temporal evaluation of recommender systems in general. This paper therefore provides some innovative guidelines that help researchers choose an appropriate evaluation metric for a temporal evaluation scenario. In future work, we will apply our evaluation framework to a set of benchmark recommendation algorithms to justify its usage.

REFERENCES

[1] Agrawal, R., Imielinski, T., and Swami, A. 1993. Mining association rules between sets of items in large databases. Proceedings of the ACM SIGMOD International Conference on Management of Data, June 1, 1993, ACM, New York, USA, pp. 207-216.
[2] Balabanovic, M. and Shoham, Y. 1997. Fab: Content-Based, Collaborative Recommendation. Communications of the ACM, 40(3): 66-72.
[3] Basu, C., Hirsh, H., and Cohen, W. 1998. Recommendation as classification: using social and content-based information in recommendation. In Proceedings of the 1998 National Conference on Artificial Intelligence (AAAI-98), pp. 714-720.
[4] Bellogín, A., Castells, P., and Cantador, I. 2011. Precision-based evaluation of recommender systems: An algorithmic comparison. In Proceedings of RecSys 2011, pp. 333-336.
[5] Billsus, D. and Pazzani, M. 1998. Learning Collaborative Information Filters. In Shavlik, J., ed., Machine Learning: Proceedings of the Fifteenth International Conference, Morgan Kaufmann Publishers, San Francisco, CA.
[6] Box, G.E.P., Hunter, W.G., and Hunter, J.S. 1978. Statistics for Experimenters: An Introduction to Design, Data Analysis, and Model Building. John Wiley & Sons, New York.
[7] Boutilier, C. and Zemel, R. 2002. Online queries for collaborative filtering. Ninth International Workshop on Artificial Intelligence and Statistics.
[8] Breese, J., Heckerman, D., and Kadie, C. 1998. Empirical Analysis of Predictive Algorithms for Collaborative Filtering. In Proceedings of the 14th Conference on Uncertainty in Artificial Intelligence (UAI-98), pp. 43-52.
[9] Cleverdon, C. and Kean, M. 1968. Factors Determining the Performance of Indexing Systems. Aslib Cranfield Research Project, Cranfield, England.
[10] Cremonesi, P., Koren, Y., and Turrin, R. 2010. Performance of Recommender Algorithms on Top-N Recommendation Tasks. In Proceedings of RecSys 2010.
[11] Das, A., Datar, M., Garg, A., and Rajaram, S. 2007. Google news personalization: Scalable online collaborative filtering. Proceedings of the 16th International Conference on World Wide Web (WWW'07).
[12] Demsar, J. 2006. Statistical Comparisons of Classifiers over Multiple Data Sets. Journal of Machine Learning Research 7: 1-30.
[13] Fu, C. and Silver, D. 2004. Time-Sensitive Sampling for Spam Filtering. In Tawfik, A. and Goodwin, S. (eds.), Advances in Artificial Intelligence. Springer, Berlin/Heidelberg.
[14] Ge, M., Delgado-Battenfeld, C., and Jannach, D. 2010. Beyond accuracy: evaluating recommender systems by coverage and serendipity. In Proceedings of the Fourth ACM Conference on Recommender Systems (RecSys '10). ACM, New York, NY, USA, pp. 257-260.
[15] Karypis, G. 2001. Evaluation of Item-Based Top-N Recommendation Algorithms. In Proceedings of CIKM 2001, pp. 247-254.
[16] Good, N., Schafer, J.B., Konstan, J.A., Borchers, A., Sarwar, B., Herlocker, J., and Riedl, J. 1999. Combining Collaborative Filtering with Personal Agents for Better Recommendations. Proceedings of the Sixteenth National Conference on Artificial Intelligence (AAAI-99), AAAI Press, pp. 439-446.
[17] Goldberg, D., Nichols, D., Oki, B. M., and Terry, D. 1992. Using collaborative filtering to weave an information tapestry. Communications of the ACM, 35(12), pp. 61-70.
[18] Krohn-Grimberghe, A., Nanopoulos, A., and Schmidt-Thieme, L. 2010. Proceedings of LWA2010 - Workshop-Woche: Lernen, Wissen & Adaptivitaet, Kassel, Germany.
[19] Harman, D. 1995. Overview of the Fourth Text Retrieval Conference.
[20] Heckerman, D., Chickering, D. M., Meek, C., Rounthwaite, R., and Kadie, C. 2000. Dependency networks for inference, collaborative filtering, and data visualization. Journal of Machine Learning Research 1: 49-75.
[21] Herlocker, J., Konstan, J., Terveen, L., and Riedl, J. 2004. Evaluating collaborative filtering recommender systems. ACM Transactions on Information Systems, 22(1): 5-53.
[22] Kohavi, R. and Provost, F. Applications of data mining to electronic commerce. Data Mining and Knowledge Discovery, 5(1-2): 5-10.
[23] Koren, Y. 2009. Collaborative filtering with temporal dynamics. Proceedings of the 15th ACM International Conference on Knowledge Discovery and Data Mining, 53(4): 447-452.
[24] Koychev, I. and Schwab, I. 2000. Adaptation to Drifting User's Interests. Proceedings of the ECML2000/MLnet Workshop: ML in the New Information Age, Barcelona, Spain, pp. 39-45.
[25] Lathia, N., Hailes, S., and Capra, L. 2009. Temporal collaborative filtering with adaptive neighbourhoods. Proceedings of the 32nd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '09), pp. 796-797.
[26] Mahmood, T. and Ricci, F. 2007. Learning and adaptivity in interactive recommender systems. In Dellarocas, C. and Dignum, F., editors, Proceedings of the ICEC'07 Conference, Minneapolis, USA, pp. 75-84.
[27] McNee, S. M., Riedl, J., and Konstan, J. 2006. Accurate is not always good: How Accuracy Metrics have hurt Recommender Systems. In Extended Abstracts of the 2006 ACM Conference on Human Factors in Computing Systems (CHI 2006).
[28] Mobasher, B., Dai, H., Luo, T., and Nakagawa, M. 2001. Effective personalization based on association rule discovery from web usage data. WIDM, pp. 9-15.
[29] Olma, H. F. and Gaudioso, E. 2008. Evaluation of recommender systems: A new approach. Expert Systems with Applications, 35(3): 790-804.
[30] Campos, P. G., Díez, F., and Bellogín, A. 2011. Temporal Rating Habits: A Valuable Tool for Rater Differentiation. In Proceedings of the 2nd Challenge on Context-Aware Movie Recommendation (CAMRa 2011), at the 5th ACM Conference on Recommender Systems (RecSys 2011), Chicago, IL, USA, October 2011.
[31] Resnick, P. and Varian, H.R. 1997. Recommender Systems. Communications of the ACM, 40(3): 56-58.
[32] Salzberg, S.L. 1997. On Comparing Classifiers: Pitfalls to Avoid and a Recommended Approach. Data Mining and Knowledge Discovery 1(3): 317-327.
[33] Sarwar, B. M., Karypis, G., Konstan, J. A., and Riedl, J. 2000. Analysis of Recommender Algorithms for E-Commerce. In Proceedings of the 2nd ACM E-Commerce Conference (EC'00).
[34] Sarwar, B. M., Karypis, G., Konstan, J. A., and Riedl, J. 2000. Application of Dimensionality Reduction in Recommender System -- A Case Study. In ACM WebKDD 2000 Web Mining for E-Commerce Workshop.