LEARNING AS APPLIED TO SIMULATED ANNEALING

advertisement

Learning as Applied to Stochastic Optimization for Standard Cell Placement

Lixin Su, Wray Buntine, A. Richard Newton, and Bradley S. Peters

University of California at Berkeley, Department of EECS

Email: lixinsu@eecs.berkeley.edu

Abstract

Although becoming increasingly important, stochastic

algorithms are often slow since a large number of

random design perturbations are required to achieve an

acceptable result—they have no built-in “intelligence”.

In this work, we used regression to learn the swap

evaluation function while simulated annealing is applied

to 2D standard-cell placement problem. The learned

evaluation function is then applied to the trained

simulated annealing algorithm (TSA). The annealing

quality improvement of TSA was 15% ~ 43% for the set

of examples used in learning and 7% ~ 21% for new

examples. With the same amount of CPU time, TSA could

improve the annealing quality by up to 28% for some

benchmark circuits we tested. In addition the use of the

evaluation function successfully predicted the effect of the

windowed sampling technique and derived the informally

accepted advantages of windowing from the test set

automatically.

1. INTRODUCTION

Stochastic combinatorial optimization techniques, such

as simulated annealing and genetic algorithms, have

become increasingly important in design automation as

the size of design problems have grown and the design

objectives have become increasingly complex. In

addition, as we move towards deep-submicron

technologies, the cost function must often evolve or

handle a variety of tradeoffs between area power, and

timing, for example. Design technologists can often tackle

simplified versions of some of these problems, where the

optimizing objective can be stated in terms of a small

number of well-defined variables, using deterministic

algorithms. Such algorithms can produce as good or even

better results than the stochastic approaches in a shorter

period of time. Unfortunately, these algorithms run into a

variety of difficulties as the problems scale up and the

objective functions begin to capture the real constraints

imposed on the design, such as complex timing, power

dissipation, or test requirements. Stochastic algorithms

are naturally suited to these large and more complex

problems since they are very general, making random

perturbations to designs and, in some regular fashion,

letting a cost function determine whether to keep the

resulting change.

However, stochastic algorithms are often slow since a

large number of random design perturbations are required

to achieve an acceptable result—they have no built-in

“intelligence”. The goal of this research was to determine

whether statistical learning techniques can improve the

run-time performance of stochastic optimization for a

particular solution quality. In this paper, we present

results for simulated annealing as representative

stochastic optimization approach and the standard-cellbased layout placement problem was selected to evaluate

the utility of such a learning-based approach. The

standard cell problem was selected since it is a very well

explored problem using both deterministic as well as

“manually trained” stochastic approaches. While the

longer-term goal of this research is to apply incremental

probabilistic learning approaches to the stochastic

optimization, such as Bayesian [13,21] learning, in this

paper we report results for a regression-based empirical

approach to learning, to determine whether the overall

approach had a basis for further research.

Stochastic placement algorithms have evolved

significantly since their initial application in the EDA

area more than a decade ago[1]. Over that period, the

qualities of results they can produce have improved

significantly. For example, in the development of

TimberWolf system [6-8], which is a general purpose

placement and routing package based on simulated

annealing, many approaches have been tried to speed up

the algorithm. They include reducing the computation

time of each move, early rejection of bad moves, the use

of efficient and adaptive cooling schedules combined

with windowed sampling, and hierarchical or clustered

annealing. In many ways, these variations and

improvements can be viewed as “manually learned”

approaches, based on the application of considerable

experimental as well as theoretical work taking place over

a long time.

In this work, we explore another opportunity for

improving the utility of a stochastic algorithm through

automatic learning of the relative importance of various

criteria in the optimization strategy. We learn from

previous annealing runs to distinguish potentially good

moves from bad ones. The good ones will be selected

with a higher probability to expand the search.

Some preliminaries of both simulated annealing and

linear regression are presented in the next section. In

Section 3, we describe in detail how we apply linear

regression as our learning technique and how we apply

the learned information in the modified simulated

annealing. Section 4 contains our experimental results and

in Section 5 we present our conclusions and areas for

future work.

2. SIMULATED ANNEALING AND REGRESSION

There are many versions of the simulated annealing

algorithm [14, 17-19]. All of them require the definition

of the move set, which is the set of local perturbations

that can be made to the current solution. The algorithm is

composed of a series of Metropolis procedures [9,16].

Each Metropolis procedure is composed of a series of

moves, which are picked up from the move set according

to the so-called proposal distribution [5]. Each of the

moves will be accepted if it can pass a Boltzmann test [1],

which is controlled by a parameter called temperature;

otherwise, it is rejected. The algorithm starts from an

initial solution, goes through all the perturbations, when it

stops, the last or best ever seen solution will be returned

as the final solution.

The move set totally defines the structure of the solution

space, which can be represented as a graph, where the

nodes in the space represent solutions, and the edges

represent specific moves leading from one solution to

another. In our implementation of the simulated annealing

placement algorithm, pair-wise cell swaps constitutes the

move set.

Linear regression is a well-developed statistical

technique for data fitting, it can establish the response

model between the inputs and the output taking the

following form: y P [12]. P is called the design

matrix. By linear regression, we estimate as

ˆ

( P P ) 1 P y , which makes the Residual Sum of Squares

ˆ

ˆ

RSS ( y P )( y P ) minimum, where ^ indicates an

estimated value. For additional properties of this estimate,

Page 2

we refer the reader to [12]. The following fact will used

in the discussions later in this paper.

Fact 1: If any parameter in the model is scaled by , the

corresponding ̂ will be scaled by 1 , all the other

ˆ ' s and the predicted response will remain unchanged.

3. LEARNING

Learning [11,24], in this paper, means the construction

of the evaluation function of the swaps based on the past

annealing experience. The deduction procedure of the

evaluation function we use in this work is linear

regression, however incremental/adaptive approaches, in

particular those based on Bayesian theory [13,21] or

Support Vector Machines (SVM) [24], offer significant

potential. Learning is appealing to us because the

evaluation function need not be obtained by explicit

insight into the problem and explicit programming.

Rather, the problem-specific details, or even better the

problem-domain-specific

details,

are

extracted

automatically from the history of running the optimization

method on representative design problems and observing

the quality of the results.

In conventional simulated annealing, the Boltzmann test

is the only guidance used during the search procedure.

Given a finite amount of computation time, it is clear that

this guidance alone is not sufficient to yield an efficient

search and so we employ an additional evaluation

function. The evaluation function is used to help us

locally expand the node. In choosing an evaluation

function, the goal is that it is able to guarantee a highquality final solution. For our test problem, the evaluation

of the swap should reflect the swap's contribution to the

quality of the final solution. Regression, with the final

annealing quality as its response, was chosen as our

learning engine. Each swap is characterized as a

parameter vector, which serves as the input to the

regression model.

3.1 The form of the response model

To this end, first we run the conventional simulated

annealing and collect a variety of data that is used to

perform the linear regression and establish the response

model, which ideally should take the following form:

y 0

t

q

l

i 1

j 1 k 1

i

jk

p ijk

(1)

In our conventional implementation of simulated

annealing, we have t=300 temperatures and l=20 swaps

for each temperature. Each move is characterized with

q=7 parameters.

p ijk is then the jth parameter of the kth

swap tried at the ith temperature if the swap is accepted,

otherwise it is set to zero. y is the final cost function

returned by each simulated annealing run. Hence the

model tries to correlate each accepted swap with the final

solution quality. In order to have more flexibility and

reduce the number of predictors, we evenly divide the

temperatures into r = 10 ranges [10], and assume

that ijk jg if temperature i belongs to the gth range.

modification on our placement test problem than the

theoretical issues. In this paper the ChooseSetSize is

selected to be 5 [10].

SmartMove {

for(i=1,ChooseSetSize )

{ mi RandomMove;

y (m i )

So the response model now takes a new form:

y 0

r

q

i 1

j 1

i

j

Pji

r

r

// r : current temperature range}

m m | y ( m ) is min

(2)

return(m );}

where Pji is the sum of the jth parameter of all accepted

swaps in the ith temperature range. Clearly, all swaps

tried in the same temperature range were treated equally.

3.2 Model parameter definition

The cost function for the placement problem is defined

as the sum of half perimeters of bounding boxes of all

nets in the net-list. The swap parameters we selected for

our training experiment pi , i 1,...7, are defined as

follows. For detailed definition, please see the full version

of the paper [25].

p1 : The Euclidian distance between cells c1 and c2

p2 : The square of the distance between c1 and the origin

(0,0) (a control parameter)

p3 : The square of the distance between c2 and the origin

(0,0) (a control parameter)

p4 : The connectivity between

1 p1i ... q p qi ;

c1 and c2

p5 : The external connectivity of the cells in the

candidate move.

p 6 , p 7 : Total force change on the swapped cell [15,25]

All the above parameters and the final annealing quality

were normalized to be between 0 and 1. As the first step,

an individual model is constructed for each specific

circuit. We expect that the normalization help the

individual model less instance specific.

3.3 Application of the evaluation function

The trained simulated annealing is the same as the

conventional one except that SmartMove, shown in

Figure 1, is used instead of RandomMove when a new

move is proposed for the Boltzmann test. At each

temperature, the walk in the solution space is actually a

Markov chain. This modification of the algorithm [5]

makes it extremely hard to do theoretical analysis based

on the Markov chain theory [2-4]. However, we would

rather be more concerned with the practical effect of the

Page 3

Figure 1: SmartMove

However, is it possible to use only part of the response

model as the evaluation function? From the scaling

independence of the model parameters as summarized by

Fact 1 in Section 2, the ith segment of the model actually

correlates p

with the final annealing quality. The

average of the parameter vector was done over all the

accepted swaps in a certain temperature range. So this

segment actually captured the “average” information of

all the accepted swaps in that temperature range, hence it

was eligible to be used as the evaluation function.

Another question is that, since the input to the learned

model is the summation of accepted swap parameters, can

a single swap parameter be used as input in the evaluation

function?

Recall

that

given

the

assumption

that ijk jg , then Equation (1) and Equation (2) are

equivalent. Hence, the evaluation function can be seen as

the contribution to the final cost function of a single

accepted swap.

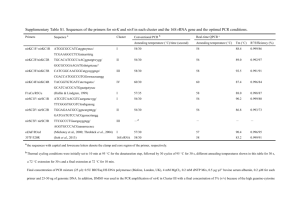

4. EXPERIMENTAL RESULTS

We compare three variations of standard cell

placement: the Conventional Simulated Annealing (CSA),

the Trained Simulated Annealing (TSA) where the 's

are obtained by running CSA on each of the eight

benchmark circuits (Group 1), and an Untrained version

of Simulated Annealing (USA), where the 's are all

equal and set to 1. The following are the benchmark

circuits used in this paper.

NAME

CELLS

NETS

SOURCE

GROUP

C5315

C3540

I10

Struct

C6288

Industry1

729

761

1269

1888

1893

2271

907

811

1526

1920

1925

2593

1

1

1

2

1

2

1

1

1

1

1

1

Primary2

Biomed

Industry2

Industry3

Avq.small

Avq.large

S9234

S15850

S38417

2907

6417

12142

15059

21854

25114

979

3170

8375

3029

5737

13419

21940

22118

25378

1007

3183

8403

2

2

2

2

2

2

3

3

3

For each fixed number of SPT, the annealing quality of

TSA was recorded. In this paper, annealing quality means

the average of the final cost function values returned by

annealing runs so that the accuracy is kept within 1%

[25]. Meanwhile, CSA was also similarly conducted. The

results are summarized in Table 2. For each SPT, we

compute the ratio of the annealing quality returned by

TSA and CSA. (1- ratio) is the Percentage Improvement

(PI) of the annealing quality of TSA over CSA. Both the

best and worst PI’s are summarized in Table 2.

1

1

2

2

2

2

2

2

2

Table 1: benchmarks used in experiments

The circuits from Source 1 were taken from mcnc91

combinational benchmark set, the circuits from Source 2

were taken from [26]. The circuits from Source 3 were

taken from ISCAS89 sequential benchmarks.

All the above benchmark circuits are divided into two

groups. Group 1 contains circuits used to construct the

response models, Group 2 consists of those only used to

“blind” test the generality of the trained model.

Parameters in the experiment such as the number of

temperature regions, the choose set size, and so on were

selected after extensive empirical analysis that

demonstrated that these values were sufficient to

represent the problem domain well [10].

4.1 Self test of the individual and general model

First, we constructed individual models for each of the

circuits of group 1. The learning data were obtained by

running CSA 5,000 [25] times for each of the circuits.

The 300 temperatures in CSA were evenly divided into

10 ranges. The number of swaps per temperature was

determined to be 20 considering the size of the net-lists.

Although around 30 million swaps were tried during the

learning phase, it’s still a small number compared to the

total number of possible swaps N ! N ( N 1) / 4 . Here,

N is the number of cells in the net-list, which is at least

700 for our experiments. Hence it can’t be taken for

granted that the individual model can help improve the

annealing quality even for the same individual circuit. So,

we decided to apply each individual model to the

individual circuit by running TSA to see if the individual

model is robust for the whole huge solution space.

Second, we used simple averaging of the parameters

across the test circuits to build the overall general model.

While there are more effective statistical approaches to

the combination of the individual models, simple

averaging can be seen as a simple and sub-optimal

approach. This general model was then applied to all the

circuits of group 1 by running TSA in order to see if the

general model works as well as the individual model

does.

The above TSA was conducted with the variation of the

number of Swaps Per Temperature (SPT) from 10 to 800.

Page 4

Example

C5315

C3540

I10

Struct

C6288

Industry1

Primary2

Biomed

TSA with Individual Model

Best PI

Worst PI

25%

15%

34%

24%

25%

18%

39%

26%

42%

23%

33%

20%

43%

28%

38%

17%

TSA with General Model

Best PI

Worst PI

25%

15%

34%

24%

25%

18%

39%

26%

42%

23%

34%

20%

44%

18%

39%

17%

Table 2: Comparison of TSA, CSA for group 1

TSA with both the individual and the general model

works pretty well within a large range of SPT although

the models were learned with SPT = 20. With the same

number of steps walked in the solution space, the

annealing quality returned by TSA is at least 15% better

than that returned by CSA.

4.2 Blind test of the general model

Next we tested the generality of the general model in a

more significant way. The general model was applied to

circuits in Group 2 which have nothing to do with the

learning of the model. The experiments were conducted

similarly as what we did for the Group 1 circuits, except

that only the general model was used in TSA. The results

are summarized in Table 3.

circuit

Best PI

Worst PI

Circuit

Best PI

Worst PI

Industry2

Industry3

Avq.small

Avq.large

40%

37%

35%

34%

10%

7%

8%

7%

S9234

S15850

S38417

37%

41%

38%

27%

21%

14%

Table 3: Comparison of TSA, CSA for group 2

With the same number of steps walked in the solution

space, TSA with the general trained model improved the

annealing quality by 7% ~ 41% compared to CSA. Notice

that except for s9234, s15850 and s38417, all the other

“blindly” tested circuits are 1 ~ 3 times larger than the

largest circuit in group 1 from which the general model

was trained.

4.3 Information captured by the model

We were concerned about whether the model had really

learned something general and of use. The untrained USA

approach was used to determine whether the choose-set

itself was playing a key role in the improvement, rather

than the training. For a representative example, say

C6288, the quality of CSA and USA was almost the

same, with TSA again producing significantly better

results. For the data in the following table SPT is 20 and

the general model is used in TSA.

CSA

USA

TSA

Min

Mean

Max

Std

38484

37779

27805

39293

38835

28686

39915

39993

29426

347

361

343

Based on the two relationships, for a given CPU time,

TSA always returns better annealing result than CSA.

The best percentage improvement for each circuit was

recorded and is summarized in Table 5. For the same

amount of CPU time, the annealing quality was improved

from 12% ~ 22% for all the Group 2 circuits using TSA

instead of CSA. For Group 1, circuits, the best percentage

improvement of final quality was 14% ~ 28%.

Circuit

Industry2

Industry3

Avq.small

Avq.large

Industry1

Best PI

12%

14%

15%

14%

18%

circuit

S15850

S38417

C5315

C3540

I10

Best PI

21%

17%

15%

23%

14%

circuit

struct

C6288

S9234

Primary2

biomed

Best PI

20%

28%

22%

32%

18%

Table 5: Best percentage annealing quality

improvement for given CPU time

Table 4: Distribution of solutions of TSA, CSA,

and USA

Furthermore we investigated a typical TSA annealing

run for avq.small. SPT was set at 70 and at each

temperature the average distance between the two cells of

the swap proposed by the model right before the

Boltzmann test was recorded. Over the years, researchers

have found that a windowing approach, where the

maximum distance between cells in the candidate move is

decreased as temperature decreases, tends to improve the

overall run-time performance of the annealing at little or

no cost in the quality of the final result [1,6]. As the data

in Figure 2 illustrates, the general model derived purely

from the training runs on the test examples, derived

without any a priori hints that a windowing approach

would lead to an optimal utility result. Our approach

indeed has learned something nontrivial!

4.4 CPU timing analysis

The use of response model in the TSA introduces some

computational overhead, since it performs learning, swap

evaluation/selection, and extra updating of the internal

data structure if the swap is accepted. Because of the

generality of the learned model, training need only be

performed once so it is not a real problem.

In our implementation, the overall CPU time can be

controlled by changing SPT. CSA was run with SPT

varying from 10 to 1,500, TSA was run with SPT varying

from 10 to 800 using the general model. Both the

annealing quality and CPU time were recorded. For each

circuit, we established the piecewise linear relationship

between annealing quality and CPU time through linear

interpolation of the data points for both CSA and TSA.

Page 5

Figure 2: Average proposed swap distance as a

function of temperature for TSA on avq.small

5. CONCLUSIONS AND FUTURE WORK

We have demonstrated that a stochastic algorithm, in

this case simulated annealing, can be trained using prior

examples to improve its quality-of-results on a

mainstream EDA application by 7% ~ 43% over a

conventional approach with the same number of moves in

the solution space. For a given CPU time, TSA can

outperform CSA by up to 28%. A simple regression

model was used to train the algorithm, however we

believe that incremental probabilistic approaches to

training, such as the application of Bayes' Rule or SVM's

[24], can also be used. We demonstrated the robustness of

the approach by using representative "blind" examples to

test the general model.

Although we did not intend to make our TSA to beat

the best existing simulated annealing algorithms for

standard cell placement. Instead, we did show that TSA

with learned information outperformed CSA significantly.

We believe that despite our simple implementation of

CSA, learning has revealed some useful properties of the

solution space (within the framework of simulated

annealing) for the standard cell placement problem.

Hence this learning approach can now be applied to more

complicated simulated annealing algorithms [20,22,23] to

improve their performance. We also believe the

application of general-purpose stochastic algorithms, with

built-in general-purpose approaches to learning, could

eventually form the basis of a general and adaptive

approach to the solution of a variety of VLSI CAD

problems.

6. ACKNOWLEDGEMENTS

This work was supported in part by Semiconductor

Research Corporation under contract DC-324-030, by the

Digital Equipment Corporation and by Intel. The authors

sincerely thank them for their continuing support.

7. REFERENCES

[1] S .Kirkpatrick, C. D. Gelatt, and Jr., M. P. Vecchi,

“Optimization by simulated annealing”, Science, vol.220,

pp.671-880, 13 May 1983

[2] D. Mitra, F. Romeo, et al. “Convergence and finite time

behavior of simulated annealing”, Advances in Applied

Probability, 18:747-771, 1986

[3] P. J. M. van Laarhoven and E. H. L. Arts, “Simulated

annealing: theory and applications”, Kluwer Academic

Publishers, 1987

[4] Alistair Sinclair and Mark Jerrum, “Approximate counting,

uniform generation and rapidly mixing markov chains”,

Information and Computation, 82:93-133 (1989)

[5] Brian D. Ripley, “Stochastic Simulation”, John Wiley &

Sons, 1987

[6] Carl Sechen and Alberto Sangiovanni-Vincentelli, “The

TimberWolf placement and routing package”, IEEE

Journal of Solid-State Circuits, vol. sc-20, No. 2, April

1985

[7] Wern-Jieh Sun and Carl Sechen, “Efficient and effective

placement for very large circuits”, IEEE Transactions on

Computer-Aided Design of Integrated Circuits and

Systems, vol.14, No.3, March 1995

[8] William Swartz, et al. “Timing driven placement for large

standard cell circuits”, Proceedings of the 32nd Design

Automation Conference, pp.211-215, 1995

[9] D. F. Wong, et al. “Simulated annealing for VLSI design”,

Kluwer Academic Publishers, 1988

[10] Bradley S. Peters, Lixin Su and Richard Newton,

“Improvement of stochastic optimization through the use

of learning”, UC-Berkeley SRC Review, October 1995

[11] L. G. Valiant, “A theory of the learnable”,

Communications of the ACM, vol.27, November 1984

Page 6

[12] G. A. F. Seber, “Linear Regression Analysis”, John Wiley

& Sons, 1977

[13] Kai-Fu Lee and Sanjoy Mahajan, “A pattern classification

approach to evaluation function learning”, Artificial

Intelligence, 36 (1988) 1-25

[14] David Aldous and Umesh Vazirani, “Go with the winners

algorithms”, Proceedings. 35th Annual Symposium on

Foundations of Computer Science, pp.492-501, 1994

[15] M. Hannan, P. K. Wolff and B. Agule,

“Some

experimental results on placement technique”, Proc. 12th

Design Automation Conference, 1976, pp. 214-244

[16] N. Metropolis, et al. “Equations of state calculations by

fast computing machines”, J. Chem. Phys. 21(1953), 108792

[17] J. W. Greene and K. J. Supowit, “Simulated annealing

without rejected moves”, International Conference on

Computer Design, 1984, 658-63

[18] T. P. Moore and Aart J. de Geus, “Simulated annealing

controlled by a rule-based expert system”, International

Conference on Computer Aided Design, 1985, 200-202

[19] S. Mallela, et al. “Clustering based simulated annealing

for standard cell placement”, 25th ACM/IEEE Design

Automation Conference, 1988, 312-317

[20] E. H. L. Aarts and P. J. M. van Laarhoven, “A new

polynomial-time cooling schedule”, International

Conference on Computer Aided Design, 1985, 206-208

[21] S. James Press, “Bayesian statistics: principles, models,

and applications”, New York: Wiley, c1989

[22] M. D. Huang, F. Romeo and A. Sangiovanni-Vincentelli,

“An efficient general cooling schedule for simulated

annealing”, International Conference on Computer Aided

Design, 1986, 381-384

[23] Jimmy Lam, Jean-Marc Delosme, “Performance of a new

annealing schedule”, 25th ACM/IEEE Design Automation

Conference, 1988, 306-311

[24] Vladimir N. Vapnik, “The nature of statistical learning

theory”, Springer, 1995

[25] http://www-cad.eecs.berkeley.edu/~lixinsu

[26] http://www.cbl.ncsu.edu/benchmarks