Solutions

advertisement

CS 151, Written Homework, Set 3, Solutions

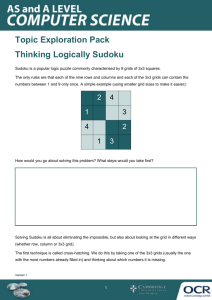

1. This problem returns to the Sudoku game we considered in week 2. In this problem

we’re going to try to formalize the Sudoku game as a Constraint Satisfaction Problem

(CSP).

1. What is the set of variables, and what is the domain of possible values for each?

There is one variable for each square (empty or full). The domain of each variable is

{1, …, 9}. You could also formulate the problem as one variable per empty square

only and eliminate the unary constraints below, and slightly modify the other

constraints to account for the filled in values.

2. List all of the constraints for the problem, including the ones provided by the

starting board. How many variables are involved in each constraint?

There are 4 classes of constraints. One set of constraints ensures that variables in

each row all take a different value. Another set ensures the same for columns and

another set for squares. In each of the previous constraints, 9 variables are involved.

Finally, there is a set of unary constraints that ensures that the initially full squares

take on their original values (i.e., we can’t change the values on the starting board).

3. We said in class that the order in which you consider variables can have a big

impact on the performance of basic backtracking search in CSPs. Which variable

ordering would you expect to work better, the degree heuristic, or the minimum

remaining values heuristic? Why?

The remaining values heuristic. In general this heuristic works better for CSPs. Also,

in Sudoku, the number of remaining values for some variable decreases very quickly.

4. An interesting recent finding about the 3-SAT problem is that the compute time

required to solve a particular problem instance is correlated with the number of

solutions that exist (problems with lots of solutions or exactly one solution are

easy, but it’s the ones in the middle that are hard). However, every Sudoku

problem has exactly one solution. What, then, is the difference between “easy”

and “hard” Sudoku problems?

The difference is that with easy problems, the constraints limit the values of variables

very quickly, making it efficient to find the right assignment because large portions of

the search space can be avoided. In very easy problems, running arc consistency will

yield only one consistent value for each variable. In contrast, “hard” problems do not

limit the values of variables, forcing the algorithm to explore more of the search

space before finding the solution.

2. Another technique that might work well in solving the Sudoku game is local search.

Please design a local search algorithm that is likely to solve Sudoku quickly, and

write it in psuedocode (in C style or in Russell&Norvig style). You may want to look

at theWalkSAT algorithm for inspiration. Do you think it will work better or worse

than the best incremental search algorithm on easy problems? On hard problems?

Why?

function solveSudoku( SudokuBoard game, int numIter)

foreach unbound var in game, assign randChoice([1…9])

for i=0 to numIter:

if checkConstraints(game) return game

choose a variable, v, that violates a constraint

v = randChoice([1…9]) (ensuring v gets a new value)

This algorithm will probably work better than incremental search on hard problems, but

not easy problems because on easy problems, incremental search will direct itself quickly

toward the correct solution, while the random search will needlessly explore fruitless

assignments of values to variables. On the other hand, on hard problems, this approach

might stumble on the right solution pretty quickly, while local search will probably take a

very long time to find it.

3. We presented simulated annealing in lecture as a local search algorithm which uses

randomness to avoid getting stuck in local maxima and plateaus.

1. For what types of problems will greedy local search (hill climbing) work better

than simulated annealing? In other words, when is the random part of simulated

annealing not necessary?

For problems with no local maxima in the cost function, greedy search will work

better.

2. For what types of problems will randomly guessing the state work just as well as

simulated annealing? In other words, when is the hill-climbing part of simulated

annealing not necessary?

For problems where the cost function has no real structure—i.e., the area around the

global maximum looks like any other area of the function, so you would have no idea

that you are even getting close to the solution, nor would the shape of the function

necessarily push you in the right direction to find it.

3. Reasoning from your answers to parts (b) and (c) above, for what types of

problems is simulated annealing a useful technique? What assumptions about the

shape of the value function are implicit in the design of simulated annealing?

Problems with a cost function with an overall global structure, but with some local

maxima.