Rapovy MS Paper - Old Dominion University

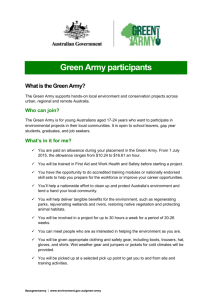
