RELIABILITY


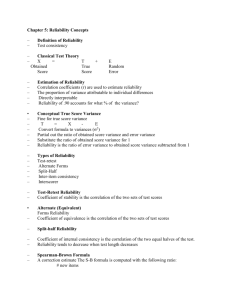

Course: Writing
Professor: Dr. Ahmadi
Student:
Date: 24.10.2012

Books on testing define reliability in several ways. Grant Henning (A Guide to Language Testing, p. 73) describes reliability as a measure of the accuracy, consistency, dependability, or fairness of the scores resulting from the administration of a particular examination. Caroline V. Gipps (Beyond Testing, p. 67) states that reliability is concerned with the accuracy with which a test measures the skill or attainment it is designed to measure. Following these definitions, reliability can be defined as a quality of test scores that refers to the consistency of measurement. A reliable test may not be valid, but a valid test is reliable.

Bachman (1990, p. 161) explains that the concerns of reliability and validity lead to two complementary objectives in designing and developing tests: 1) to minimize the effects of measurement error, and 2) to maximize the effects of the language abilities we want to measure. Investigating reliability involves both logical analysis and empirical research: we identify sources of error and estimate the magnitude of their effects on test scores. Three measurement theories, a) classical true score measurement theory, b) generalizability theory, and c) item response theory, provide powerful tools for test development and interpretation and for estimating reliability.

a) Classical true score measurement theory

According to Bachman (1990, p. 166), the distinction between unobservable abilities and observed test scores is essential in investigating reliability. Classical true score (CTS) theory consists of a set of assumptions about the relationship between actual, or observed, test scores and the factors that affect them. One assumption is that an observed score comprises two components: 1) a true score, which is due to an individual's level of ability, and 2) an error score, which is due to factors other than the ability being tested.
This assumption can be represented as follows:

X = x_t + x_e

where X is the observed score, x_t the true score, and x_e the error score. Another assumption concerns the relationship between true and error scores: error scores are unsystematic, or random, and are uncorrelated with true scores. The CTS model therefore recognizes two sources of variance in a set of test scores: true score variance, which is due to differences in the ability of the individuals tested, and measurement error, which is unsystematic, or random.

Parallel tests

Parallel tests are a central concept of CTS theory (Bachman, 1990, p. 168). In classical measurement theory, parallel tests are defined as two tests of the same ability that have the same means and variances and are equally correlated with other tests of that ability.

Reliability in CTS theory is defined as the proportion of observed score variance that is true score variance. Reliability refers to the scores, not to the test itself. Bachman (1990, p. 172) describes three approaches to estimating reliability in the CTS model: a) internal consistency estimates, which are concerned primarily with sources of error from within the test and scoring procedures; b) stability estimates, which indicate how consistent test scores are over time; and c) equivalence estimates, which indicate the extent to which scores on alternate forms of a test are equivalent. The estimates that these approaches yield are called reliability coefficients.

a) Internal consistency reliability

There are several approaches to examining the internal consistency of a test. These include the split-half method, for which the Spearman-Brown split-half estimate and the Guttman split-half estimate are available.

Split-half method: in this method the test is divided into two halves, and we then determine the extent to which scores on the two halves are consistent with each other. The halves are treated as parallel tests and are assumed to be independent of each other. Without this independence, a correlation between the halves could be interpreted in two ways: 1) both halves measure the same trait, or 2) individuals' performance on one half depends on how they perform on the other.
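The split-half procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the item scores are invented, the test is split into odd- and even-numbered items (one possible way of forming halves, not one the sources prescribe), and the Spearman-Brown and Guttman estimates named above are written in their commonly cited textbook forms rather than copied from Bachman.

```python
from statistics import mean, pvariance

def pearson(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5

def odd_even_halves(item_scores):
    """Return each test taker's score on the odd-numbered and even-numbered items."""
    odd = [sum(row[0::2]) for row in item_scores]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in item_scores]  # items 2, 4, 6, ...
    return odd, even

def spearman_brown(r_half):
    """Step the half-test correlation up to the length of the whole test."""
    return 2 * r_half / (1 + r_half)

def guttman_split_half(half_a, half_b):
    """Guttman estimate: uses half and whole-test variances, no correlation needed."""
    total = [a + b for a, b in zip(half_a, half_b)]
    return 2 * (1 - (pvariance(half_a) + pvariance(half_b)) / pvariance(total))

# Hypothetical data: 6 test takers (rows) by 6 items (columns), 1 = correct, 0 = wrong.
item_scores = [
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 0, 1, 1, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [0, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
]

odd, even = odd_even_halves(item_scores)
r_half = pearson(odd, even)
r_sb = spearman_brown(r_half)
r_guttman = guttman_split_half(odd, even)

print(f"half-test correlation:   {r_half:.3f}")
print(f"Spearman-Brown estimate: {r_sb:.3f}")
print(f"Guttman estimate:        {r_guttman:.3f}")
```

With real data the two estimates agree only when the halves have equal variances; a gap between them is a hint that the halves are not behaving as parallel tests.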
However, a problem with this method is that most language tests are designed as 'power tests', with the easiest questions at the beginning and more difficult questions later, so a simple division into first and second halves would not satisfy the assumption of equivalence.

The Spearman-Brown split-half estimate: in this approach we compute the correlation between the two sets of half-test scores and then adjust it to the length of the whole test. Two assumptions must be met to use this method: 1) the two halves, as parallel tests, have equal means and variances, and 2) the two halves are independent of each other (Bachman, 1990, p. 175, no. 10).

The Guttman split-half estimate: this method, developed by Guttman (1945), does not assume equivalence of the halves and does not require computing a correlation between them. The estimate is based on the ratio of the sum of the variances of the two halves to the variance of the whole test (Bachman, 1990, p. 175, no. 11).

Reliability estimates based on item variances

There are many different ways to split a test into halves, and different splits can give different reliability estimates. An approach based instead on the statistical characteristics of the test items was developed by Kuder and Richardson (1937); it involves computing the means and variances of the items that constitute the test. Cronbach (1951) developed a general formula for estimating internal consistency, which is called 'coefficient alpha' or 'Cronbach's alpha'.

Rater consistency

In test scores that are obtained subjectively, such as ratings of compositions or oral interviews, one source of error is inconsistency in these ratings (Bachman, 1990, p. 178). If there is a single rater, we need to be concerned about consistency within that individual's ratings, that is, with intra-rater reliability.

Inter-rater reliability: inter-rater (or inter-observer) reliability is an important consideration in the social sciences because there are many conditions for which the best means of measurement is the report of trained observers.
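Coefficient alpha, mentioned above, can be sketched as follows. The function takes a matrix with one row per person and one column per 'part' of the measure. Here the parts are taken to be raters, which is one common way of gauging consistency across raters but is an assumption of this sketch, not a procedure taken from the sources; the ratings are invented for illustration. The same function could be applied to a matrix of item scores.

```python
from statistics import variance

def cronbach_alpha(score_matrix):
    """Coefficient alpha for a matrix of scores.

    score_matrix: list of rows, one row per person; each column is one
    'part' of the measure (an item, or in this sketch a rater).
    """
    k = len(score_matrix[0])                  # number of parts
    parts = list(zip(*score_matrix))          # transpose: one tuple per part
    sum_part_vars = sum(variance(p) for p in parts)
    total_var = variance([sum(row) for row in score_matrix])
    return k / (k - 1) * (1 - sum_part_vars / total_var)

# Hypothetical ratings: 5 compositions (rows) scored 1-5 by 3 raters (columns).
ratings = [
    [4, 5, 4],
    [2, 3, 3],
    [5, 5, 4],
    [3, 3, 2],
    [1, 2, 2],
]

alpha_raters = cronbach_alpha(ratings)
print(f"coefficient alpha across raters: {alpha_raters:.3f}")
```

Because alpha depends only on the ratio of the summed part variances to the total-score variance, it does not matter whether sample or population variances are used, as long as the same kind is used for both.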
Some activities, such as gymnastics, can only be assessed through the ratings of expert judges. In particular, the reports of two or more observers become more acceptable when their observations are similar. The measure of the similarity of observations coming from two or more sources is the inter-rater reliability (www.education.com).

b) Stability estimate

The second approach in CTS is stability. Here reliability is estimated by giving the test more than once to the same group of individuals. This approach is also called 'test-retest' and provides an estimate of the stability of test scores over time. Two sources of inconsistency are differential practice effects and differential changes in ability. Differential practice effects arise when the test is given twice with little time in between; differential changes in ability arise when the test is given twice with a long time in between.

c) Equivalence estimate

According to Heaton (1988, p. 163), another way to estimate reliability is to administer parallel forms of the test to the same group. This assumes that two similar versions of a particular test can be constructed; such tests must be identical in the nature of their sampling, difficulty, length, rubrics, etc. If the results derived from the two tests correspond closely to each other, the test can be considered reliable.

Problems with the CTS model

As Bachman (1990, p. 186) notes, one problem with the CTS model is that it treats error variance as homogeneous in origin. The other is that it considers all error to be random and consequently fails to distinguish systematic error from random error.

b) Generalizability theory

Another framework for estimating reliability is generalizability theory (G-theory) (Bachman, 1990, p. 187). This theory was developed by Cronbach and his colleagues.
In this framework reliability is a matter of generalizability, and the extent to which we can generalize from a given score is a function of how we define the universe of measures. The application of G-theory to test development and use takes place in two stages. In the first stage, considering the uses that will be made of the test scores, the test developer designs and conducts a study to investigate the sources of variance that are of concern or interest. In this generalizability study (G-study), the test developer obtains estimates of the relative sizes of the different sources of variance. The second stage is a decision study (D-study). In a D-study the test developer administers the test under operational conditions, that is, under the conditions in which the test will be used to make the decisions for which it is designed, and uses G-theory procedures to estimate the magnitude of the variance components.

The application of G-theory enables test developers and test users to specify the different sources of variance that are of concern for a given test use and to estimate the relative importance of these sources simultaneously. The CTS model is a special case of G-theory in which there are only two sources of variance: a single ability and a single source of error.

c) Item response theory

Another framework for estimating reliability is item response theory (Bachman, 1990, p. 202).

Factors that affect reliability estimates

There are general characteristics of tests and test scores that influence estimates of reliability (Bachman, 1990, p. 220). Understanding these factors helps us to determine which reliability estimates are appropriate for a given set of test scores and to interpret their meaning. These factors include: a) length of test, b) difficulty of test and score variance, c) cut-off score, d) systematic measurement error, e) the effect of systematic measurement error, f) the effect of test method.

Conclusion

Reliability is the consistency of measurement; it is a quality of the test scores rather than of the test itself.
Reliability can be estimated within three frameworks: 1. classical true score measurement theory, 2. generalizability theory, 3. item response theory. There are three approaches to estimating reliability in CTS: a) internal consistency, which draws on parallel tests, split-half reliability estimates, the Spearman-Brown split-half estimate, the Guttman split-half estimate, the Kuder-Richardson reliability coefficients, coefficient alpha, and rater consistency (intra-rater and inter-rater reliability); b) stability estimates; c) equivalence estimates. There are also two problems with the CTS model in estimating reliability. Finally, several factors affect reliability estimates; understanding them helps us to determine which reliability estimates are appropriate for a given set of test scores.

REFERENCES

Bachman, L. F. (1990) Fundamental Considerations in Language Testing. Oxford: Oxford University Press.
Brown, H. D. (2004) Language Assessment: Principles and Classroom Practices. Longman.
Gipps, C. V. (1994) Beyond Testing: Towards a Theory of Educational Assessment. London and Washington, D.C.: The Falmer Press (a member of the Taylor & Francis Group).
Heaton, J. B. (1988) Writing English Language Tests. London and New York: Longman.
Henning, G. (2001) A Guide to Language Testing: Development, Evaluation and Research. Foreign Language Teaching and Research Press.