Advanced Compiler Design and Implementation


Mooly Sagiv

Course notes

Case Studies of Compilers and Future Trends

(Chapter 21)

By Ronny Morad & Shachar Rubinstein

24/6/2001

Case Studies

This chapter deals with real-life examples of compilers. For each compiler this scribe

will discuss three subjects:

A brief history of the compiler.

The structure of the compiler with emphasis on the back end.

Optimization performed on two programs. It must be noted that this

test can’t be used to measure and compare the performance of the

compilers.

The compilers examined are the following:

SUN compilers for SPARC 8, 9

IBM XL compilers for Power and PowerPC architectures. The Power

and PowerPC are classes of architectures. The processors are sold in

different configurations.

Digital compiler for Alpha. The Alpha processor was bought by Intel.

Intel reference compiler for 386

Historically, compilers were built for specific processors. Today, it is not so obvious.

Companies use other developers’ compilers. For example, Intel uses IBM’s compiler

for the Pentium processor.

The compilers will compile two programs: a C program and a Fortran 77 program.

The C program

int length, width, radius;
enum figure {RECTANGLE, CIRCLE};

main()
{
    int area = 0, volume = 0, height;
    enum figure kind = RECTANGLE;

    for (height = 0; height < 10; height++)
    {
        if (kind == RECTANGLE) {
            area += length * width;
            volume += length * width * height;
        }
        else if (kind == CIRCLE) {
            area += 3.14 * radius * radius;
            volume += 3.14 * radius * height;
        }
    }
    process(area, volume);
}

Possible optimizations:

1. The value of ‘kind’ is constant and equal to ‘RECTANGLE’. Therefore the second branch (the ‘else’ part) is dead code, and the first ‘if’ test is redundant as well.

2. ‘length * width’ is loop invariant and can be calculated before the loop.

3. Because ‘length * width’ is loop invariant, area can be calculated

simply by using a single multiplication. Specifically, 10 * length *

width.

4. The calculation of ‘volume’ in the loop can be done using addition

instead of multiplication.

5. The call to ‘process()’ is a tail call. This fact can be used to avoid the need to create a stack frame.

6. Compilers will probably use loop unrolling to increase pipeline

utilization.

Note: Without the call to ‘process()’, all the code is dead, because ‘area’ and ‘volume’

aren’t used.
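As a rough illustration of optimizations 1 to 4 above, the loop can be rewritten by hand as shown here. This is only a sketch of what the transformations amount to at source level, not the output of any of the compilers discussed. The function names area_volume_original and area_volume_optimized are made up for the example, and ‘length’ and ‘width’ are passed as parameters instead of being globals.

```c
/* The loop from the case study once 'kind' is known to be RECTANGLE
   (optimization 1 has already removed the 'else' branch). */
void area_volume_original(int length, int width, int *area, int *volume)
{
    int a = 0, v = 0, height;
    for (height = 0; height < 10; height++) {
        a += length * width;
        v += length * width * height;
    }
    *area = a;
    *volume = v;
}

/* Hand-applied versions of optimizations 2, 3 and 4. */
void area_volume_optimized(int length, int width, int *area, int *volume)
{
    int lw = length * width;  /* (2) loop-invariant code motion */
    int term = 0;             /* (4) always equals lw * height */
    int v = 0, height;
    for (height = 0; height < 10; height++) {
        v += term;            /* addition replaces lw * height */
        term += lw;
    }
    *area = 10 * lw;          /* (3) one multiplication replaces 10 additions */
    *volume = v;
}
```

Both functions compute the same results for inputs that do not overflow an int; the second one performs no multiplication inside the loop.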

The Fortran 77 program:

      integer a(500, 500), k, l
      do 20 k = 1, 500
         do 20 l = 1, 500
            a(k, l) = k + l
20    continue
      call s1(a, 500)
      end

      subroutine s1(a, n)
      integer a(500, 500), n
      do 100 i = 1, n
         do 100 j = i + 1, n
            do 100 k = 1, n
               l = a(k, i)
               m = a(k, j)
               a(k, j) = l + m
100   continue
      end

Possible optimizations:

1. The address of a(k,j) is calculated twice. This can be prevented by using common-subexpression elimination.

2. The call to ‘s1’ is a tail-call. Because the compiler has the source of

‘s1’, it can be inlined at the main procedure. This can be used to further

optimize the resulting code. Most compilers will leave the original

copy of ‘s1’ intact.

3. If the procedure is not inlined, interprocedural constant propagation can be used to find out that ‘n’ is a constant equal to 500.

4. The address used to access ‘a’ is calculated using multiplication. This can be avoided by using addition instead. The compiler “knows” how the array is laid out in memory. For example, in Fortran, arrays are stored in column-major order, so the compiler can add a fixed number of bytes to the address on each iteration instead of recalculating it.

5. After 4, the counters aren’t needed and the conditions in the loops can be replaced by tests on the address. This is done using linear-function test replacement.

6. Again, loop unrolling will be used according to the architecture.
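The address strength reduction and the linear-function test replacement described for the innermost loop of ‘s1’ can be sketched in C as follows. This is an illustration under stated assumptions, not compiler output: the array is modelled as a flat buffer in column-major order, i and j are 1-based column numbers as in the Fortran source, and the function names add_column_naive and add_column_reduced are invented for the example.

```c
#include <stddef.h>

/* Naive form: every access recomputes (column - 1) * n + (k - 1),
   which costs a multiplication per access. */
void add_column_naive(int *a, int n, int i, int j)
{
    int k;
    for (k = 1; k <= n; k++)
        a[(j - 1) * n + (k - 1)] += a[(i - 1) * n + (k - 1)];
}

/* Reduced form: the addresses advance by one element per iteration
   (strength reduction), and the loop test compares an address with a
   precomputed end address instead of testing the counter k
   (linear-function test replacement), so k disappears entirely. */
void add_column_reduced(int *a, int n, int i, int j)
{
    int *src = a + (size_t)(i - 1) * n;  /* address of a(1, i) */
    int *dst = a + (size_t)(j - 1) * n;  /* address of a(1, j) */
    int *end = dst + n;                  /* one past a(n, j) */
    for (; dst != end; dst++, src++)
        *dst += *src;
}
```

Because consecutive values of k are adjacent in memory in column-major order, the per-iteration increment is a single element.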

Sun SPARC

The SPARC architecture

SPARC has two major versions of the architecture, Version 8 and Version 9.

The SPARC 8 has the following features:

32-bit RISC superscalar system with pipelining.

Integer and floating-point units.

The integer unit has a set of 32-bit general registers and executes load, store, arithmetic, logical, shift, branch, call and system-control instructions. It also computes addresses (register + register or register + displacement).

The floating-point unit has 32 32-bit floating-point data registers and implements the ANSI/IEEE floating-point standard.

There are 8 global general-purpose integer registers (from the integer unit). The first has a constant value of zero (r0=0).

Three-address instructions of the following form: Instruction Src1,Src2,Result.

Several register windows of 24 registers each (spilled by the OS). They are used to reduce the cost of procedure calls. When there aren’t enough registers, the processor raises an interrupt and the OS saves the registers to memory and refills them with the necessary values.

SPARC 9 is a 64-bit version, fully upward-compatible with Version 8.

The assembly language guide is on pages 748-749 of the course book, tables A.1, A.2,

A.3.

The SPARC compilers

General

Sun SPARC compilers originated from the Berkeley 4.2 BSD UNIX software

distribution and have been developed at Sun since 1982. The original back end was

for the Motorola 68010 and was migrated successively to later members of the

M68000 family and then to SPARC. Work on global optimization began in 1984 and

on interprocedural optimization and parallelization in 1989. The optimizer is

organized as a mixed model. Today Sun provides front-ends, and thus compilers, for

C, C++, Fortran 77 and Pascal.

The structure

Front end -> Sun IR, followed by one of two paths:

Without optimization: yabe -> relocatable

With optimization: automatic inliner -> aliaser -> iropt (global optimization) -> Sun IR -> code generator -> relocatable

The four compilers (C, C++, Fortran 77 and Pascal) share the same back end. The front ends produce Sun IR, an intermediate representation discussed later. The back end consists of two parts:

Yabe (“Yet Another Back-End”). Creates relocatable code without optimization.

An optimizer.

The optimizer is divided into the following parts:

The automatic inliner. This part works only at optimization level O4 (discussed later). It replaces some calls to routines within the same compilation unit with inline copies of the routines’ bodies. Next, tail-recursion elimination is performed and other tail calls are marked for the code generator to optimize.

The aliaser. The aliaser uses information provided by the language-specific front end to determine which sets of variables may, at some point in the procedure, map to the same memory location. How aggressive the aliaser is depends on the optimization level. Aliasing information is attached to each triple that requires it, for use by the global optimizer.

IRopt, the global optimizer

The code generator.

The Sun IR

The Sun IR represents a program as a linked list of triples representing executable

operations and several tables representing declarative information. For example:

ENTRY “s1_” {IS_EXT_ENTRY, ENTRY_IS_GLOBAL}
        GOTO LAB_32;
LAB_32: LTEMP.1 = (.n {ACCESS V41});
        i = 1;
        CBRANCH (i <= LTEMP.1, 1: LAB_36, 0: LAB_35);
LAB_36: LTEMP.2 = (.n {ACCESS V41});
        j = i + 1;
        CBRANCH (j <= LTEMP.2, 1: LAB_41, 0: LAB_40);
LAB_41: LTEMP.3 = (.n {ACCESS V41});
        k = 1;
        CBRANCH (k <= LTEMP.3, 1: LAB_46, 0: LAB_45);
LAB_46: l = (.a[k, i] {ACCESS V20});
        m = (.a[k, j] {ACCESS V20});
        *(a[k, j]) = l + m {ACCESS V20, INT};
LAB_34: k = k + 1;
        CBRANCH (k > LTEMP.3, 1: LAB_45, 0: LAB_46);
LAB_45: j = j + 1;
        CBRANCH (j > LTEMP.2, 1: LAB_40, 0: LAB_41);
LAB_40: i = i + 1;
        CBRANCH (i > LTEMP.1, 1: LAB_35, 0: LAB_36);
LAB_35:

The CBRANCH is a general conditional branch that is not tied to the architecture. It provides two targets, the first taken when the expression is true and the second when it is false.

This IR is somewhere between LIR and MIR. It isn’t LIR because there are no registers. It isn’t MIR because memory is accessed through the compiler’s own memory organization, as in the use of LTEMP.
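To make the idea of “a linked list of triples” more concrete, here is one way such a list could be declared in C. Everything in this sketch is hypothetical: the type names, the field names and the set of operation kinds are invented for illustration and are not taken from the actual Sun IR definition.

```c
#include <stddef.h>

/* Hypothetical operation kinds; the real Sun IR has its own, richer set. */
enum triple_op { T_ASSIGN, T_ADD, T_LOAD, T_STORE, T_CBRANCH, T_LABEL };

/* One executable operation: an operator, up to two operands, and a
   link to the next triple of the procedure. */
struct triple {
    enum triple_op op;
    const char *operand1;     /* e.g. "k", or a condition for CBRANCH */
    const char *operand2;     /* NULL when only one operand is needed */
    const char *true_label;   /* CBRANCH only: target if condition holds */
    const char *false_label;  /* CBRANCH only: target otherwise */
    struct triple *next;
};

/* Walk the list and count the conditional branches in it. */
size_t count_cbranches(const struct triple *t)
{
    size_t n = 0;
    for (; t != NULL; t = t->next)
        if (t->op == T_CBRANCH)
            n++;
    return n;
}
```

An optimizer pass over such a representation is essentially a traversal like count_cbranches that inspects or rewrites individual triples.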

Optimization levels

There are four optimization levels:

O1

Limited optimizations. This level invokes only certain optimization components of the code generator.

O2

This and higher levels invoke both the global optimizer and the optimizer components of the code generator. At this level, expressions that involve global or equivalenced variables, aliased local variables, or volatile variables are not candidates for optimization. Automatic inlining, software pipelining, loop unrolling, and the early phase of instruction scheduling are not done.

O3

This level optimizes expressions that involve global variables but makes worst-case assumptions about potential aliases caused by pointers, and omits early instruction scheduling and automatic inlining. This level gives the best results.

O4

This level aggressively tracks what pointers may point to, making worst-case assumptions only where necessary. It depends on the language-specific front ends to identify potentially aliased variables, pointer variables, and a worst-case set of potential aliases. It also does automatic inlining and early instruction scheduling. This level turned out to be very problematic because of bugs in the front ends.

The global optimizer

The optimizer input is Sun IR and the output is Sun IR.

The global optimizer performs the following on that input:

Control-flow analysis is done by identifying dominators and back

edges, except that the parallelizer does structural analysis for its own

purposes.

The parallelizer searches for instructions the processor can execute in parallel. In practice, it rarely improves execution time (the Alpha processor is where it has an effect, if any). Most of the time its role is simply to avoid interfering with the processor’s own parallelism.

The global optimizer processes each procedure separately, using basic

blocks. It first computes additional control-flow information. In

particular, loops are identified at this point, including both explicit

loops (for example, ‘do’ loops in Fortran 77) and implicit ones

constructed from ‘if’s and ‘goto’s.

Then a series of data-flow analyses and transformations is applied to the procedure. All data-flow analysis is done iteratively. Each transformation phase first computes (or recomputes) data-flow information if needed. The transformations are performed in this order:

1. Scalar replacement of aggregates and expansion of Fortran

arithmetic on complex numbers to sequences of real-arithmetic

operations.

2. Dependence-based analysis and transformations (levels O3 and O4 only, as described below).

3. Linearization of array addresses.

4. Algebraic simplification and reassociation of address

expressions.

5. Loop invariant code motion.

6. Strength reduction and induction variable removal.

7. Global common-subexpression elimination.

8. Global copy and constant propagation.

9. Dead-code elimination

The dependence-based analysis and transformation phase is designed to support parallelization and data-cache optimization and may be done (under control of a separate option) when the optimization level selected is O3 or O4. The steps comprising it (in order) are as follows:

1. Constant propagation

2. Dead-code elimination

3. Structural control flow analysis

4. Loop discovery (including determining the index variables,

lower and upper bounds and increment).

5. Segregation of loops that have calls and early exits in their

bodies.

6. Dependence analysis using GCD and Banerjee-Wolfe tests,

producing direction vectors and loop-carried scalar du- and

ud-chains.

7. Loop distribution.

8. Loop interchange.

9. Loop fusion.

10. Scalar replacement of array elements.

11. Recognition of reductions.

12. Data-cache tiling.

13. Profitability analysis for parallel code generation.
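Step 6 above mentions the GCD test. A minimal sketch of the idea, assuming one-dimensional subscripts of the linear form c*i + k, is given below. Two references a[c1*i + k1] and a[c2*j + k2] can touch the same element only if c1*i - c2*j = k2 - k1 has an integer solution, which is the case exactly when gcd(c1, c2) divides k2 - k1. The function names are invented for this example, and the sketch ignores loop bounds and direction vectors, so a “may depend” answer is only conservative.

```c
/* Greatest common divisor of |a| and |b|; gcd_int(0, 0) is 0. */
int gcd_int(int a, int b)
{
    if (a < 0) a = -a;
    if (b < 0) b = -b;
    while (b != 0) {
        int t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* GCD test for the references a[c1*i + k1] and a[c2*j + k2].
   Returns 0 when a dependence is ruled out, 1 when it may exist. */
int gcd_test_may_depend(int c1, int k1, int c2, int k2)
{
    int g = gcd_int(c1, c2);
    if (g == 0)                 /* both subscripts are constants */
        return k1 == k2;
    return (k2 - k1) % g == 0;
}
```

For example, a[2*i] and a[2*i + 1] can never overlap because 2 does not divide 1, so a loop that writes one and reads the other carries no dependence between them.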

The code generator

After global optimization has been completed, the code generator first translates its Sun IR input to a representation called ‘asm+’ that consists of assembly-language instructions and structures that represent control-flow and data-dependence information. An example is available on page 712. The code generator then performs a series of phases, in the following order:

1. Instruction selection.

2. Inlining of assembly-language templates whose computational impact is understood (O2 and above).

3. Local optimizations, including dead-code elimination, straightening, branch chaining, moving ‘sethi’ instructions out of loops, replacement of branching code sequences by branchless machine idioms, and commoning of condition-code setting (O2 and above).

4. Macro expansion, phase 1 (expansion of switches and a few other constructs).

5. Data-flow analysis of live variables (O2 and above).

6. Software pipelining and loop unrolling (O3 and above).

7. Early instruction scheduling (O4 only).

8. Register allocation by graph coloring (O2 and above).

9. Stack frame layout.

10. Macro expansion, phase 2 (expansion of memory-to-memory moves, max, min, comparison of value, entry, exit, etc.). Entry expansion includes accommodating leaf routines and generation of position-independent code.

11. Delay-slot filling.

12. Late instruction scheduling.

13. Inlining of assembly-language templates whose computational impact is not understood (O2 and above).

14. Macro expansion, phase 3 (to simplify code emission).

15. Emission of relocatable object code.

The Sun compiling system provides for both static and dynamic linking. The selection

is done by a link-time option.

Compilation results

The assembly code for the C program appears in the book on page 714. The code was compiled using O4 optimization.

The assembly code for the Fortran 77 program appears in the book on pages 715-716. The code was compiled using O4 optimization.

The numbers in parentheses are according to the numbering of possible optimizations

for each program.

Optimizations performed on the C program

(1) The unreachable code in ‘else’ was removed, except for π, which is

still loaded from .L_const_seg_900000101 and stored at %fp-8.

(2) The loop invariant ‘length * width’ has been removed from the

loop (‘smul %o0,%o1,%o0’).

(4) Strength reduction of “height”. Instead of multiplying by ‘height’,

addition of previous value is used.

(6) Loop unrolling by factor of four. (‘cmp %lo,3’)

Local variables in registers.

All computations in registers.

(5) Identifying tail call and optimizing it by eliminating the stack

frame.
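Loop unrolling by a factor of four, combined with the strength reduction of ‘height’, can be pictured at source level as follows. This is a hand-written sketch and not a transcription of the SPARC code in the book; the function names and the parameter lw (standing for the hoisted length * width) are assumptions made for the example.

```c
/* Rolled loop: one multiplication per iteration. */
int volume_rolled(int lw, int n)
{
    int v = 0, h;
    for (h = 0; h < n; h++)
        v += lw * h;
    return v;
}

/* Unrolled by four, with lw * h replaced by a running sum. The second
   loop handles the iterations left over when n is not a multiple of 4. */
int volume_unrolled4(int lw, int n)
{
    int v = 0, term = 0, h = 0;
    for (; h + 3 < n; h += 4) {
        v += term; term += lw;
        v += term; term += lw;
        v += term; term += lw;
        v += term; term += lw;
    }
    for (; h < n; h++) {
        v += term;
        term += lw;
    }
    return v;
}
```

The unrolled body executes one loop test and one branch per four additions, which is what gives the pipeline more useful work between branches.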

Missed optimizations on the C program

Removal of computation.

(3) Compute area in one instruction.

Completely unroll the loop. Only the first 8 iterations were unrolled.

Optimizations performed on the Fortran 77 program

(2) Procedure integration of s1. The compiler can make use of the fact

that n=500 to unroll the loop, which it did.

Common subexpression elimination of ‘a[k,j]’

Loop unrolling, from label .L900000112 to .L900000113.

Local variables in registers

Software pipelining. Note, for example, the load just above the starting

label of the loop.

An example of software pipelining:

Consider the following instructions, each of which depends on the previous one:

Load

Add

Store

The ‘add’ can’t start until the ‘load’ has finished, and the ‘store’ can’t start until the ‘add’ has finished. The compiler can improve this code by emitting the following:

Load

*Load

Add

*Store

Store

Here the compiler inserts the instructions marked with *, which are needed later. This way, by the time ‘add’ starts executing, the result of the first load is available. The same holds for ‘store’ and ‘add’ respectively.
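The same idea can be written out at source level for a simple loop that loads, adds and stores. The sketch below is an illustration only: the function names are invented, and it assumes that the arrays a and b do not overlap, since moving a load ahead of an earlier store is not safe otherwise.

```c
/* Straightforward loop: each iteration does load, add, store in order. */
void add_const_plain(int *a, const int *b, int c, int n)
{
    int i;
    for (i = 0; i < n; i++)
        a[i] = b[i] + c;
}

/* Software-pipelined form: the load for iteration i + 1 is issued
   before the add and store of iteration i, so its latency overlaps
   with useful work. */
void add_const_pipelined(int *a, const int *b, int c, int n)
{
    int i, cur, next;
    if (n <= 0)
        return;
    cur = b[0];                  /* prologue: first load */
    for (i = 0; i < n - 1; i++) {
        next = b[i + 1];         /* load belonging to the next iteration */
        a[i] = cur + c;          /* add and store of this iteration */
        cur = next;
    }
    a[n - 1] = cur + c;          /* epilogue: last add and store */
}
```

The load placed before the loop plays the same role as the load that appears just above the loop label in the generated code.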

Missed optimizations on the Fortran 77 program

Eliminating s1. The compiler produced code for ‘s1()’ although the

main routine is the only one calling ‘s1()’.

Eliminating addition in the loop via linear function test replacement.

This would have eliminated one of the additions in the resulting code.

POWER/PowerPC

The POWER/PowerPC architecture

The POWER architecture is an enhanced 32-bit RISC machine with the following

features:

It consists of branch, fixed-point, floating-point and storage-control

processors.

Individual implementations may have multiple processors of each sort, except that the registers are shared among them and there may be only one branch processor in a system. That is, a processor is configurable and may be purchased with different numbers of these units.

The branch processor includes the condition, link and count registers and executes conditional and unconditional branches and calls, system calls, and condition-register move and logical operations.

The fixed-point processor contains 32 32-bit integer general purpose

registers, with register ‘gr0’ delivering the value zero when used as an

operand in an address computation. (gr0=0). It implements loads and

stores, arithmetic, logical, compare, shift, rotate and trap instructions.

It also implements system control instructions. There are two modes of

addressing: register + register or register + displacement, plus the

capability to update the base register with the computed address.

The floating-point processor contains 32 64-bit data registers and implements the ANSI/IEEE floating-point standard for double-precision values only.

The storage-control processor provides for segmented main-storage,

interfaces with caches and translation look-aside buffer and does

virtual address translation.

The instructions typically have three operands, two sources and one result. The order is the opposite of SPARC, first the result and then the operands: Instruction result, src1, src2.

The PowerPC architecture is a nearly upward compatible extension of POWER that

allows for 32- and 64-bit implementations. It isn’t 100% compatible because, for

example, some instructions, which were troublesome corner cases, have been made

invalid.

The assembly language guide is on page 750 of the course book, table A.4.

The IBM XL compilers

General

The compilers for these architectures are known as the XL family. The XL family originated in 1983 as a project to provide compilers for an IBM RISC architecture that was an intermediate stage between the IBM 801 and POWER, but that was never released as a product. It was an academic project. The first compilers created were an optimizing Fortran compiler for the PC RT that was released to a selected few customers and a C compiler for the PC RT used only for internal IBM development. The compilers were created with interchangeable back ends, so today they generate code for POWER, Intel 386, SPARC and PowerPC. The compilers were written in PL.8.

The compilers don’t perform interprocedural optimizations. Almost all optimizations are performed on a proprietary low-level IR called “XIL”. Some optimizations that require a higher-level IR, for example optimizations on arrays, are performed on YIL, a higher-level representation that is created from XIL.

The structure

Translator -> XIL -> Optimizer -> XIL -> Instruction scheduler -> Register allocator -> Instruction scheduler -> XIL -> Instruction selection -> XIL -> Final assembly -> relocatable

Root services (alongside all of the phases above)

Each compiler consists of a front end called a translator, a global optimizer, an instruction scheduler, a register allocator, an instruction selector and a phase called final assembly that produces the relocatable image and assembly-language listings.

The root services module interacts with all phases and serves to make compilers

compatible with multiple operating systems by, for example, holding information

about how to produce listings and error messages.

The translator and XIL

A translator converts the source language to XIL using calls to XIL library routines.

The XIL generation routines do not merely generate instructions. They may perform a

few optimizations, for example, generate a constant in place of an instruction that

would compute the constant. A translator may consist of a front end that translates a

source language to a different IR language, followed by a translator from the other

intermediate form to XIL.

The XIL compilation unit uses the data structures illustrated on page 720. The illustration shows the relationships among the structures. This organization may save memory during compilation, but it makes debugging the compiler more difficult. The data structures are:

A procedure descriptor table that holds information about each

procedure, such as the size of its stack frame and information about

global variables it affects, and a pointer to the representation of its

code.

A procedure list. The code representation of each procedure consists of a procedure list that comprises pointers to the XIL structures that represent instructions. The instructions are quite low level and source-language independent.

Computation table. Each instruction is represented as an entry in this

table. The computation table is an array of variable length records that

represent preorder traversals of the intermediate code for the

instructions.

Symbolic register table. Variables and intermediate results are represented by symbolic registers, each of which has an entry in this table. Each entry points to the computation table entry that defines it.

An example of XIL is on page 721.

TOBEY

The compiler back end (all the phases except the source-to-XIL translator) is named TOBEY, an acronym for TOronto Back End with Yorktown, indicating the heritage of the two groups that created the back end.

The TOBEY optimizer

The optimizer does the following:

YIL is used for storage-related optimization.

o YIL is created by TOBEY from XIL and includes, in addition to the structures in XIL, representations for looping constructs, assignment statements, subscripting operations, and conditional control flow at the level of ‘if’ statements.

o It also represents the code in SSA form.

o The goal is to produce code that is appropriate for

dependence analysis and loop transformations.

o After the analysis and transformations, the YIL is

translated back to XIL.

Alias information is provided by the translator to the optimizer by calls

from the optimizer to front end routines.

Control flow uses basic blocks. It builds the flow graph within a

procedure, uses DFS to construct a search tree and divides it into

intervals.

Data-flow analysis is done by interval analysis, an older method than the dominator-based method for finding loops. The iterative form is used for irreducible intervals.

Optimization is performed on each procedure separately.

The register allocator

TOBEY includes two register allocators:

A “quick and dirty” local allocator, used when optimization is not requested.

A graph-coloring global allocator based on Chaitin’s, but with spilling done in the style of Briggs’s work.
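The general shape of such a graph-coloring allocator can be sketched as below. This is a simplified illustration and not TOBEY’s implementation: it assumes a small interference graph given as an adjacency matrix, has no spill-cost heuristic and no coalescing, and the names MAX_NODES and color_graph are invented. Nodes of degree at least k are pushed optimistically and are marked as spilled only if no color remains for them in the select phase.

```c
#define MAX_NODES 16   /* illustrative limit; n and k must not exceed it */

/* adj[i][j] != 0 means live ranges i and j interfere.
   color[i] receives a register number in [0, k), or -1 if i is spilled.
   Returns the number of spilled nodes. */
int color_graph(int n, int adj[MAX_NODES][MAX_NODES], int k,
                int color[MAX_NODES])
{
    int removed[MAX_NODES] = {0};
    int stack[MAX_NODES];
    int top = 0, spills = 0;
    int i, j, c;

    /* Simplify: remove nodes one at a time, preferring degree < k. */
    while (top < n) {
        int pick = -1, fallback = -1;
        for (i = 0; i < n && pick < 0; i++) {
            int degree = 0;
            if (removed[i])
                continue;
            for (j = 0; j < n; j++)
                if (j != i && !removed[j] && adj[i][j])
                    degree++;
            if (degree < k)
                pick = i;
            else if (fallback < 0)
                fallback = i;
        }
        if (pick < 0)
            pick = fallback;   /* optimistic: push it and hope for a color */
        removed[pick] = 1;
        stack[top++] = pick;
    }

    /* Select: pop nodes, giving each the lowest color its neighbors lack. */
    for (i = 0; i < n; i++)
        color[i] = -1;
    while (top > 0) {
        int node = stack[--top];
        int used[MAX_NODES] = {0};
        for (j = 0; j < n; j++)
            if (j != node && adj[node][j] && color[j] >= 0)
                used[color[j]] = 1;
        for (c = 0; c < k; c++) {
            if (!used[c]) {
                color[node] = c;
                break;
            }
        }
        if (color[node] < 0)
            spills++;          /* no color left: this node would be spilled */
    }
    return spills;
}
```

With two registers, a cycle of four mutually adjacent-in-pairs live ranges is colored without spilling by this scheme even though every node has degree 2, which is the kind of case where deferring the spill decision pays off.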

The instruction scheduler

Performs basic-block and branch scheduling.

Performs global scheduling.

Runs again after register allocation if any spill code has been generated.

The final assembly

The final assembly phase does 2 passes over the XIL:

peephole optimizations – removing compares.

generate relocatable image and listings.

Compilation results

The assembly code for the C program appears in the book on page 724.

The assembly code for the Fortran 77 program appears in the book on pages 724-725.

The numbers in parentheses are according to the numbering of possible optimizations

for each program.

Optimizations performed on the C program

(1) The constant value of kind has been propagated into the conditional

and the dead code eliminated.

(2) The loop invariant length*width has been removed from the loop.

(6) The loop has been unrolled by a factor of two.

The local variables have been allocated to registers.

Instruction scheduling has been performed.

Missed optimizations on the C program

(5) Tail call to process().

(3) Accumulation of area has not been turned into a single multiplication.

Optimizations performed on the Fortran 77 program

(3) find out that n=500.

(1) common sub-expression elimination of a[k,j].

(6) The inner loop has been unrolled by a factor of two.

The local variables have been allocated to registers.

Instruction scheduling has been performed.

Missed optimizations on the Fortran 77 program

(2) The routine s1() has not been inlined.

(5) Eliminating addition in loop via linear function test replacement.

Intel 386

The Intel 386 architecture

The Intel 386 architecture includes the Intel 386 and its successors, the 486, Pentium, Pentium Pro and so on. The architecture is a thoroughly CISC design; however, some implementations use RISC principles such as pipelining and superscalarity.

It has the following characteristics:

There are eight 32-bit integer registers.

Parts of these registers can also be accessed as 16-bit and 8-bit registers.

There are six 16-bit segment registers for computing addresses.

Some registers have dedicated purposes (e.g. pointing to the top of the current stack frame).

There are many addressing modes.

There are eight 80-bit floating-point registers.

The assembly language guide is on pages 752-753 of the course book, tables A.7 and A.8.

The Intel compilers

Intel provides compilers for C, C++, Fortran 77 and Fortran 90 for the 386 architecture family.

The structure of the compilers, which use the mixed model of optimizer organization,

is as follows:

Front end -> IL-1 -> Interprocedural optimizer -> IL-1 + IL-2 -> Memory optimizer -> IL-1 + IL-2 -> Global optimizer -> IL-1 + IL-2 -> Code generator -> Relocatable

The code generator consists of the code selector, the register allocator and the instruction scheduler.

The front end is derived from work done at Multiflow and the Edison Design Group. The front ends produce a medium-level intermediate code called IL-1.

The interprocedural optimizer operates across modules. It performs a series of optimizations that include inlining, procedure cloning, parameter substitution, and interprocedural constant propagation.

The output of the interprocedural optimizer is a lowered version of IL-1, called IL-2,

along with IL-1’s program-structure information; this intermediate form is used for

the remainder of the major components of the compiler, down through input to the

code generator.

The memory optimizer improves the use of memory and caches mainly by performing loop transformations. It first does SSA-based sparse conditional constant propagation and then data-dependence analysis.

The global optimizer does the following optimizations:

constant propagation

dead-code elimination

local common subexpression elimination

copy propagation

partial-redundancy elimination

a second pass of copy propagation

a second pass of dead-code elimination
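Of the optimizations listed, partial-redundancy elimination is probably the least self-explanatory. The following before-and-after pair is a hand-written sketch of the idea, not output of the Intel compiler, and the function names pre_before and pre_after are invented. In the first version ‘a + b’ is computed twice when flag is nonzero; in the second, a computation is inserted on the path that lacked it so that the later one becomes fully redundant and can be replaced by a temporary.

```c
/* Before: a + b is evaluated twice on the path where flag != 0,
   once on the other path. The second evaluation is partially redundant. */
int pre_before(int a, int b, int flag)
{
    int x = 0, y;
    if (flag)
        x = a + b;
    y = a + b;
    return x + y;
}

/* After: a + b is evaluated exactly once on every path. */
int pre_after(int a, int b, int flag)
{
    int x = 0, y, t;
    if (flag) {
        t = a + b;
        x = t;
    } else {
        t = a + b;   /* inserted on the path that did not compute it */
    }
    y = t;           /* the formerly redundant computation is now a copy */
    return x + y;
}
```

The copy into y is the kind of leftover that the second pass of copy propagation and dead-code elimination is meant to clean up.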

Compilation results

The assembly code for the C program appears in the book on page 741.

The assembly code for the Fortran 77 program appears in the book on pages 742-743.

The numbers in parentheses are according to the numbering of possible optimizations

for each program.

Optimizations performed on the C program

(1) The constant value of kind has been propagated into the conditional

and the dead code eliminated.

(2) The loop invariant length*width has been removed from the loop.

(4) Strength reduction of height.

The local variables have been allocated to registers.

Instruction scheduling has been performed.

Missed optimizations on the C program

(6) loop unroll.

(5) tail-call optimization.

(3) accumulation of area into a single multiplication.

Optimizations performed on the Fortran 77 program

(2) s1() has been inlined, and therefore it is found out that n=500.

(1) common subexpression elimination of a[k,j]

(5) linear-function test replacement

local variables allocated to registers

Missed optimizations on the Fortran 77 program

(6) loop unroll

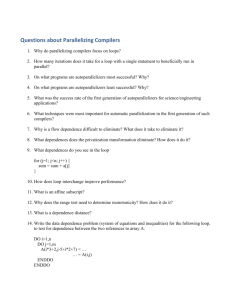

Compilers comparison

The performance of each of the compilers on the C example is summarized in the following table:

optimization                      Sun SPARC    IBM XL    Intel 386 family
constant propagation of kind      yes          yes       yes
dead-code elimination             almost all   yes       yes
loop-invariant code motion        yes          yes       yes
strength reduction of height      yes          yes       yes
reduction of area computation     no           no        no
loop unrolling factor             4            2         none
rolled loop                       yes          yes       yes
register allocation               yes          yes       yes
instruction scheduling            yes          yes       yes
stack frame eliminated            yes          no        no
tail call optimized               yes          no        no

The performance of each of the compilers on the Fortran example is summarized in the following table:

optimization                               Sun SPARC    IBM XL    Intel 386 family
address of a(i) a common subexpression     yes          yes       yes
procedure integration of s1()              yes          no        yes
loop unrolling factor                      4            2         none
rolled loop                                yes          yes       yes
instructions in innermost loop             21           9         4
linear-function test replacement           no           no        yes
software pipelining                        yes          no        no
register allocation                        yes          yes       yes
instruction scheduling                     yes          yes       yes
elimination of s1() subroutine             no           no        no

Future trends

There are several clear main trends developing for the near future of advanced

compiler design and implementation:

SSA is being used more and more:

o It allows methods designed for basic blocks and extended basic blocks to be applied to whole procedures.

o It improves performance.

More use of partial-redundancy elimination.

Partial-redundancy elimination and SSA are being combined.

Scalar-oriented optimizations are being integrated with parallelization and vectorization.

Advances in data-dependence testing, data-cache optimization and software pipelining.

The most active research in scalar compilation will continue to be

optimization.

Other trends

More and more work will be shifted from hardware to compilers.

More advanced hardware will be available.

Higher order programming languages will be used:

o Memory management will be simpler

o Modularity facilities will be available.

o Assembly programming will hardly be used.

Dynamic (runtime) compilation will become more significant.

Theoretical Techniques in Compilers

technique                compiler field
data structures          all
automata algorithms      front-end, instruction selection
graph algorithms         control-flow analysis, data-flow analysis, register allocation
linear programming       instruction selection (complex machines)
Diophantine equations    parallelization
random algorithms        not used yet