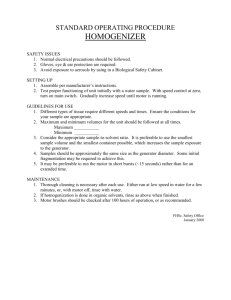

For Classifications of Hardware/Software: (Be as complete & detail
