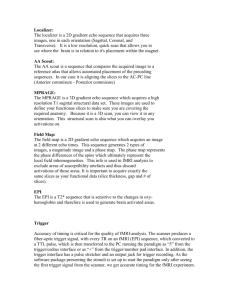

User Training Guide/FAQ - Henry H. Wheeler Jr. Brain Imaging Center
