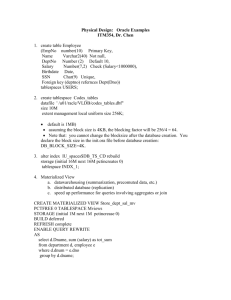

DBA-625

Material to be Covered During DBA625 Lectures, WINTER 2014

Text Book: Oracle 11g Notes -- DBA (official Oracle Notes)

Week 1:

Intro: INTRODUCTION incl. Course Objectives

Lesson 1: ORACLE DATABASE ARCHITECTURE

Commands/Content:

Course Overview and Course Objectives

History of Oracle Evolution, Years and Versions

Oracle Products and Services

Interacting with an Oracle Database – Steps

Oracle Database Server Structure

What is an Instance and what is a Database --> together they form a Database Server

Instance is made of SGA (Shared - System Global Area) and several Background Processes

Database is made up of a set of files; the Database Core contains Control files, Redo Log files and Data files (all are in binary format)

All SGA Regions: 1. SHARED_POOL

-- Library Cache (holds the most recently executed SQL and PL/SQL statements, their parsed versions and their Execution plans)

-- Dictionary (Row) Cache (holds information about Data Dictionary Objects and Users' Privileges)

-- New to 11g are the SQL and PL/SQL Result Caches

Both caches follow the LRU algorithm, so that entries are aged out if they are not used very often

2. DATA BUFFER CACHE (DBC)

-- holds recent Data Blocks that contain rows being processed by users' SQL statements

-- 3 states of buffers --> a) Clean b) Dirty c) Pinned (which will become clean or dirty)

-- behaves according to the LRU algorithm as well

3. LOG BUFFER CACHE (LBC)

-- holds redo entries that record the changes made by users' DML or DDL statements (the change records, not the “dirty” Data Blocks themselves)

-- behaves in a circular manner, so that entries are overwritten in the order they arrived

4. LARGE POOL (optional region)

-- holds information about Shared Server, I/O related tasks and RMAN related topics for Backup/Restore operations (only if used)

5. JAVA POOL (optional region)

-- stores information for JVM and Java related applications (only if used)

6. STREAMS POOL (optional region)

-- holds information about the product ORACLE STREAMS (if being used)
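As a rough illustration of the two ageing policies named above, the Python sketch below models an LRU-managed cache (as described for the Shared Pool caches and the Data Buffer Cache) and a circular buffer (as described for the Log Buffer). The class names, the capacities and the entry labels are made up for the example; real Oracle buffer management is far more elaborate.

```python
from collections import OrderedDict, deque


class LruCache:
    """Toy LRU cache: the least recently used entry is aged out first."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()

    def touch(self, key):
        """Read or load `key`; return the key that was aged out, if any."""
        if key in self.entries:
            self.entries.move_to_end(key)  # now the most recently used
            return None
        self.entries[key] = True
        if len(self.entries) > self.capacity:
            aged_out, _ = self.entries.popitem(last=False)
            return aged_out
        return None


class CircularBuffer:
    """Toy circular buffer: entries leave in the order they arrived."""

    def __init__(self, capacity):
        self.entries = deque(maxlen=capacity)

    def add(self, entry):
        self.entries.append(entry)  # the oldest entry is dropped when full


cache = LruCache(capacity=2)
cache.touch("block A")
cache.touch("block B")
cache.touch("block A")            # A becomes the most recently used
evicted = cache.touch("block C")  # B is the least recently used

log = CircularBuffer(capacity=2)
for entry in ("redo 1", "redo 2", "redo 3"):
    log.add(entry)

print(evicted)            # block B
print(list(log.entries))  # ['redo 2', 'redo 3']
```

Note the difference: re-reading "block A" protects it from being aged out of the LRU cache, while in the circular buffer only the arrival order matters.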

Important SGA parameters are:

1. DB_BLOCK_SIZE (static, lifetime) -- 2K, 4K, 8K (default), 16K, 32K --> can NOT be changed once the database is created

2. SGA_MAX_SIZE (static) -- from hundreds of MB up to several GB; this is the MAX size that the SGA may reach (if specified)

3. SGA_TARGET (dynamic) -- less than or equal to SGA_MAX_SIZE; a non-zero value means that the DBA is going for the ASMM (Automatic Shared Memory Management) feature, and any values specified for parameters 6, 7, 9 and 10 are then considered MINIMAL ones

4. MEMORY_MAX_TARGET (static) -- if specified, this is the MAX amount of memory for BOTH the SGA components and the PGA part

5. MEMORY_TARGET (dynamic) -- less than or equal to MEMORY_MAX_TARGET; with a non-zero value here the Server takes care of BOTH the SGA components and the PGA

-- Parameters 4 and 5 are new to 11g.

6. SHARED_POOL_SIZE (dynamic) -- at least 64MB, up to several GB

7. DB_CACHE_SIZE (dynamic) -- at least 32MB, up to several GB

8. LOG_BUFFER (static) -- from 2.7MB up to several MB

9. LARGE_POOL_SIZE (dynamic) -- from 4MB up to several hundred MB; may be set to 0 initially at startup

10. JAVA_POOL_SIZE (dynamic) -- from 4MB up to several hundred MB; may be set to 0 initially at startup
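The relationships between these parameters can be written down as a small check. This is only a sketch: the function names and the sample sizes (in MB) are invented for illustration, the parameter name MEMORY_MAX_TARGET is taken from the Week 3 list of initialization parameters, and a real instance validates far more than this.

```python
def check_memory_settings(params):
    """Return rule violations for a dict of parameter sizes given in MB."""
    problems = []
    sga_target = params.get("SGA_TARGET", 0)
    memory_target = params.get("MEMORY_TARGET", 0)
    if "SGA_MAX_SIZE" in params and sga_target > params["SGA_MAX_SIZE"]:
        problems.append("SGA_TARGET exceeds SGA_MAX_SIZE")
    if ("MEMORY_MAX_TARGET" in params
            and memory_target > params["MEMORY_MAX_TARGET"]):
        problems.append("MEMORY_TARGET exceeds MEMORY_MAX_TARGET")
    return problems


def management_mode(params):
    """Name the memory management style implied by the two targets."""
    if params.get("MEMORY_TARGET", 0) > 0:
        return "automatic for SGA and PGA"
    if params.get("SGA_TARGET", 0) > 0:
        return "ASMM (automatic for SGA only)"
    return "manual"


# Hypothetical settings, sizes in MB.
settings = {"SGA_MAX_SIZE": 1024, "SGA_TARGET": 800, "SHARED_POOL_SIZE": 128}
print(check_memory_settings(settings))  # []
print(management_mode(settings))        # ASMM (automatic for SGA only)
```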

Week 2:

Lesson 1: ORACLE ARCHITECTURAL COMPONENTS continued

Lesson 2: PREPARING THE DATABASE ENVIRONMENT (on LINUX) incl. DBA Tasks and Duties

Commands/Content:

Process architecture – User and Database (either Server or Background) processes

Two types of Server Process – Dedicated (one for each User) and Shared (may serve many Users and needs one or more Dispatcher processes)

Important Background Processes -- SMON, PMON, LGWR, DBW0, CKPT, ARC0 (optional), MMON, MMAN, CJQ0, Jnnn

The task of DBW0 is to flush all “dirty” buffers from the DBC to the Data Files on disk. A Checkpoint is the crucial trigger here; DBW0 also writes when a Server process cannot find a Free buffer after scanning a certain number of buffers.

Note: the flush of “dirty” buffers by DBW0 to the Data Files may happen at any time before or after the Commit, so some NOT committed changes may end up on Disk

The task of LGWR is to flush SEQUENTIALLY the content of the LBC to the Redo Log Files on disk. A Commit is the crucial trigger here; LGWR also writes when the Log buffer is one third full, every 3 seconds, and always before the Database Writer writes.
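The four LGWR triggers listed above can be summarized as a simple decision function. This is a sketch only; the function name and its arguments are hypothetical and do not correspond to any Oracle interface.

```python
def lgwr_should_write(commit_requested, buffer_used, buffer_size,
                      seconds_since_last_write, dbwr_about_to_write):
    """Return the reasons (possibly none) for LGWR to flush the log buffer."""
    reasons = []
    if commit_requested:
        reasons.append("commit")
    if buffer_used * 3 >= buffer_size:
        reasons.append("one third full")
    if seconds_since_last_write >= 3:
        reasons.append("3 seconds elapsed")
    if dbwr_about_to_write:
        reasons.append("before DBWR writes")
    return reasons


# A commit arrives while the buffer is nearly empty: LGWR still writes.
print(lgwr_should_write(True, 0, 9, 0, False))     # ['commit']
# No trigger applies: LGWR stays idle.
print(lgwr_should_write(False, 1, 9, 0.5, False))  # []
```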

At STARTUP, SMON performs the following 3 actions (if an Instance Crash has happened):

1. ROLL FORWARD -- moving committed transactions from Redo Logs to Data Files

2. OPENS DATABASE -- only partially (the part of the Database not affected by step 3)

3. ROLLBACK -- all NOT committed transactions that were placed in Data Files (by using Undo Segments)

Note: It might happen (although rarely) that some NOT committed transactions end up on Disk (in Data Files)
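The order of the recovery steps can be followed in a toy model. The sketch below makes a simplifying assumption that is not stated in these notes: the roll forward phase re-applies every logged change, and the rollback phase then removes the changes of transactions that never committed. All names and sample values are invented.

```python
def instance_recovery(datafile, redo_log, committed, undo):
    """Toy crash recovery: roll forward from redo, then roll back via undo.

    datafile  -- dict row -> value as found on disk after the crash
    redo_log  -- list of (txn, row, new_value) in the order they were logged
    committed -- set of transaction ids that committed before the crash
    undo      -- dict (txn, row) -> value the row had before txn changed it
    """
    recovered = dict(datafile)
    # Roll forward: re-apply every logged change in order.
    for txn, row, new_value in redo_log:
        recovered[row] = new_value
    # Roll back: undo changes of uncommitted transactions, newest first.
    for txn, row, _ in reversed(redo_log):
        if txn not in committed:
            recovered[row] = undo[(txn, row)]
    return recovered


# Row "c" holds an uncommitted value (99) that reached disk before the crash.
on_disk = {"a": 1, "b": 2, "c": 99}
redo = [("T1", "a", 10), ("T2", "c", 99), ("T2", "b", 20)]
undo_data = {("T1", "a"): 1, ("T2", "c"): 3, ("T2", "b"): 2}

result = instance_recovery(on_disk, redo, committed={"T1"}, undo=undo_data)
print(result)  # {'a': 10, 'b': 2, 'c': 3}
```

The committed change of T1 survives, while both changes of the uncommitted T2 are removed, including the one that had already been written to the Data File.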

PMON performs cleanup after a User process has failed, which involves 3 steps:

1. Releasing all Locks that were imposed by that User session

2. Rollback of Not committed transactions by that User session

3. Releasing all Resources (memory and disk) that were held by that User session

Oracle Database Files -- CONTROL FILE (min 1), REDO LOG FILES (min 2) and DATA FILES (min 3)

Other Key Files -- Password File, Alert Log File alert_SID.log, Trace Files (for Background and Server processes), Server Parameter file spfileSID.ora and Initial Parameter File initSID.ora

Database Storage Architecture

Tablespace, Segment, Extent and Data Block vs. Data Files and OS Blocks

SYSTEM and SYSAUX tablespaces as mandatory ones (SYSTEM must be On-Line always). The third mandatory tablespace is UNDOTBSP.

Tasks and Duties of a DBA.

Ten tasks incl. Performance Tuning.

Database Administration Tools -- ORACLE UNIVERSAL INSTALLER, DATABASE CONFIGURATION ASSISTANT, EM, SQL*PLUS, SQL*LOADER, EXPORT/IMPORT etc.

System Requirements – Kernel parameters, Memory and Disk space

Planning Database File Locations, What is OFA (Optimal Flexible Architecture)

Important Environment Variables like ORACLE_BASE, ORACLE_HOME, ORACLE_SID, HOME, PATH, then where to look for the 'admin', 'oradata', 'diag', 'trace', 'pfile' etc. subdirectories

How to set an Environment Variable with export VAR=value and how to check its value with echo $VAR

Oracle Universal Installer --> Installing 11g Enterprise Database on Linux --> Steps and Options (incl. Advanced options)

Planning the Database (Capacity Planning)

Week 3:

Lesson 3: CREATING AN ORACLE DATABASE

Lesson 4: MANAGING the ORACLE INSTANCE

Commands/Content:

Creating a New Database -- GUI by using the Database Configuration Assistant (DBCA) or by using Scripts

Configuring a Starter Database by using DBCA, Steps and Options

Other features of DBCA -- Delete Database, Configure Database Template, Manage Templates and Configure ASM (Automatic Storage Management)

Default Administrator Accounts/Passwords -- three accounts, in descending order of power:

SYS/ORACLE AS SYSDBA --> Password may be ORACLE as well

SYS/ORACLE AS SYSOPER --> Password may be ORACLE as well

SYSTEM --> Password may be ORACLE as well

DBSNMP --> Password may be ORACLE as well

Two basic Authentication Methods -- OPERATING SYSTEM and PASSWORD FILE

The parameter REMOTE_LOGIN_PASSWORDFILE may be set to either

NONE for OS authentication > CONNECT / AS SYSDBA

EXCLUSIVE for a Single Instance with the Oracle Password File option > CONNECT SYS/ORACLE AS SYSDBA

SHARED for the RAC (former OPS) option with the Oracle Password File

What is a Listener (Process) and how to ADD one by using the Oracle Net Configuration Assistant (NETCA)

How to START and STOP Listener in Linux with:

$ lsnrctl start | stop

How to START and STOP Database Control (EM) in Linux with:

$ emctl start | stop dbconsole

What is ENTERPRISE MANAGER (EM) and how to invoke it with https://hostname:PORT/em

Home Page of EM

What is SQL*PLUS and how to use the COMMAND LINE MODE

What is the Initialization Parameter File initSID.ora and what are the crucial parameters and their possible values:

Db_name, Instance_name, Db_block_size, Db_cache_size, Shared_pool_size, Log_buffer, Control_files, Compatible, Processes, Undo_management, Memory_Target, SGA_Target, Memory_Max_Target, SGA_Max_Size, PGA_Aggregate_Target

PFILE (ASCII text/static/manual editing) vs. SPFILE (binary/dynamic-persistent/modified through command line)

Using SHOW PARAMETER string to see the names and values of all parameters containing string, or querying the views V$PARAMETER and V$SPPARAMETER

Two ways to Set (change) a Database Initialization Parameter --

STATIC (editing the entry in the parameter file PFILE , shutting down and restarting the instance) or

DYNAMIC (by either changing it for a SESSION or SYSTEM or SYSTEM Delayed option) by using:

> ALTER { SESSION| SYSTEM} SET parameter=value [DEFERRED]

How to MODIFY values dynamically by specifying SCOPE, where the default SCOPE is MEMORY if the instance was started with a PFILE and BOTH if it was started with an SPFILE

> ALTER SYSTEM SET parameter=value [SCOPE = {MEMORY| SPFILE| BOTH}]
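The effect of the three SCOPE values can be pictured with a toy model that keeps two copies of every parameter: the value in memory and the value stored in the SPFILE. The class and method names below are invented for this sketch, and the parameter value is just a sample.

```python
class ToyInstance:
    """Toy instance started from an SPFILE (so the default SCOPE is BOTH)."""

    def __init__(self, spfile):
        self.spfile = dict(spfile)  # persistent values, read at startup
        self.memory = dict(spfile)  # values in effect for the running instance

    def alter_system_set(self, name, value, scope="BOTH"):
        if scope not in ("MEMORY", "SPFILE", "BOTH"):
            raise ValueError("SCOPE must be MEMORY, SPFILE or BOTH")
        if scope in ("MEMORY", "BOTH"):
            self.memory[name] = value  # takes effect immediately
        if scope in ("SPFILE", "BOTH"):
            self.spfile[name] = value  # survives the next restart

    def restart(self):
        self.memory = dict(self.spfile)


inst = ToyInstance({"undo_retention": 900})
inst.alter_system_set("undo_retention", 1800, scope="MEMORY")
print(inst.memory["undo_retention"], inst.spfile["undo_retention"])  # 1800 900
inst.restart()
print(inst.memory["undo_retention"])  # 900
```

A change made with SCOPE = MEMORY is lost at the next restart, while SCOPE = SPFILE only becomes visible after it.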

How to view the content of the SPFILE (using 'more' or Wordpad); it can NOT be edited manually.

Three Database Startup Stages -- NOMOUNT, MOUNT and OPEN (default); a RESTRICT mode upon startup is also possible, for DBAs only

Changing the stage with ALTER DATABASE {MOUNT | OPEN} or

ALTER DATABASE OPEN READ {WRITE | ONLY}

Four Database Shutdown Modes -- NORMAL (default for SQL), TRANSACTIONAL, IMMEDIATE (default in EM) and ABORT

What activity is NOT allowed in each of these four modes

How to Enable/Disable a Restricted Session while the Instance is running, but only for new users with:

> ALTER SYSTEM { ENABLE| DISABLE} RESTRICTED SESSION;

What is an Alert Log File -- Database diary or journal and its default directory $ORACLE_BASE/diag/rdbms/Db_Name/SID/alert

How to view Alert Log file dynamically with tail -f alert_SID.log

Two types of Trace Files -- for BACKGROUND PROCESSES, which reside in the same directory as the Alert Log file, and for SERVER PROCESSES, which reside in the default directory $ORACLE_BASE/diag/rdbms/Db_Name/SID/trace
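The two directory names above follow one pattern, which the sketch below assembles from its parts. The function name is invented, and the sample values for ORACLE_BASE, the database name and the SID are hypothetical.

```python
from pathlib import PurePosixPath


def diag_dirs(oracle_base, db_name, sid):
    """Build the alert and trace directory paths as given in these notes."""
    home = PurePosixPath(oracle_base) / "diag" / "rdbms" / db_name / sid
    return {"alert": home / "alert", "trace": home / "trace"}


# Hypothetical installation values.
dirs = diag_dirs("/u01/app/oracle", "orcl", "orcl")
print(dirs["alert"])  # /u01/app/oracle/diag/rdbms/orcl/orcl/alert
print(dirs["trace"])  # /u01/app/oracle/diag/rdbms/orcl/orcl/trace
```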

What are Dynamic Performance Views – dynamic info about the operation/performance of the instance, owned by the SYS user, with all names stored in V$FIXED_TABLE, and which ones can be accessed at the NOMOUNT, MOUNT or OPEN stage, like V$INSTANCE, V$PARAMETER, V$SGA, V$CONTROLFILE, V$DATABASE, V$LOG, V$LOGFILE, V$DATAFILE, DBA_viewname etc.

Data Dictionary Views and Categories (USER_name, ALL_name and DBA_name)

Week 4:

Lesson 6: MANAGING DATABASE STORAGE STRUCTURES – about CREATE TABLESPACE (Permanent) and ALTER TABLESPACE/DATABASE

Commands/Content:

Database Storage Hierarchy -- Logical vs. Physical Storage Model

Logical components are: Database, Tablespaces, Segments, Extents and Database Blocks

Physical components are: Datafiles and Operating System Blocks

Two basic Types of Tablespaces are:

Mandatory -- SYSTEM, SYSAUX, UNDO and Optional -- USERS, TEMP, INDEX, TOOLS, EXAMPLES etc.

How to add a new Tablespace with default options (PERMANENT, ONLINE, LOGGING, READ WRITE) and default EXTENT MANAGEMENT mode --> LOCAL with:

CREATE TABLESPACE tbsp_name

DATAFILE '/path/filename' SIZE { 100M | n {K | M }} -- where 'path' means the Full Unix Path to the directory

[ AUTOEXTEND { OFF | ON [NEXT n{K|M}] [MAXSIZE {UNLIMITED | n{K|M}}] } ]

[ EXTENT MANAGEMENT LOCAL [{ AUTOALLOCATE | UNIFORM [SIZE {1M | n{K|M}}]}] ]

[ SEGMENT SPACE MANAGEMENT { AUTO | MANUAL}] ;

How to choose Dictionary Management Mode instead of the Local one, using the Default Storage clause that allows customizing the size of all extents, with:

EXTENT MANAGEMENT DICTIONARY [MINIMUM EXTENT n{K|M}]

[DEFAULT STORAGE (INITIAL n{K|M} NEXT n{K|M} MINEXTENTS n MAXEXTENTS n PCTINCREASE n)]

Local Mode means that Free Extents are managed through a Bitmap (a string of 1s and 0s); each bit represents a Group of Blocks

Dictionary Mode means that Free Extents are managed through the Data Dictionary (slower and may lead to Fragmentation)

Default Extent Management Submode for LOCAL is AUTOALLOCATE --> the Server will decide about the Extent Size (MULTIPLES of 64K) and we will NOT know in advance what that value will be; if we go for UNIFORM without specifying SIZE --> it will always be 1M
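The bitmap idea behind Local Mode can be sketched with a list of bits, one per extent. This is a toy model with UNIFORM extents of the default 1M; the class name, the method names and the 4M tablespace size are invented for the example.

```python
class LocalTablespace:
    """Toy locally managed tablespace with UNIFORM extents."""

    def __init__(self, size_kb, extent_kb=1024):
        self.extent_kb = extent_kb
        self.bitmap = [0] * (size_kb // extent_kb)  # 0 = free, 1 = used

    def allocate_extent(self):
        """Mark the first free extent as used and return its position."""
        for position, bit in enumerate(self.bitmap):
            if bit == 0:
                self.bitmap[position] = 1
                return position
        raise RuntimeError("no free extent left in the tablespace")

    def free_extent(self, position):
        self.bitmap[position] = 0


ts = LocalTablespace(size_kb=4096)  # 4M tablespace, 1M extents
for _ in range(3):
    ts.allocate_extent()
ts.free_extent(1)
print(ts.bitmap)             # [1, 0, 1, 0]
print(ts.allocate_extent())  # 1
```

Finding and reusing a free extent only requires flipping a bit, with no lookup in the Data Dictionary, which is why the notes call Dictionary Mode the slower one.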

How to change (modify) Default Tablespace options with:

ALTER TABLESPACE tbsp_name NEWOPTION ;

where NewOption may be TEMPORARY, OFFLINE, NOLOGGING, READ ONLY

Three Methods to ADD space to a Tablespace or to CHANGE its size:

1) Add a new datafile to a Tablespace with:

ALTER TABLESPACE tbsp_name

ADD DATAFILE 'path' SIZE { 100M | n{K|M}} [autoextend_option]

2) Manually resize (shrinking is possible as well) an existing datafile with:

ALTER DATABASE DATAFILE 'path/filename' RESIZE n{K|M}

3) TURN ON the automatic extent increasing for an existing datafile with:

ALTER DATABASE DATAFILE 'path/filename'

AUTOEXTEND ON [NEXT n{K|M} [MAXSIZE {UNLIMITED | n{K|M}}]]

How to move a system datafile or any datafile with active Undo segments with:

SHUTDOWN,

OS move with mv command

STARTUP MOUNT

ALTER DATABASE RENAME FILE 'old_path' TO 'new_path' and

ALTER DATABASE OPEN

How to move any ordinary (non-system and non-undo) datafile with:

ALTER TABLESPACE tbsp_name OFFLINE,

OS move with mv command

ALTER TABLESPACE tbsp_name RENAME DATAFILE 'old_path' TO 'new_path' and

ALTER TABLESPACE tbsp_name ONLINE

How to drop an existing Tablespace and all Datafiles there with just one command:

DROP TABLESPACE tbsp_name [INCLUDING CONTENTS AND DATAFILES]

Note: OS removal of all files associated with this tablespace is NOT needed anymore, because of the AND DATAFILES option

It is possible to drop a SINGLE DATAFILE in SQL, but you should NOT do it, because a mistake here is very costly (you have to go for the WHOLE TABLESPACE or ignore the datafiles that are not needed)

Important views are:

V$TABLESPACE, V$DATAFILE (it shows the current and original file size), which can be queried in the MOUNT or OPEN stage, and

DBA_TABLESPACES, DBA_DATA_FILES (it shows all 3 Auto-extend components), which can be queried only in the OPEN stage

Week 5:

Lesson 6: MANAGING DATABASE STORAGE STRUCTURES -- about TEMPORARY TABLESPACES

Lesson 14: BACKUP AND RECOVERY CONCEPTS -- only the part about Instance Recovery and Checkpoints, Logfiles and Controlfiles

Lesson 10: MANAGING UNDO DATA

Commands/Content:

Temporary Tablespaces are used strictly for SORT operations (as with ORDER BY, GROUP BY, DISTINCT etc.) and they can NOT contain Permanent objects (like Tables, Indexes, Clusters etc.)

How to add a new Temporary Tablespace with:

CREATE TEMPORARY TABLESPACE tbsp_name

TEMPFILE 'path' SIZE n{K|M}

[EXTENT MANAGEMENT {LOCAL UNIFORM [SIZE { 1M | n{K|M}}] | DICTIONARY}]

Default Management Mode is LOCAL and UNIFORM of 1M, and you can NOT use the AUTOALLOCATE option for Extent Mgmt.

Tempfiles are always at NOLOGGING mode and they can NOT be made READ ONLY

Default Temporary Tablespace may be declared when Database is created or later by using:

ALTER DATABASE DEFAULT TEMPORARY TABLESPACE tbsp_name;

Default Temporary Tablespace can NOT be dropped, nor taken offline, nor altered to a permanent one

Important Data Dictionary views are: V$TEMPFILE, DBA_TEMP_FILES

What is NOT POSSIBLE in Oracle11g:

1. To create any Tablespace with the DICTIONARY option if the System Tablespace is created as LOCAL

2. To change the Submode for the Local option from AUTOALLOCATE to UNIFORM and vice versa

3. To change the size of the Extents for a UNIFORM Tablespace

4. To create a TEMPORARY Tablespace with the AUTOALLOCATE option --> it has to be UNIFORM (default size is 1M)

5. To create an UNDO Tablespace as UNIFORM --> it has to use the AUTOALLOCATE option

Usage of the Control File -- Small binary file, Linked to only one Database, Mandatory (should be multiplexed)

Content of the Control File -- Database name, Time stamp of the Database creation, Names and Locations of all core Database files, Tablespace info, Log history, Location and Status of archived log files, Current Log Sequence Number (LSN), Current Checkpoint Number and Last Committed Transaction Number (System Change Number -- SCN)

Multiplexing the Control File -- Steps

Add the new name(s) to the parameter CONTROL_FILES in the PFILE (initSID.ora file)

Shutdown,

Copy (Move) the file(s) to another location(s) in Linux

Startup with PFILE

Important Data Dictionary view is V$CONTROLFILE

Purpose of Redo Log files -- to enable Instance Recovery and, together with Archived Log files, to enable Recovery from Media (Disk) and Human Errors

Redo Log Groups and Members; the Minimum is to have two groups with one member each

What is the Current Log Group and Log Sequence Number

What triggers LGWR to write into the current log group (most important is COMMIT request)

How to achieve a Log Switch -- Naturally by the file being filled and Manually with > ALTER SYSTEM SWITCH LOGFILE
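What a log switch does to the Current Log Group and the Log Sequence Number can be shown with a small counter model. It relies on a fact not spelled out above: the groups are reused in a circular fashion. The class name and the group count of 3 are chosen only for the example.

```python
class RedoLogGroups:
    """Toy model of redo log groups that are reused in a circle."""

    def __init__(self, group_count=2, first_sequence=1):
        if group_count < 2:
            raise ValueError("at least two redo log groups are required")
        self.group_count = group_count
        self.current = 1                # current log group number
        self.sequence = first_sequence  # current log sequence number

    def switch_logfile(self):
        """Move to the next group; the sequence number always increases."""
        self.current = self.current % self.group_count + 1
        self.sequence += 1
        return self.current, self.sequence


logs = RedoLogGroups(group_count=3)
switches = [logs.switch_logfile() for _ in range(3)]
print(switches)  # [(2, 2), (3, 3), (1, 4)]
```

After the last group the model wraps around to group 1, while the sequence number keeps growing.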

What happens during a Checkpoint – all datafile headers are brought in sync with the controlfile info about the latest SCN and its Checkpoint Position

What types of Checkpoints exist:

a) FULL -- caused by a clean SHUTDOWN or by the DBA's manual command > ALTER SYSTEM CHECKPOINT

b) PARTIAL – caused by placing a Tablespace or Datafile OFFLINE

c) INCREMENTAL -- Influenced by setting just ONE parameter, FAST_START_MTTR_TARGET (in seconds, Default 0 -- meaning Incremental Checkpointing is turned off), so that DBW0 becomes more active and the Checkpoint Position in the Redo Log File (the position where SMON would begin an Instance Recovery) advances at a better pace (ensuring that all transactions with an SCN before that position are saved in the Datafiles on disk)

Important dynamic views V$LOG and V$LOGFILE and different values of column STATUS in these views

How to add a new Log Group or a new Log Member with:

ALTER DATABASE ADD LOGFILE { 'path' SIZE n{K|M} | MEMBER 'path' TO GROUP n}

How to move (relocate) a Redo Log File with just two steps (SHUTDOWN is not needed anymore):

1. OS move with mv command, then using

2. ALTER DATABASE RENAME FILE 'old_path' TO 'new_path'

How to drop (remove) an existing Log Group or Log Member with

1. ALTER DATABASE DROP LOGFILE {GROUP n | MEMBER 'path'} and then

2. OS removal with rm command

Undo Data is a COPY of original, pre-modified data (by DML statements) captured for EVERY transaction

It supports 4 different things:

1) ROLLBACK (Undo) operations by developers and users

2) Read Consistent Queries by users who only want to read data being modified (by someone else)

3) Instance Recovery by SMON -- SECOND part called SYSTEM ROLLBACK (performed just after Roll Forward)

4) FLASHBACK Features – Query, Transaction and Table (but NOT for Flashback Database)

Each Transaction uses ONLY ONE Undo Segment, while several Transactions may share the same segment

Undo Segments are stored in the UNDO TABLESPACE, and the preferred (and default) type of Undo Management is AUTOMATIC; only one such Tablespace may be active at a time

Parameter UNDO_MANAGEMENT may be AUTO (Default) or MANUAL, and parameter UNDO_TABLESPACE is by Default UNDOTBS1

In AUTO management the system assigns 10 Undo Segments of the same size to the created Tablespace and handles the usage of these segments automatically

You may create several Undo Tablespaces with > CREATE UNDO TABLESPACE tbsp_name DATAFILE '/path/filename' SIZE {100M | n {K|M}}

and you may also AUTOEXTEND its Datafile, but the EXTENT MANAGEMENT must be system determined (AUTOALLOCATE)

Only ONE Undo Tablespace may be active at a time, while the other ones are in a dormant state. You declare it active with the following syntax:

> ALTER SYSTEM SET undo_tablespace = undonew;

Parameter UNDO_RETENTION (specified in Seconds, Default 900) determines how long (at least) the undo data of an already committed transaction is to be retained in the Undo Segment

The system honors it under the following circumstances (otherwise, this parameter is ignored):

1) The UNDO Datafile has the AUTOEXTENSION set to ON -- it keeps committed data for at least the Retention Interval

2) The UNDO Tablespace has GUARANTEED retention -- it keeps committed data for at least the Retention Interval

3) When modifying LOB datatypes

Undo Data is divided into 3 categories:

1) Uncommitted (ACTIVE) -- supports current transaction. It is NEVER overwritten

2) Committed (UNEXPIRED) -- transaction has ended, but it is still within the Retention Interval. If NOGUARANTEE is in effect, the undo data will be retained (if possible) without causing a new transaction to fail because of the lack of disk space. Otherwise (with the GUARANTEE option) the Retention Interval will always be honored.

3) Committed (EXPIRED) -- transaction has ended, and it is past (beyond) the Retention Interval. It will be overwritten first to make space for a new transaction.
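The three categories and the overwrite rules can be expressed as two small functions. This is a sketch under the rules stated above; the function names are invented and the times are plain numbers of seconds.

```python
def undo_category(commit_time, now, undo_retention=900):
    """Classify undo data; commit_time is None while the transaction runs."""
    if commit_time is None:
        return "ACTIVE"
    if now - commit_time <= undo_retention:
        return "UNEXPIRED"
    return "EXPIRED"


def may_overwrite(category, guarantee):
    """Tell whether undo data of this category may be overwritten."""
    if category == "ACTIVE":
        return False          # never overwritten
    if category == "EXPIRED":
        return True           # overwritten first
    return not guarantee      # UNEXPIRED: only without GUARANTEE


print(undo_category(None, now=1000))              # ACTIVE
print(undo_category(500, now=1000))               # UNEXPIRED
print(undo_category(50, now=1000))                # EXPIRED
print(may_overwrite("UNEXPIRED", guarantee=True))  # False
```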

How to change Guarantee option for an Undo Tablespace with:

> ALTER TABLESPACE undotbsp {GUARANTEE | NOGUARANTEE} ;

How to use UNDO ADVISOR in EM to get an estimate for the Undo Tablespace SIZE to satisfy given RETENTION INTERVAL

Week 6:

Lesson 9: MANAGING DATA and CONCURRENCY -- just the part about RESOLVING LOCKS

Lesson 5: CONFIGURING ORACLE NET ENVIRONMENT

Commands/Content:

Locks prevent multiple sessions from changing the same piece of data (row, rows or table) at the same time. They are obtained at the lowest possible level (row) by server.

Default Locking Mechanism in Oracle Database has 4 important features that enable the highest possible level of Data Concurrency:

1) AUTO Queue Management by Server – it keeps track of the following: a) What Sessions are waiting for locks b) Order in which these Sessions are waiting c) Requested Lock Mode

2) Row Exclusive Level locks for DML statements

3) NO locks required for SELECT statement (except when FOR UPDATE option is used)

4) Locks are held until transaction ends (Commit or Rollback) and then are automatically released

You can use the following syntax to impose MANUAL LOCKING mode for Tables (NOT recommended by Oracle)

> LOCK TABLE [owner.]tablename IN {ROW SHARE | ROW EXCLUSIVE | SHARE | SHARE ROW EXCLUSIVE | EXCLUSIVE} MODE [{NOWAIT | WAIT n}];

ROW EXCLUSIVE locks are automatically obtained when performing DML statements -- they allow multiple readers and only one writer

SHARE locks are automatically obtained when creating an Index on a table -- they allow multiple readers and NO Updates

An EXCLUSIVE lock is obtained when Dropping a table -- it allows multiple readers and nothing else

Most important causes of Lock Conflicts that cause sessions to WAIT to execute their DML/DDL statements are:

1) Users do NOT commit their changes promptly

2) Long running transactions with Commit only at the end

3) Manual Locking by developers who are not trained well or who do not understand how the Locking Mechanism (imposed by the Server) works

You can detect Lock Conflicts by using the EM Database Home Page --> Performance --> Additional Monitoring Links --> Blocking Sessions OR by checking the V$LOCK and V$SESSION Performance Views

To resolve a Lock Conflict, either Force the User who holds the Blocking Session to Commit (or Rollback) OR Terminate (kill) that session with > ALTER SYSTEM KILL SESSION 'SID, SERIAL#' IMMEDIATE;


Deadlocks happen if two sessions are modifying the same two rows at the same time in the opposite order, without committing the first change

Oracle Server will detect the Deadlock automatically and undo the second change of the first session, which will receive the Deadlock Error ORA-00060,

and then this session needs to commit (or rollback) its first change, while the second session will just experience a bit of waiting without any error message
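One common way to picture deadlock detection is a "waits-for" map: a deadlock exists when following the map from a session leads back to that same session. The sketch below is a generic illustration of that idea, not a description of how the Oracle Server implements it; the function name and the session names are invented.

```python
def find_deadlock(waits_for):
    """Return the sessions forming a waiting cycle, or None.

    waits_for maps each waiting session to the session that blocks it.
    """
    for start in waits_for:
        chain = [start]
        current = waits_for.get(start)
        while current is not None:
            if current == start:
                return chain   # we came back to where we started
            if current in chain:
                break          # a cycle that does not include `start`
            chain.append(current)
            current = waits_for.get(current)
    return None


# S1 updated row A and waits for row B; S2 updated row B and waits for row A.
print(find_deadlock({"S1": "S2", "S2": "S1"}))  # ['S1', 'S2']
# An ordinary lock wait: S1 waits for S2, but S2 waits for nobody.
print(find_deadlock({"S1": "S2"}))              # None
```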

The material related to Oracle Net Services (Chapter 5) should be mastered from the Session8 document on the Work Page

Week 7:

SAMPLE TEST / Assignment One is due

Lesson 8: MANAGING SCHEMA OBJECTS – TABLES

Commands/Content:

Table Types -- Regular, Partitioned, Index Organized Table (IOT) and Cluster

Row Structure -- Row Header (stores # of columns, lock status and chaining info) and Row Data (column length and value)

Oracle 11g Built-in Data Types -- Scalar, Collection (Varray and Table) and Relationship (Ref)

Scalar Data types -- Character (fixed and variable), Numeric, Date, Raw and Large Objects (LOB)

Row Migration (moving a whole row to a new block) --> this might be reduced by increasing PCTFREE, and Row Chaining (breaking a huge row into several pieces) --> this can NOT be fixed without a change of the Block Size

How to create a new table with customized extent and space block parameters:

CREATE TABLE [user.]table_name

( column1 datatype [(maxlength)] [NOT NULL] , column2 ... )

TABLESPACE tbsp_name

STORAGE (INITIAL n{K|M} NEXT n{K|M} MINEXTENTS n MAXEXTENTS n PCTINCREASE n)

PCTFREE n PCTUSED n INITRANS n MAXTRANS n FREELISTS n ;

Note: If Tablespace to hold that table was created with SEGMENT SPACE MANAGEMENT AUTO

(default), then PCTUSED and FREELISTS are ignored

How to change extent values (all of them may be modified except INITIAL) or space block parameter values (all of them may be modified except FREELISTS) with:

ALTER TABLE [user.]table_name

STORAGE (NEXT n{K|M} MINEXTENTS n MAXEXTENTS n PCTINCREASE n)

PCTFREE n PCTUSED n INITRANS n MAXTRANS n ;

Week 8:

MID-TERM TEST

Lesson 8: MANAGING SCHEMA OBJECTS – TABLES cont.

Lesson 8: MANAGING SCHEMA OBJECTS -- INDEXES

Commands/Content:

How to manually allocate a new extent for a table with:

ALTER TABLE [user.]table_name ALLOCATE EXTENT [SIZE n{K|M}] [DATAFILE 'path'];

How to move a non-partitioned table into a different tablespace with:

ALTER TABLE [user.]table_name MOVE TABLESPACE tbsp_name ;

This command may also be used for changing parameters like INITIAL (extent) and FREELISTS, which previously could not be changed, OR for reorganizing the free space in the table's extents with just:

ALTER TABLE [user.]table_name MOVE ;

How to truncate an existing table, so that the table structure, all constraints, indexes and triggers are not affected and the space is either released or kept, with:

TRUNCATE TABLE [user.]table_name [ {DROP | REUSE} STORAGE];

How to drop an existing table with or without data and with possible FK reference by using:

DROP TABLE [user.]table_name [CASCADE CONSTRAINTS];

Important Data Dictionary views are: DBA_TABLES, DBA_TAB_COLUMNS

Classification of Indexes:

LOGICAL (Single or Composite ; also Unique or Non-unique; also Regular or Function based)

PHYSICAL (B-tree Normal or Reverse and Bitmap; also Partitioned or Non-partitioned)

Structure of the B-tree Index Leaf Entry :

1. Entry Header that stores # of columns and locking info

2. Key column length and value pairs -- if the Index is composite (otherwise, it is just one length and one value)

3. ROWID -- it points to a mother table's row with that key value.

Structure of the Bitmap Index Entry :

1. Entry Header that stores # of columns and locking info

2. Key column length and distinct value

3. Start Rowid and End Rowid to indicate the range of rows for this table (Only Restricted Rowid is used)

4. Long binary string where the bit is set to 1 if the corresponding row contains that distinct key

Comparison between B-tree and Bitmap Indexes --

Columns with lots of distinct values in the OLTP environment should use B-tree and

Columns with few distinct values in DSS or Data Warehousing should use Bitmap Indexes
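The "long binary string" of a Bitmap Index entry is easy to picture with a toy example: one bit string per distinct key value, with a 1 wherever a row holds that value. The function names and the sample column below are made up for illustration.

```python
def build_bitmap_index(column_values):
    """Return one list of bits per distinct value of the column."""
    bitmaps = {}
    for position, value in enumerate(column_values):
        bits = bitmaps.setdefault(value, [0] * len(column_values))
        bits[position] = 1
    return bitmaps


def matching_rows(bitmaps, value):
    """Row positions whose bit is set for the given key value."""
    return [pos for pos, bit in enumerate(bitmaps.get(value, [])) if bit]


# Hypothetical GENDER column of a four-row table.
index = build_bitmap_index(["M", "F", "F", "M"])
print(index)                      # {'M': [1, 0, 0, 1], 'F': [0, 1, 1, 0]}
print(matching_rows(index, "F"))  # [1, 2]
```

With only two distinct values the whole index is two short bit strings, which is why the notes recommend Bitmap Indexes for columns with few distinct values; a column with many distinct values would need one bit string per value.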

How to create an Index with default options (LOGGING, NOCOMPRESS, SORT) and customized extent and space block parameters:

CREATE [ UNIQUE | BITMAP ] INDEX [user.]index_name

ON [user.]table_name (column1 [, column2, ...]) [REVERSE]

TABLESPACE tbsp_name

STORAGE (INITIAL n{K|M} NEXT n{K|M} MINEXTENTS n MAXEXTENTS n PCTINCREASE n)

PCTFREE {10 | n } INITRANS {2 | n } MAXTRANS { 255 | n };

Guidelines on how and when to create an Index -- avoid Indexes if DML operations are frequent; PCTFREE works differently than for tables (it is used for future inserts and should be set to a higher value), while PCTUSED can NOT be set; NOLOGGING may be used for large indexes.

How to rebuild an Index with:

ALTER INDEX [user.]index_name REBUILD [ONLINE]

[TABLESPACE tbsp_name] [{REVERSE | NOREVERSE}] [{LOGGING | NOLOGGING}]

[PCTFREE n];

Note: It is possible to rebuild an Index and NOT to lock the whole table (which will allow concurrent DML operations) by using the ONLINE option (an offline rebuild is the default)

Main reasons for doing a Rebuild are:

1. Changing the tablespace or PCTFREE,

2. Switching from Normal to Reverse mode and vice versa (only for B-tree indexes) or

3. Just to get rid of deleted leaf entries and to use space better (defragment the Index)

How to drop an existing index by using:

DROP INDEX [user.]index_name ;

Main reasons for dropping an index are:

1. It is no longer needed or used only periodically

2. It is Invalid

3. Before huge bulk loads (inserts) to speed up this process and re-create index later

Important Data Dictionary views are: DBA_INDEXES, DBA_IND_COLUMNS

Week 9:

Lesson 8: MANAGING SCHEMA OBJECTS -- CONSTRAINTS

Commands/Content:

Data integrity Methods -- Database Triggers , Application Code and Integrity Constraints

Types of Constraints -- PRIMARY KEY, UNIQUE, FOREIGN KEY, CHECK and NOT NULL (as a special CHECK type)

Four possible States when enabling/disabling a constraint:

Disable Novalidate -- the constraint is TURNED OFF, i.e. NOT enforced at all (default state, if the DISABLE option is used)

Disable Validate -- the Table becomes READ ONLY when any of its constraints gets this state, i.e. NO DML operation will be allowed for this Table (only Select statements)

Enable Novalidate -- new (future) Insert/Update statements will be checked against constraint violation, but existing rows in the Table will NOT be checked; it will NOT lock the table

Enable Validate -- both existing (old) and future (new) rows WILL BE checked against constraint violation, i.e. when this state becomes active NO invalid rows can exist in the Table. This is the default constraint state (if nothing is specified); it will lock the table if going from a Disable state

Deferring constraint checking may have two Modes:

Not Deferrable (Immediate) -- this mode will enforce the constraint at the end of EACH DML statement, i.e. each statement in the DML Procedure will be inspected against constraint violation (Default mode)

Deferrable -- this mode may POSTPONE (DEFER) constraint checking till COMMIT time and must be specified when the constraint is CREATED. This mode has two Options:

Initially Immediate (SubDefault option) -- the constraint will still be checked as an Immediate one, but in the future the DBA/Developer may manually change its state to deferred.

Initially Deferred -- upon creation of the constraint, its checking will be POSTPONED (DEFERRED) till COMMIT time, and it may also be manually switched later to immediate.
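The practical difference between immediate and deferred checking shows up when two key values are swapped: the table is briefly invalid between the two statements. The toy transaction below checks a UNIQUE-style rule either after each statement or only at commit. The class names and the sample keys are invented, and real constraint handling involves much more than this.

```python
class ConstraintViolation(Exception):
    """Raised when the toy UNIQUE rule is broken."""


class ToyTransaction:
    """Toy transaction over one key column with a UNIQUE-style check."""

    def __init__(self, keys, deferred):
        self.keys = list(keys)
        self.deferred = deferred

    def _check(self):
        if len(set(self.keys)) != len(self.keys):
            raise ConstraintViolation("duplicate key")

    def update(self, row, value):
        old = self.keys[row]
        self.keys[row] = value
        if not self.deferred:
            try:
                self._check()          # immediate: check after each statement
            except ConstraintViolation:
                self.keys[row] = old   # the failing statement is undone
                raise

    def commit(self):
        self._check()                  # deferred: check once, at COMMIT
        return self.keys


# Swap keys 1 and 2 with deferred checking: the temporary duplicate is fine.
txn = ToyTransaction([1, 2], deferred=True)
txn.update(0, 2)
txn.update(1, 1)
print(txn.commit())  # [2, 1]
```

With `deferred=False` the first update already raises `ConstraintViolation`, because the duplicate is detected at the end of that statement.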

Syntax Part for defining the constraint Type, State and Mode when doing it In-Line (together with the column) is:

column_name DATATYPE [(maxlength)] [ CONSTRAINT constraint_name ] TYPE ...

[ {NOT DEFERRABLE | DEFERRABLE INITIALLY {IMMEDIATE | DEFERRED}} ]

[ {ENABLE {VALIDATE | NOVALIDATE} | DISABLE {NOVALIDATE | VALIDATE}} ]

How to Switch a Deferrable constraint to Deferred/Immediate -- Two Levels exist:

1. Session level will set all Deferrable constraints created as Initially Immediate to become Deferred with:

ALTER SESSION SET CONSTRAINTS = DEFERRED;

or in the opposite case will set all Deferrable constraints created as Initially Deferred to become Immediate with:

ALTER SESSION SET CONSTRAINTS = IMMEDIATE;

2. Transaction level will set either any individual constraint(s) or all of them to become Deferred or Immediate for the current transaction with:

SET CONSTRAINT {constraint1, constraint2 ... | ALL} {DEFERRED | IMMEDIATE};

Guidelines for Defining constraints:

1. Place the Indexes created by the Server (that enforce PK/UK constraints) in a separate tablespace from the table data by specifying USING INDEX TABLESPACE tbsp_name when you create a PK/UK constraint

2. If huge data loads are expected for a table with PK/UK constraints, they may be created as DEFERRABLE; the supporting Index will then be NONUNIQUE, meaning that if the constraint is disabled before the load, the supporting Index will NOT be dropped and after enabling the constraint it will still be active

Features of setting the constraint state to Enable Novalidate:

It puts NO LOCKS on a table and it is much faster than the Validate state (it does NOT check the existing data). In order for PK/UK constraints to be enforced this way they must have been created as DEFERRABLE. This command should be used when there is lots of DML activity going on; the command for this setting is:

ALTER TABLE [user.]table_name ENABLE NOVALIDATE CONSTRAINT constraint_name; OR

ALTER TABLE [user.]table_name MODIFY CONSTRAINT constraint_name ENABLE NOVALIDATE;

Features of setting the constraint state to Enable Validate:

It LOCKS the whole table if going from a DISABLE state (that is why you should first do ENABLE NOVALIDATE), and it is much slower than setting to Novalidate (it checks BOTH the incoming and the existing data). PK/UK constraints will be enforced in either Mode. This command should be used when NO or MINIMAL activity is going on; the command for this setting is:

ALTER TABLE [user.]table_name ENABLE [VALIDATE] CONSTRAINT constraint_name; OR

ALTER TABLE [user.]table_name MODIFY CONSTRAINT constraint_name ENABLE [VALIDATE];

Six-Step Recipe to identify rows that are involved in a constraint violation:

1. Create the table EXCEPTIONS in the SYS schema by running the script utlexcpt.sql

2. Execute the following command:

ALTER TABLE [user.]table_name ENABLE CONSTRAINT constraint_name EXCEPTIONS INTO EXCEPTIONS; --> will fail

3. Execute the following command:

ALTER TABLE [user.]table_name ENABLE NOVALIDATE CONSTRAINT constraint_name; --> will be successful if the Constraint was created as DEFERRABLE

4. Identify the invalid rows (by their ROWID) by using a Subquery method

5. Rectify the errors by supplying the invalid row's ROWID in the WHERE clause of your UPDATE statement

6. Truncate the Exceptions table and perform Step 2 again --> it will succeed

Important Data Dictionary views are: DBA_CONSTRAINTS, DBA_CONS_COLUMNS

Week 10:

Lesson 7: MANAGING USERS AND PROFILES

Lesson 7: SYSTEM and OBJECT PRIVILEGES , also MANAGING ROLES

Commands/Content:

Week 11:

Lesson 14: BACKUP AND RECOVERY CONCEPTS

Commands/Content:

Week 12:

Lesson 15: PERFORMING and MANAGING DATABASE BACKUPS

Assignment Two is due

Commands/Content:

Week 13:

Lesson 16: PERFORMING DATABASE RECOVERY

Commands/Content:

FINAL EXAM is on Friday, April 11th – you may bring ONE reference sheet, both sides, printed or handwritten