Abhisek Pan & Vijay S. Pai


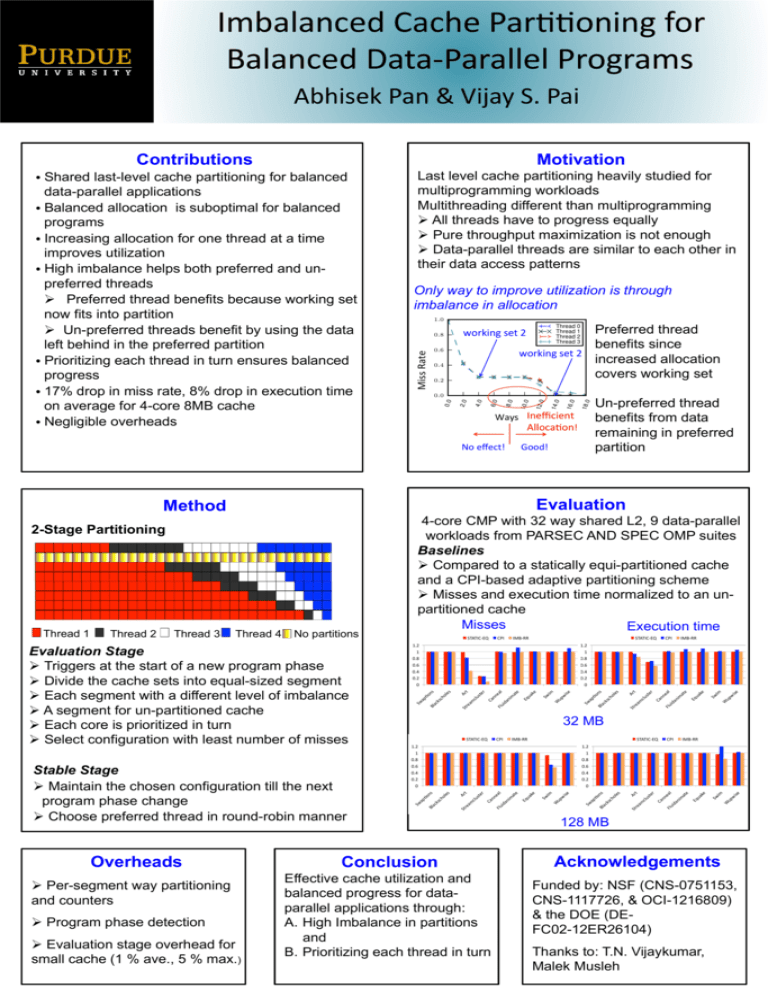

Imbalanced Cache Partitioning for Balanced Data-Parallel Programs

Contributions
• Shared last-level cache partitioning for balanced data-parallel applications
• Balanced allocation is suboptimal for balanced programs
• Increasing allocation for one thread at a time improves utilization
• High imbalance helps both preferred and un-preferred threads
  • Preferred thread benefits because working set now fits into partition
  • Un-preferred threads benefit by using the data left behind in the preferred partition
• Prioritizing each thread in turn ensures balanced progress
• 17% drop in miss rate, 8% drop in execution time on average for 4-core 8 MB cache
• Negligible overheads

Motivation
Last-level cache partitioning has been heavily studied for multiprogramming workloads. Multithreading is different from multiprogramming:
• All threads have to progress equally
• Pure throughput maximization is not enough
• Data-parallel threads are similar to each other in their data access patterns
The only way to improve utilization is through imbalance in allocation.

[Figure: miss rate (0.0 to 1.0) versus reuse distance (0.0 to 18.0), with the working set marked. Annotations: "Good!", "No effect!", "Inefficient ways allocation!". Preferred thread benefits since increased allocation covers working set. Un-preferred thread benefits from data remaining in preferred partition.]

2-Stage Partitioning
Evaluation Stage
• Triggers at the start of a new program phase
• Divide the cache sets into equal-sized segments
• Each segment with a different level of imbalance
• A segment for un-partitioned cache
• Each core is prioritized in turn
• Select configuration with least number of misses
Stable Stage
• Maintain the chosen configuration till the next program phase change
• Choose preferred thread in round-robin manner

[Figure: partition diagrams with ways assigned to Thread 0 through Thread 3, alongside an unpartitioned cache labeled "No partitions".]

Evaluation Method
4-core CMP with 32-way shared L2, 9 data-parallel workloads from the PARSEC and SPEC OMP suites
Baselines
• Compared to a statically equi-partitioned cache and a CPI-based adaptive partitioning scheme
• Misses and execution time normalized to an unpartitioned cache

[Figure: bar charts of normalized misses and normalized execution time (y-axis 0 to 1.2) for Swaptions, Blackscholes, Equake, Fluidanimate, Canneal, Streamcluster, Art, Swim, and Wupwise. Series: STATIC-EQ, CPI, IMB-RR. Surviving panel labels: 32 MB, 128 MB.]

Overheads
• Per-segment way partitioning and counters
• Program phase detection
• Evaluation stage overhead for small cache (1% avg., 5% max.)

Conclusion
Effective cache utilization and balanced progress for data-parallel applications through:
A. High imbalance in partitions
B. Prioritizing each thread in turn

Acknowledgements
Funded by: NSF (CNS-0751153, CNS-1117726, & OCI-1216809) & the DOE (DEFC02-12ER26104)
Thanks to: T.N. Vijaykumar, Malek Musleh
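
The two-stage scheme on the poster (an evaluation stage that picks the configuration with the fewest misses, then a stable stage that rotates the preferred thread round-robin) can be sketched roughly as below. This is a minimal illustration, not the authors' implementation. The 32 ways and 4 threads come from the poster. The function names, the candidate imbalance levels, the miss counts, the even split of the remaining ways among un-preferred threads, and the notion of a fixed "interval" between rotations are all assumptions made for the example.

```python
from typing import Dict, List, Optional

TOTAL_WAYS = 32   # from the poster: 32-way shared L2
NUM_THREADS = 4   # from the poster: 4-core CMP


def allocate_ways(preferred: int, preferred_ways: int,
                  total_ways: int = TOTAL_WAYS,
                  num_threads: int = NUM_THREADS) -> List[int]:
    """Give `preferred_ways` to one thread and split the rest evenly.

    The even split among un-preferred threads is an assumption; the
    poster only says that one thread receives a larger allocation.
    """
    rest = total_ways - preferred_ways
    others = num_threads - 1
    if preferred_ways <= 0 or rest < others:
        raise ValueError("every thread needs at least one way")
    base, extra = divmod(rest, others)
    alloc = []
    seen = 0  # number of un-preferred threads handled so far
    for t in range(num_threads):
        if t == preferred:
            alloc.append(preferred_ways)
        else:
            # Leftover ways go to the first `extra` un-preferred threads.
            alloc.append(base + (1 if seen < extra else 0))
            seen += 1
    return alloc


def choose_configuration(misses: Dict[Optional[int], int]) -> Optional[int]:
    """Evaluation stage: pick the candidate with the fewest misses.

    Keys are the preferred thread's way count for each cache segment;
    the key None stands for the un-partitioned segment.
    """
    return min(misses, key=misses.get)


def stable_stage(config: Optional[int],
                 intervals: int) -> List[Optional[List[int]]]:
    """Stable stage: keep `config`, rotating the preferred thread."""
    schedule: List[Optional[List[int]]] = []
    for i in range(intervals):
        if config is None:
            schedule.append(None)  # cache stays un-partitioned
        else:
            schedule.append(allocate_ways(i % NUM_THREADS, config))
    return schedule


# Hypothetical per-segment miss counts observed in the evaluation stage.
observed = {None: 1000, 8: 950, 20: 700, 26: 820}
best = choose_configuration(observed)
schedule = stable_stage(best, intervals=5)
```

With these made-up miss counts the sketch selects 20 ways for the preferred thread, so the first interval allocates `[20, 4, 4, 4]`, the second `[4, 20, 4, 4]`, and so on until every thread has been preferred once and the rotation wraps around.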
