The Architecture of Oracle

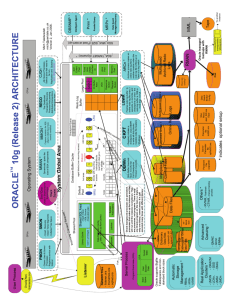

The Three Components of Oracle

The Oracle architecture has three major components:
• Memory Structures
• Processes
• Files

Memory structures store data, data structures and executable program code in the server’s memory. Processes run the actual database and perform tasks on behalf of the users connected to the database. Files store the database’s data, database structures and executable program code on the physical media of hard disk drives. These components interact with one another. To understand how Oracle works, we therefore need to understand how each one operates both on its own and together with the others.

A database system is made up of the database and the instance. An instance is the memory area together with the running programs that service that memory. An instance can be thought of as a picture of part of the data (the “active” data) in the database at a moment in time. This picture changes continually as data is added, queried, updated and deleted. The database is simply a collection of files that store the data. The database is “mounted”, or attached, to the instance. The database is the permanent representation of all the data, whereas the instance is a snapshot at a given moment in time.

In keeping with normal naming conventions, we will use the misnomer “database” to refer to the Oracle product. We will use the term “database files” to refer to what is technically the database.

Memory Structures

Memory structures in Oracle fall into two distinct areas. Some areas are shared by all users, and others are unique to, and used by, only one user. The System Global Area (SGA) is shared by all users, as its alternative name, the Shared Global Area, suggests. The Program Global Area (PGA) is the area of memory that is unique to an individual user (or, more precisely, to an individual process acting on behalf of one user).
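The split between one shared SGA and a private PGA per process can be pictured with a small object model. This is a conceptual sketch only. The class names, the file names and the `start_process` helper are hypothetical and do not correspond to any Oracle API. The model shows that every process sees the same SGA object while each process gets its own PGA.

```python
from dataclasses import dataclass, field


@dataclass
class SystemGlobalArea:
    """Memory shared by every process attached to the instance (simplified)."""
    shared_pool: dict = field(default_factory=dict)      # parsed SQL, dictionary info
    buffer_cache: dict = field(default_factory=dict)     # block id -> block contents
    redo_log_buffer: list = field(default_factory=list)  # change records


@dataclass
class ProgramGlobalArea:
    """Memory private to a single process (e.g. its session state)."""
    owner: str
    session_state: dict = field(default_factory=dict)


@dataclass
class Instance:
    """An instance: one shared SGA plus one PGA per process."""
    sga: SystemGlobalArea = field(default_factory=SystemGlobalArea)
    pgas: dict = field(default_factory=dict)
    database_files: list = field(default_factory=list)  # empty until mounted

    def mount(self, files):
        # "Mounting" attaches the database (a collection of files) to the instance.
        self.database_files = list(files)

    def start_process(self, name):
        # Every process sees the same SGA but gets a PGA of its own.
        self.pgas[name] = ProgramGlobalArea(owner=name)
        return self.sga, self.pgas[name]


inst = Instance()
inst.mount(["system01.dbf", "users01.dbf"])        # hypothetical file names
sga_a, pga_a = inst.start_process("server_a")
sga_b, pga_b = inst.start_process("server_b")
sga_a.shared_pool["select * from emp"] = "parsed"   # visible to both processes
pga_a.session_state["sort_area"] = [3, 1, 2]        # visible only to server_a
print(sga_b.shared_pool, pga_b.session_state)       # {'select * from emp': 'parsed'} {}
```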
The System Global Area

The System Global Area, or SGA as it is commonly called, is itself divided into three main areas (see diagram below):
• The Shared Pool
• The Database Buffer Cache
• The Redo Log Buffer

The Shared Pool contains SQL statements that have already been submitted to the database, together with the data dictionary. Running an SQL statement involves many stages. Oracle does not repeat each stage for a statement that has already been run. Instead, the information from these stages (e.g. “does this user have the right to access this data?”) is stored in the Shared Pool and can be reused. Data dictionary information is also stored in this area. The data dictionary contains information about the storage of the data, not the data itself (e.g. “what type of data is stored in the second column?” or “how many rows are in the sales table?”).

[Figure: The System Global Area, showing the Shared Pool, the Database Buffer Cache and the Redo Log Buffer]

The Database Buffer Cache stores the data currently (or recently) being accessed by users. If a table is in constant use for queries, it makes sense to keep its data in memory rather than repeatedly reading it from disk.

The Redo Log Buffer supports one of Oracle’s most valuable capabilities: the ability to recover from a database crash. As changes are made to the data in the database, they are recorded in the Redo Log Buffer. It is not possible to back up the database continuously. Without the redo log, a system problem would therefore mean that we could only recover the database to the time of the last backup (one week ago, one day ago, one hour ago), and everything changed since then would be lost. In a bank, for example, this would be totally unacceptable. The Redo Log Buffer (working along with the redo log files, discussed later) holds a record of all changes made to the database.
Once we have restored the data from our backup tapes, the database is consistent with its state at a specific point in time (let us say two days ago). The missing changes can be found in the Redo Log Buffer (and/or the redo log files) and reapplied to the database, i.e., rolled forward.

The Program Global Area

The Program Global Area, PGA, is an area in memory dedicated to a single process, as opposed to the SGA, which is shared by all users. That process is either the server process acting on behalf of a user’s process, or a background process.

Processes

Memory has areas for all users and areas for individual users. Processes follow the same pattern: some serve the database as a whole, and others are dedicated to a specific user. Processes are the jobs or tasks that carry out work in the computer’s memory. They are divided into three types:
• Background Processes
• User Processes
• Server Processes

Background Processes

When an Oracle instance is started, an assortment of background processes is spawned to support database activity. Background processes service the System Global Area. The SGA needs processes to transfer information to and from it, and the background processes fill this role. There are nine principal background processes: PMON, LGWR, ARCH, DBWR, LCKn, CKPT, SNPn, SMON and RECO.

When a user process fails, it can keep a hold on resources, preventing other processes from using them. The Process Monitor process (PMON) recovers the failed process and frees these resources.

The Redo Log Buffer in memory, as discussed previously, stores changes made to the database to enable complete recovery after a crash. However, the server has a finite amount of memory, and memory is cleared during a server crash. It is therefore necessary to store the changes to the database on disk, in the online redo log files. The Log Writer process (LGWR) copies the data in the redo log buffer in memory to these files on disk.
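A toy model can illustrate why LGWR’s copy to disk matters for rolling forward. This is a minimal sketch under simplifying assumptions. Each redo record is a `(key, new_value)` pair, whereas real redo records describe block-level changes. The row names are hypothetical. The sketch restores a backup and reapplies only the changes that LGWR had already written to the redo log file. The change still sitting in the in-memory buffer is lost with the crash.

```python
import copy


class MiniDatabase:
    """Toy model: data, an in-memory redo log buffer, and an on-disk redo log file."""

    def __init__(self, rows):
        self.data = dict(rows)        # stands in for the data files
        self.redo_log_buffer = []     # change records in memory (lost in a crash)
        self.redo_log_file = []       # change records LGWR has written to disk

    def update(self, key, value):
        # Record the change in the redo log buffer, then apply it.
        self.redo_log_buffer.append((key, value))
        self.data[key] = value

    def lgwr_flush(self):
        # LGWR: copy the redo log buffer to the redo log file on disk.
        self.redo_log_file.extend(self.redo_log_buffer)
        self.redo_log_buffer.clear()


def roll_forward(backup, redo_records):
    """Restore the backup, then reapply the logged changes in order."""
    restored = copy.deepcopy(backup)
    for key, value in redo_records:
        restored[key] = value
    return restored


db = MiniDatabase({"acct_1": 100, "acct_2": 50})   # hypothetical rows
backup = copy.deepcopy(db.data)                     # the "backup tape"
db.update("acct_1", 80)
db.update("acct_2", 70)
db.lgwr_flush()                                     # these changes are now on disk
db.update("acct_1", 0)                              # still only in memory

# Suppose the data files are lost and a crash clears memory:
print(roll_forward(backup, db.redo_log_file))       # {'acct_1': 80, 'acct_2': 70}
```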
Only a limited amount of disk space is made available for the redo log files. They operate in a circular fashion: when all the redo logs are full, the sequence restarts by overwriting the first again, then the second, and so on. The redo information required to recover completely from a system crash may therefore have been overwritten. Oracle provides the option to archive these redo log files to tape or to other disks. The process that copies the data from the redo log files to archived files is the Archiver process (ARCH).

The Database Buffer Cache, as mentioned previously, stores recently used data, which removes the need to reload all data from disk. It is therefore beneficial to keep recently used data in memory. It is also necessary, however, to ensure that the buffer cache has enough space for new data and that modified data is saved to disk. This is the role of the Database Writer (DBWR).

Locks are needed to ensure that data being used by one set of processes is not inadvertently modified by another set of processes. The Lock process (LCKn) provides this locking functionality in the parallel server environment.[1]

Database files have headers that contain information about the storage of data in the file. These headers are updated by the Checkpoint process (CKPT). The name comes from the fact that these updates occur at checkpoints. A checkpoint can occur when a redo log file is full and/or at a configurable time interval. LGWR can also provide this functionality; CKPT is usually used only if LGWR is overloaded.

Snapshots can be considered as copies of data tables. A simple snapshot is a read-only copy of an entire table or a set of its rows. A complex snapshot is a read-only copy of a collection of tables, views, or their rows, built using joins, grouping and selection criteria. In a distributed environment, remote locations may need to query the master database.
With snapshots, a copy of the data is available locally. The Snapshot process (SNPn) is responsible for refreshing the snapshot so that it matches the original table.

If the entire database, rather than an individual process, fails, then the System Monitor process (SMON) is responsible for its recovery, i.e., the recovery of transactions.

Oracle also supports distributed databases. A distributed database can be thought of as two or more servers having copies of the same data. Transaction failures in this environment are beyond the abilities of SMON, so this role is given to a separate process: the Recoverer process (RECO).

User and Server Processes

When a user connects to the Oracle database via an application such as SQL*Plus, a user process is created to run the user’s application code. Each user attached to the database has a user process assigned. The user process is not allowed to access the SGA, yet SQL commands need SGA access in order to execute. Server processes provide this access. A server process executes the user’s SQL code and passes data to and from the database buffer cache in the SGA.

Files

Files on disk store the data that makes up the database. Files, though, store more than just the data and the structures around it. Oracle uses four “types” of file:
• Data Files
• Control Files
• Redo Log Files
• Archived Files

Data files, as mentioned previously, store the data and the logical structures required for that data. It would be incorrect, however, to treat that statement as a complete description of data files. They are also used for indexes, rollback segments, the data dictionary, application data, and other items discussed later. Data files are grouped into logical units called tablespaces, which will be discussed later. Oracle requires only one data file, but data files are fixed in size.
Therefore, to increase storage capacity, more data files must be added. Each data file is associated with only one database (the alternative would be different databases competing for the same resources). Data can be read from the data files into memory and updated, and new data can be written into the data files.

Control files (there is one control file, with one or more copies) store information about the physical layout of the database. Essentially, they record the names and locations of the files that make up the database.

Redo log files were discussed when describing the Log Writer (LGWR) process. They provide a permanent medium for the redo log buffer in memory: LGWR copies the contents of the redo log buffer to the redo log files. The redo log files therefore store the changes made to the database. There must be at least two redo log files. Redo log files are usually organized into groups, and the members of a group are mirrored copies. If one redo log file is lost or destroyed, Oracle can use another file in the same group.

Archived files are used as backups. They can be backups of the actual data (and data structures) or backups of the redo log files. They are used to restore the database in the event of a crash, failure or loss of data. The term “archived logs” generally refers to the backups of the redo log files.

When we distinguished the database from the instance, we stated that the database is simply a collection of files. This is correct, but not every file belongs to the database. The parameter file is not part of the database. It specifies such things as how big the instance will be and how the instance will be used. It is a collection of parameters whose values affect the instance. One example is shared_pool_size, which determines the size of the shared pool. A default parameter file comes with the standard installation of Oracle. It is called init.ora, or a variant thereof.
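A parameter file is essentially a list of `name = value` settings. The sketch below reads such a file into a dictionary. It assumes a simplified subset of the format: one setting per line, `#` starting a comment, and blank lines ignored. The real init.ora syntax has more features than this, and the sample values are hypothetical. Parameter names are lower-cased in this sketch as a convenience. `shared_pool_size` and `db_name` are real parameter names.

```python
def parse_parameter_file(text):
    """Parse a simplified init.ora-style text into a {name: value} dict.

    Simplified subset (an assumption, not Oracle's full syntax): one
    'name = value' per line, '#' starts a comment, blank lines are ignored.
    """
    params = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()   # drop comments and whitespace
        if not line:
            continue
        name, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"expected 'name = value', got {raw_line!r}")
        params[name.strip().lower()] = value.strip()
    return params


SAMPLE = """
# Hypothetical values, for illustration only
db_name = demo
shared_pool_size = 52428800   # bytes reserved for the shared pool
"""

params = parse_parameter_file(SAMPLE)
print(int(params["shared_pool_size"]) // (1024 * 1024), "MB")   # 50 MB
```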
The Logical Layout of Oracle

We have already seen that Oracle uses files on disk as a permanent medium for its data, code, structures, etc. It may seem strange, then, that Oracle actually has no inbuilt knowledge of disks. It doesn’t know where the files are stored and, in its normal day-to-day running, has no dealings with files (although it knows of their existence). Files and disks are physical structures that belong to the operating system level, whereas Oracle works with logical structures. We will now look at these logical structures.

Tablespaces

[Figure: Tablespaces and Files, showing Tablespace A and Tablespace B and the data files (Data File 1, Data File 2, Data File 3) that make them up]

The diagram above shows the relationship between tablespaces and data files. Oracle has a logical structure called a tablespace, which is not visible to the operating system. Tablespaces are groupings of data files. Each tablespace requires at least one data file, and Oracle requires at least one tablespace to function. Tablespaces contain the various other types of object that store user data. Tablespaces are the largest logical structure in Oracle. The following formulas apply:

Size of database = sum (size of tablespaces)
Size of a tablespace = sum (size of the tablespace’s data files)

When Oracle is installed, one tablespace is present: the System Tablespace. It contains the data dictionary, a collection of tables that describe the database. The data dictionary stores information such as which files belong to which tablespace, which users may access which tables, and which indexes are defined on which tables.

Oracle uses tablespaces as an administrative tool that lets us control storage capacity. Data files are fixed in size, so the only way to increase the size of a tablespace is to add one or more data files. Tablespaces can thus be used as a mechanism for controlling disk utilization.
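The two size formulas, and the rule that a tablespace grows only by gaining data files, can be checked with a few lines of code. This is a minimal sketch. The tablespace names and sizes are hypothetical, and data files are treated as fixed in size, as the text describes.

```python
from dataclasses import dataclass, field


@dataclass
class Tablespace:
    name: str
    datafile_sizes_mb: list = field(default_factory=list)  # each data file has a fixed size

    def size_mb(self):
        # Size of a tablespace = sum(size of the tablespace's data files)
        return sum(self.datafile_sizes_mb)

    def add_datafile(self, size_mb):
        # Data files are fixed in size, so growth means adding another file.
        self.datafile_sizes_mb.append(size_mb)


def database_size_mb(tablespaces):
    # Size of database = sum(size of tablespaces)
    return sum(ts.size_mb() for ts in tablespaces)


system = Tablespace("SYSTEM", [100])        # hypothetical sizes
users = Tablespace("USERS", [200, 200])
print(database_size_mb([system, users]))    # 500
users.add_datafile(300)
print(database_size_mb([system, users]))    # 800
```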
Tablespaces can be online (available for use) or offline (unavailable for use). This gives the Database Administrator the ability to make part of the database unavailable if necessary. One use of this is to allow a tablespace to be backed up, because backing up an online tablespace could cause problems. One caveat is that the System tablespace cannot be taken offline. It contains the data dictionary, which must be available for the database to function.

Components of a Tablespace

We have already mentioned that tablespaces are the largest of Oracle’s logical structures. Tablespaces themselves contain logical structures, which we will now examine. From largest to smallest, the logical structures that make up a tablespace are:
• Segments
• Extents
• Blocks

Segments

A segment stores an Oracle object. Objects are the units of storage we generally refer to when we discuss Oracle. When users talk about information storage in Oracle, they don’t usually discuss tablespaces, segments or data files. They talk about tables and indexes. Tables and indexes are Oracle objects, along with other items to be discussed. Since a segment stores an object, segments in effect store the tables and indexes. An object belongs to a specific user, and the term schema refers to all the objects owned by a specific user.

There are four different types of segment, each storing a different type of object. This does not mean that Oracle has only four types of object, because not all objects are stored in segments. A synonym, for example, is an Oracle object that is basically an alias for another object. Suppose user Scott has access to user John’s phone_number table. Scott would then refer to the table by its qualified name, john.phone_number. Many Oracle users prefer to give tables like this an alias, called a synonym. Scott could create a synonym for the table john.phone_number called “phoneno”.
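In SQL, Scott would create the synonym with `CREATE SYNONYM phoneno FOR john.phone_number;`. After that, a reference to `phoneno` is looked up in the data dictionary and redirected to the real table. The sketch below mimics that lookup with a dictionary. It is heavily simplified and hypothetical: real Oracle name resolution handles more cases, such as public synonyms, which this sketch ignores.

```python
# Hypothetical, heavily simplified data dictionary entry:
# (owner of the synonym, synonym name) -> fully qualified target object.
SYNONYMS = {("scott", "phoneno"): "john.phone_number"}


def resolve(user, name):
    """Return the fully qualified object that `user` refers to as `name`."""
    if "." in name:                            # already qualified: schema.object
        return name
    target = SYNONYMS.get((user, name))
    if target is not None:                     # a synonym: a pointer, no data of its own
        return target
    return f"{user}.{name}"                    # otherwise: an object in the user's own schema


print(resolve("scott", "phoneno"))             # john.phone_number
print(resolve("scott", "john.phone_number"))   # john.phone_number
print(resolve("scott", "emp"))                 # scott.emp
```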
Apart from simplifying references to objects, synonyms can be used to hide the storage details of tables and/or who owns them. phoneno is an Oracle object, but it doesn’t contain actual data. It is simply a link to another table, and the link is stored in the data dictionary. Because phoneno holds no data, it does not require space on disk (apart from the small amount required in the data dictionary). Therefore, no segment is assigned to it.

The four types of segment are:
• Data segment
• Index segment
• Rollback segment
• Temporary segment

Data segments store the rows of tables. Index segments store table indexes, and each index in the database is stored in an index segment.

As changes are made to data in the database, rollback segments hold the old values. This ensures that an update by one user will not affect a query from another user. The query can use the old value (the value prior to the update or insert), which guarantees an accurate picture of the data as of the moment the data was requested.

The rollback segment also supports a very useful Oracle function: (explicit) rollback. A user may have made several inserts, updates and deletes to a table, but these changes are not yet permanent. Changes become permanent when the commit command is executed. Alternatively, the user may decide not to make the changes permanent and issue the rollback command. This returns the data items to the values they had before the changes. The original values are stored in the rollback segment (or in memory). A transaction is a logical unit of work that comprises one or more SQL statements executed by a single user. A transaction ends when it is explicitly committed or rolled back.

Temporary segments can be considered as an extension of memory. If a large sort has to be performed, it will usually be performed in memory.
Sometimes, however, there is not enough memory to perform the sort, e.g., when a very large table must be sorted. The sort is then performed on disk, in the temporary segment. SQL statements that may need a temporary segment include select … order by and select … union.

Access to the Data

The first stage in accessing data is processing the submitted SQL statement. This involves three steps, performed in the Shared Pool area of the SGA:
• Validating the syntax of the SQL statement
• Checking the data dictionary cache for the user’s access permissions on the data (these two steps are known as parsing)
• Determining the statement’s execution plan

Utilizing the Shared SQL Area

The shared SQL area contains the parse tree and execution plan for an SQL statement. To execute a statement, Oracle first checks the Shared SQL Area, the part of the Shared Pool where previously executed SQL is stored. If an identical statement is found, the statement can be executed without reparsing. The statement must be identical to the one found in the Shared SQL Area, because Oracle is very literal:

Select * from emp where ename='John';

is not the same as

Select * from emp where ename='john';

Similarly,

Select * from emp where ename='John';

is not the same as

Select *  from emp  where ename='John';

because spaces and tabs are taken into account. If no identical statement is found in the Shared SQL Area, the statement must be parsed and an execution plan derived.

The Database Buffer Cache

The database buffer cache contains database records that are either waiting to be written to disk or available for reading. When the SQL statement is executed, the required data is read into the database buffer cache if necessary. The data may already be there, in which case Oracle uses it. The database buffer cache stores two types of buffer, each the size of an Oracle block: data blocks and rollback blocks.
Data blocks store unchanged data (data that is only being read) and changed data (data that has been updated). Rollback blocks hold the “before images” of data. When data is updated, the data block holds the new values while the rollback block holds the values as they were before the change. These before images allow the changes to be rolled back.

As data is requested, it is loaded into the buffer cache from disk. This cannot continue indefinitely, as eventually there would be no space left in the buffer cache for new loads. To counteract this, Oracle has a mechanism, based on a least recently used (LRU) list, for determining which buffers are no longer needed. These buffers can be overwritten by newly loaded data.

A buffer can be in one of three states: free, pinned or dirty. A free buffer is either empty or holds data that matches the data on disk. In other words, the data has not been changed, or has been changed and then saved to disk. It may seem strange that a buffer can contain data and still be free. “Free” is probably not the best description; “freeable” would be more apt, even if the word is not standard. Essentially, overwriting such a buffer causes no problem, because the data can be read from disk again if it is needed. A buffer that is currently in use is pinned. Dirty buffers contain data that has been changed but not yet written to disk. This data must not be overwritten, because modified data would then be lost before being saved. Dirty buffers are moved to a list called the Dirty List, where they wait to have their data saved to disk.

Logging Transactions

One of the Oracle database’s most useful features is its ability to recover from a crash. The crash could be a server crash, an Oracle crash, a disk failure, or any of the other unpredictable events that a Database Administrator/System Administrator must contend with.
Oracle is able to recover from these situations because it logs transactions. Transactions are logged in the redo log buffer, the redo log files or, possibly, the archived log files. A commit does not force Oracle to write dirty buffers to disk. If the data files were the only record, committed data could therefore be lost in a system crash. Memory is volatile, and the only way to guarantee that data is permanent is to save it to disk (although even this is not a 100% guarantee). The redo log files fill this gap.

Recovery of a Datafile

As described earlier, LGWR writes data from the redo log buffer to the redo log files. This data comprises the changes made to the database (the before and after images). If a system failure or crash occurs, this data is used to perform a recovery. “A transaction is not considered complete until the write to the redo log file has completed.” LGWR copies the data in the redo log buffer to the redo log files at predetermined intervals, after a commit, and when the redo log buffer is full (if commits are infrequent, the log buffer can fill up).

When one redo log file is full, Oracle switches to the next redo log file. This switch involves a checkpoint: LGWR signals a checkpoint so that DBWR writes the modified (dirty) buffers to disk. Each redo log file is given a sequence number. At each switch, the sequence number is incremented and allocated to the next redo log file. This sequence number is stored in all database files and used as a reference point for recovery.

As an example, suppose that the disk containing one of our tablespaces fails when the sequence number is 50, and that the last system backup was taken when the sequence number was 45. Oracle then knows that two things must be restored: the last backup, which brings us to sequence number 45, and the redo log files with sequence numbers 45 to 50. We can see this with the example shown in the diagram above. Note that this example requires six redo log files (sequence numbers 45 through 50) to be restored.
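The count of six follows from the inclusive range of sequence numbers. A minimal sketch of that arithmetic is shown below. The function name is hypothetical. It assumes, as the example does, that the backup was taken while log 45 was current, so log 45 itself is needed along with every log up to the one current at the failure.

```python
def logs_needed(backup_seq, failure_seq):
    """Redo log sequence numbers to reapply after restoring a backup.

    Assumes the backup was taken while log `backup_seq` was current, so that
    log is needed, as is every log up to the one current at the failure.
    """
    if failure_seq < backup_seq:
        raise ValueError("the failure cannot precede the backup")
    return list(range(backup_seq, failure_seq + 1))


needed = logs_needed(backup_seq=45, failure_seq=50)
print(needed, len(needed))   # [45, 46, 47, 48, 49, 50] 6
```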
More than likely, only two or three redo log files, or redo log groups, exist on disk. So where are the other redo log files? There are two possibilities: they have been overwritten, or they have been saved to tape (or another disk). Which possibility applies depends on the mode in which Oracle is operating: Archive Log Mode or No Archive Log Mode. In Archive Log Mode, Oracle backs up each redo log file before it is overwritten (Oracle starts writing to the first redo log file again after all the others are full). The ARCH process is responsible for this function. It is invoked at a checkpoint, that is, when LGWR switches to the next redo log file because the current one is full.

In the diagram above, the DBWR process has kept the data in the data files up to date at sequence number 50. Let us say that data file one is corrupted. We can restore the data file from backup, but the backup is only up to date at sequence number 45. The data for sequence numbers 45 to 49 is available on tape (or on disk), as saved by the ARCH process. The data for sequence number 50 is available from the online redo log, as kept up to date by LGWR. We can therefore restore the data file and bring it completely up to date.
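The difference between the two modes can be simulated with a handful of circular log groups. This is a toy sketch under stated assumptions. It uses three groups, which is a hypothetical choice. Archiving happens at each log switch, copying the log that has just filled, and the class and method names are illustrative only. After fifty switches, the sketch reports where the redo for sequence numbers 45 to 50 can still be found. With only three groups, logs 48 and 49 also still sit in online groups, although archived copies of them exist too. In No Archive Log Mode, the older logs have simply been overwritten.

```python
class RedoLogGroups:
    """Toy model of circular online redo log groups, with optional archiving."""

    def __init__(self, n_groups, archive_mode):
        self.n_groups = n_groups
        self.archive_mode = archive_mode
        self.online = {}          # group index -> sequence number it currently holds
        self.archived = set()     # sequence numbers copied off by ARCH
        self.current_seq = 0      # no log in use yet

    def log_switch(self):
        # The current log is full; in Archive Log Mode, ARCH copies it off
        # before the circular reuse can overwrite it.
        if self.archive_mode and self.current_seq > 0:
            self.archived.add(self.current_seq)
        self.current_seq += 1
        group = (self.current_seq - 1) % self.n_groups
        self.online[group] = self.current_seq   # overwrites the oldest log in this group

    def source_of(self, seq):
        """Where the redo for sequence number `seq` can be found, if anywhere."""
        if seq in self.online.values():
            return "online"
        if seq in self.archived:
            return "archive"
        return "lost"


for mode in (True, False):
    logs = RedoLogGroups(n_groups=3, archive_mode=mode)
    for _ in range(50):                        # reach sequence number 50
        logs.log_switch()
    print(mode, [logs.source_of(s) for s in range(45, 51)])
```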