Electronic Versus Manual Data Processing

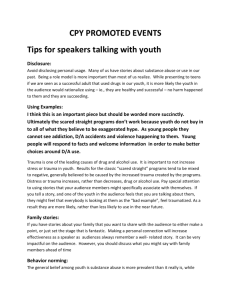

RESEARCH METHODS & STATISTICS

Electronic Versus Manual Data Processing: Evaluating the Use of Electronic Health Records in Out-of-hospital Clinical Research

Craig D. Newgard, MD, MPH, Dana Zive, MPH, Jonathan Jui, MD, MPH, Cody Weathers, and Mohamud Daya, MD, MS

Abstract

Objectives: The objective was to compare case ascertainment, agreement, validity, and missing values for clinical research data obtained, processed, and linked electronically from electronic health records (EHR) compared to “manual” data processing and record abstraction in a cohort of out-of-hospital trauma patients.

Methods: This was a secondary analysis of two sets of data collected for a prospective, population-based, out-of-hospital trauma cohort evaluated by 10 emergency medical services (EMS) agencies transporting to 16 hospitals, from January 1, 2006, through October 2, 2007. Eighteen clinical, operational, procedural, and outcome variables were collected and processed separately and independently using two parallel data processing strategies by personnel blinded to patients in the other group. The electronic approach included EHR data exports from EMS agencies, reformatting, and probabilistic linkage to outcomes from local trauma registries and state discharge databases. The manual data processing approach included chart matching, data abstraction, and data entry by a trained abstractor. Descriptive statistics, measures of agreement, and validity were used to compare the two approaches to data processing.

Results: During the 21-month period, 418 patients underwent both data processing methods and formed the primary cohort. Agreement was good to excellent (kappa = 0.76 to 0.97; intraclass correlation coefficient [ICC] = 0.49 to 0.97), with exact agreement in 67% to 99% of cases and a median difference of zero for all continuous and ordinal variables.
The proportions of missing out-of-hospital values were similar between the two approaches, although electronic processing generated more missing outcomes (87 of 418, 21%, 95% confidence interval [CI] = 17% to 25%) than the manual approach (11 of 418, 3%, 95% CI = 1% to 5%). Case ascertainment of eligible injured patients was greater using electronic methods (n = 3,008) compared to manual methods (n = 629).

Conclusions: In this sample of out-of-hospital trauma patients, an all-electronic data processing strategy identified more patients and generated values with good agreement and validity compared to traditional data collection and processing methods.

ACADEMIC EMERGENCY MEDICINE 2012; 19:217–227 © 2012 by the Society for Academic Emergency Medicine

From the Center for Policy and Research in Emergency Medicine, Department of Emergency Medicine, Oregon Health & Science University (CDN, DZ, JJ, CW, MD), Portland, OR; the Multnomah County Emergency Medical Services, Multnomah County Health Department (JJ), Portland, OR; and Tualatin Valley Fire and Rescue (MD), Aloha, OR.

Received May 9, 2011; revisions received July 25 and August 2, 2011; accepted August 9, 2011. Presented at the Society for Academic Emergency Medicine annual meeting, June 2010, Phoenix, AZ. This project was supported by grants from the Robert Wood Johnson Foundation Physician Faculty Scholars Program; the National Heart, Lung, and Blood Institute (#5-U01-HL077873-01); the American Heart Association; and the Oregon Clinical and Translational Research Institute (grant # UL1 RR024140). These analyses were carried out by the investigators; neither the Clinical Trials Center nor the Publications Committee of the Resuscitation Outcomes Consortium takes responsibility for the analyses and interpretation of results. The authors have no potential conflicts of interest to disclose. Supervising Editor: James Holmes Jr., MD.

Address for correspondence and reprints: Craig D. Newgard, MD, MPH; e-mail: newgardc@ohsu.edu.

doi: 10.1111/j.1553-2712.2011.01275.x; ISSN 1069-6563; PII ISSN 1069-6563583

The amount of funding allocated to scientific research and development in the United States is large and has continued to climb over the past 50 years [1]. A substantive portion of this funding is spent on the collection and processing of data. While manual record abstraction and data entry have been standard practice for collecting clinical research information, electronic health records (EHR) and electronic data processing methods have been suggested as more efficient mechanisms for conducting research, quality assurance, and epidemiologic surveillance [2–4]. Use of EHR is being actively promoted in the United States [5]. However, it remains unclear whether clinical research data obtained and processed directly from EHR yield sufficient data quality compared to manual record abstraction. While EHR and electronic processing would seem to have multiple advantages over more traditional approaches, studies directly comparing the reliability (consistency of measurements, precision), validity (approximation of “true” values, accuracy), and case ascertainment (identification of all eligible subjects) for different data processing strategies are limited.
Several studies have suggested cost savings, reduction in source-to-database error rates, and good agreement with data abstraction values when using electronic methods [3,4,6,7]. Other research has demonstrated the validity and efficiency of probabilistically matching large electronic data sets [8–10]. While these studies suggest a benefit of EHR and electronic methods in clinical research, none of them provides a direct comparison of a maximized EHR approach (all-electronic processing, plus probabilistic linkage) with a more traditional approach (record abstraction, manual chart matching, and data entry) for collecting clinical research data.

In this study, we compare and contrast several aspects of data collection and processing among a cohort of out-of-hospital trauma patients using two separate strategies: all-electronic data processing versus a more conventional “manual” data processing approach. We evaluated these strategies using three aspects of data collection and processing: 1) data capture (case ascertainment), 2) data quality (agreement, validity), and 3) differences in the proportion of missing values. We hypothesized that an all-electronic data collection and processing strategy would yield broader capture of eligible study patients and similar data quality when compared to a more conventional approach.

METHODS

Study Design

This was a secondary analysis comparing two separate and independent strategies (manual vs. electronic) for collecting and processing clinical research data for a population-based, out-of-hospital, prospective cohort of trauma patients. Each data processing strategy was used for a separate and independent study, which ran in parallel on the same population of trauma patients. Personnel collecting and processing data for each strategy were blinded to patients in the other group and to the study objective during data processing.
The institutional review boards at all participating hospitals reviewed and approved this project and waived the requirement for informed consent.

Study Setting and Population

This study was performed with 10 emergency medical services (EMS) agencies (four private ambulance transport agencies, six fire departments) and 16 hospitals (three trauma centers, 13 community and private hospitals) in a four-county region of Northwest Oregon and Southwest Washington. The region operates a dual-advanced life support EMS system, where the majority of 9-1-1 responses are served by both fire (first responder) and ambulance (transport) agencies, typically generating two EMS charts for each patient. In this project, we compiled all available sources of EMS data for each patient in both the electronic and the manual processing strategies, as illustrated in Data Supplements S1 (electronic approach) and S2 (manual approach, available as supporting information in the online version of this paper). The study was conducted at one site participating in a multisite out-of-hospital research network (Resuscitation Outcomes Consortium [ROC]) that has been described in detail elsewhere [11].

The primary cohort consisted of consecutive injured children and adults requiring activation of the emergency 9-1-1 system within the four-county region, meeting predefined values for physiologic compromise, undergoing separate and independent data collection by manual and electronic methods (detailed below), with matched records available for each data collection strategy (n = 418). Field physiologic compromise (at any point during out-of-hospital evaluation) was defined as: systolic blood pressure (sBP) ≤ 90 mmHg, respiratory rate of <10 or >29 breaths/min, Glasgow Coma Scale (GCS) score ≤ 12, advanced airway intervention, or traumatic death in the field [12–15]. Persons meeting the above criteria were included in the study regardless of field disposition or outcome.
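As an illustration only, the physiologic inclusion criteria above can be written as a simple predicate applied to every set of field observations recorded for a patient. This is a minimal Python sketch, not the query used in the study; the `FieldObservation` record and its field names are hypothetical, and treating a missing value as "criterion not met" is an assumption.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class FieldObservation:
    """One set of out-of-hospital observations (hypothetical field names)."""
    sbp_mmhg: Optional[int] = None   # systolic blood pressure
    resp_rate: Optional[int] = None  # breaths/min
    gcs: Optional[int] = None        # Glasgow Coma Scale score (3-15)
    advanced_airway: bool = False    # advanced airway intervention
    died_in_field: bool = False      # traumatic death in the field


def observation_meets_criteria(obs: FieldObservation) -> bool:
    """True if this single observation shows physiologic compromise."""
    if obs.sbp_mmhg is not None and obs.sbp_mmhg <= 90:
        return True
    if obs.resp_rate is not None and (obs.resp_rate < 10 or obs.resp_rate > 29):
        return True
    if obs.gcs is not None and obs.gcs <= 12:
        return True
    return obs.advanced_airway or obs.died_in_field


def patient_is_eligible(observations: Iterable[FieldObservation]) -> bool:
    """Eligible if compromise is present at any point during field care."""
    return any(observation_meets_criteria(o) for o in observations)


normal = FieldObservation(sbp_mmhg=124, resp_rate=18, gcs=15)
hypotensive = FieldObservation(sbp_mmhg=88, resp_rate=20, gcs=15)
print(patient_is_eligible([normal]))               # False
print(patient_is_eligible([normal, hypotensive]))  # True
```

Evaluating every observation, rather than only the first, mirrors the "at any point during out-of-hospital evaluation" wording of the definition.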
The dates for enrollment included a 21-month time period with concurrent data processing efforts (January 1, 2006, through October 2, 2007). Patients meeting the study inclusion criteria, but not included in the primary cohort (e.g., due to unmatched records or differences in case ascertainment), were also tracked to further describe differences between the data collection strategies.

Study Protocol

There were two methods of case identification and data collection performed separately, but in parallel, on the same group of out-of-hospital trauma patients. All EMS agencies included in this study had EHR systems in place and used electronic processes for dispatch, charting, and billing. All source files were obtained from these EHR systems. The “manual” strategy followed traditional research processes for case identification, data processing (including data abstraction and data entry from printed hard copy records), and outcome matching. The “electronic” strategy involved data queries, data export routines, database management, and record linkage.

Manual Data Processing. Manual data processing was based on patients enrolled in the ROC epidemiologic out-of-hospital trauma registry (the “ROC Epistry-Trauma”) [16]. Eligible patients were identified primarily by monthly review of participating EMS agency trauma records and supplemented by review of hospital trauma logs by research staff. Case ascertainment began by requesting all EMS records for patients entered into the trauma system (i.e., those meeting standard field trauma triage criteria), as all injured patients with physiologic compromise meet “mandatory” trauma triage guidelines for entry into the trauma system in this region. Records constituted hard copy versions of the EMS chart (typically converted from an agency’s EHR into a PDF file and then printed) that were sent in either hard copy or PDF format to our research staff.
Because EMS providers from multiple agencies care for the same patients in this system, all available records from fire and ambulance agencies were manually matched to provide a comprehensive assessment of out-of-hospital information. Discrepancies between records were resolved by a trained data abstractor, who then hand-entered the data into Web-based electronic data forms using a standardized manual of operations. Outcomes were collected by matching EMS records to hospital records, locating these records within respective hospitals, and abstracting the hospital data into the Web-based forms.

The research staff involved in manual data processing included: a data manager with extensive experience working with EMS data systems, EMS record queries, chart matching, and hospital chart reviews; a research assistant for reviewing EMS records and hospital trauma logs, plus matching EMS records between agencies; and a research associate with over 15 years of experience reviewing and abstracting EMS and hospital records. Quality assurance processes included data element range and consistency checks in the Web-based data entry forms, dual data entry, chart re-review for a randomly selected sample of records, and annual site visits by members of the ROC Data Coordinating Center to review randomly selected study records, data capture processes, and local data quality efforts. There were 629 patients identified and processed in the “manual processing” sample.

Electronic Data Processing. Electronic data processing was undertaken for the same sample of patients in a separate, but parallel project investigating field trauma triage practices in the region. Injured patients were identified using an EHR data query within each EMS agency for the charting field “EMS provider primary impression” listed as “injury” or “trauma.” This query generated a broad sample of injured patients (n = 38,387), including those with minor and serious injury and normal and abnormal physiology.
Of these patients, 3,008 met the physiologic inclusion criteria. Although each of the 10 participating EMS agencies had EHR charting platforms, there was variability in EHR type, features, use of the National EMS Information System (NEMSIS) data standards [17], functionality (including export routines), and integration of automated electronic central dispatch times. Aggregate EHR files were exported from each of the participating EMS agencies (typically in 6- or 12-month time blocks, depending on the agency, availability of agency-based data personnel, and volume of calls) over a 2-year period and were restricted to the same dates used for the manual processing sample (January 1, 2006, through October 2, 2007). Data files representing a variety of different formats (e.g., XML, text files, comma-delimited, relational structure, and hybrid report outputs) were exported and reformatted. Database management, including checks for nonsensible values and recoding using standardized NEMSIS definitions for variables, was performed using SAS (Version 9.1, SAS Institute, Cary, NC).

We matched multiple EMS records for the same patients, as well as hospital outcomes from existing trauma registries (three) and state discharge databases (two), using probabilistic linkage [8,10,18,19] (LinkSolv, Version 5.0, Strategic Matching, Inc., Morrisonville, NY). Record linkage is an analytic method used to match records from different data sets using common variables when a unique identifier is not available. Probabilistic linkage has been used previously to match EMS and police records to ED and hospital data sources [8,9] and has been validated in our system using EMS and trauma databases [10]. The process of probabilistic linkage involves calculating estimates for error, discriminatory power, and the resulting positive (agreement) and negative (disagreement) match weights for all common variables between the two data sets.
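The match weights described above can be sketched in a few lines of Python. This is a hedged illustration that assumes the textbook Fellegi-Sunter formulation of probabilistic linkage; the source does not describe the internals of the LinkSolv software, so nothing here should be read as its algorithm. The `m` and `u` probabilities, the prior match probability, and the record layout are all made-up values for demonstration.

```python
import math

# Hypothetical (m, u) pairs for a few linkage variables:
#   m = P(field agrees | records are a true match)  -> reflects data error
#   u = P(field agrees | records are not a match)   -> reflects discriminatory power
LINKAGE_FIELDS = {
    "date_of_service": (0.98, 0.003),
    "date_of_birth": (0.95, 0.0001),
    "sex": (0.99, 0.5),
    "hospital": (0.90, 0.06),
}


def match_weights(m, u):
    """Return (agreement weight, disagreement weight) in log2 units."""
    return math.log2(m / u), math.log2((1 - m) / (1 - u))


def total_weight(rec_a, rec_b, fields=LINKAGE_FIELDS):
    """Sum the field weights for one candidate pair; missing values add nothing."""
    total = 0.0
    for name, (m, u) in fields.items():
        a, b = rec_a.get(name), rec_b.get(name)
        if a is None or b is None:
            continue
        agree, disagree = match_weights(m, u)
        total += agree if a == b else disagree
    return total


def match_probability(weight, prior):
    """Convert a cumulative weight to a posterior match probability."""
    odds = (prior / (1 - prior)) * 2 ** weight
    return odds / (1 + odds)


def is_accepted(rec_a, rec_b, prior=0.001, threshold=0.90):
    """Accept the pair as a match when the probability reaches the threshold."""
    return match_probability(total_weight(rec_a, rec_b), prior) >= threshold
```

In this sketch a rarely coinciding field such as date of birth contributes a large agreement weight, while a field such as sex contributes little when it agrees but a strong penalty when it does not.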
The match weights and other factors were used to generate potential matches between the data files; matches with a cumulative match weight above a given threshold value (equivalent to a 90% probability of a match) were accepted as “true” matches, and matches below this weight were rejected. Human review of records just above and below the 90% match probability value was used to confirm the accuracy of the calculated cutoff value [8,10,20].

We performed several sequential linkage analyses. For matching EMS records to trauma registry records (and EMS-to-EMS record linkage), we used 18 common variables including: date of service, times, date of birth, zip code (home, incident), demographics (age, sex), field vital signs, field procedures, incident city, trauma band number, and destination hospital. For linking EMS records to patient discharge data, we used six variables (date of service, date of birth, home zip code, age, sex, and hospital). Probabilistic linkage was also used to match electronically processed patient records to manually processed records using linkage variables unrelated to those being compared (to avoid potentially inflating agreement between the samples). These linkage variables included the following: EMS incident number, date of service, dispatch time, age, sex, hospital, and trauma band number.

Study staff involved with electronic data processing included the following: a fellowship-trained emergency care researcher/methodologist with expertise in database management, statistical analysis, and probabilistic linkage and two research associates with 10+ years of experience each in data formatting and file conversion (for reformatting databases).

Variables. We evaluated 18 clinical, operational, procedural, and outcome variables obtained using each data processing strategy. Clinical variables included the initial and “worst” field vital signs (GCS score, sBP in mmHg, respiratory rate in breaths/min, heart rate in beats/min).
Operational variables included four time intervals (response, on-scene, transport, and total out-of-hospital time) [21]. Field procedures included intravenous (IV) line placement and endotracheal intubation. Outcomes included mortality (field and in-hospital) and duration of hospital stay.

Data Analysis

We compared values obtained from manual versus electronic data processing using nonparametric descriptive statistics (median, interquartile range [IQR], and proportion). Case ascertainment was assessed by comparing the total number of patients meeting the prespecified inclusion criteria for each data processing approach.

We considered two perspectives in quantifying agreement and validity between electronic and manual values. First, we used statistical measures of agreement (kappa, weighted kappa, intraclass correlation coefficient [ICC]) and Bland-Altman plots [22]. This comparison assumed that some level of error was associated with both data processing strategies (i.e., that neither approach was a “criterion standard”). The second approach involved the assumption that values obtained by a trained abstractor represented the “true” values (i.e., the criterion standard), with validity of electronic values quantified using absolute agreement, sensitivity, and specificity (for categorical variables), plus median and IQR differences (for continuous variables) against values obtained by the manual data strategy. We assessed heteroscedasticity (differing variance across the range of potential values) for all continuous variables by regressing the difference in values (manual minus electronic) against the averaged value for each observation. All statistical analyses were based on observed values (patients with missing values excluded) and were conducted with SAS (Version 9.1, SAS Institute, Cary, NC).
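To make the two perspectives concrete, the following Python sketch computes an unweighted Cohen's kappa, sensitivity and specificity against the manual value, and Bland-Altman limits for paired values. It is an illustration under stated assumptions, not the study's SAS code: the limits are taken as the mean difference plus or minus 1.96 standard deviations (the conventional Bland-Altman definition, assumed here), weighted kappa and the ICC are not covered, and all function names are hypothetical.

```python
import statistics


def cohen_kappa(pairs):
    """Unweighted Cohen's kappa for (manual, electronic) categorical pairs.

    Undefined (division by zero) when both sources use a single category.
    """
    n = len(pairs)
    observed = sum(m == e for m, e in pairs) / n
    categories = {value for pair in pairs for value in pair}
    expected = sum(
        (sum(m == c for m, _ in pairs) / n) * (sum(e == c for _, e in pairs) / n)
        for c in categories
    )
    return (observed - expected) / (1 - expected)


def sensitivity_specificity(pairs):
    """Treat the manual value as the criterion standard for a yes/no variable."""
    tp = sum(1 for m, e in pairs if m and e)
    fn = sum(1 for m, e in pairs if m and not e)
    tn = sum(1 for m, e in pairs if not m and not e)
    fp = sum(1 for m, e in pairs if not m and e)
    return tp / (tp + fn), tn / (tn + fp)


def bland_altman(manual, electronic):
    """Mean difference (manual minus electronic) and 95% limits of agreement."""
    diffs = [m - e for m, e in zip(manual, electronic)]
    mean_diff = statistics.mean(diffs)
    spread = 1.96 * statistics.stdev(diffs)
    return mean_diff - spread, mean_diff, mean_diff + spread
```

Kappa treats both sources as fallible, whereas sensitivity and specificity only make sense once one source (here the manual value) is designated the criterion standard, which is the distinction the two perspectives draw.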
RESULTS

During the 21-month period, 629 injured patients with physiologic compromise were identified, enrolled, and processed using manual data processing. Case ascertainment using electronic methods yielded 3,008 injured patients meeting the same inclusion criteria during the same time period. A total of 418 patients matched between the two data processing groups and formed the primary cohort for comparison (Figure 1). While electronic data processing yielded almost five times the number of subjects meeting inclusion criteria, there was a portion of patients (n = 35) who were missed by electronic processing, but captured by manual case ascertainment. An additional 211 patients in the manual processing group did not match to a record from the electronic group.

Clinical, operational, procedural, and outcome variables are described for the various matched and unmatched groups in Table 1. Patients in the first three columns represent the manual data processing group (matched and unmatched to electronic cases), while those in the last column were only identified by electronic processing (the electronic-only group). In general, cases identified by manual methodology tended to have greater physiologic compromise (e.g., lower GCS, higher percentage of field intubations) and worse prognosis (e.g., higher mortality) than patients identified solely by electronic methods, although this was not universal in all groups. The median out-of-hospital time values fluctuated between groups, but overall were comparable. Mortality was lower in the electronic-only group (16%, 95% confidence interval [CI] = 14% to 18%) compared to the matched sample (22%, 95% CI = 18% to 27%) and the unmatched manual sample (36%, 95% CI = 29% to 43%) and was similar to cases identified solely by manual processing (13%, 95% CI = 4% to 31%). When the columns in Table 1 are collapsed into complete electronic (n = 3,008) and manual (n = 629) cohorts, overall mortality in the electronic versus manual cohorts was 18% (95% CI = 16% to 20%) versus 27% (95% CI = 23% to 31%).

Figure 1. Schematic of patients included in manual versus electronic data processing. EHR = electronic health record. [Flow diagram not reproduced. Boxes, as recoverable from the source: EMS records from agencies with EHR charting (4 transporting ambulance agencies, 6 nontransporting fire departments). Manual data processing (patients identified from hospital and EMS trauma logs, review and abstraction of printed, hard copy records): 629 injured patients meeting physiologic inclusion criteria over identical date range (1/1/2006 – 10/2/2007); n = 383 common records, n = 35 common records, n = 211 unmatched patients. Electronic data processing (EHR exports, standardized data cleaning routines, probabilistic linkage for record matching): 38,387 patients with EMS provider primary impression of “injury” or “trauma” (1/1/2006 – 10/2/2007); 3,008 injured patients meeting physiologic inclusion criteria over identical date range (1/1/2006 – 10/2/2007); n = 383 common records, n = 2,625 unmatched patients. 418 injured patients undergoing dual data processing strategies (n = 383 and n = 35).]

Table 1. Clinical, Operational, Procedural, and Outcome Information for the Different Manual and Electronic Data Processing Samples*

| Data Element | Matched patients identified by both “manual” and “electronic” case ascertainment (n = 383) | Unmatched patients identified by “manual” case ascertainment‡ (n = 211) | Patients only identified by “manual” case ascertainment (n = 35) | Patients only identified by “electronic” case ascertainment (n = 2,625) |
|---|---|---|---|---|
| Initial field vital signs | | | | |
| sBP | 127 (106–141) | 122 (90–143) | 132 (100–160) | 120 (90–141) |
| GCS | 9 (4–12) | 10 (6–13) | 12 (7–14) | 15 (11–15) |
| RR | 18 (14–22) | 18 (14–24) | 18 (16–22) | 22 (16–30) |
| HR | 93 (80–110) | 94 (78–110) | 97 (84–105) | 95 (80–112) |
| Worst field vital signs | | | | |
| Lowest sBP | 119 (100–134) | 116 (90–134) | 122 (100–140) | 113 (90–136) |
| Lowest GCS | 8 (3–11) | 8 (3–12) | 12 (7–14) | 14 (11–15) |
| Highest RR | 20 (16–26) | 20 (16–24) | 20 (18–26) | 24 (18–30) |
| Lowest RR | 16 (12–20) | 16 (12–18) | 18 (16–20) | 20 (16–28) |
| Highest HR | 100 (84–120) | 100 (86–120) | 103 (90–120) | 99 (82–116) |
| Lowest HR | 83 (70–100) | 88 (70–99) | 87 (71–108) | 90 (75–106) |
| Time intervals | | | | |
| Response | 5 (3–6) | 5 (3–5) | 6 (4–8) | 4 (3–6) |
| On-scene | 18 (13–25) | 18 (11–26) | 13 (6–19) | 16 (12–21) |
| Transport | 14 (11–18) | 13 (9–19) | 15 (10–21) | 14 (9–18) |
| Total out-of-hospital | 38 (33–45) | 39 (29–52) | 37 (30–46) | 34 (28–43) |
| Field procedures (%) | | | | |
| IV line placement | 331 (86) | 137 (65) | 31 (89) | 1,216 (67) |
| Intubation attempt | 104 (27) | 38 (18) | 0 (0) | 112 (5) |
| Outcomes | | | | |
| Hospital LOS | 2 (1–7) | 2 (0–7) | 4 (1–12) | 3 (1–6) |
| Mortality (%) | 78 (22) | 72 (36) | 4 (13) | 159 (16) |

Data are reported as median (IQR), unless otherwise specified. GCS = Glasgow Coma Scale score; HR = heart rate (beats/min); IQR = interquartile range; LOS = length of stay; RR = respiratory rate (breaths/min); sBP = systolic blood pressure (mm Hg).
*See Figure 1 for a schematic diagram detailing the origination of each of these samples.
†Descriptive statistics are based on observed values (e.g., 32 patients in the matched sample had embargoed or missing outcomes, so the calculated mortality was 78/351 = 22%). For patients who matched between both manual and electronic processing, manual values are presented.
‡These 211 patients were identified by manual data processing and did not match to electronic processing records, which may represent either patients missed by electronic processing or cases that did not match to an electronic record (even if present) because record linkage match rates were less than 100% between the two samples.

Clinical, operational, procedural, and outcome variables (including the proportion of missing values) among matched patients who underwent both data processing strategies are compared in Table 2. Overall, both data processing approaches generated very similar characteristics. However, there was a higher proportion of missing hospital outcomes with electronic data processing (87 of 418, 21%, 95% CI = 17% to 25%) compared to the manual approach (11 of 418, 3%, 95% CI = 1% to 5%). In addition, four patients identified as dying during their hospital stays with manual chart review were listed as survivors with electronic data processing methods.

There was good agreement and validity between the two data processing approaches (Tables 3 and 4). For categorical variables, kappa values ranged from 0.76 (IV line placement) to 0.97 (intubation attempt), with exact agreement from 67% to 99%. The ICC for continuous terms ranged from 0.49 (response interval) to 0.97 (transport and total out-of-hospital intervals) and tended to be higher for variables measured throughout the out-of-hospital time period as opposed to single (i.e., initial) time points. The median difference was zero for all continuous variables, with all but two terms having an IQR of zero for these differences.
In-hospital mortality agreed exactly in 99% of cases (kappa = 0.96), while hospital length of stay agreed exactly in 62% of cases (ICC = 0.56).

There was some evidence of heteroscedasticity among 5 of the 15 ordinal and continuous variables, as assessed by regressing differences against averaged values. The coefficients for these variables (initial respiratory rate = 0.20, p = 0.01; initial heart rate = −0.20, p = 0.008; lowest heart rate = 0.24, p = 0.002; response interval = −0.40, p < 0.0001; and length of stay = 0.56, p < 0.0001) did not suggest a systematic over- or underestimation of values for electronic data processing. The 10 remaining variables did not demonstrate statistical evidence of heteroscedasticity (all p ≥ 0.20).

Figure 2 shows Bland-Altman plots for initial and lowest field sBP. There was less variability (as quantified by the 95% interval of differences) for the “lowest” values compared to initial values. Similar plots for additional clinical (GCS), operational (total out-of-hospital time), and outcome (hospital length of stay) measures are included in Figures 3, 4, and 5, respectively. Differences in GCS suggest that the most consistent agreement occurred at the ends of the GCS spectrum (particularly for initial GCS) and that there was improved agreement for the “lowest” GCS (as indicated by a narrower 95% interval of values). For total out-of-hospital time, most values clustered on the zero difference line, but those that differed tended to be underestimated by electronically processed time values. Hospital length of stay had the lowest exact agreement (62%), with eight notable outlier values (including the single omitted 365-day outlier) that substantially increased the 95% interval of differences. Two-by-two tables for field procedures (IV line placement, intubation) and outcomes (mortality) are included in Figure 6.

Table 2. Clinical, Operational, Procedural, and Outcome Information for the Matched Sample, Separated by Manual Versus Electronic Data Processing (n = 418)*

| Data Element | Manual: Median (IQR) | Manual: % Missing | Electronic: Median (IQR) | Electronic: % Missing |
|---|---|---|---|---|
| Initial field vital signs | | | | |
| sBP | 128 (105–142) | 20 | 127 (102–141) | 26 |
| GCS | 9 (4–12) | 28 | 10 (5–14) | 18 |
| RR | 18 (14–22) | 6 | 18 (14–22) | 11 |
| HR | 94 (80–110) | 8 | 90 (79–111) | 12 |
| Worst field vital signs | | | | |
| Lowest sBP | 119 (100–135) | 16 | 120 (100–136) | 13 |
| Lowest GCS | 8 (3–12) | 29 | 9 (3–12) | 8 |
| Highest RR | 20 (16–26) | 6 | 20 (16–24) | 4 |
| Lowest RR | 16 (12–20) | 6 | 16 (12–20) | 4 |
| Highest HR | 100 (85–120) | 6 | 100 (82–120) | 5 |
| Lowest HR | 84 (70–100) | 6 | 85 (72–100) | 5 |
| Time intervals | | | | |
| Response | 5 (4–7) | [unclear] | 4 (3–6) | [unclear] |
| On-scene | 13 (9–19) | [unclear] | 16 (12–21) | [unclear] |
| Transport | 13 (9–18) | [unclear] | 13 (9–18) | [unclear] |
| Total out-of-hospital | 34 (28–43) | [unclear] | 35 (28–44) | [unclear] |
| Field procedures (%) | | | | |
| IV line placement | 362 (87) | 0 | 365 (89) | 2 |
| Intubation attempt | 104 (25) | 0 | 108 (26) | 1 |
| Outcomes | | | | |
| Hospital LOS | 2 (1–7) | 13 | 3 (1–8) | 24 |
| Mortality (%) | 82 (22) | 3 | 78 (24) | 21 |

[The % missing values for the four time intervals appear in the source as two unlabeled groups, “4 6 6 7” and “6 0 10 10”; which group belongs to which processing method is not recoverable from the extracted text.]

Data are reported as median (IQR), unless otherwise specified. GCS = Glasgow Coma Scale score; HR = heart rate (beats/min); IQR = interquartile range; LOS = length of stay; RR = respiratory rate (breaths/min); sBP = systolic blood pressure (mm Hg).
*All comparisons are based on observed values. Twenty-six patients enrolled in a concurrent clinical trial with embargoed outcomes (Hypertonic Resuscitation Following Traumatic Injury, ClinicalTrials.gov identifiers NCT00316017 and NCT00316004) were excluded from the comparison of mortality in this study, but are represented in calculation of percent missing for manual data mortality. Mortality rates are based on observed values.

Table 3. Measures of Agreement and Validity for Clinical, Operational, Procedural, and Outcome Information Between Manual Versus Electronic Data Processing (n = 418): Field Vital Signs and Times

| Data Element | Kappa* | 95% CI | ICC | 95% CI | Exact Agreement, % | Median Difference | IQR of Difference |
|---|---|---|---|---|---|---|---|
| Initial field vital signs | | | | | | | |
| sBP | — | — | 0.89 | (0.85–0.93) | 81 | 0 | (0,0) |
| GCS | 0.78 | (0.72–0.83) | — | — | 67 | 0 | (0,0) |
| RR | — | — | 0.67 | (0.47–0.86) | 59 | 0 | (0,0) |
| HR | — | — | 0.69 | (0.57–0.81) | 59 | 0 | (0,0) |
| Worst field vital signs | | | | | | | |
| Lowest sBP | — | — | 0.94 | (0.91–0.96) | 88 | 0 | (0,0) |
| Lowest GCS | 0.88 | (0.83–0.93) | — | — | 87 | 0 | (0,0) |
| Highest RR | — | — | 0.92 | (0.86–0.98) | 87 | 0 | (0,0) |
| Lowest RR | — | — | 0.87 | (0.77–0.97) | 86 | 0 | (0,0) |
| Highest HR | — | — | 0.94 | (0.91–0.97) | 84 | 0 | (0,0) |
| Lowest HR | — | — | 0.77 | (0.68–0.86) | 81 | 0 | (0,0) |
| Time intervals (minutes) | | | | | | | |
| Response | — | — | 0.49 | (0.23–0.74) | 56 | 0 | (–2,0) |
| On-scene | — | — | 0.81 | (0.71–0.90) | 53 | 0 | (0,3) |
| Transport | — | — | 0.97 | (0.95–0.99) | 97 | 0 | (0,0) |
| Total | — | — | 0.97 | (0.96–0.99) | 87 | 0 | (0,0) |

Differences were calculated as manual value minus electronic value. GCS = Glasgow Coma Scale score; HR = heart rate (beats/min); IQR = interquartile range; RR = respiratory rate (breaths/min); sBP = systolic blood pressure (mm Hg).
*For categorical and ordinal variables, kappa and weighted kappa values are presented.

Table 4. Measures of Agreement and Validity Between Manual Versus Electronic Data Processing (n = 418): Field Procedures and Hospital Outcomes

| Data Element | Kappa* | 95% CI | ICC | 95% CI | Exact Agreement, % | Sensitivity, % | 95% CI | Specificity, % | 95% CI | Median Difference | IQR of Difference |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Field procedures | | | | | | | | | | | |
| IV line | 0.76 | (0.67–0.86) | — | — | 95 | 98 | (96–99) | 76 | (62–87) | — | — |
| Intubation | 0.97 | (0.95–1.00) | — | — | 99 | 100 | (97–100) | 99 | (97–100) | — | — |
| Outcomes | | | | | | | | | | | |
| LOS | — | — | 0.56 | (0.33–0.79) | 62 | — | — | — | — | 0 | (–1,0) |
| Mortality | 0.96 | (0.93–1.00) | — | — | 99 | 95 | (87–99) | 100 | (99–100) | — | — |

Differences were calculated as manual value minus electronic value. LOS = length of stay; ICC = intraclass correlation coefficient; IQR = interquartile range.
*For categorical and ordinal variables, kappa and weighted kappa values are presented. Twenty-six patients enrolled in a concurrent clinical trial with embargoed outcomes (Hypertonic Resuscitation Following Traumatic Injury, ClinicalTrials.gov identifiers NCT00316017 and NCT00316004) were excluded from the comparison of mortality in this study.

DISCUSSION

In this study, we compared two data processing strategies (manual vs. electronic) for obtaining clinical research data from existing EHR among a cohort of out-of-hospital trauma patients. We found good to excellent agreement between the two approaches, with electronic methods having notably larger case capture. This is the first study we are aware of that directly compares a maximized all-electronic approach to more traditional case identification and data abstraction routines for outcomes-based out-of-hospital research.
With increased emphasis on the implementation and utilization of EHR systems,5 this study is important in affirming the data quality and gains in case ascertainment when using an electronic approach for clinical research.23 Our findings are notable for several reasons. First, we compared the data processing strategies using clinically meaningful variables and outcomes, rather than simply evaluating the number of errors per data field. Second, the electronic methods used in this study completely removed the need for data abstraction and data entry (paperless), thus maximizing the benefits of EHR sources. Third, electronic data processing was based on aggregate data exports and processing routines that can handle large volumes of records with relatively small additional increases in processing time. Previous studies have defined “electronic data capture” or “electronic data collection” as data entry from source paper records into an electronic database;3,6,7 however, such an approach is relatively inefficient and cumbersome when the source files exist in an electronic format. Finally, electronic methods yielded data quality comparable to manual processing methods and identified many more eligible patients, findings that capitalize on the national push for EHR and suggest that the requirement for manual record abstraction in some clinical research studies may be unnecessary.

There were notable differences in case ascertainment and acuity between patients identified with the two approaches. The smaller sample size generated through manual processing is primarily explained by a more restrictive approach for case identification. Our findings suggest that not all injured patients with abnormal field physiology are entered into the trauma system (or that a portion of such patients are omitted from the respective EMS and trauma logs) and that case identification relying on such assumptions can therefore miss eligible patients.
These results illustrate that comprehensive case ascertainment requires a broad patient query with few assumptions and that hand-sorting through EMS records and case logs does not match the comprehensiveness of a broad electronic record query. While electronic data processing yielded more eligible patients, these additional patients had less severe physiologic compromise and lower mortality, suggesting that manual patient identification may be inherently biased towards higher-acuity patients with worse prognosis or that use of electronic patient queries identifies more heterogeneous and therefore lower-acuity subjects. The implications of these competing risks may differ depending on the study question being pursued and therefore need to be considered for each research project entertaining both approaches to patient identification.

While we did not directly quantify the differences in time efficiency between data processing approaches, we gained substantive insight by assessing the relative effort expended for each strategy. Electronic processing time was affected by the inclusion of several EMS agencies that had not previously exported data files, the use of multiple different EHR systems, and the need to electronically match records between multiple EMS agencies. The time savings would be expected to increase when using a single EHR program, data exports with industry-standardized processes, familiar data routines, standardized data fields (e.g., NEMSIS), and batched processes for reformatting, cleaning, and linking data. For manual methods, the time required per record is fixed after maximizing the experience and speed of a given data abstractor and chart identification processes. Because electronic data processing can handle large sample sizes with relatively little additional time requirement, the time differences between electronic and manual data processing are likely to be magnified with increasing sample sizes, providing a tremendous advantage of electronic processing with large or massive record reviews.

Figure 2. Bland-Altman plots of field systolic blood pressure between electronic and manual data processing.* (a) Initial sBP, n = 287†. (b) Lowest sBP, n = 350‡. *The Bland-Altman plots graph the difference in out-of-hospital systolic blood pressure (sBP; manual processing value minus electronic processing value) on the y-axis, plotted against the mean value on the x-axis for each observation. Dotted lines represent the interval that contains 95% of all differences (1.96 × standard deviation). This interval was ±21.7 mm Hg for initial sBP and ±16.6 mm Hg for lowest sBP. †There was 81% exact agreement between values for initial sBP, with an ICC of 0.89. ‡There was 88% exact agreement between values for lowest sBP, with ICC of 0.94. ICC = intraclass correlation coefficient; sBP = systolic blood pressure.

Figure 3. Bland-Altman plots of field GCS score between electronic and manual data processing.* (a) Initial GCS score, n = 256†. (b) Lowest GCS score, n = 278‡. *The Bland-Altman plots graph the difference in out-of-hospital GCS score (manual processing value minus electronic processing value) on the y-axis, plotted against the mean value on the x-axis for each observation. Dotted lines represent the interval that contains 95% of all differences (1.96 × standard deviation).
This interval was ±5.1 for initial GCS and ±4.0 for lowest GCS. Scatter at each value has been included to enhance visual interpretation. †There was 67% exact agreement between values for initial GCS, with a weighted kappa of 0.78. ‡There was 87% exact agreement between values for lowest GCS, with a weighted kappa of 0.88. GCS = Glasgow Coma Scale.

However, electronic data processing is not a panacea for research and has important limitations that must be considered. Electronic processing can be slowed (or halted) by multiple factors, including lack of export functionality in commercial EHR software, poorly formatted data, lack of personnel with appropriate expertise, and the availability (and timeliness) of existing outcome data sources. There is also the potential that information contained in the EHR does not adequately cover all data fields required for a given research project, requiring additional data forms or chart abstraction. The time, effort, and cost requirements for organizations implementing and maintaining EHR can also be substantial. Examples of hospital-based health care systems that have successfully navigated such obstacles with broad EHR systems have been described.24 Finally, while the proportion of missing data was similar between the processing approaches for out-of-hospital information, there were more missing outcomes using electronic data processing. This was likely secondary to less than 100% match rates for probabilistic linkage and no outcome data sources for certain subsets of patients (e.g., patients evaluated and discharged from the ED). Electronic outcome matching using existing data sources would be expected to improve with availability of patient identifiers,20 additional match terms,10 and additional data sources (e.g., ED data). While we did not integrate methods for directly handling missing values in this study, our preferred approach is multiple imputation, which is widely available in statistical software, can reduce bias and preserve study power,25–29 and has been validated for handling missing out-of-hospital values.30

Figure 4. Bland-Altman plot of total out-of-hospital time interval (in minutes) between electronic and manual data processing (n = 354).* *The Bland-Altman plot graphs the difference in the total out-of-hospital time interval (manual processing value minus electronic processing value) on the y-axis, plotted against the mean value on the x-axis for each observation. Dotted lines represent the interval that contains 95% of all differences (1.96 × standard deviation). This interval was ±4.5 minutes. There was 87% exact agreement between values, with ICC of 0.97. ICC = intraclass correlation coefficient.

Figure 5. Bland-Altman plot of hospital length of stay (in days) between electronic and manual data processing (n = 288).* *The Bland-Altman plot graphs the difference in hospital length of stay (manual processing value minus electronic processing value) on the y-axis, plotted against the mean value on the x-axis for each observation. Dotted lines represent the interval that contains 95% of all differences (1.96 × standard deviation). A single outlier value with difference of 365 days was removed from the figure for clarity. The 95% interval with the outlier value included was ±43.6 days. With the single outlier value removed from calculations, the 95% interval of differences was ±11.1 days (grey broken-dashed line). There was 62% exact agreement between values, with ICC of 0.56. ICC = intraclass correlation coefficient.

Figure 6. Two-by-two tables comparing electronic and manual data processing values for field interventions (IV line placement, intubation) and outcome (mortality).

(a) IV line placement

| Manual data | Electronic data: no | Electronic data: yes | Total |
|---|---|---|---|
| no | 38 | 12 | 50 |
| yes | 8 | 353 | 361 |
| Total | 46 | 365 | 411 |

(b) Intubation attempt

| Manual data | Electronic data: no | Electronic data: yes | Total |
|---|---|---|---|
| no | 307 | 4 | 311 |
| yes | 0 | 104 | 104 |
| Total | 307 | 108 | 415 |

(c) In-hospital mortality

| Manual data | Electronic data: no | Electronic data: yes | Total |
|---|---|---|---|
| no | 228 | 0 | 228 |
| yes | 4 | 72 | 76 |
| Total | 232 | 72 | 304 |

(a) IV line placement. There was exact agreement among 95% of values, with a kappa of 0.76. (b) Intubation attempt. There was exact agreement among 99% of values, with a kappa of 0.97. (c) In-hospital mortality. There was exact agreement among 99% of values, with a kappa of 0.96. Twenty-six patients enrolled in a concurrent clinical trial with embargoed outcomes (Hypertonic Resuscitation Following Traumatic Injury, ClinicalTrials.gov identifiers NCT00316017 and NCT00316004) were excluded from the comparison of mortality in this study.

LIMITATIONS

Our sample included injured patients with physiologic compromise treated within a single region. Therefore, it is uncertain whether these results can be generalized to other regions or to patients with other medical conditions. Also, this was an observational cohort, rather than a clinical trial. These results will need to be replicated in a clinical trial setting to validate our results in an interventional research environment, including the timeliness of hospital outcomes and safety information. Our results also require replication with different study personnel, including quantification of the time required for each approach. Agreement between the variables in our study was good and we believe the differences were not clinically meaningful. However, whether apparently small differences, misclassification, and heteroscedasticity are large enough to substantively alter testing of specific hypotheses and study results may be specific to a given research question.
In addition, we focused the analysis on 18 variables available in the EHR, yet the inability to obtain all relevant research information from the EHR is a real possibility, depending on the research question and topic under study. Also, there were 211 manual processing patients who did not match to a record from the electronic processing sample, which may be explained by less than complete match rates between the samples or from additional patients missed by electronic methods. Finally, defining a functional criterion standard for data collection and processing is difficult and generally limited by resource constraints, practical challenges, and the nuances of different clinical environments. We believe that the manual data processing strategy used in this study can be considered a gold standard for purposes of comparison to alternative data strategies (i.e., electronic processing), although this could be debated. Our results suggest that the electronic strategy was superior in case identification and that manual processing was superior in some aspects of data quality (e.g., minimizing missing outcomes), which suggests that there may be a role for both strategies to maximize value, depending on the priorities of a given research project.

CONCLUSIONS

Our findings demonstrate that epidemiologic research data obtained using out-of-hospital EHR and processed with electronic methods can be used to increase case ascertainment without compromising data quality in out-of-hospital trauma research. However, the broader group of electronically identified patients may have important differences in acuity and prognosis, as well as a greater percentage of missing outcomes. If replicated in other research settings, the gains in efficiency and capacity with electronic processing support a new “electronic” paradigm for collecting and processing clinical research data, including a vision for increased integration of information systems between different phases of clinical care, potentially increasing the scope and speed of scientific inquiry.

We acknowledge and thank the many contributing EMS agencies, EMS providers, and study staff for their willingness to participate in and support this project, for their continued dedication to improving the quality and efficiency of out-of-hospital data collection and for supporting data-driven approaches to improving the care and outcomes for patients served by EMS.

References

1. Boroush M. New Estimates of National Research and Development Expenditures Show 5.8% Growth in 2007. Available at: http://www.nsf.gov/statistics/infbrief/nsf08317/. Accessed Nov 15, 2011.
2. Edwards JR, Pollock DA, Kupronis BA, et al. Making use of electronic data: the National Healthcare Safety Network eSurveillance Initiative. Am J Infect Control. 2008; 36(3 Suppl):S21–6.
3. Eisenstein EL, Collins R, Cracknell BS, et al. Sensible approaches for reducing clinical trial costs. Clinical Trials. 2008; 5:75–84.
4. Yip A, Leduc M, Teo V, Timmons M, Schull MJ. A novel method to link and validate routinely collected emergency department clinical data to measure quality of care. Am J Med Qual. 2009; 24:185–91.
5. Congress of the United States of America. American Recovery and Reinvestment Act of 2009. 111 Final Feb 19, 2009. Title XIII – Health Information Technology, pp 112–165.
6. Pavlovic I, Kern T, Miklavcic D. Comparison of paper-based and electronic data collection process in clinical trials: costs simulation study. Contemp Clin Trials. 2009; 30:300–16.
7. Nahm ML, Pieper CF, Cunningham MM. Quantifying data quality for clinical trials using electronic data capture. PLoS ONE. 2008; 3:e3049.
8. Dean JM, Vernon DD, Cook L, Nechodom P, Reading J, Suruda A. Probabilistic linkage of computerized ambulance and inpatient hospital discharge records: a potential tool for evaluation of emergency medical services. Ann Emerg Med. 2001; 37:616–26.
9. National Highway Traffic Safety Administration, US Department of Transportation. Why data linkage? The Importance of CODES (Crash Outcome Data Evaluation System). DOT HS 808461. Available at: http://www-nrd.nhtsa.dot.gov/Pubs/811181.pdf. Accessed Nov 15, 2011.
10. Newgard CD. Validation of probabilistic linkage to match de-identified ambulance records to a state trauma registry. Acad Emerg Med. 2006; 13:69–75.
11. Davis DP, Garberson LA, Andrusiek D, et al. A descriptive analysis of emergency medical service systems participating in a large, out-of-hospital resuscitation research network. Prehosp Emerg Care. 2007; 11:369–82.
12. American College of Surgeons. Committee on Trauma. Resources for Optimal Care of the Injured Patient. Chicago, IL: American College of Surgeons, 2006.
13. Norcross ED, Ford DW, Cooper ME, Zone-Smith L, Byrne TK, Yarbrough DR 3rd. Application of American College of Surgeons’ field triage guidelines by pre-hospital personnel. J Am Coll Surg. 1995; 181:539–44.
14. Henry MC, Hollander JE, Alicandro JM, Cassara G, O’Malley S, Thode HC Jr. Incremental benefit of individual American College of Surgeons trauma triage criteria. Acad Emerg Med. 1996; 3:992–1000.
15. Lerner EB. Studies evaluating current field triage: 1966–2005. Prehosp Emerg Care. 2006; 10:303–6.
16. Newgard CD, Sears GK, Rea TD, et al. The Resuscitation Outcomes Consortium Epistry-Trauma: design, development, and implementation of a North American epidemiologic prehospital trauma registry. Resuscitation. 2008; 78:170–8.
17. Dawson DE. National Emergency Medical Services Information System (NEMSIS). Prehosp Emerg Care. 2006; 10:314–6.
18. Clark DE. Practical introduction to record linkage for injury research. Inj Prev. 2004; 10:186–91.
19. Jaro MA. Probabilistic linkage of large public health data files. Stat Med. 1995; 14:491–8.
20. Cook LJ, Olson LM, Dean JM. Probabilistic record linkage: relationships between file sizes, identifiers, and match weights. Methods Inform Med. 2001; 40:196–203.
21. Spaite DW, Valenzuela TD, Meislin HW, Criss EA, Hinsberg P. Prospective validation of a new model for evaluating emergency medical services systems by in-field observation of specific time intervals in prehospital care. Ann Emerg Med. 1993; 22:638–45.
22. Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986; 1:307–10.
23. The Mitre Corporation and National Center for Research Resources. Electronic Health Records Overview. Available at: http://www.ncrr.nih.gov/publications/informatics/ehr.pdf. Accessed Nov 15, 2011.
24. Weiner MG, Lyman JA, Murphy S, Weiner M. Electronic health records: high-quality electronic data for higher-quality clinical research. Inform Prim Care. 2007; 15:121–7.
25. Little RJ, Rubin DB. Statistical Analysis with Missing Data. 2nd ed. New York, NY: John Wiley & Sons, Inc., 2002.
26. Van Der Heijden GJ, Donders ART, Stijnen T, Moons KG. Imputation of missing values is superior to complete case analysis and the missing-indicator method in multivariable diagnostic research: a clinical example. J Clin Epidemiol. 2006; 59:1102–9.
27. Crawford SL, Tennstedt SL, McKinlay JB. A comparison of analytic methods for non-random missingness of outcome data. J Clin Epidemiol. 1995; 48:209–19.
28. Greenland S, Finkle WD. A critical look at methods for handling missing covariates in epidemiologic regression analyses. Am J Epidemiol. 1995; 142:1255–64.
29. Newgard CD, Haukoos J. Missing data in clinical research–part 2: multiple imputation. Acad Emerg Med. 2007; 14:669–78.
30. Newgard CD. The validity of using multiple imputation for missing prehospital data in a state trauma registry. Acad Emerg Med. 2006; 13:314–24.

Supporting Information

The following supporting information is available in the online version of this paper: Data Supplement S1. Electronic approach. Data Supplement S2. Manual approach. The documents are in PDF format. Please note: Wiley Periodicals Inc. is not responsible for the content or functionality of any supporting information supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.