advertisement

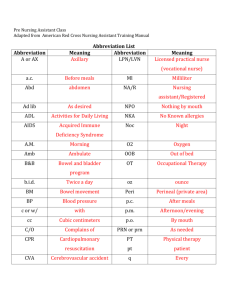

Mining Atomic Chinese Abbreviation Pairs with a Probabilistic Single Character Word Recovery Model

JING-SHIN CHANG and WEI-LUN TENG
Department of Computer Science & Information Engineering
National Chi-Nan University
Puli, Nantou, Taiwan, ROC.
Email: jshin@csie.ncnu.edu.tw and s3321512@ncnu.edu.tw

Abstract. An HMM-based Single Character Recovery (SCR) Model is proposed in this paper to extract a large set of “atomic abbreviation pairs” from a text corpus. An “atomic abbreviation pair” refers to an abbreviated word and its root word (i.e., unabbreviated form) in which the abbreviation is a single Chinese character. This task is important since Chinese abbreviations cannot be enumerated exhaustively, but the abbreviation process for Chinese compound words seems to be “compositional”; one can often decode an abbreviated word, such as “台大” (Taiwan University), character-by-character back to its root form, “台灣” plus “大學”. With a large “atomic abbreviation dictionary,” one may be able to handle problems associated with multiple-character abbreviations more easily, based on the compositional property of abbreviations.

Key words: Abbreviations, Atomic Abbreviation Pairs, Chinese Language Processing, Hidden Markov Model, Lexicon, Synonyms, Translation Equivalent, Unknown Words.

1. Motivation

Chinese abbreviations are widely used in modern Chinese texts. They are a special form of unknown words, which cannot be exhaustively enumerated in a dictionary. A Chinese abbreviation is normally formed by deleting some characters in its “root” (unabbreviated) form, while retaining representative characters that preserve meaning. Many abbreviations originate from important lexical units such as named entities. However, the sources of Chinese abbreviations are not solely the noun class, but most major categories, including verbs, adjectives, adverbs and others.
In fact, no matter what lexical or syntactic structure a string of characters has, one can almost always find a way to abbreviate it into a shorter form. Therefore, it may be necessary to handle abbreviations in a separate layer beyond any other word class. Furthermore, abbreviated words are semantically ambiguous. For example, “清大” can be the abbreviation for “清華大學” or “清潔大隊”; in the opposite direction, multiple choices for abbreviating a word are also possible. For instance, “台北大學” may be abbreviated as “台大”, “北大” or “台北大”. This kind of “two-way ambiguity” makes it difficult either to generate the abbreviated form from a root word or to recover the root form of an abbreviation.

An abbreviation serves many linguistic functions with respect to its root. First, an abbreviation is a “synonym” of its root. Second, it is also a translation equivalent of its root in cross-lingual environments. Therefore, the two forms can be used interchangeably in mono- or multi-lingual applications. This interchangeability makes correct Chinese language processing difficult in applications including word segmentation (Chiang et al., 1992; Lin et al., 1993; Chang and Su, 1997), information retrieval, query expansion, lexical translation and more. For instance, a keyword-based information retrieval system may require both forms, such as “中研院” and “中央研究院” (“Academia Sinica”), in order not to miss any relevant documents. The Chinese word segmentation process is also significantly degraded by the existence of unknown words (Chiang et al., 1992), including unknown abbreviations.

An abbreviation model or a large abbreviation lexicon is therefore highly desirable for Chinese abbreviation processing. However, abbreviations cannot be enumerated exhaustively, because new words and their abbreviations are constantly being generated.
This implies that we may have to find the atomic abbreviation patterns associated with sub-word units in order to solve the problem completely. Since the smallest possible Chinese lexical unit into which other words can be abbreviated is a single character, identifying the set of root words for all individual Chinese characters is especially interesting. Such a root-abbreviation pair, in which the abbreviation is a single Chinese character, will be referred to as an “atomic abbreviation pair” hereafter.

Indeed, the abbreviation process for Chinese compound words seems to be “compositional”. In other words, one can often decode an abbreviated word, such as “台大” (“Taiwan University”), character-by-character back to its root form “台灣大學” by observing that “台” can be an abbreviation of “台灣”, that “大” can be an abbreviation of “大學”, and that “台灣大學” is a frequently observed character sequence in real text. Conversely, multi-character abbreviations of compound words may often be synthesized from single-character abbreviations: one can decompose a compound word into its constituents and then concatenate their single-character equivalents to form its abbreviated form. If we are able to identify all such “atomic abbreviation pairs” associated with all Chinese characters, then resolving multiple-character abbreviation problems might become easier. Therefore, a model for mining the root forms of the finite Chinese character set could be a significant task.

The Chinese abbreviation recovery problem can be regarded as an error recovery problem (Chang and Lai, 2004) in which the suspect root words are the “errors” to be recovered from a set of candidates. Such a problem can be mapped to an HMM-based model for both abbreviation identification and root word recovery; it can also be integrated easily as part of a unified word segmentation model.
In an HMM-based model, the parameters can usually be trained in an unsupervised manner; the most likely root words can also be extracted automatically by finding those candidates that maximize the likelihood of the sentences. An abbreviation lexicon, which consists of the highly probable root-abbreviation pairs, can thus be constructed automatically in the training process of the unified word segmentation model.

In the current work, a method known as the Single Character Recovery (SCR) Model is used to automatically acquire a large “atomic” abbreviation lexicon for all Chinese characters from a large corpus. In the following sections, the unified word segmentation model with abbreviation recovery capability in (Chang and Lai, 2004) is reviewed first. We then describe how to adapt this framework to construct an “atomic abbreviation lexicon” automatically.

2. Unified Word Segmentation Model for Abbreviation Recovery

To resolve the abbreviation recovery problem, one can identify some root candidates for each suspect abbreviation, then enumerate all possible sequences of such root word candidates, and confirm the most probable root word sequence by consulting local context. Such a root word recovery process can be easily mapped to an HMM model (Rabiner and Juang, 1993), which is good at finding the best unseen state sequence, if we regard the input characters as our “observation sequence”, and regard the unseen root word candidates as the underlying “state sequence”.

The abbreviation recovery process can then be integrated into the word segmentation model as follows. First, we can regard the segmentation process as finding the best underlying root words $w_1^m \equiv w_1, \ldots, w_m$, given the input characters $c_1^n \equiv c_1, \ldots, c_n \equiv \mathbf{c}_1, \ldots, \mathbf{c}_m$, where each $\mathbf{c}_i$ groups the input characters that form the surface string of one word.
The segmentation process is then equivalent to finding the best (non-abbreviated) word sequence $w^*$ such that:

$$
\begin{aligned}
w^* &= \arg\max_{w_1^m:\, w_1^m \Rightarrow c_1^n} P(w_1^m \mid c_1^n) \\
    &= \arg\max_{w_1^m:\, w_1^m \Rightarrow c_1^n} P(c_1^n \mid w_1^m) \times P(w_1^m) \\
    &= \arg\max_{w_1^m:\, w_1^m \Rightarrow c_1^n} \prod_{\substack{i=1,m \\ w_i \Rightarrow \mathbf{c}_i}} P(\mathbf{c}_i \mid w_i) \times P(w_i \mid w_{i-1})
\end{aligned}
\tag{1}
$$

where $\mathbf{c}_i$ refers to the surface form of $w_i$, which could be the abbreviated or the non-abbreviated form of $w_i$. The last equality assumes that the generation of an abbreviation is independent of context, and that the language model is a word-based bigram model. The word-wise transition probability $P(w_i \mid w_{i-1})$ in the language model is used to impose contextual constraints over neighboring root words so that the underlying word sequence forms a legal sentence with high probability.

In the absence of abbreviations, such that all surface forms are identical to their “root” forms, we will have $P(\mathbf{c}_i \mid w_i) = 1$. In this special case, Equation (1) simply reduces to a word bigram model for word segmentation (Chiang et al., 1992). In the presence of abbreviations, however, the generation probability $P(\mathbf{c}_i \mid w_i)$ can no longer be ignored.

As an example, if $\mathbf{c}_i$ and $\mathbf{c}_{i+1}$ are ‘台’ and ‘大’, respectively, then their roots, $w_i$ and $w_{i+1}$, could be ‘台灣’ plus ‘大學’ (Taiwan University) or ‘台灣’ plus ‘大聯盟’ (Taiwan Major League). In this case, the probability scores P(台|台灣) × P(大|大學) × P(大學|台灣) and P(台|台灣) × P(大|大聯盟) × P(大聯盟|台灣) will indicate how likely ‘台大’ is an abbreviation, and which of the above two compounds is the more probable root form.

By applying the unified (abbreviation-enhanced) word segmentation model, Equation (1), to the underlying word lattice, some of the root candidates may be preferred and others discarded, by consulting the neighboring words and their transition probabilities. If the best $w_i \neq \mathbf{c}_i$, a root-abbreviation pair is identified.
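The ‘台大’ example above can be made concrete with a small sketch of the scoring in Equation (1). Everything numeric here is an assumption for illustration: the probability tables, the `FLOOR` value used for missing entries, and the `<s>` sentence-start symbol are hypothetical and do not come from the paper. The search is a brute-force enumeration over root candidates rather than a Viterbi search over a word lattice, which keeps the sketch short.

```python
from itertools import product

FLOOR = 1e-9  # assumed stand-in probability for events missing from the toy tables


def path_score(surface, roots, gen, bigram):
    """Product over i of P(c_i | w_i) * P(w_i | w_{i-1}), as in Equation (1)."""
    score, prev = 1.0, "<s>"  # "<s>" is an assumed sentence-start symbol
    for c, w in zip(surface, roots):
        score *= gen.get((c, w), FLOOR) * bigram.get((prev, w), FLOOR)
        prev = w
    return score


def best_roots(surface, candidates, gen, bigram):
    """Brute-force arg max over every combination of root candidates."""
    paths = product(*(candidates[c] for c in surface))
    return max(paths, key=lambda p: path_score(surface, p, gen, bigram))


# Hypothetical numbers chosen only for illustration.
GEN = {("台", "台灣"): 0.2, ("大", "大學"): 0.3, ("大", "大聯盟"): 0.05,
       ("台", "台"): 1.0, ("大", "大"): 1.0}  # P(c | w), keyed by (c, w)
BIGRAM = {("<s>", "台灣"): 0.01, ("台灣", "大學"): 0.05, ("台灣", "大聯盟"): 0.002,
          ("<s>", "台"): 0.001, ("台", "大"): 0.001, ("台灣", "大"): 0.001}
CANDIDATES = {"台": ["台", "台灣"], "大": ["大", "大學", "大聯盟"]}

print(best_roots(["台", "大"], CANDIDATES, GEN, BIGRAM))
```

With these made-up tables the ‘台灣’ plus ‘大學’ reading scores highest, because both its generation and transition probabilities are larger than those of the ‘大聯盟’ reading. Different parameter values could of course reverse the ranking.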
It is desirable to estimate the abbreviation probability using some simple features, in addition to the lexemes (i.e., surface character sequences) of the roots and abbreviations. Some heuristics about Chinese abbreviations suggest such features. For instance, many 4-character words are abbreviated as 2-character abbreviations (as in the case of the <台灣大學, 台大> pair); abbreviating into words of other lengths is less probable. It is also known that many 4-character words are abbreviated by preserving the first and the third characters, which can be represented by a ‘1010’ bit pattern, where a ‘1’ or ‘0’ means to preserve or delete the respective character. Therefore, a reasonable simplification for the abbreviation model is to introduce the length and the positional bit pattern as additional features, resulting in the following abbreviation probability:

$$
P(c \mid w) = P(c_1^m, bit, m \mid r_1^n, n) \approx P(c_1^m \mid r_1^n) \times P(bit \mid n) \times P(m \mid n)
\tag{2}
$$

where $c_1^m$ are the characters in the abbreviation of length $m$, $r_1^n$ are the characters in the root word of length $n$, and $bit$ is the above-mentioned bit pattern associated with the abbreviation process.

3. The Single Character Recovery (SCR) Model

As mentioned earlier, multiple-character Chinese abbreviations are often “compositional.” This means that atomic N-to-1 abbreviation patterns may form the basis for the underlying Chinese abbreviation process. The other M-to-N abbreviation patterns might simply be compositions of such basic N-to-1 abbreviations. Therefore, it is interesting to apply the abbreviation recovery model to acquire all atomic N-to-1 abbreviation patterns, so that multi-character abbreviations can be detected and predicted more easily.

Such a mining task can be greatly simplified if each character in the training corpus is assumed to be a probable abbreviated word whose root form is to be recovered.
In other words, the surface form $\mathbf{c}_i$ in Equation (1) is reduced to a single-character abbreviation, and thus the associated abbreviation pair is an “atomic” pair. The abbreviation recovery model based on this assumption will be referred to as the Single Character Recovery (SCR) Model.

To acquire the atomic abbreviation pairs under this assumption, the following iterative training process can be applied. First, the root candidates for each single character are enumerated to form a word lattice, in which each path represents a non-abbreviated word sequence. The underlying word sequence that is most likely to produce the input character sequence, according to Equation (1), is then identified as the best word sequence. Once the best word sequence is identified, the model parameters are re-estimated, and the best word sequence is identified again. This iterative process is repeated until the best sequence no longer changes. Afterward, the corresponding <root, abbreviation> pairs are extracted as atomic abbreviation pairs.

Using the SCR model for a general abbreviation-enhanced word segmentation process would be an oversimplification, since not all single characters are really abbreviated words. The single character assumption may nevertheless be useful for extracting the roots of real single-character abbreviations when a high recall rate is required. The reason is that, under the SCR model, a root will be extracted only when it has a high transition probability (local constraint) against neighboring words and a high output probability of producing the input character. As long as we have sufficient training data, spurious roots will be suppressed. The over-generative assumption may slightly harm recovery precision, but it will cover most abbreviation pairs of interest, which might be more important for the current mining task.
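The iterative process described above can be sketched as a form of hard (best-path) re-estimation. This is a minimal sketch under several assumptions, not the authors' implementation: the best path is found by brute-force enumeration rather than a lattice search, the transition table `bigram` is held fixed, unseen events receive a hypothetical `FLOOR` probability, and no smoothing is applied after re-estimation. All function names are illustrative.

```python
from collections import Counter
from itertools import product

FLOOR = 1e-9  # assumed probability for events absent from the tables


def best_path(chars, cands, gen, bigram):
    """Most likely root sequence for one character sequence under Equation (1)."""
    def score(path):
        s, prev = 1.0, "<s>"  # "<s>" is an assumed sentence-start symbol
        for c, w in zip(chars, path):
            s *= gen.get((c, w), FLOOR) * bigram.get((prev, w), FLOOR)
            prev = w
        return s
    return max(product(*(cands[c] for c in chars)), key=score)


def reestimate(corpus, paths):
    """Relative-frequency estimate of P(c | w) from the current best paths."""
    pair_counts, root_counts = Counter(), Counter()
    for chars, path in zip(corpus, paths):
        for c, w in zip(chars, path):
            pair_counts[(c, w)] += 1
            root_counts[w] += 1
    return {(c, w): n / root_counts[w] for (c, w), n in pair_counts.items()}


def train_scr(corpus, cands, gen, bigram, max_iter=20):
    """Alternate best-path decoding and re-estimation until the paths stop changing."""
    paths = []
    for _ in range(max_iter):
        new_paths = [best_path(chars, cands, gen, bigram) for chars in corpus]
        if new_paths == paths:
            break
        paths = new_paths
        gen = reestimate(corpus, paths)
    # Keep only pairs whose root differs from the surface character.
    pairs = {(w, c) for chars, path in zip(corpus, paths)
             for c, w in zip(chars, path) if w != c}
    return pairs, gen
```

Called with a toy corpus such as `[["台", "大"]]`, candidate lists for each character, and initial tables, `train_scr` returns the set of extracted <root, abbreviation> pairs together with the final generation probabilities. Because hard counts discard every pair that is not on a best path, a practical version would need smoothing before each new decoding pass.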
To estimate the model parameters of the HMM-based recovery models, an unsupervised training method, such as the Baum-Welch re-estimation process (Rabiner and Juang, 1993) or a generic EM algorithm (Dempster et al., 1977), can be applied. If a word-segmented corpus is available, which is exactly our situation, both the parameter estimation problem and the root word candidate generation problem can be greatly simplified. Under such circumstances, the re-estimation method can still be applied to train the abbreviation probabilities iteratively in an unsupervised manner, while the word transition probabilities are estimated in a supervised manner from the segmented corpus.

Furthermore, given the segmented corpus, the initial candidate <root, abbreviation> pairs can be generated by assuming that every word in the segmented training corpus is a potential root for each of its single-character constituents. For example, if we have “台灣” as a word-segmented token, then the abbreviation pairs <台灣, 台> and <台灣, 灣> will be generated. By default, each single character is also its own abbreviation; this is required to handle the case in which the character is not really an abbreviation.

In addition, to estimate the initial abbreviation probabilities, each abbreviation pair is associated with the frequency count of the root word in the word segmentation corpus. This means that each single-character abbreviation candidate is equally weighted with respect to a root word. The equal weighting strategy, however, may not be appropriate (Chang and Lai, 2004). In fact, the character position and word length features, as mentioned in Equation (2), may be helpful. The initial probabilities are therefore weighted differently according to the position of the character and the length of the root. The weighting factors are taken directly from previous work (Chang and Lai, 2004).
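The candidate generation and initial weighting steps described above can be sketched as follows. This is only an illustration: the paper takes its position and length weights from (Chang and Lai, 2004) and does not list them, so the `weight` argument here is a hypothetical hook that defaults to equal weighting, and counting each identity pair once per character occurrence is likewise an assumption.

```python
from collections import Counter


def bit_pattern(root, position):
    """'1' marks the kept character and '0' a deleted one, e.g. ('台灣', 0) gives '10'."""
    return "".join("1" if i == position else "0" for i in range(len(root)))


def initial_counts(segmented_corpus, weight=lambda bits: 1.0):
    """Weighted counts of <root, abbreviation> candidates, keyed by (root, char)."""
    counts = Counter()
    for sentence in segmented_corpus:
        for word in sentence:
            for pos, char in enumerate(word):
                if len(word) > 1:
                    # Every multi-character word is a potential root of each of its characters.
                    counts[(word, char)] += weight(bit_pattern(word, pos))
                # By default each character is also its own abbreviation (assumed count of 1).
                counts[(char, char)] += 1
    return counts


def initial_probabilities(counts):
    """Normalize the counts per root to obtain initial values of P(c | w)."""
    totals = Counter()
    for (root, _), n in counts.items():
        totals[root] += n
    return {(root, char): n / totals[root] for (root, char), n in counts.items()}


corpus = [["台灣", "大學"], ["台灣"]]  # toy segmented corpus, not from ASWSC-2001
probs = initial_probabilities(initial_counts(corpus))
print(probs[("台灣", "台")], probs[("台灣", "灣")])
```

Passing a different `weight` function, for example one that favors the first character of a two-character root, changes the initial probabilities without touching the candidate enumeration, which mirrors the separation between lexeme counts and positional features in Equation (2).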
Finally, before the initial probabilities are re-estimated, Good-Turing smoothing (Katz, 1987) is applied to the raw frequency counts of the abbreviation pairs in order to assign probability mass to unseen pairs.

4. Experiments

To evaluate the SCR Model, the Academia Sinica Word Segmentation Corpus (dated July, 2001), ASWSC-2001 (CKIP, 2001), is adopted for parameter estimation and performance evaluation. Among the 94 files of this balanced corpus, 83 (13,086KB) are randomly selected as the training set and 11 (162KB) are used as the test set.

Table 1 shows some examples of atomic abbreviation pairs acquired from the training corpus. Note that the acquired abbreviation pairs are not merely named entities as one might expect; a wide variety of word classes have actually been acquired. The examples here partially justify the feasibility and usefulness of using the SCR model to acquire atomic abbreviation pairs from a large corpus.

| Abbr:Root | Example | Abbr:Root | Example | Abbr:Root | Example | Abbr:Root | Example |
|---|---|---|---|---|---|---|---|
| 籃:籃球 | 籃賽 | 宣:宣傳 | 文宣 | 網:網路 | 網咖 | 設:設計 | 工設系 |
| 檢:檢查 | 安檢 | 農:農業 | 農牧 | 股:股票 | 股市 | 湖:澎湖 | 臺澎 |
| 媒:媒體 | 政媒 | 艙:座艙 | 艙壓 | 韓:韓國 | 韓流 | 海:海洋 | 海生館 |
| 宿:宿舍 | 男舍 | 攜:攜帶 | 攜械 | 祕:祕書 | 主秘 | 文:文化 | 文建會 |
| 臺:臺灣 | 臺幣 | 汽:汽車 | 汽機車 | 植:植物 | 植被 | 生:學生 | 新生 |
| 漫:漫畫 | 動漫 | 咖:咖啡店 | 網咖 | 儒:儒家 | 新儒學 | 新:新加坡 | 新國 |
| 港:香港 | 港人 | 職:職業 | 現職 | 盜:強盜 | 盜匪 | 花:花蓮 | 花東 |
| 滿:滿意 | 不滿 | 劃:規劃 | 劃設 | 房:房間 | 機房 | 資:資訊 | 資工 |

Table 1. Examples of atomic abbreviation pairs.

Several submodels using different feature sets for estimating the abbreviation probabilities (Equation (2)) are investigated in order to see their effects on the performance. The performance improves successively as more features are added. Overall, using the lexemes, the positional feature, and the length feature together achieves the best results.

The iterative training process, outlined in the previous section, converges quickly, after 3~4 iterations. The numbers of unique abbreviation patterns for the training and test sets are 20,250 and 3,513, respectively.
Since the numbers of patterns are large, a rough estimate of the acquisition accuracy is obtained by randomly sampling 100 samples of the <root, abbreviation> pairs. Each sampled pattern is then manually examined to see if the root is correctly recovered. The precision is estimated at 50% for the test set and 62% for the training set. Since the SCR model uses an over-generative assumption to enumerate potential roots for all characters, the recall is expected to be high. Under such circumstances, the recall rate is not particularly interesting. Furthermore, it is hard to estimate the recall for a large corpus. Therefore, the recall is not estimated. Although a larger sample may be necessary to get a better estimate, the preliminary result is encouraging.

5. Concluding Remarks

Chinese abbreviations, which form a special kind of unknown word, are widely seen in modern Chinese texts. This results in difficulty for correct Chinese language processing. Mining a large abbreviation lexicon automatically is therefore highly desirable. In particular, since abbreviated words are generated continually, it is desirable to find the “atomic” abbreviation units down to the single-character level, together with their potential root words. In this work, we have adapted a unified word segmentation model (Chang and Lai, 2004), which is able to handle the kind of “errors” introduced by the abbreviation process, for mining possible roots of all Chinese characters. An iterative training process, based on a Single Character Recovery (SCR) Model, is developed to automatically acquire an abbreviation dictionary for single-character abbreviations from large corpora. The proposed SCR Model achieves 62% and 50% precision for the training set and the test set, respectively.
For systems that need to handle unlimited multiple-character abbreviations, the automatically constructed “atomic abbreviation dictionary” could be invaluable.

Acknowledgements

This work is supported by the National Science Council (NSC), Taiwan, Republic of China (ROC), under the contract NSC 93-2213-E-260-015.

References

Chang, Jing-Shin and Keh-Yih Su, 1997. “An Unsupervised Iterative Method for Chinese New Lexicon Extraction,” International Journal of Computational Linguistics and Chinese Language Processing (CLCLP), 2(2): 97-148.

Chang, Jing-Shin and Yu-Tso Lai, 2004. “A Preliminary Study on Probabilistic Models for Chinese Abbreviations,” Proceedings of the Third SIGHAN Workshop on Chinese Language Learning, pages 9-16, ACL-2004, Barcelona, Spain.

Chiang, Tung-Hui, Jing-Shin Chang, Ming-Yu Lin and Keh-Yih Su, 1992. “Statistical Models for Word Segmentation and Unknown Word Resolution,” Proceedings of ROCLING-V, pages 123-146, Taipei, Taiwan, ROC.

CKIP, 2001. Academia Sinica Word Segmentation Corpus, ASWSC-2001 (中研院中文分詞語料庫), Chinese Knowledge Information Processing Group, Academia Sinica, Taipei, Taiwan, ROC.

Dempster, A. P., N. M. Laird, and D. B. Rubin, 1977. “Maximum Likelihood from Incomplete Data via the EM Algorithm,” Journal of the Royal Statistical Society, 39 (b): 1-38.

Katz, Slava M., 1987. “Estimation of Probabilities from Sparse Data for the Language Model Component of a Speech Recognizer,” IEEE Trans. ASSP-35 (3).

Lin, Ming-Yu, Tung-Hui Chiang and Keh-Yih Su, 1993. “A Preliminary Study on Unknown Word Problem in Chinese Word Segmentation,” Proceedings of ROCLING VI, pages 119-142.

Rabiner, L., and B.-H. Juang, 1993. Fundamentals of Speech Recognition, Prentice-Hall.