Media in performance: Interactive spaces for dance, theater, circus

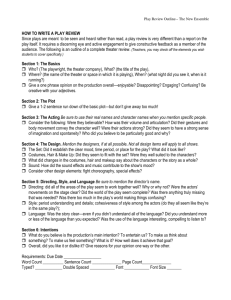
