ARTICLE IN PRESS

Consciousness

and

Cognition

Consciousness and Cognition xxx (2004) xxx–xxx

www.elsevier.com/locate/concog

Opposition logic and neural network models

in artificial grammar learningq

John R. Vokeya,* and Philip A. Highamb

a

Department of Psychology and Neuroscience, University of Lethbridge, Lethbridge, Alta., Canada T1K 3M4

b

University of Southampton, UK

Received 27 May 2004

Available online

Abstract

Following neural network simulations of the two experiments of Higham, Vokey, and Pritchard (2000),

Tunney and Shanks (2003) argued that the opposition logic advocated by Higham et al. (2000) was incapable of distinguishing between single and multiple influences on performance of artificial grammar

learning and more generally. We show that their simulations do not support their conclusions. We also

provide different neural network simulations that do simulate the essential results of Higham et al. (2000).

Ó 2004 Elsevier Inc. All rights reserved.

Keywords: Conscious; Unconscious; Controlled; Automatic; Implicit learning; Opposition logic; Dissociation logic;

Connectionist modelling; Simple recurrent network; Autoassociative network

1. Introduction

Tunney and Shanks (2003) responded to our experiments in which we used opposition logic to

separate controlled from automatic influences in artificial grammar learning (Higham, Vokey, &

Pritchard, 2000). They used a simple neural network simulation that they claimed produced

similar results without the need of separate processes or influences. There is a somewhat disq

Preparation of this work was supported by a Natural Sciences and Engineering Research Council of Canada

operating grant to J.R.V. and a British Academy research grant to P.A.H. We thank Professor Lee Brooks for his very

helpful comments on an earlier draft of this paper.

*

Corresponding author. Fax: +1-403-329-2555.

E-mail address: vokey@uleth.ca (J.R. Vokey).

1053-8100/$ - see front matter Ó 2004 Elsevier Inc. All rights reserved.

doi:10.1016/j.concog.2004.05.008

ARTICLE IN PRESS

2

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

missive tone in Tunney and Shanks (2003) that seems to imply that a major contention that we

made has been disproven. We are not sure what claim we have made that has been disproven by

their simulation, but we do think that the value of the experiments reported by Higham et al.

(2000) is being underestimated in their discussion.

Tunney and Shanks (2003) argued for a single memory system, which we also have consistently

argued for over at least the last 10 years (see, e.g., the ‘‘episodes and abstractions’’ section of

Higham et al., 2000). This claim is not a matter of contention between us, and, indeed, is rather a

growing consensus in the literature (e.g., Banks, 2000; Palmeri & Flanery, 2002; Ratcliff, Van

Zandt, & McKoon, 1995). What appears to be at contention is whether the opposition procedure

provides evidence that is usefully interpreted as a distinction between controlled and automatic

influences. Manipulations intended to vary the degree of participants’ control, such as deadlines

(e.g., Higham et al., 2000; Toth, 1996), divided attention (e.g., Debner & Jacoby, 1994; Jacoby,

1991), or delayed testing (e.g., Higham et al., 2000; Jacoby, Kelley, Brown, & Jasechko, 1989a),

produce separable effects when investigated with the opposition procedure. Whether this separation of effects is called a difference in influences or processes or changes of parameters within a

single system, the opposition procedure has produced clear and stable patterns of results in research on memory, word perception, and artificial grammar learning. Over a wide range of experimental variations, the effects of these variables are computationally separable into controlled

and automatic components, both of which may be manipulated without influencing the other:

deadlines and divided attention selectively affect the controlled component (e.g., Toth, 1996;

Yonelinas & Jacoby, 1994), whereas variations in such things as habit strength and proactive

interference do so for the automatic component (Hay & Jacoby, 1996; Jacoby, Debner, & Hay,

2001). Furthermore, this pattern of influence is clear and simple using opposition logic, as opposed to its traditional alternatives, which was the central contrast of our paper.

In what follows, we briefly review the critical results of Higham et al. (2000), and Tunney and

Shanks’ neural network simulation of them. We demonstrate that their model failed to reproduce

the central pattern of dissociations from Higham et al. (2000). We present a different neural

network simulation that provides a better fit with the results, and, finally, discuss the implications

of such models for opposition logic.

2. Higham, Vokey, and Pritchard (2000)

Higham et al. (2000) conducted two artificial grammar experiments during which participants

first learned training items all of which conformed to a finite state grammar. Unlike most artificial

grammar experiments (e.g., Higham, 1997a, 1997b; Reber, 1967, 1989; Vokey & Brooks, 1992),

two different grammars were used to construct the training stimuli, henceforth referred to as

Grammar A (GA) and Grammar B (GB), and participants studied the strings under headings that

distinguished between the grammars. Participants were not told anything about the rule structures

until the beginning of the test phase, at which point, participants were informed that the rule

structures existed, but they were not provided with any details. Instead, participants were required

to classify a series of test strings in one of two ways. In the in-concert (IC) condition, participants

were asked to rate test strings ‘‘grammatical’’ if they conformed to either grammar, and to rate

‘‘nongrammatical’’ items that conformed to neither grammar. In the opposition (O) condition,

ARTICLE IN PRESS

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

3

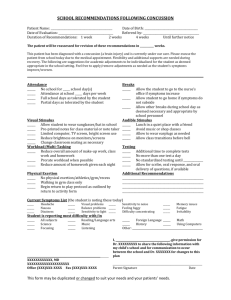

Table 1

Assignment rates in the in-concert, opposition, and baseline conditions as a function of standard vs. deadline test

(Experiment 1) and immediate vs. delayed test (Experiment 2) conditions in the original Experiments 1 and 2 of Higham

et al. (2000)

Conditions

Experiment 1

Standard test

Deadline test

Experiment 2

Immediate test

Delayed test

Experimental category

Effects

Dissociation

index

In-concert

Opposition

Baseline

Controlled

Automatic

.69

.61

.48

.53

.37

.41

.21

.08

.11

.12

.14

.46

.41

.35

.40

.26

.26

.11

.01

.09

.13

.14

Note. Controlled effects are computed as (in-concert ) opposition) and automatic effects as (opposition ) baseline).

The dissociation index is then computed as the difference between these two effects over the two test conditions of each

simulated experiment.

participants were required to rate test strings ‘‘grammatical’’ only if they conformed to GB; all

other items were to be rated ‘‘nongrammatical.’’

Higham et al. (2000) reasoned that in the IC condition, both knowledge whose influence could

be controlled by participants (i.e., applied if needed, or discounted if not), and knowledge whose

influence could not be controlled (i.e., applied regardless of intent) would contribute to the

likelihood of endorsing the GA test strings. Thus, controlled and automatic influences were inconcert. In contrast, in the O condition, only knowledge whose influence was automatically applied would contribute to the likelihood of endorsing GA items. If the knowledge applied at test

was under the control of the participant, it would not have contributed to false-alarming to the

GA items because this would have resulted in performance that was counter to the demands of the

O instructions. Thus, we reasoned that a comparison of GA endorsements in the IC and O

conditions provided an estimate of the controlled influence of learning. On the other hand, a

comparison of the rate of endorsing GA items in the O condition and the rate of endorsing items

that were nongrammatical (NG) with respect to both GA and GB (i.e., the baseline [B] condition)

provided an estimate of automatic or uncontrolled influences of learning.1

In support of this distinction, Higham et al. (2000) found that manipulating the amount of time

that participants had to provide responses at test (either 1s ¼ deadline testing condition, or no

time limit ¼ standard testing condition), had an effect on the controlled influence, but no effect on

the automatic one (see Table 1). This dissociation was predicted a priori because previous research

in memory has found that response deadlines produce effects exclusively on controlled memory

influences (e.g., Toth, 1996; Yonelinas & Jacoby, 1994).

In Experiment 2, Higham et al. (2000) derived the IC and O conditions from a source-monitoring test. After training similar to Experiment 1, participants were asked to classify test strings

1

Opposition logic has traditionally been used to separate automatic and controlled influences of memory in such

tasks such as recognition, and forms the basis of the process dissociation procedure (Jacoby, 1991). However, although

the process dissociation procedure relies on the logic of opposition, it also assumes that (a) the two influences revealed

by the logic are identified with two, distinct memory processes, and (b) that these two processes are functionally

independent. As we see it, it is not necessary to equate influences and memory processes in this way. The two influences

revealed by the logic of opposition could arise from any number of memory processes, independent or otherwise.

ARTICLE IN PRESS

4

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

as either belonging to GA, GB, or neither grammar. Higham et al. (2000) reasoned that in cases

where grammatical strings from either grammar were assigned to the correct grammar (i.e., GA

string assigned to GA [GAA ] or GB string assigned to GB [GBB ]), both knowledge that was

controllable, and knowledge that had an automatic influence on classification, were working inconcert. Therefore, the average of the GAA and GBB rates constituted the IC condition. In

contrast, in cases where grammatical strings from either grammar were assigned to the other

grammar (i.e., GA string assigned to GB [GAB ] or GB string assigned to GA [GBA ]), only

knowledge that had an automatic influence on classification was operative. Therefore, the average

of the GAB and GBA rates constituted the O condition. Just as in Experiment 1, a comparison of

the IC and O conditions provided an estimate of controlled influences, whereas a comparison of O

against B (the average of NG items assigned to GA [NGA ] and NG items assigned to GB [NGB ])

provided an estimate of automatic influences. Additionally, support for this distinction was obtained in that manipulating the retention interval between training and test (either immediate test,

or 12 day interval) had an effect on the controlled influence, but left the automatic one invariant

(see Table 1). This dissociation was predicted because some research in memory has shown that

retention interval selectively affects controlled influences of memory, leaving automatic influences

intact (e.g., Jacoby et al., 1989a).

Tunney and Shanks (2003), in contrast, argued that opposition logic, as we applied it within

our experiments, is not able to provide evidence for two qualitatively separable types of influence

in classification and memory tasks. For example, Tunney and Shanks (2003, p. 202) stated ‘‘we

question whether dissociations . . . require any separation of influences at all.’’ This is a serious

claim, and one which is deserved of attention, if for no other reason than literally hundreds of

experiments have been conducted using opposition logic since Jacoby et al. (1989a, 1989b) first

introduced it over a decade ago.

3. The Tunney and Shanks (2003) model

Tunney and Shanks (2003) simulated the two experiments on the learning of artificial grammars

of Higham et al. (2000) using cosine similarities derived from the learning of the study items in a

variant of a serial, recurrent network connectionist model (Elman, 1988), often referred to as

a ‘‘simple recurrent network,’’ or SRN. It was first proposed as a model of the learning of

single artificial grammars by Servan-Schreiber, Cleeremans, and McClelland (1989) (see also

Cleeremans, 1993; Cleeremans & McClelland, 1991). This SRN has multiple layers of units,

including an input layer, a ‘‘hidden layer’’ trainable by error back-propagation, a ‘‘context layer,’’

and an output layer. The context layer holds the activation of the hidden layer from the previous

input. The model is trained by presenting, sequentially, one letter (or a start/stop) code (a

bit pattern indicating whether it is a given letter or not) at a time to the input units, and adjusting

the connection weights in the direction of reproducing the input on the output units. To model

the Higham et al. (2000) experiments, Tunney and Shanks (2003) added two further bits to the

letter codes to indicate whether the current letter was from either a GA or a GB item. Each

such sequence of codes (i.e., a training item) was presented 100 times, and 50 different runs

were performed with different settings of the learning rate and momentum parameters of the

model.

ARTICLE IN PRESS

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

5

Testing consisted of presenting each of the test items to the input units in much the same way,

except the weights were not adjusted, and each sequence was presented with both the GA and GB

units ‘‘off.’’ The complete set of outputs for a given test item was compiled into an output vector.

This output vector was then correlated (using the cosine of the angle between vectors) with the

corresponding input vector, once with the GA unit ‘‘on’’ (and GB ‘‘off’’), and again with the GB

unit ‘‘on’’ (and GA ‘‘off’’). So, if GAA were the cosine for that item with the GA input unit ‘‘on’’

in the correlated input vector, and GAB were the cosine for the correlated input vector with the

GB input unit ‘‘on’’, then if GAA were greater than GAB for a given test item, the SRN model

would be said to have ‘‘endorsed’’ that item as a GA item.

These cosines were converted into p values (i.e., response proportions) using a logistic function.

The logistic function has two parameters: a and b. a is the multiplicative parameter (similar to

slope) and b is the additive parameter, similar to intercept. The larger a is, the more spread-out the

cosine values will be from one another (e.g., small differences are magnified when converted to p

values); Tunney and Shanks (2003) refer to a as the sensitivity of the network to the discrimination

of grammatical from nongrammatical items. b is similar to bias—the tendency to respond independently of the cosine of a particular item. a and b were solved for by nonlinear, iterative fitting

to the means from Higham et al. (2000).

The IC condition for both experiments was simulated by selecting the higher of the two vector

cosines for a given item; O condition cosines were obtained from the input vector for each test

item with the GB inputs ‘‘on.’’ Thus, for each condition of each experiment there were 6 mean

values to fit, 3 (i.e., GA, GB, and NG) for each of the ‘‘in-concert’’ and ‘‘opposition’’ conditions,

with 2 free parameters for each fit. The relevant results of the Tunney and Shanks (2003) simulations are shown in Table 2. The effect of fitting the cosines to the means of the experiments of

Higham et al. (2000) was to produce (a) endorsement rates for the IC condition greater than that

for the O condition, which in turn was greater than the endorsement rate to the B condition (i.e.,

IC > O > B), and (b) lower a (sensitivity) values for the deadline and delay conditions, relative to

the standard or immediate conditions.

Tunney and Shanks (2003) argued that ‘‘the network successfully reproduced the pattern observed by Higham et al. (2000, p. 212)’’. Although it is true that the SRN successfully simulated

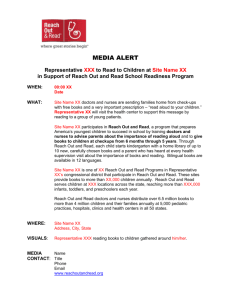

Table 2

Assignment rates in the in-concert, opposition, and baseline conditions as a function of standard vs. deadline test

(Experiment 1) and immediate vs. delayed test (Experiment 2) conditions in the Tunney and Shanks (2003) simulations

of Experiments 1 and 2

Conditions

Experiment 1

Standard test

Deadline test

Experiment 2

Immediate test

Delayed test

Experimental category

Effects

Dissociation

index

In-concert

Opposition

Baseline

Controlled

Automatic

.66

.61

.53

.53

.35

.41

.13

.08

.18

.12

).01

.46

.43

.36

.36

.26

.28

.10

.08

.09

.08

.01

Note. Controlled effects are computed as (in-concert ) opposition) and automatic effects as (opposition ) baseline).

The dissociation index is then computed as the difference between these two effects over the two test conditions of each

simulated experiment.

ARTICLE IN PRESS

6

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

the basic IC > O > B pattern, virtually any model that responded to the basic similarity and

structure of Higham et al.’s materials should produce this very crude ordering of conditions. The

IC > O difference occurs simply because a given grammatical item shares more features with other

members of the same grammatical set than with members of the other grammatical set. Simply

tallying the anchor position chunks reveals this fact. For example, the chunk RX, which occurs in

the last position of the first GA test item shown in Higham et al.’s Appendix B (VXVTRRX)

occurs twice in the same position of the GA training set, but never in the GB training set. The

O > B difference occurs, in contrast, because NG items, by definition, share fewer features with

either set of grammatical training items than the grammatical sets do (Higham et al., 2000).

A far more important consideration than the simple ordering of the IC, O, and B conditions is

the extent to which the SRN is able to simulate the effect of experimental manipulations on the

controlled effect, defined as IC minus O, and the automatic effect, defined as O minus B. We had

found that both response deadline (Experiment 1) and retention interval (Experiment 2) dissociated these effects. In particular, both manipulations significantly reduced the controlled effect,

but had no effect on the automatic effect. It was particularly these dissociations that validated

opposition logic and our method of separating controlled and automatic influences of learning; a

priori, both response deadline and retention interval have been shown to produce similar dissociations of controlled and automatic influences in memory research (e.g., response deadline: see

Toth, 1996; Yonelinas & Jacoby, 1994; retention interval: see Jacoby et al., 1989a). Hence, if our

measures of the controlled and automatic influences of artificial grammar learning were valid, we

should have found analogous results. We did; as can be seen in Table 1, response deadline in

Experiment 1 significantly reduced the controlled effect by .13, whereas the automatic effect remained invariant ().01). Similarly, retention interval in Experiment 2 significantly reduced the

controlled effect by .09, whereas the automatic effect, again, remained invariant (actually increasing numerically, but not significantly, by .04).

For the sake of ease of exposition in this paper, we have calculated a dissociation index for each

experiment, which reflects the degree to which an experimental manipulation dissociates the

controlled and automatic effects. This index is simply the difference between the effect the manipulation has on the controlled influence and the effect the manipulation has on the automatic

influence. Stated more formally, the dissociation index is

D ¼ ½ðIC OÞ1 ðIC OÞ2 ½ðO BÞ1 ðO BÞ2 ;

ð1Þ

where ‘‘1’’ and ‘‘2’’ refer to levels of the experimental manipulation (e.g., standard and deadline

testing conditions of Experiment 1). Higher values of the dissociation index reflect a greater reduction in the controlled effect relative to the automatic effect. As can be seen in Table 1, the

observed dissociation index for Experiment 1 was .14, and it was also .14 for Experiment 2. These

positive dissociation indices indicate that both deadline testing and retention interval decreased

the controlled effect more than the automatic effect; that is, both manipulations produced dissociations. Shown in Table 2 are the Tunney and Shanks’ (2003) simulations of our observed data

and the dissociation indices of the simulations for Experiments 1 and 2. In contrast to the observed data, the dissociation index reveals that the simulations did not even approximate the

dissociative pattern in our data. The simulated dissociation index for Experiment 1 was .01, and it

was ).01 for Experiment 2. The fact that the dissociation indices for the simulations were effectively zero for both Experiments 1 and 2 indicates that there was virtually no difference in the

ARTICLE IN PRESS

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

7

effect that either response deadline or retention interval had on controlled and automatic influences. In other words, Tunney and Shanks’ simulations completely failed to replicate the dissociations of our experiments, indeed, the most important aspects of our results.

Thus, we are unconvinced by one of Tunney and Shanks’ central arguments, namely that

their model is more parsimonious than describing the results in terms of automatic and

controlled influences, and that it embodies only one process or influence or system. Parsimony

is a criterion that makes sense only if the models being discriminated actually explain the extant

data.

4. The autoassociative network model

The failure of Tunney and Shanks’ (2003) neural network simulation to reproduce the critical

dissociations of Higham et al. (2000) motivated us to investigate whether another neural network

simulation could capture these patterns. Our simulations of the results of the two experiments of

Higham et al. (2000) used a linear autoassociative neural network. The hidden units of autoassociators are in the form of the eigenvectors of the covariance space of the input stimuli, which

can be solved for directly, rather than having to be learned via many iterations over epochs and

learning rate parameters, as with the hidden units of the SRN. Our stimuli are not abstract codes,

but are actual pixel-maps of the study items from the grammars. The resulting net of interconnection weights then serves as the episodic memory of the study experiences.

Such simple network memories are capable of reproducing many of the phenomena associated

with the learning of single artificial grammars, either by applying the logistic function to the

outputs of the model, as with the SRN (e.g., Dienes, 1992), or by using a simple signal detection

model applied to reproduction cosines to produce responses (Vokey, 1998), as we do here. For

example, the latter faithfully reproduces the independent grammaticality and specific similarity

effects of Vokey and Brooks (1992), and the above-chance detection of the letters that rendered

the nongrammatical stimuli of Reber and Allen (1978) inconsistent with their grammar (see

Dulaney, Carlson, & Dewey, 1984). Among other advantages of this approach, the use of pixelmaps allows for the direct, visual depiction of the stimuli and their various representations in the

network. We adapt it here to the two-grammar case of Higham et al. (2000).

4.1. Pixel-map coding of the stimuli

To create the pixel-map input patterns of the items, each of the five letters (M, R, T, V, and X)

used by the two grammars from Higham et al. (2000) were digitised as an 8 5 matrix of pixels

with values of 0 or 1, similar to that of letter patterns on a dot-matrix printer. To separate the

digitised letters from one another when combined to create the items, the eighth row of each letter

matrix was blank (i.e., all zeroes). Items were created by stacking the letter matrices vertically to

spell the item. As the maximum length in letters of an item was 7, shorter items were terminated

with empty (i.e., all zeroes) letter matrices so that every digitised item was represented as a 56 5

matrix of 0 or 1 pixel values. Each item, k, was then converted to a column vector (i.e., a 280 1

matrix), xk , by successively concatenating each of the rows of the digitised item. The set of 16

study items from GA were combined with those from GB to form a 280 32 matrix, X, obtained

ARTICLE IN PRESS

8

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

by storing each of the 32 study items as the columns of the matrix. The 24 test items from each of

GA and GB, and the 20 NG test items were similarly represented as a 280 68 matrix, T.

4.2. Autoassociative memory of the study items

The matrix of the 32 study stimuli, X, was learned as a linear autoassociative memory using the

Widrow-Hoff learning algorithm. This memory—the 280 pixels 280 pixels weight matrix, W,

relating the connection value between each pixel and every other pixel over the 32 study stimuli—was computed directly via the singular-value-decomposition (SVD) of the 280 pixels 32

matrix, X, of training stimuli (for details Abdi, Valentin, & Edelman, 1999). The SVD of a

rectangular matrix, X, is expressed as X ¼ UDVT , where U is the matrix of eigenvectors of XXT , V

is the matrix of eigenvectors of XT X, and D is the diagonal matrix of singular values—the squareroot of the eigenvalues of either XXT or XT X (as they are the same). In statistics, the related

eigendecomposition of the data matrix is called principal components analysis (PCA), and so such

linear autoassociators are often referred to as PCA neural networks. Accordingly, W can be

represented in terms of the eigenvectors, U, of the pixels pixels cross-products matrix, XXT , in

which dropping the usual diagonal matrix of eigenvalues reflects the spherising of the weight

matrix that would result from from Widrow-Hoff learning (see Abdi et al., 1999):

W ¼ UUT ;

^i , is

where UT is the transpose of the matrix U. Retrieval of a study item from this memory, x

computed as

^i ¼ Wxi ¼ Ul:m ðUTl:m xi Þ;

x

where the subscript, l : m, denotes the range of eigenvectors used to reconstruct the item. For our

purposes, the eigenvectors are ordered in terms of the magnitude of the associated eigenvalues

(i.e., proportion of variance accounted for), from most to least. As only the eigenvectors with

associated eigenvalues greater than zero are retained, there are at most as many eigenvectors as

there are items in the training set. The expression in parentheses can be interpreted as the projection, pijl:m , of the item into the space defined by the eigenvectors,

pijl:m ¼ UTl:m xi ;

where the values of pijl:m are the weights on each eigenvector used to reconstruct the item from the

linear combination of eigenvectors:

^i ¼ Ul:m pijl:m :

x

Thus, given the eigenvectors of the set as a whole, each item can be represented in a very reduced form as its projection weights on the eigenvectors. It is in this sense that the eigenvectors

can be seen as the ‘‘macrofeatures’’ of the items (see, e.g., Abdi, Valentin, Edelman, & O’Toole,

1995; O’Toole, Abdi, Deffenbacher, & Valentin, 1993; O’Toole, Deffenbacher, Valentin, & Abdi,

1994; Turk & Pentland, 1991; Valentin, Abdi, & O’Toole, 1994; Valentin, Abdi, O’Toole, &

Cottrell, 1994, for similar analyses of pixel-maps of photographs of faces). As it turns out, there is

a good deal of redundancy among the 32 study items such that the entire set can be represented

perfectly with only 27 (rather than 32) eigenvectors with eigenvalues greater than zero.

ARTICLE IN PRESS

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

9

4.3. Heteroassociative learning of the study item labels

The learning of the grammar labels (A/B) associated with the study items was simulated as a

simple classifier, a variant of a perceptron known as an ‘‘adaline’’ (see, e.g., Anderson, 1995). The

adaline is a simple linear heteroassociator with Widrow-Hoff error-correction, composed of a

multiple-unit input layer and one binary output unit, which, again, can be solved for directly. In

statistical terms, it is a simple linear discriminant function analysis of the inputs to predict the

binary classification of the items (see, e.g., Abdi et al., 1995). The inputs to the classifier were the

projection weights on the eigenvectors for each study item to produce a final set of discriminative

weights to predict the grammar category, in the form of a simple linear equation, from the projection weights for any given input item. This approach is equivalent to fitting a hyper-plane to the

projections of the study items that best (in the sense of the least-squares criterion) separates the GA

study inputs from the GB study inputs. The separation is perfect, if all the eigenvectors of the space

are used (a consequence of the Widrow-Hoff error-correction), or maximal (in the least-squares

sense) if some sub-set of eigenvectors is used. Thus, a study item from GA or GB is labelled by its

clustering with other GA or GB items in the eigenspace. These prediction weights were then frozen

for test, and used to predict the grammar category from the projection weights of the test stimuli.

4.4. Simulation of Experiment 1 of Higham et al. (2000)

The IC condition of Experiment 1 of Higham et al. (2000) required that participants discriminate the 64 test items into two classes, grammatical (i.e., from either GA or GB) and nongrammatical. That is, the two-grammar nature of the experiment has been reduced to a single

grammar experiment, with no requirement to discriminate between GA and GB. This discrimination was simulated as in Vokey (1998) by computing the cosine between each test item and its

reproduction from the projection of it into the eigenspace defined by the study items. The higher

the reproduction cosine, the more ‘‘familiar’’ the test item is to the autoassociative memory of the

study items. These reproduction cosines were computed as a function of different eigenevector

ranges used to reproduce each test item, just the first eigenvector, just the first two, three, . . ., to all

27 eigenvectors (the last of which, all 27 eigenvectors, would reproduce the study stimuli perfectly,

i.e., and yield cosines of 1.0). For each eigenvector range, the mean reproduction cosine was

computed and used as a signal-detection decision criterion: items with cosines greater than the

criterion were accepted as grammatical, otherwise nongrammatical. Using the mean of the scale is

known as the ‘‘optimal observer’’ criterion because it maximises the difference between hits and

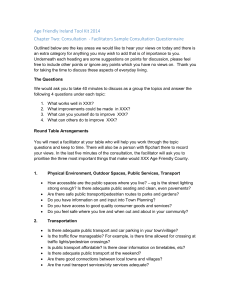

false-alarms. The proportions of GA, GB, and NG test items labelled ‘‘grammatical’’ as a

function of eigenvector range are shown in Fig. 1. As can be seen, even with projections derived

from just the first few eigenvectors, the grammatical test items are discriminated quite well from

the NG test items.

The O condition of Experiment 1 of Higham et al. (2000) required participants not only to

distinguish grammatical from nongrammatical test items, but to discriminate GA items from GB

items so as to label the former as also NG (i.e., not GB). To simulate this latter requirement, we

submitted the projection of any test item considered grammatical by the IC criterion to the

heteroassociative classifier. Such items were then either accepted as GB items, or rejected, again as

a function of the eigenvector range used to project the item.

ARTICLE IN PRESS

10

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

Fig. 1. The proportion of GA, GB, and NG test items labelled grammatical as a function of eigenvector range in the

simulation of the in-concert condition of Experiment 1.

Both the IC and O conditions of Experiment 1 of Higham et al. (2000) occurred under deadline

testing conditions for some participants, and under standard testing conditions for others. This

manipulation was simulated by using a different eigenvector range for each condition. As the

use of all 27 eigenvectors in which to project the items reproduces the study items perfectly, as

well as providing for 100% accuracy in the assignment of their category labels, it was considered

to represent a maximal knowledge state of participants in the standard testing condition. In

contrast, using only the first few eigenvectors (we used the first 3) in which to project the items

would produce the poorest performance on the study items and, accordingly, should provide

for an extreme version of the reduced knowledge state of participants in the deadline testing

condition.

The proportion of GA items endorsed as either grammatical (IC condition) or as GB (O

condition) items and the proportion of NG items endorsed as GB items (B condition) as a

function of standard vs. deadline test conditions are shown in Table 3. Given the extremes we used

for the standard and deadline conditions (in addition to the ‘‘optimal observer’’ criterion used for

ARTICLE IN PRESS

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

11

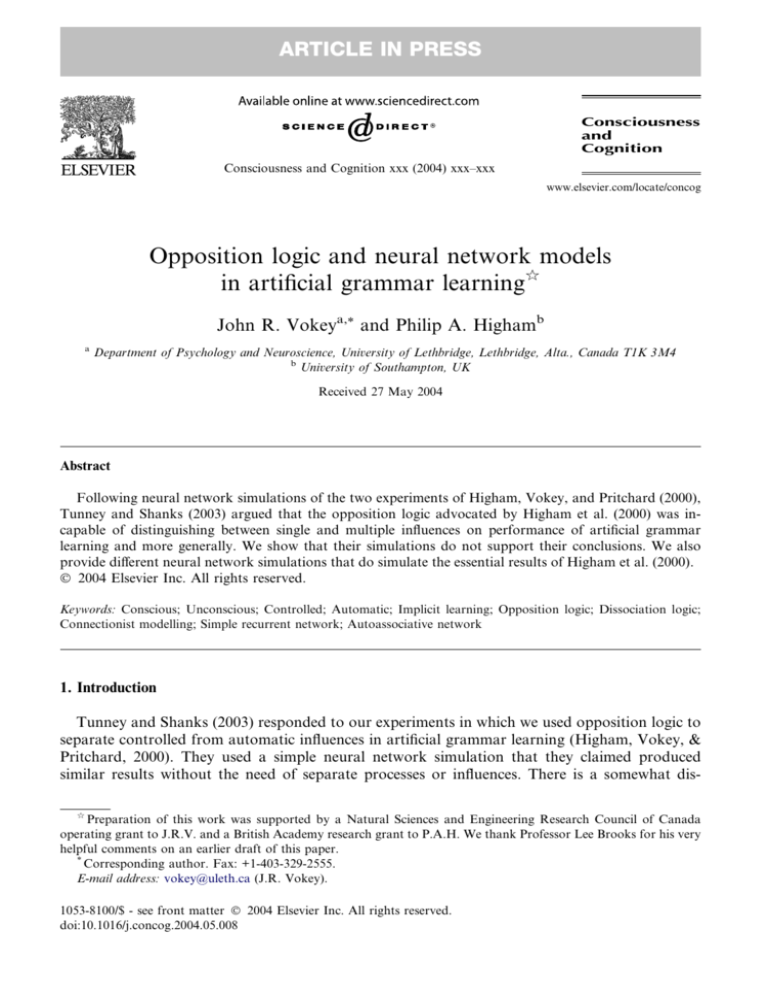

Table 3

Assignment rates in the in-concert, opposition, and baseline conditions as a function of standard vs. deadline test

(Experiment 1) and immediate vs. delayed test (Experiment 2) conditions in the simulations of Experiments 1 and 2

Conditions

Experiment 1

Standard test

Deadline test

Experiment 2

Immediate test

Delayed test

Experimental category

Effects

Controlled

Automatic

Dissociation

index

In-concert

Opposition

Baseline

1.00

.71

.54

.50

.15

.10

.46

.21

.40

.40

.25

.54

.38

.44

.40

.17

.10

.10

).02

.26

.30

.16

Note. Controlled effects are computed as (in-concert ) opposition) and automatic effects as (opposition ) baseline).

The dissociation index is then computed as the difference between these two effects over the two test conditions of each

simulated experiment.

the grammaticality judgement), the proportions shown in Table 3 do not resemble the magnitude

of the corresponding values from Experiment 1 of Higham et al. (2000). However, the pattern of

the results over conditions does resemble the pattern of Higham et al. (2000). Indeed, as shown in

Table 3, both the standard and the deadline conditions yielded the requisite IC > O > B pattern.

Furthermore, the critical comparisons producing the controlled (IC ) O) and automatic (O ) B)

effects, evince the pattern from Experiment 1 of Higham et al. (2000) over the standard and

deadline conditions: virtually constant values of the automatic effect, and reduction in the controlled effect moving from standard to deadline conditions. These values in turn result in a dissociation index of ð:46 :21Þ ð:40 :40Þ ¼ :25.

4.5. Simulation of Experiment 2 of Higham et al. (2000)

The simulation of Experiment 2 of Higham et al. (2000) was performed similarly, except that, as

in Higham et al. (2000), in-concert scores for grammatical items were obtained as the average of

GA and GB items correctly endorsed as GA and GB items, respectively; opposition scores for

grammatical items were the average of GA and GB items incorrectly endorsed as GB and GA

items, respectively; and baseline scores were obtained as the average of the NG items endorsed as

GA and GB items. The delayed condition was simulated in the same way as the deadline condition for the simulation of Experiment 1, by deriving the values from projecting into the space

defined by just the first three eigenvectors. The results are shown in Table 3. As in Experiment 2 of

Higham et al., 2000, the model successfully produced IC > O > B, and the controlled effect was

reduced in the delayed condition, but no such diminution occurred for the automatic effect. The

dissociation index for this simulation was ð:10 ð:02ÞÞ ð:26 :30Þ ¼ :16.

5. Discussion

What conclusions are we to draw from our successful simulations of the experiments in Higham

et al. (2000)? Tunney and Shanks (2003) claimed that their simulation of Higham et al.’s (2000)

results with a similarity-based neural network model necessarily undermined the logic of

ARTICLE IN PRESS

12

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

opposition because, in their view, such simulations implied only a single system. On the other

hand, we would argue that even such simple systems are susceptible to multiple influences, some

of which the system can successfully control, and some not.

In the case of our model, we would argue that the uncontrollable or automatic influence arises

from the structure of the stimuli that provides for the judgement of grammaticality (see Fig. 1):

the model could attempt to control this influence by adjusting the response criterion to reduce the

difference between hits—grammatical (GA and GB) items—and false-alarms—NG items, but

could not reduce it to zero, and still be able to discriminate some GA items from GB items. The

controllable influence in the model, in contrast, arises from the aspects of the structure of the

stimuli that allow for the discrimination between GA and GB items, provided in our model by the

heteroassociative classifier. When applied maximally (i.e., using all the eigenvectors), it is capable

of discriminating every GA study item from every GB study item. Had only study items been used

in the opposition condition, O would be zero, with no evidence of an automatic effect. However,

even when applied maximally to the test items, our model is incapable of discriminating every GA

test item from GB test items. Combined, these two influences produce the IC > O > B pattern.

Furthermore, reducing the number of eigenvectors has a greater effect on the controllable influence (the discrimination of GA from GB) than the automatic influence (the discrimination of

grammatical from nongrammatical), producing the dissociations.

This characterisation of the influences raises an issue that we believe is at the core of the

criticisms of our use of opposition logic. To wit: is it really the case that Higham et al.’s (2000)

results reduce to a mere issue of similarity among items? The argument that Tunney and Shanks

(2003) have made, which is echoed in Redington’s (2000) commentary on Higham et al. (2000), is

that all that our results have shown is that GA and GB items are similar to each other, whereas

NG items are distinctive. Deadline and retention intervals merely serve to make classification

more difficult, which virtually eliminates the controlled effect, as it is dependent on the difficult

discrimination between GA and GB items. On the other hand, the automatic influence is left

intact because the discrimination between grammatical and nongrammatical items is easy.

Rather than undermine opposition logic or our results, however, we regard the particular

similarity profile of our materials as a requirement to reveal automatic effects. If GA, GB, and NG

test items were all completely different from each other (i.e., there was no feature overlap between

sets) relative to the GA and GB training items, our participants would likely have produced very

large controlled effects, and virtually no automatic effects; that is, they would have had no

problem correctly accepting items in the IC condition, and correctly rejecting items in the O and B

conditions (i.e., IC > O ¼ B). In the same vein, had all test items been virtually indistinguishable

(i.e., very large feature overlap) relative to the GA and GB training items, it is unlikely that we

would have revealed any effects, controlled or otherwise (i.e., IC ¼ O ¼ B). Rather than being

viewed as some kind of design flaw or artefact with our materials, it is necessary that there be

some confusability between GA and GB test items relative their training stimuli, and some distinctiveness of the NG items (with respect to the GA and GB training items), in order to reveal

both controlled and automatic effects.

The fact that the effects obtained in our research can be re-described in terms of the similarity

profile of the particular items used has no bearing whatsoever on the suitability of the terms

controlled and automatic. People (and neural nets) in learning experiments are influenced by

similarity. Some of that influence of similarity is controllable, and some of it is not. An influence

ARTICLE IN PRESS

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

13

that remains intact despite instructions to control it is quite sensibly referred to as automatic.

Perhaps Tunney and Shanks (2003) do not believe in the notion of automaticity at all, but it is

certainly the case that we can find no well-reasoned argument for its denial based on Tunney and

Shanks simulations or their analysis of our experiments.

We have argued analogous points elsewhere (e.g., see Higham et al., 2000, pp. 465–467; Higham & Vokey, 2000, pp. 476–479). The apparent insight that GA–GB distinction is ‘‘hard,’’

whereas the (GA, GB)-NG discrimination is ‘‘easy,’’ does not really change anything. ‘‘Hard’’ and

‘‘easy,’’ after all, are just labels to describe participants’ experience performing some task; they are

not, in and of themselves, an explanation for that experience. Indeed, cast in our terms, participants find the ‘‘hard’’ (exclusion) task difficult because there are influences in this condition that

need to be controlled, whereas these same influences do not need to be controlled in the ‘‘easy’’

(inclusion) condition. Thus, as we see it, the labels ‘‘hard’’ and ‘‘easy’’ merely represent re-descriptions of the very phenomena we are investigating. In summary, opposition logic is intact, and

the most constructive interpretation of Higham et al.’s results is that they provided evidence of

two separable influences in artificial grammar learning.

References

Abdi, H., Valentin, D., & Edelman, B. (1999). Neural networks. Thousand Oaks, CA: Sage Publications.

Abdi, H., Valentin, D., Edelman, B., & O’Toole, A. (1995). More about the difference between men and women:

Evidence from linear neural networks and the principal component approach. Perception, 24, 539–562.

Anderson, J. A. (1995). An introduction to neural networks. Cambridge, Massachusetts: The MIT Press.

Banks, W. P. (2000). Recognition and source memory as multivariate decision processes. Psychological Science, 11,

267–273.

Cleeremans, A. (1993). Mechanisms of implicit learning: Connectionist models of sequence processing. Cambridge, MA:

MIT Press.

Cleeremans, A., & McClelland, J. L. (1991). Learning the structure of event sequences. Journal of Experimental

Psychology: General, 120, 235–253.

Debner, J. A., & Jacoby, L. L. (1994). Unconscious perception: Attention, awareness, and control. Journal of

Experimental Psychology: Learning, Memory, and Cognition, 20, 304–317.

Dienes, Z. (1992). Connectionist and memory array models of artificial grammar learning. Cognitive Science, 16, 41–79.

Dulaney, D. E., Carlson, R. A., & Dewey, G. I. (1984). A case of syntactical learning and judgment: How conscious and

how abstract? Journal of Experimental Psychology: General, 113, 541–555.

Elman, J. L. (1988). Finding structure in time. CRL Technical report 9001 (Tech. Rep.). University of California, San

Diego: Center for Research in Language.

Hay, J. F., & Jacoby, L. L. (1996). Separating habit and recollection: Memory slips, process dissociations, and

probability matching. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22, 1323–1335.

Higham, P. A. (1997a). Chunks are not enough: The insufficiency of feature frequency-based explanations of artificial

grammar learning. Canadian Journal of Experimental Psychology, 51, 126–137.

Higham, P. A. (1997b). Dissociations of grammaticality and specific similarity effects in artificial grammar learning.

Journal of Experimental Psychology: Learning, Memory, and Cognition, 23, 1029–1045.

Higham, P. A., & Vokey, J. R. (2000). The controlled application of a strategy can still produce automatic effects: Reply

to Redington (2000). Journal of Experimental Psychology: General, 129, 476–480.

Higham, P. A., Vokey, J. R., & Pritchard, J. L. (2000). Beyond dissociation logic: Evidence for controlled and

automatic influences in artificial grammar learning. Journal of Experimental Psychology: General, 129, 457–470.

Jacoby, L. L. (1991). A process dissociation framework: Separating automatic from intentional uses of memory.

Journal of Memory and Language, 30, 513–541.

ARTICLE IN PRESS

14

J.R. Vokey, P.A. Higham / Consciousness and Cognition xxx (2004) xxx–xxx

Jacoby, L. L., Debner, J. A., & Hay, J. F. (2001). Proactive interference, accessibility bias, and process dissociations:

Valid subjective reports of memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27, 686–

700.

Jacoby, L. L., Kelley, C. M., Brown, J., & Jasechko, J. (1989a). Becoming famous overnight: Limits on the ability to

avoid unconscious influence of the past. Journal of Personality and Social Psychology, 56, 32–338.

Jacoby, L. L., Woloshyn, V., & Kelley, C. (1989b). Becoming famous without being recognized: Unconscious influences

of memory produced by dividing attention. Journal of Experimental Psychology: General, 118, 115–125.

O’Toole, A. J., Abdi, H., Deffenbacher, K. A., & Valentin, D. (1993). A low-dimensional representation of faces in the

higher dimensions of the space. Journal of the Optical Society of America A, 10, 405–411.

O’Toole, A. J., Deffenbacher, K. A., Valentin, D., & Abdi, H. (1994). Structural aspects of face recognition and the

other race effect. Memory & Cognition, 22, 208–224.

Palmeri, T. J., & Flanery, M. A. (2002). Memory systems and perceptual categorization. In B. Ross (Ed.), The

psychology of learning and motivation (Vol. 42, pp. 141–189). San Diego, California: Elsevier Science.

Ratcliff, R., Van Zandt, T., & McKoon, G. (1995). Process dissociation, single-process theories, and recognition

memory. Journal of Experimental Psychology: General, 124, 352–374.

Reber, A. S. (1967). Implicit learning of artificial grammars. Journal of Verbal Learning and Verbal Behaviour, 5, 855–

863.

Reber, A. S. (1989). Implicit learning and tacit knowledge. Journal of Experimental Psychology: General, 118, 219–235.

Reber, A. S., & Allen, R. (1978). Analogy and abstraction strategies in synthetic grammar learning: A functionalist

interpretation. Cognition, 6, 189–221.

Redington, M. (2000). Not evidence for separable controlled and automatic influences in artificial grammar learning:

Comment on Higham, Vokey, and Pritchard (2000). Journal of Experimental Psychology: General, 129, 471–475.

Servan-Schreiber, D., Cleeremans, A., & McClelland, J. L. (1989). Learning sequential structure in simple recurrent

networks. In D. S. Touretzky (Ed.), Advances in neural information processing systems: Vol. 1. San Mateo, CA:

Morgan Kaufman.

Toth, J. P. (1996). Conceptual automaticity in recognition memory: Levels-of-processing effects on familiarity.

Canadian Journal of Experimental Psychology, 50, 123–138.

Tunney, R. J., & Shanks, D. R. (2003). Does opposition logic provide evidence for conscious and unconscious processes

in artificial grammar learning? Consciousness and Cognition, 12, 201–218.

Turk, M., & Pentland, A. (1991). Eigenfaces for recognition. Journal of Cognitive Neuroscience, 3, 71–86.

Valentin, D., Abdi, H., & O’Toole, A. J. (1994). Categorization and identification of human face images by by neural

networks: A review of of the linear autoassociative and principal component approaches. Journal of Biological

Systems, 2, 413–429.

Valentin, D., Abdi, H., O’Toole, A. J., & Cottrell, G. W. (1994). Connectionist models of face processing: A survey.

Pattern Recognition, 27, 1209–1230.

Vokey, J. R. (1998). ‘‘Pixel-based, linear autoassociative categorisation and identification of items from an artificial

grammar’’. Annual meeting of the Canadian Society for Brain, Behaviour, and Cognitive Science, Ottawa, Ontario.

Vokey, J. R., & Brooks, L. R. (1992). Salience of item knowledge in learning artificial grammars. Journal of

Experimental Psychology: Learning, Memory, and Cognition, 18, 328–344.

Yonelinas, A. P., & Jacoby, L. L. (1994). Dissociations of processes in recognition memory: Effects of interference and

response speed. Canadian Journal of Experimental Psychology, 48, 516–534.