Assignment 5

advertisement

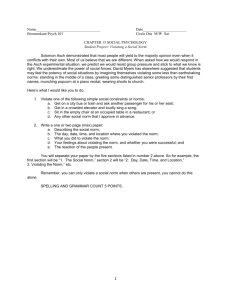

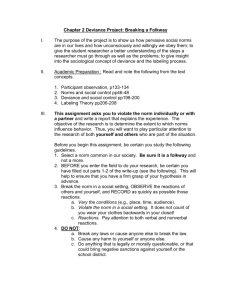

Johan Helsing, October 6, 2011 NUMA11/FMNN01 fall ’11: Numerical Linear Algebra Numerical Analysis, Centre for Mathematical Sciences Assignment 5 Deadline: Friday September 30. Purpose: more practice on linear systems. Problem 1(E). Do exercise 18.1 i Trefethen and Bau. Give exact answers to subproblems (a) and (b) and numerical approximations to (c), (d), and (e). Subproblem (e) needs four perturbations, δb or δA, corresponding to the four condition numbers κy(b) , κx(b) , κy(A) , and κx(A) in the table on page 131. It is possible that the same δb or δA works for several cases. Construct your perturbations in terms of quantities that appear in a full SVD of A and scale them so that ||δb||2 = ||δA||2 = 10−6 . Verify according to the following: Use the exact expressions for A, P , x, y, and b. Construct à = A + δA, P̃ = ÃÃ+ , or b̃ = b + δb for the various cases. Compute ỹ = P b̃ or ỹ = P̃ b and solve Ax̃ = b̃ or Ãx̃ = b for x̃. Insert your computed x̃ and ỹ in the condition number formula κz(w) = ||z̃ − z|| ||w|| , ||z|| ||δw|| where z(w) is the appropriate quantity, and compare to theoretical predictions. It is enough if your perturbation for κy(A) achieves 63% of the upper bound, and 99% is enough for κx(A) . Hand in program and output. (2p) Problem 2(PF). Do exercise 19.2 in Trefethen and Bau. Find the name of the Matlab function whose action is described by the code. Hint: The program is related to topics dealt with on pages 81-84 and 143. Problem 3(E). Write a Matlab function mysolve which solves m × m linear systems Ax=b, using LU factorization without pivoting (Algorithm 20.1) and forward- and back substitution (Algorithm 17.1). As for details in the LU factorization, follow the instructions of exercise 20.4. Furthermore, store L and U in the same matrix called LU. Structure yous solver as follows function x=mysolve(A,b,m) LU=LUdecomp(A,m); y=forwsubst(LU,b,m); x=backsubst(LU,y,m); where the function LUdecomp looks something like this page 1 of 6 function LU=LUdecomp(LU,m) for k=1:m-1 LU(k+1:m,k)=???????????????????; LU(k+1:m,k+1:m)=???????????????????????????????????????; end The functions doing forward- and back substitution should be even simpler. The operators “\” and “inv” must, however, not be used. Compute the relative error in the solution x and compare with the error in the solution given by A\b. Use a few reasonbly large system matrices A, right hand sides b, and reference solutions xref generated by the function function [A,xref,b]=Abgen(m) randn(’state’,0) A=randn(m)+1i*randn(m); xref=randn(m,1)+1i*randn(m,1); b=A*xref; Compare also with the theoretical upper bound O (cond(A)²mach ) for a backward stable algorithm. See, further, comment on page 6. Hand in program, output, and conclusions about stability. (1p) Problem 4(E). Implement partial pivoting in the solver you constructed for Problem 3 by adding a few lines. Structure your new solver as follows function x=mysolvePiv(A,b,m) [LU,ind]=LUdecompPiv(A,m); y=forwsubst(LU,b(ind),m); x=backsubst(LU,y,m); Here LUdecompPiv now performs LU factorization with partial pivoting. L and U are still stored in the same matrix LU. The vector ind saves the row exchanges by letting its k:th element tell which row in the original system should appear on row k after completion of the entire pivoting process. Your code should work both for real and complex matrices. Can you spot any improvement? Hand in program and some output. (1p) Problem 5(PF). Your solver could perhaps be made even better. Not in term of speed, but in terms of accuracy. When you have computed your solution x you can also compute the residual res=b-A*x. It is now possible to perform forward- and back substitution once more, on res rather than on b, and in a cheap way extract a correction which, when added to the previously computed solution, hopefully makes the solution even more accurate. Try this on some large systems. Can you spot any improvement? page 2 of 6 Problem 6(E). Gaussian elemination with partial pivoting may be the most efficient numerical method for solving general full-rank m × m linear systems. Unfortunately, the operation count is ∼ 2m3 /3. Luckily, system matrices arising in real-life applications are seldom general, but often have some special property which could be taken advantage of in more efficient special purpose algorithms: “Symmetry”, “Positive definiteness”, “Sparsity”, “Clustered eigenvalues”, “Band strucure”, “Possible decomposition in a simple part and a low-rank part”, “Toeplitz”, “Triangular”, “Hessenberg” are a few examples. This problem deals with “Vandermonde”. The Vandermonde matrix was introduced in Problem 1 of Assignment 4. There you did a least-squares approximation for the coefficients of an 11 degree polynomial approximating the function cos(4t) on the interval [0, 1] based on 50 interpolation points. Had you plotted the pointwise error of the actual approximating polynomial along [0, 1] it would have looked as in Figure 1. Approximation error 0 10 −2 10 Relative error in max−norm −4 10 −6 10 −8 10 −10 10 −12 10 −14 10 −16 10 0 0.1 0.2 0.3 0.4 0.5 t 0.6 0.7 0.8 0.9 1 Figure 1: Interpolation error for cos(4t) approximated with a polynomial of degree 11. Here you shall do a straight-forward interpolation of that same function (no least-squares), for stability reasons scaled and shifted to the interval [−1, 1]. Use an interpolating polynomial of degree 19 based on 20 interpolation points placed according to the nodes of the Legendre polynomial of order 20. Most of the programming is already done on the next page... page 3 of 6 function ex56 close all t=mynodes(20); A=fliplr(vander(t)); condA=cond(A) b=f(t); % coeffbad=inv(A)*b; % 2n^3 FLOPs coeffml=A\b; % 2n^3/3 FLOPs coeffsp=special(t,b); % 5n^2/2 FLOPs % diff1=norm(coeffbad-coeffml)/norm(coeffml) diff2=norm(coeffbad-coeffsp)/norm(coeffml) diff3=norm(coeffml-coeffsp)/norm(coeffml) % tlong=linspace(-1,1,1000)’; fapproxbad=polyval(flipud(coeffbad),tlong); fapproxml=polyval(flipud(coeffml),tlong); fapproxsp=polyval(flipud(coeffsp),tlong); % ftrue=f(tlong); apperrbad=max(1e-16,abs(fapproxbad-ftrue)/norm(ftrue,’inf’)); apperrml=max(1e-16,abs(fapproxml-ftrue)/norm(ftrue,’inf’)); apperrsp=max(1e-16,abs(fapproxsp-ftrue)/norm(ftrue,’inf’)); % semilogy(tlong,apperrbad,’v’,tlong,apperrml,’o’,tlong,apperrsp,’*’) axis([-1 1 1e-16 1e0]) grid on title(’Approximation error’) legend(’inv’,’Gauss’,’Special’) xlabel(’t’) ylabel(’Relative error in max-norm’) % function fout=f(x); fout=cos(2*(x+1)); % function T=mynodes(n) % *** Theorem 37.4 in Trefethen and Bau *** k=1:n-1; B=k./sqrt(4*k.*k-1); T=sort(eig(diag(B,1)+diag(B,-1))); Now implement the function special so that coeffsp and coeffml become essentially the same, but so that the operation count is reduced from page 4 of 6 ∼ 2n3 /3 to ∼ 5n2 /2. This problem can be solved by a literature study. Further questions: (0.5p) a) The vectors coeffsp and coeffml will not be the same in finite precision. Since the condition number of A is 107 we could expect a relative difference between the two vectors of up to 10−9 . Does this happen? (0.1p) b) What can be said about the vector coeffbad? Is it really a bad solution and, if so, in what sense? Is it decent in some sense? Try to quantify how much worse coeffbad is than coeffml, compared to an assumed exact solution coeff. (0.2p) c) Is one of the vectors coeffsp and coeffml likely to contain more accurate coefficients than the other? and if so, which and why? (0.1p) d) Does it help to know A−1 ? Suppose that coeffi = Ai ∗ b is used, and that Ai is A−1 to machine precision. Would the coefficients of coeffi be more, less, or equally accurate than those of coeffsp and coeffml? Would the interpolation based on coeffi be more, less, or equally accurate than the interpolations based on coeffsp and coeffml? Back your answer with some mathematical arguments. (0.3p) e) In view of the high condition number of A, explain the extremely small errors that you will see in the figure that the program produces. (0.3p) Hand in program, plots, and observations. Problem 7(E). Consider the following program function fastergauss n=input(’Give n: ’) m=2*n; A=randn(n); B=randn(n); u1=rand(n,1); u2=rand(1,n); v1=rand(n,1); v2=rand(1,n); C=[A u1*u2;v1*v2 B]; rhs=randn(m,1); % % % *** MATLAB’s backslash *** tic x0=C\rhs; t0=toc *** my own LU with partial pivoting *** tic x1=mysolvePiv(C,rhs,2*n); t1=toc page 5 of 6 Here a particular m × m matrix C and a random right hand side rhs are created. Two solutions x0 and x1 are computed. The operation count is ∼ 2m3 /3 to leading order in m for both algorithms, since they essentially are the same. In theory x0 and x1 should therefore be computed equally fast. But since Matlab’s built-in functions are faster than code we write ourselves, t0 will be much smaller than t1. Now, construct a new solver which takes advantage of the special structure of C to compute a solution x2 with an operation count that is only ∼ m3 /6 to leading order in m, that is, asymptotically four times faster in theory. Make sure that your solution x2 agrees with x0 approximately as well as x1 does. Your new solver may use any of the variables n, m, A, B, u1, u2, v1, v2, C, and rhs as input. Hand in, program and output (timings and relative differences between solution vectors). (1p) If you are interested in how ideas connected to this problem can be used for extremely rapid construction of inverses to certain very large full matrices, see W.Y. Kong, J. Bremer and V. Rokhlin An adaptive fast direct solver for boundary integral equations in two dimensions, Appl. Comput. Harmon. Anal. 31(1), pp. 346–369, (2011), available in the Course Library. Comment to Problem 3 and 4. The theoretical upper bound to the relative forward error of a backward stable algorithm is given by Theorem 15.1 which in this case reads ||x̃ − x|| ≤ Cm · κ(A) · ²mach , ||x|| where Cm is a constant depending on m. The bound is valid for a particular algorithm and all input for each fixed dimension m in the limit of small ²mach . Uniformity with respect to m is not required. See pages 105 and 166 in the textbook. Naturally, we cannot change ²mach on our computer. But we know that ²mach is small. And we can change input A and b, and thereby get an idea of what Cm is, under the assumption that our algorithm indeed is backward stable. If the algorithm is not backward stable, there is no Cm . Note that the line randn(’state’,0) should be removed from the function Abgen and placed in the beginning of the main program if you want to generate several different system matrices A of the same dimension. page 6 of 6