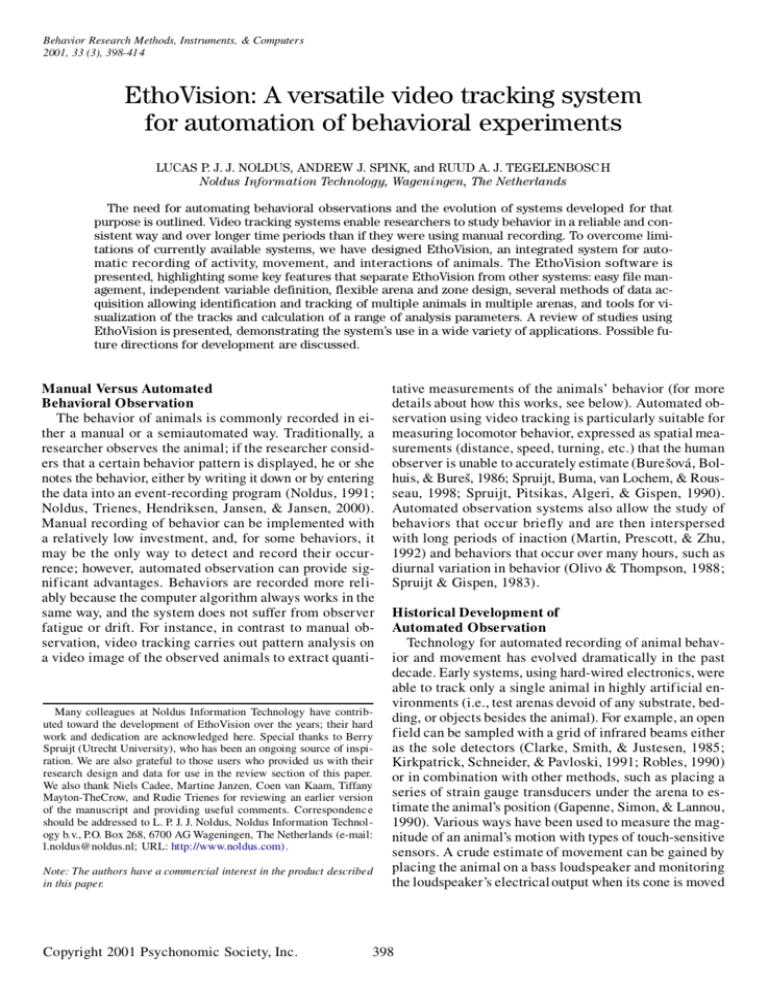

A versatile video tracking system for automation of advertisement