Introduction


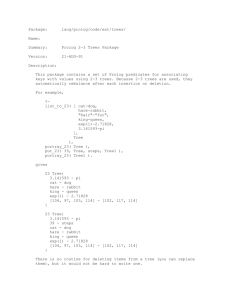

Verónica Dahl, Professor, Comp. Sci. Dept., Simon Fraser University, Canada; Marie Curie Chair of Excellence, Universidad de Tarragona, Spain

My scientific dream

- Bridge the gap between formal and empirical approaches
- Pluridisciplinarity: linguistics, logic, computing sciences, AI, cognitive sciences, internet, molecular biology → cognitive theories and methodologies

March 4: Intro to Natural Language Processing

- “Understanding” language
- Levels of language processing
- Recognition, analysis, synthesis
- Main problems: ambiguity, long distance dependencies, elided constituents, contextual and world knowledge, intentions, presuppositions
- Processing language through Prolog and DCGs, with examples

Levels of Language Processing

- Phonetic
- Lexical
- Syntactic
- Semantic
- Pragmatic

Main Types of Language Processing

- Recognition
- Analysis (parsing): by far the most studied
- Synthesis (generation)
- Single sentence vs. discourse

Main problems: ambiguity

- Lexical (part of speech): "The overflown bank"; "Teacher strikes idle kids"
- Syntactic (structural): "Time flies like an arrow"

Main problems: long distance dependencies

How to relate constituents that can be arbitrarily far apart, e.g. topicalization (the gap left by the fronted constituent is marked `__`):

- Logic, we love __.
- Logic, he thinks we love __.
- Logic, they pretended they did not know we love __.

Main problems: long distance dependencies (cont.)

Anaphora (e.g. relating a pronoun to its antecedent or postcedent):

- Mona Lisa smiled. Leonardo painted her smile.
- Ann smiled. Lissa frowned. Tom photographed her.
- Near her, Alice saw a Bread-and-Butterfly.

Main problems: elided constituents

How to guess material left implicit:

- The workshop was interesting and the talks [were] inspiring.

Flexibility is needed. Bottom-up approaches with some kind of constraint reasoning have helped (e.g. Datalog with constraints upon word boundaries).

Main problems: idioms, contextual and world knowledge, intentions

- He kicked the bucket.
- It’s too warm in here.
- Je m’appelle Monsieur Leblanc. Et vous? Nous aussi. ("My name is Monsieur Leblanc. And you?" "Us too.")
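The structural ambiguity of "Time flies like an arrow" can be made concrete with a small grammar that builds parse trees. The sketch below is only an illustration: the grammar, the tree shapes, and the helper `parses/2` are assumptions that do not appear in the slides, and it is written in the DCG notation that these slides introduce later. It assumes a Prolog system with standard DCG support and `phrase/2`.

```prolog
% Toy grammar (hypothetical, for illustration only): each nonterminal
% carries one argument that records the parse tree it builds.

s(s(NP, VP)) --> np(NP), vp(VP).

np(np(N))      --> n(N).               % "time"
np(np(N1, N2)) --> n(N1), n(N2).       % noun compound: "time flies"
np(np(D, N))   --> d(D), n(N).         % "an arrow"

vp(vp(V, PP))  --> v(V), pp(PP).       % "flies [like an arrow]"
vp(vp(V, NP))  --> v(V), np(NP).       % "like [an arrow]"

pp(pp(P, NP))  --> p(P), np(NP).

% Lexicon: "flies" and "like" each belong to two parts of speech.
d(d(an))    --> [an].
n(n(time))  --> [time].
n(n(flies)) --> [flies].
n(n(arrow)) --> [arrow].
v(v(flies)) --> [flies].
v(v(like))  --> [like].
p(p(like))  --> [like].

% parses(+Words, -Trees): collect every parse tree for Words.
parses(Words, Trees) :-
    findall(Tree, phrase(s(Tree), Words), Trees).
```

Under these assumptions, the query `?- parses([time, flies, like, an, arrow], Trees).` yields two trees: one where "time" is the subject and "flies" the verb, and one where "time flies" is a noun compound and "like" the verb. The lexical (part of speech) ambiguity of "flies" and "like" is what licenses the two structures.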
Main problems: presuppositions

- The king of France is bald.
- How many students learnt Mirandese last year?

Basic Prolog tools for NLP: DCGs

An example: two-word sentences. We’d like CF-like grammar rules, e.g.:

    sentence --> [Word1], [Word2].

We can have a similar plain Prolog rule:

    sentence(Input, Output) :-
        find_in(Input, W1, Rest),
        find_in(Rest, W2, Output).

    find_in([Word|Words], Word, Words).

N.B. find_in/3 is a primitive, but is called 'C'/3.

Sample query for recognizing a sentence:

    ?- sentence([it,rains], []).

DCGs compile into Prolog

E.g.

    s --> np, vp.

compiles into:

    s(Input, Output) :- np(Input, Rest), vp(Rest, Output).

Where words are involved, we get calls to 'C'. E.g.

    noun --> [moon].

compiles into:

    noun(Input, Output) :- 'C'(Input, moon, Output).

So DCG calls still require two added arguments:

    ?- sentence([the,moon,shines], []).

A first CF grammar in DCG

    % Syntax
    s --> np, vp.
    np --> d, n.
    np --> name.
    vp --> iv.
    vp --> tv, np.
    vp --> bv, np, pp.
    pp --> p, np.

CF grammar in DCG (cont.)

    % Lexicon
    d --> [the].
    n --> [sun].
    n --> [moon].
    n --> [world].
    name --> [gaia].
    name --> [helios].
    iv --> [shines].
    tv --> [reflects].
    tv --> [illuminates].
    bv --> [reflects].
    p --> [upon].
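To try the grammar above, the following is a minimal runnable sketch, assuming a Prolog system with standard DCG translation and `phrase/2` (for example SWI-Prolog). Two things here are assumptions and not part of the slides: the nonterminal `name` is renamed to `pn`, because `name --> ...` compiles to `name/2`, which clashes with a built-in predicate in many systems; and `recognize/1` is a hypothetical convenience wrapper.

```prolog
% Syntax (same rules as the slides, with name//0 renamed to pn//0;
% the rename is an assumption made to avoid the built-in name/2).
s  --> np, vp.
np --> d, n.
np --> pn.
vp --> iv.
vp --> tv, np.
vp --> bv, np, pp.
pp --> p, np.

% Lexicon
d  --> [the].
n  --> [sun].
n  --> [moon].
n  --> [world].
pn --> [gaia].
pn --> [helios].
iv --> [shines].
tv --> [reflects].
tv --> [illuminates].
bv --> [reflects].
p  --> [upon].

% recognize(+Words): hypothetical helper, true if Words is a sentence.
% phrase(s, Words) is equivalent to calling s(Words, []).
recognize(Words) :-
    phrase(s, Words).
```

Possible queries, under the assumptions above:

```prolog
?- recognize([the, moon, shines]).
?- recognize([helios, illuminates, gaia]).
?- recognize([the, moon, reflects, the, sun, upon, the, world]).
?- recognize([moon, the, shines]).   % fails: not derivable from s
?- phrase(s, Words).                 % enumerates sentences on backtracking
```

Calling the grammar through `phrase/2` supplies the two added list arguments automatically, so the example does not depend on whether a given system compiles terminals into calls to 'C'/3 or into something else. The last query leaves the word list unbound, which turns the same grammar from a recognizer into a simple generator.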