Risk Assessment and Management: Part 1


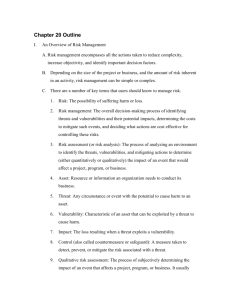

85-01-20.1
Will Ozier

Payoff

This article, the first in a two-part series, reviews the basic tools and processes of risk assessment and management and then provides a brief overview of the history of information risk assessment and management. A comprehensive listing of automated risk management software packages is provided. The second article in this series examines recent technical and regulatory developments and provides an approach that security practitioners can follow to assist in implementing a risk management program.

Problems Addressed

The information risk management process of identifying, assessing, and analyzing risk is poorly understood by most people. Although information risk management is a powerful concept, it is often shunned or given half-hearted support, even when regulation requires it, precisely because it is not well understood. In fact, many mistakenly believe that the associated technology of risk assessment is relevant only to major disasters. Yet there are now skilled information risk management experts and evolving tools that provide management with the ability to identify and cost-effectively manage the many varieties of information risk.

Key Terms and Concepts

To discuss the history and evolution of information risk assessment and analysis, several terms whose meanings are central to this discussion are first defined.

Annualized Loss Expectancy or Exposure (ALE)

This discrete value is derived, classically, from the following algorithm (see also the definitions for Single Loss Expectancy [SLE] and Annualized Rate of Occurrence [ARO]):

SINGLE LOSS EXPECTANCY x ANNUALIZED RATE OF OCCURRENCE = ANNUALIZED LOSS EXPECTANCY

To effectively identify risk and to plan budgets for information risk management and related risk mitigation activity, it is helpful to express loss expectancy in annualized terms.
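
As a minimal sketch of the ALE relation above, the following Python function multiplies a single loss expectancy by an annualized rate of occurrence. The function name `annualized_loss_expectancy`, its parameter names, and the sample figures are illustrative assumptions, not part of any risk management package.

```python
def annualized_loss_expectancy(sle: float, aro: float) -> float:
    """Return ALE = SLE x ARO (names are illustrative, not from the article).

    sle: single loss expectancy, in monetary units per occurrence
    aro: annualized rate of occurrence, in expected events per year
    """
    if sle < 0 or aro < 0:
        raise ValueError("SLE and ARO must be non-negative")
    return sle * aro


if __name__ == "__main__":
    # Hypothetical threat: a $500,000 loss expected about once every 10 years.
    print(annualized_loss_expectancy(500_000, 1 / 10))
```

Keeping SLE and ARO as separate inputs, rather than storing only their product, preserves the information needed later to tell a rare, severe threat from a frequent, minor one.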
For example, the preceding algorithm shows that the Annualized Loss Expectancy for a threat with a single loss expectancy of $1,000,000 that is expected to occur about once in 10,000 years is $1,000,000 divided by 10,000, or only $100.00. Factoring the ARO into the equation provides a more accurate portrayal of risk and helps establish a basis for meaningful cost/benefit analysis of risk reduction measures.

Annualized Rate of Occurrence (ARO)

This term characterizes, on an annualized basis, the frequency with which a threat is expected to occur. For example, a threat occurring once in 10 years has an ARO of 1/10 or 0.1; a threat occurring 50 times in a given year has an ARO of 50.0. The possible range of frequency values is from 0.0 (the threat is not expected to occur) to some whole number whose magnitude depends on the type and population of threat sources. For example, the upper value could exceed 100,000 events per year for minor, frequently experienced threats such as misuse of resources. For an example of how quickly the number of threat events can mount, imagine a small organization with about 100 staff members having logical access to an information processing system. If each of those 100 persons misused the system only once a month, misuse events would occur at the rate of about 1,200 events per year.

It is useful to note here that many confuse ARO, or frequency, with the concept of probability (defined below). Although the statistical and mathematical significance of these metrics tends to converge at about 1/100 and becomes essentially indistinguishable below that level of frequency or probability, the two increasingly diverge above 1/100, to the point where at 1.0 probability stops (i.e., certainty is reached) and frequency continues to mount undeterred.

Exposure Factor (EF)

This factor is a measure of the magnitude of loss or impact on the value of an asset, expressed within a range from 0% to 100% loss arising from a threat event.
This factor is used in the calculation of single loss expectancy, which is defined below.

Information Asset

This term represents the body of information an organization must have to conduct its mission or business. Information is regarded as an intangible asset separate from the media on which it resides. There are several elements of value to be considered: the simple cost of replacement, the cost of supporting software, and the several costs associated with loss of confidentiality, availability, or integrity. These last three elements (confidentiality, availability, and integrity) often dwarf all other values relevant to an assessment of risk. It should be noted as well that these three elements of value are not necessarily additive for the purpose of assessing risk. In both assessing risk and establishing cost-justification for risk-reducing safeguards, it is useful to be able to isolate safeguard effects among the three.

Clearly, for an organization to conduct its mission or business, the necessary information must be where it should be, when it should be there, and in the expected form. Further, if desired confidentiality is lost, results could range from loss of market share in the private sector to compromise of national security in the public sector.

Qualitative or Quantitative

These terms indicate the binary categorization of risk and information risk management techniques. Quantitative analysis attempts to assign independently objective numeric values (e.g., monetary values) to the components of the risk assessment and, finally, to the assessment of potential losses; qualitative analysis does not attempt to apply such metrics. In reality, there is a spectrum across which these terms apply, virtually always in combination. This spectrum may be described as the degree to which the risk management process is quantified.
If all elements (asset value, impact, threat frequency, safeguard effectiveness, safeguard costs, uncertainty, and probability) are quantified, the process may be characterized as fully quantitative.

It is virtually impossible to conduct a purely quantitative risk management project, because the quantitative measurements must be applied to the qualitative properties of the target environment. However, it is possible to conduct a purely qualitative risk management project. A vulnerability analysis, for example, may identify only the presence or absence of risk-reducing countermeasures (though even this simple qualitative process has a quantitative element in its binary method of evaluation). In summary, risk assessment techniques should be described not as either qualitative or quantitative but in terms of the degree to which such elementary factors as asset value, exposure factor, and threat frequency are assigned quantitative values.

Probability

This term characterizes the chance or likelihood, in a finite sample, that an event will occur. For example, the probability of getting a six on a single roll of a die is 1/6, or 0.16667. The possible range of probability values is 0.0 to 1.0. A probability of 1.0 expresses certainty that the subject event will occur within the finite interval. Conversely, a probability of 0.0 expresses certainty that the subject event will not occur within the finite interval.

Risk

The potential for loss, best expressed as the answer to four questions:

· What could happen? (What is the threat?)
· How bad could it be? (What is the consequence?)
· How often might it happen? (What is the frequency?)
· How certain are the answers to the first three questions? (What is the degree of confidence?)

The key element among these is the issue of uncertainty captured in the fourth question. If there is no uncertainty, there is no risk, per se.
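
The distinction drawn in the definitions above between ARO (frequency) and probability can be made concrete under one common modeling assumption that the article does not itself state: that threat events arrive independently at a constant average rate (a Poisson process). Under that assumption, the probability of at least one event in a year is 1 - exp(-ARO). The sketch below, with the hypothetical function name `probability_of_at_least_one`, lets the two metrics be compared across a range of frequencies.

```python
import math


def probability_of_at_least_one(aro: float) -> float:
    """Probability of one or more events in a year, ASSUMING Poisson arrivals.

    The Poisson model is an assumption made for this sketch; the article
    only states that frequency and probability converge at low values.
    """
    if aro < 0:
        raise ValueError("ARO must be non-negative")
    # -expm1(-x) equals 1 - exp(-x) but keeps precision for small x.
    return -math.expm1(-aro)


if __name__ == "__main__":
    for aro in (0.001, 0.01, 0.1, 1.0, 50.0):
        p = probability_of_at_least_one(aro)
        print(f"ARO={aro:<7} probability={p:.6f}")
```

Under this assumed model, the probability stays close to the ARO when the ARO is small and can never exceed 1.0, while the ARO itself has no upper bound.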

Risk Analysis

This term represents the process of analyzing a target environment and the relationships of its risk-related attributes. The analysis should identify threat vulnerabilities, associate these vulnerabilities with affected assets, identify the potential for and nature of an undesirable result, and identify and evaluate risk-reducing countermeasures.

Risk Assessment

This term represents the assignment of value to assets, consequence (i.e., exposure factor), and other elements of chance. The reported results of risk analysis can be said to provide an assessment or measurement of risk, regardless of the degree to which quantitative techniques are applied. For consistency in this article, the term risk assessment is used to characterize both the process and the result of assessing and analyzing risk.

Risk Management

This term characterizes the overall process. The first phase, risk assessment, includes identifying risks, risk-reducing measures, and the budgetary impact of implementing decisions related to the acceptance, avoidance, or transfer of risk. The second phase includes the process of assigning priority to, budgeting, implementing, and maintaining appropriate risk-reducing measures. Risk management is a continuous or periodic process of risk assessment, analysis, and the resulting management or administrative risk reduction.

Safeguard

This term represents a risk-reducing measure that acts to detect, prevent, or mitigate against loss associated with the occurrence of a specified threat or category of threats. Safeguards are also often described as controls or countermeasures.

Safeguard Effectiveness

This term represents the degree, expressed as a percent, from 0% to 100%, to which a safeguard may be characterized as effectively mitigating a vulnerability (defined below) and reducing associated loss risks.

Single Loss Expectancy or Exposure (SLE)

This value is derived from the following algorithm to determine the monetary loss (impact) for each occurrence of a threatened event:

ASSET VALUE x EXPOSURE FACTOR = SINGLE LOSS EXPECTANCY

The single loss expectancy is usually an end result of a business impact analysis. A business impact analysis typically stops short of evaluating the related threat's ARO or its significance. The single loss expectancy represents only one element of risk, the expected impact (monetary or otherwise) of a specific threat event. Because the business impact analysis usually characterizes the massive losses resulting from a catastrophic event, however improbable, it is often unreasonably employed as a scare tactic to get management attention and loosen budgetary constraints.

Threat

This defines an undesirable event that could occur (e.g., a tornado, theft, or computer virus infection).

Uncertainty

This term characterizes the degree to which there is less than complete confidence in the value of any element of the risk assessment. Uncertainty is typically measured inversely with respect to confidence, from 0.0% to 100% (i.e., if uncertainty is low, confidence is high).

Vulnerability

This term characterizes the absence or weakness of a risk-reducing control or safeguard. It is a condition that has the potential to allow a threat to occur with greater frequency, greater impact, or both. For example, not having a fire suppression system could allow an otherwise minor, easily quenched fire to become a catastrophic fire. Both expected frequency and exposure factor for fire are increased as a consequence of not having a fire suppression system.

Central Tasks of Risk Management

The following sections describe the tasks central to the comprehensive information risk management process.
These tasks provide concerned management with the identification and assessment of risk as well as cost-justified recommendations for risk reduction, thus allowing the execution of well-informed management decisions on whether to avoid, accept, or transfer risk cost-effectively. The degree of quantitative orientation determines how the results are characterized and, to some extent, how they are used.

Project Sizing

This task includes the identification of background, scope, constraints, objectives, responsibilities, approach, and management support. Clear project-sizing statements are essential to a well-defined and well-executed risk assessment project. It should also be noted that a clear articulation of project constraints (i.e., what is not included in the project) is very important to the success of a risk assessment.

Asset Identification and Valuation

This task includes the identification of assets, (usually) their replacement costs, and the further valuing of information asset availability, integrity, and confidentiality. These values may be expressed in monetary or nonmonetary terms. This task is analogous to a business impact analysis in that it identifies what assets are at risk and their value.

Threat Analysis

This task includes the identification of threats that may affect the target environment. (This task may be integrated with the next task, vulnerability analysis.)

Vulnerability Analysis

This task includes the identification of vulnerabilities that could increase the chance or degree of impact of a threat event affecting the target environment.

Risk Evaluation

This task includes the evaluation of information regarding threats, vulnerabilities, assets, and asset values in order to measure the associated chance of loss and the expected magnitude of loss for each of an array of threats that could occur. Results are usually expressed in monetary terms on an annualized basis or graphically as a probabilistic risk curve.
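
A minimal sketch of the risk evaluation arithmetic, combining the SLE and ALE relations defined earlier, might look like the following. The `Threat` class, its field names, the `evaluate` function, and the sample figures are hypothetical and chosen only for illustration; a real assessment would also carry a measure of uncertainty for each input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    """One row of a simple risk evaluation (all names are illustrative)."""
    name: str
    asset_value: float      # monetary value of the affected asset
    exposure_factor: float  # fraction of asset value lost per event, 0.0 to 1.0
    aro: float              # annualized rate of occurrence, events per year

    @property
    def sle(self) -> float:
        # Single loss expectancy = asset value x exposure factor
        return self.asset_value * self.exposure_factor

    @property
    def ale(self) -> float:
        # Annualized loss expectancy = SLE x ARO
        return self.sle * self.aro


def evaluate(threats: list[Threat]) -> list[Threat]:
    """Validate inputs and return threats ordered from highest to lowest ALE."""
    for t in threats:
        if not 0.0 <= t.exposure_factor <= 1.0:
            raise ValueError(f"exposure factor out of range for {t.name}")
        if t.aro < 0:
            raise ValueError(f"ARO must be non-negative for {t.name}")
    return sorted(threats, key=lambda t: t.ale, reverse=True)


if __name__ == "__main__":
    # Hypothetical inputs, not data from any real assessment.
    sample = [
        Threat("fire", 1_000_000, 0.5, 0.1),
        Threat("power outage", 200_000, 0.05, 2.0),
    ]
    for t in evaluate(sample):
        print(f"{t.name:<14} SLE={t.sle:>12,.2f}  ALE={t.ale:>12,.2f}")
```

Ranking by ALE alone is a deliberate simplification. SLE and ARO are kept visible on each row because equal ALE values can mask very different threats.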

Safeguard Selection and Risk Mitigation Analysis

This task includes the evaluation of risk to identify risk-reducing safeguards and to determine the degree to which selected safeguards can be expected to reduce threat frequency or impact.

Cost/Benefit Analysis

This task includes the valuation of the degree of risk reduction that is expected to be achieved by implementing the selected risk-reducing measures. The gross benefit, less the annualized cost to achieve a reduced level of risk, yields the net benefit. Tools such as present value and return on investment are often applied to further analyze safeguard cost-effectiveness.

Interim Reports and Recommendations

These key reports are often issued during this process to document significant activity, decisions, and agreements related to the project:

· Project Sizing. This report presents the results of the project sizing task. The report is issued to senior management for their review and concurrence. This report, when accepted, assures that all parties understand and concur in the nature of the project.
· Asset Valuation. This report details and summarizes the results of the asset valuation task, as appropriate. It is issued to senior management for their review and concurrence. Such review helps prevent conflict about value later in the process. This report often provides management with their first insight into the often extraordinary value of the availability, confidentiality, or integrity of their information assets.
· Risk Evaluation. This report presents management with a documented assessment of risk in the current environment. Management may choose to accept that level of risk (a legitimate management decision) with no further action or to proceed with a risk mitigation analysis.
· Final Report. This report includes the interim reports as well as details and recommendations from the safeguard selection, risk mitigation, and supporting cost/benefit analysis tasks.
This report, with approved recommendations, provides responsible management with a sound basis for subsequent risk management action and administration.

There are numerous variations on this risk management process, based on the degree to which the technique applied is quantitative and how thoroughly all steps are executed. For example, the asset identification and valuation analysis could be performed independently as a business impact analysis; the vulnerability analysis could also be executed independently.

It is commonly but incorrectly assumed that information risk management is concerned only with catastrophic threats and that it is useful only to support contingency planning. A well-conceived and well-executed risk assessment can effectively identify and quantify the consequences of a wide array of threats that can and do occur, often with significant frequency, as a result of ineffectively implemented or nonexistent controls.

A well-run integrated information risk management program can help management to significantly improve the cost-effective performance of its information systems environment and to ensure cost-effective compliance with regulatory requirements. The integrated risk management concept recognizes that many often uncoordinated units within an organization play an active role in managing the risks associated with the failure to assure the confidentiality, availability, and integrity of information.

A Review of the History of Risk Assessment

To better understand the current issues and new directions in risk assessment and management, it is useful to review the history of their development. The following sections present a brief overview of key issues and developments, from the 1970s to the present.

Sponsorship, Research, and Development During the 1970s

In the early 1970s, the National Bureau of Standards (now the National Institute of Standards and Technology [NIST]) perceived the need for a risk-based approach to managing information security. The Guideline for Automatic Data Processing Physical Security and Risk Management (FIPSPUB 31, June 1974), though touching only superficially on the concept of risk assessment, recommends that the development of the security program begin with a risk analysis. Recognizing the need to develop a more fully articulated risk assessment methodology, NIST engaged Robert Courtney and Susan Reed to develop the Guideline for Automatic Data Processing Risk Analysis (FIPSPUB 65, August 1979). In July 1978, the Office of Management and Budget developed OMB A-71, a regulation that established the first requirement for periodic execution of quantitative risk analysis in all government computer installations and other computer installations run on behalf of the government.

As use of FIPSPUB 65 expanded, certain difficulties became apparent. Chief among these were the lack of valid data on threat frequency and the lack of a standard for valuing information. A basic assumption of quantitative approaches to risk management is that objective and reasonably credible quantitative data is available or can be extrapolated. Conversely, a basic assumption of qualitative approaches to risk management is that reasonably credible numbers are not available or are too costly to develop. In other words, to proponents of qualitative approaches, the value of assets (particularly the availability of information, expressed as a function of the cost of its unavailability) was considered difficult if not impossible to express in monetary terms with confidence. They further believed that statistical data regarding threat frequency was unreliable or nonexistent.
Despite the problems, the underlying methodology was sufficiently well-founded for FIPSPUB 65 to become the de facto standard for risk assessment.

Also in the early 1970s, the US Department of Energy initiated a study of risk in the nuclear energy industry. The standard of technology for nuclear engineering risk assessment had to meet rigorous requirements for building credible risk models based in part on expert opinion. This was necessary due to the lack of experience with nuclear threats. Another technique, decomposing complex safeguard systems into simpler elements about which failure rates and other known or knowable statistical data could be accumulated, was enhanced and applied to assess risk in these complex systems. The resulting document, the Nuclear Regulatory Commission Report Reactor Risk Safety Study (commonly referred to as the WASH 1400 report), was released in October 1975. The rigorous standards and technical correctness of the WASH 1400 report found their way into the more advanced integrated risk management technology as the need for a technically sound approach became more apparent.

Changing Priorities During the 1980s

During the 1980s, development of quantitative techniques was impeded by a shift in federal government policy regarding the use of quantitative risk assessment. On December 12, 1985, OMB A-130, Appendix III, which required a formal, fully quantified risk analysis only in the circumstance of a large-scale computer system, replaced OMB A-71, Appendix C, which required quantitative risk assessment for all systems, regardless of system size. For those whose pressing concern was to comply with the requirement (rather than to proactively or cost-effectively manage risk), this minor change in wording provided instant relief from what was perceived to be the difficult efforts necessary to perform quantitative risk assessments.

In an attempt to develop a definitive framework for information risk assessment that is technologically sound and meets the varying needs of a multitude of information processing installations, NIST established and sponsored the International Computer Security Risk Management Model Builders Workshops in the late 1980s. The results of this NIST-sponsored effort may help to provide a more comprehensive, technically credible federal guideline for information risk management. An early result of this working group's efforts was the recognition of the central role that uncertainty plays in risk assessment.

During the 1980s, implementation of risk assessment methodologies encountered major obstacles. Manually executed risk assessment projects could take from one work-month for a high-level, qualitative analysis to two or more work-years for a large-scale, in-depth quantitative effort. Recommendations resulting from such lengthy projects were often out of date, given changes that would occur in the information processing environment during the course of the project. Efforts often bogged down when attempts were made to identify asset values (especially the values of information assets) or to develop credible threat frequency data.

Another problem resulted from use of oversimplified risk assessment algorithms (based on FIPSPUB 65) that were incapable of discriminating between the risks of a low-frequency, high-impact threat (e.g., a fire) and a high-frequency, low-impact threat (e.g., misuse of resources). Exhibit 1 illustrates this problem. The resultant Annualized Loss Expectancy of $50,000 for each threat appears to indicate that the risks of fire and misuse of resources are the same. Managers refused to accept such an analysis. Intuitively they felt that fire is a more immediate and potentially more devastating threat.
Management, correctly, was willing to spend substantial sums to avoid or prevent a devastating fire, but they would spend only minor sums on programs (e.g., awareness programs) to prevent misuse of resources. This sort of anomaly seriously undermined the credibility of quantitative risk assessment approaches.

Exhibit 1. Simplified Algorithms Cannot Distinguish Between Threat

THREAT    ASSET      EXPOSURE    SINGLE LOSS    ANNUALIZED RATE    ANNUALIZED LOSS
ID        VALUE    x FACTOR    = EXPECTANCY   x OF OCCURRENCE    = EXPECTANCY
------------------------------------------------------------------------------------
FIRE      $1.0m    x 0.5       = $500,000     x 0.1              = $50,000
MISUSE
------------------------------------------------------------------------------------

In response to such concerns, some software packages for automated information risk management were developed during the 1980s. Several stand out for their unique advancement of important concepts.

RISKPAC, a qualitative risk assessment software package, did not attempt to fully quantify risks but supported an organized presentation of the user's subjective ranking of vulnerabilities, risks, and consequences. Although this package was well received, concerns persisted about the subjective nature of the analysis and the lack of quantitative results that could be used to justify anything other than the most coarse information security budget decisions.

In the mid-1980s, work began simultaneously on two risk assessment products that represented a technological breakthrough: the Bayesian Decision Support System (BDSS) and the Lawrence Livermore Laboratory Risk Analysis Methodology (LRAM). LRAM was later automated and became ALRAM. Both BDSS and ALRAM applied technically advanced risk assessment algorithms from the nuclear industry environment to information security, replacing the primitive algorithms of FIPSPUB 65.
Although both packages use technically sound algorithms and relational database structures, there are major differences between them. BDSS is an expert system that provides the user with a comprehensive array of knowledge bases that address vulnerabilities and safeguards as well as threat exposure factor and frequency data. All are fully mapped and cross-mapped for algorithmic risk modeling and natural language interface and presentation. ALRAM requires significant expertise to build and map knowledge bases and then to conduct a customized risk assessment.

The Buddy System, an automated qualitative software package, can be used to determine the degree to which an organization is in compliance with an array of regulatory information security requirements. This package has been well received in government organizations.

Several other risk management packages were introduced during the 1980s, though many have not survived. A list of risk management software packages is provided in Exhibit 2.

Exhibit 2. Risk Management Software Packages

@RISK. Palisade Corp, Newfield NY. (607) 277-8000.
Bayesian Decision Support System. Ozier, Peterse & Associates, San Francisco CA. (415) 989-9092.
Control Matrix Methodology for Microcomputers. Jerry FitzGerald & Associates, Redwood City CA. (415) 591-5676.
Expert Auditor. Boden Associates, East Williston NY. (516) 294-2648.
LAVA. Los Alamos National Laboratory, Los Alamos NM. (505) 667-7777.
MARION. Coopers & Lybrand, London, England. 44-71-583-5000.
Micro Secure Self Assessment. Boden Associates, East Williston NY. (516) 294-2448.
Predictor System. Concorde Group International, Westport CT. (203) 227-4539.
RANK-IT. Jerry FitzGerald & Associates, Redwood City CA. (415) 591-5676.
RISKPAC. Profile Analysis Corp, Ridgefield CT. (203) 431-8720.
RISKWATCH. Expert Systems Software Inc, Long Beach CA. (213) 499-3347.
The Buddy System Risk Analysis and Management System for Microcomputers. Countermeasures Inc, Hollywood MD. (301) 373-5166.

Risk management software can improve work efficiency substantially and can help focus the risk analyst's attention on the most significant risk factors. Even relatively primitive risk assessment software can reduce the work effort by 20% to 30%; some knowledge-based packages have demonstrated work reductions of 80% to 90%. Knowledge-based packages are particularly effective in helping analysts avoid superfluous information gathering and analysis of insignificant information.

Recommended Course of Action

Although the history of information risk assessment has been troubled, continuing research and development has succeeded in solving key problems and creating a powerful new generation of risk management tools. The second article in this series will explore these new developments and will also present a program that security practitioners can follow when assisting in implementing a risk management program.

Bibliography

Comptroller of the Currency, Administrator of National Banks. “End-User Computing.” Banking Circular-226 (January 1988).
Comptroller of the Currency, Administrator of National Banks. “Information Security.” Banking Circular-229 (May 1988).
Federal Deposit Insurance Corp. “Federal Financial Institutions Examination Council Supervisory Policy on Contingency Planning.” Report BL-22-88 (July 1990).
Guarro, S.B. “Risk Analysis and Risk Management Models for Information Systems Security Applications.” Reliability Engineering and System Safety (1989).
Mohr, J. “Fighting Computer Crime with Software Risk Analysis.” Journal of Information Systems Management (vol 1, no 2 1984).
National Institute of Standards and Technology. “Guidelines for Automatic Data Processing Physical Security and Risk Management.” FIPSPUB 31 (June 1974).
National Institute of Standards and Technology. “Guidelines for Automatic Data Processing Risk Analysis.” FIPSPUB 65 (August 1979).
National Institute of Standards and Technology.
“Guidelines for Security of Computer Applications.” FIPSPUB 73 (June 1980).
Ozier, W. “Disaster Recovery and Risk Avoidance/Acceptance.” Data Processing & Communications Security (vol 14, no 1 1990).
Ozier, W. “Risk Quantification Problems and Bayesian Decision Support System Solutions.” Information Age (vol 11, no 4 1989).
US Senate and House of Representatives. “Computer Security Act of 1987.” Public Law 100-235 (January 1988).

Author Biographies

Will Ozier

Will Ozier is president and founder of the information security firm of Ozier, Peterse & Associates (USA), San Francisco. He is an expert in risk assessment and contingency planning, with broad experience in consulting for many Fortune 500 companies as well as the federal government. He is a member of the Computer Security Institute, the Information Systems Security Association, and the EDP Auditors Association, and he has chaired the ISSA Information Valuation Committee, which devised standards for valuing information. He currently chairs the ISSA Committee to Develop Generally Accepted System Security Principles, as recommended in the National Research Council's Computers at Risk report.