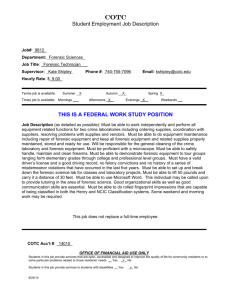

Assignment Cover Sheet – External


UNIVERSITY OF SOUTH AUSTRALIA


An Assignment cover sheet needs to be included with each assignment. Please complete all details clearly.

Please check your Course Information Booklet or contact your School Office for assignment submission locations.

ADDRESS DETAILS:

Full name:

Daniel John Walton

Address:

Unit 2, 11-15 Rutland St, Allawah, NSW 2218, Australia

Postcode:

2218

If you are submitting the assignment on paper, please staple this sheet to the front of each assignment. If you are submitting the assignment online, please ensure this cover sheet is included at the start of your document. (This is preferable to a separate attachment.)

Student ID

110071749

Email: waldj007@mymail.unisa.edu.au

Course code and title: Masters Computing Minor Thesis 2 (COMP 5003)

School: UNIVERSITY OF SOUTH AUSTRALIA

Program Code: COMP 5003

Course Coordinator: Dr Elena Sitnikova

Tutor: Dr Elena Sitnikova

Assignment number: Thesis Proposal

Due date: 2013

Assignment topic as stated in Course Information Booklet:

Further Information: (e.g. state if extension was granted and attach evidence of approval, Revised Submission Date)

I declare that the work contained in this assignment is my own, except where acknowledgment of sources is made.

I authorise the University to test any work submitted by me, using text comparison software, for instances of plagiarism. I understand this will involve the University or its contractor copying my work and storing it on a database to be used in future to test work submitted by others.

I understand that I can obtain further information on this matter at http://www.unisa.edu.au/learningadvice/integrity/default.asp

Note: The attachment of this statement on any electronically submitted assignments will be deemed to have the same authority as a signed statement.

Signed: Daniel John Walton

Date received from student:

Recorded:

Date: 24/06/2013

Assessment/grade

Assessed by:

Dispatched (if applicable):

By

Daniel Walton

Student Number:

110071749

Thesis Proposal

for the University of South Australia

for the course: Masters Computing Minor Thesis 2 (COMP 5003)

Building F, Mawson Lakes Campus

University of South Australia

Mawson Lakes, South Australia 5095


TABLE OF CONTENTS

SECTION 1 - STATEMENT OF THE TOPIC AND RATIONALE FOR THE RESEARCH

Research Topic

Research Problem

Sub-Problems

EXPLANATION OF THE SUB-PROBLEMS

RESEARCH THESIS TITLE

Background

Background and Motivation

Forensic Readiness

Forensic evidence acquisition

Forensic Analysis

Automated analysis

Significance of the Problem

SECTION 2 – RESEARCH METHODOLOGY

SECTION 3 - TRIAL TABLE OF CONTENTS

SECTION 4 – LITERATURE REVIEW

Automating processing of evidence

Computer Profiling

Timeline analysis

Analysis

Proposal

SECTION 5 – EXAMINATION CRITERIA FULFILMENT

SECTION 6 – REFERENCES

Title

Student

Student ID

Supervisor


SECTION 1 - STATEMENT OF THE TOPIC AND RATIONALE FOR THE RESEARCH

RESEARCH TOPIC

Research Problem

How can automation be used to improve the Digital Forensic analysis of computer evidence?

Sub-Problems

1. What existing tools are there for extracting relevant information from evidence, and what is the quality of the information they extract?

2. What solutions are there for parsing the many undocumented file and metadata formats, including those yet to be discovered and documented, that could contain information of interest?

3. How can low false-positive and false-negative rates be ensured while keeping a high detection rate for relevant information?

4. How would a tool be implemented to validate the proposed automatic analysis method?

EXPLANATION OF THE SUB-PROBLEMS

There are many different formats for storing data and metadata on computers, which compounds the analysis problem (Brownstone 2004). Many of these formats are undocumented or proprietary, which further complicates analysis (Kent, Chevalier & Grance 2006). For example, in an intellectual property theft case the investigator would want, at a minimum, to parse the registry (Accessdata 2005; Wong 2007) and the setupapi.dev.log/setupapi.log files to obtain the USB removable device history; parse all link files (.LNK files) to identify files opened from removable devices; parse the Internet Explorer history for local file access to obtain further detail about access to removable devices; and parse the NTFS USN Journal (Carrier 2005) to check whether files were renamed before being copied to removable devices (this reveals whether relevant files were renamed to innocuous-looking file names). Parsing all these different areas and combining the information to work out what happened is quite time consuming.
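To make the USN Journal step concrete, the following Python sketch pairs rename records for the same file so that an original name and a later innocuous name can be shown side by side. It assumes the journal has already been parsed into records: the UsnRecord class and find_renames function are hypothetical and not part of any existing tool, while the two reason flag values are those Windows defines for the change journal. A real journal contains many other record types that a full parser would also need to handle.

```python
from dataclasses import dataclass

# Reason flags defined by Windows for NTFS USN change journal records.
USN_REASON_RENAME_OLD_NAME = 0x00001000
USN_REASON_RENAME_NEW_NAME = 0x00002000


@dataclass
class UsnRecord:
    # Hypothetical, already-parsed view of one USN journal record.
    usn: int             # update sequence number (increases over time)
    file_reference: int  # MFT file reference number of the changed file
    file_name: str
    reason: int          # bitmask of USN_REASON_* flags


def find_renames(records):
    """Pair RENAME_OLD_NAME and RENAME_NEW_NAME records for the same file.

    Returns (old_name, new_name) tuples in journal order.
    """
    pending = {}  # file_reference -> old name waiting for its new-name record
    renames = []
    for rec in sorted(records, key=lambda r: r.usn):
        if rec.reason & USN_REASON_RENAME_OLD_NAME:
            pending[rec.file_reference] = rec.file_name
        elif rec.reason & USN_REASON_RENAME_NEW_NAME:
            old = pending.pop(rec.file_reference, None)
            if old is not None:  # later records repeating the flag are ignored
                renames.append((old, rec.file_name))
    return renames
```

Because reason flags accumulate until a file is closed, the sketch ignores any further NEW_NAME records once a pair has been matched.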

As many of these files and metadata structures are undocumented, the ability to extract useful information from them often depends on individual analysts researching the internal formats and writing software to extract useful data. For example, in 2006 Didier Stevens released his UserAssist tool to parse the UserAssist entries in the Windows registry (Stevens 2006). He later discovered that the format had changed for Windows 7 and Windows Server 2008 R2, and released a newer version of the tool that supports extracting these values from the newer versions of Windows (Stevens 2010); in 2012 he released an updated version with beta support for Windows 8 (Stevens 2012). As new files and metadata useful for forensic analysis continue to be discovered, there is a concern that investigators who do not keep up to date with which metadata can be extracted, and with new tools for extracting it, may perform investigations in which less information is extracted. This lowers the standard of care and could result in innocent people being incarcerated or guilty people going free because of a lack of detail in an investigation.
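The UserAssist case shows why parsers must track format changes. The sketch below assumes the registry value name and data have already been read from the hive; it decodes the ROT13-encoded value name and chooses a data layout by size. The offsets and the XP run-counter adjustment follow commonly published descriptions of the format and are assumptions to validate against test data; the function names are hypothetical.

```python
import codecs
import struct
from datetime import datetime, timedelta, timezone

# FILETIME counts 100-nanosecond intervals since 1601-01-01 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(filetime):
    """Convert a Windows FILETIME integer to an aware UTC datetime."""
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def decode_value_name(name):
    """UserAssist value names are stored ROT13-encoded."""
    return codecs.decode(name, "rot13")


def parse_userassist_data(data):
    """Pick a layout by data size, since the format changed in Windows 7.

    Assumed layouts: 16-byte (XP era) entries hold the run count at offset 4
    and the last-run FILETIME at offset 8; 72-byte (Windows 7 era) entries
    hold the run count at offset 4 and the last-run FILETIME at offset 60.
    """
    if len(data) == 16:
        (run_count,) = struct.unpack_from("<I", data, 4)
        (last_run,) = struct.unpack_from("<Q", data, 8)
        run_count = max(run_count - 5, 0)  # assumed: XP counter starts at 5
    elif len(data) == 72:
        (run_count,) = struct.unpack_from("<I", data, 4)
        (last_run,) = struct.unpack_from("<Q", data, 60)
    else:
        raise ValueError(f"unrecognised UserAssist data size: {len(data)}")
    return {
        "run_count": run_count,
        "last_run": filetime_to_datetime(last_run) if last_run else None,
    }
```

Adding support for a future Windows release would then mean adding one branch, rather than rewriting the tool.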

Regarding automatic analysis, current forensic tools cannot really analyse evidence and give the investigator a report of discovered suspicious activity together with a profile of activity on the computer. What we have instead are tools that can view many different file types, parse and extract data from many different files, help exclude known irrelevant files and detect known relevant files, analyse email, and index and search the evidence. There are many impressive tools available, yet their abilities can mostly be summed up as assisting the investigator to analyse the evidence, rather than analysing the evidence themselves and providing the investigator with results to check.

The best we can currently hope for is better assistance to the investigator with analysis, rather than better analysis. The investigator still needs to combine the different sources of data and perform the analysis themselves to discover what actually happened.

The detection rate of an automated analysis system is important, as investigators do not want to be flooded with irrelevant data. Consider an email spam filter: with a high false-negative rate, spam is not removed and the user receives too much spam; with a high false-positive rate, many legitimate emails are filtered out as spam, which is also unwanted. What is wanted is a high detection rate (or high signal-to-noise ratio) for items of interest, with unwanted items removed.
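This trade-off can be quantified with the standard confusion-matrix rates. The minimal sketch below assumes that "positive" means "flagged as relevant" (for a spam filter, "flagged as spam"); the function name is illustrative only.

```python
def detection_metrics(tp, fp, tn, fn):
    """Rates for a binary detector where 'positive' means 'flagged as relevant'.

    tp: relevant items flagged          fp: irrelevant items flagged
    tn: irrelevant items not flagged    fn: relevant items missed
    """
    return {
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # the detection rate
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # share of alerts that matter
    }
```

With 40 relevant items found, 10 missed, 10 false alerts and 940 correct rejections, recall and precision are both 0.8. Precision matters because irrelevant items vastly outnumber relevant ones in evidence: even a 1% false-positive rate over a million irrelevant files would produce about 10,000 false alerts.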

To analyse evidence automatically, software would need to be able to read all the parts of the evidence relevant to the analysis being performed. For intellectual property theft, for example, detecting the use of a USB flash drive to copy files off a computer requires information from the filesystem, link files and the registry to be used together to link related activity. Multiple sources of data need to be combined to detect such activity. This is challenging, as information in different formats needs to be combined in a way that keeps its content readable without conflicts or loss of detail.
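One way to combine sources without conflicts or loss of detail is to map every artefact onto a small common record (time, source, description) while keeping its source-specific fields untouched alongside. The sketch below is an assumption about how such a record might look, not a description of an existing format; the Event class and merge_timelines function are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Event:
    timestamp: datetime
    source: str       # e.g. "registry", "lnk", "filesystem"
    description: str
    # Source-specific fields are kept as-is so no detail is lost.
    extra: dict = field(default_factory=dict)


def merge_timelines(*sources):
    """Merge per-source event lists into one list ordered by time.

    Events are never merged into one another; ties are broken by source
    name so the output order is deterministic.
    """
    merged = [event for events in sources for event in events]
    return sorted(merged, key=lambda e: (e.timestamp, e.source))
```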

RESEARCH THESIS TITLE

The proposed research thesis title is ‘Developing a rule based tool for the automated analysis of Digital Forensic evidence’.

BACKGROUND

Background and Motivation

Digital forensic investigators are swimming in a sea of data: storage capacity keeps increasing, as does the amount of data people generate. Meanwhile, the ability of digital forensic tools to deal with all this data is not increasing at the same rate. Data reduction is done by removing known files by their cryptographic hash (a practically unique fingerprint of a file's content), by restricting analysis to date ranges, or by finding files that contain specific keywords. There is currently no solution that will examine and analyse the evidence and then provide investigators with a profile of the computer, including an overview of activity and areas of interest such as suspicious activity.
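The three data reduction techniques above can be chained, as in this hedged Python sketch. The tuple layout of the file list, the known-hash set and the function names are hypothetical; a real tool would read files from a mounted image and use a published hash set such as the NIST NSRL rather than an in-memory set, and real keyword searches must also handle text encodings that a plain UTF-8 decode misses.

```python
import hashlib
from datetime import datetime


def md5_of(data):
    return hashlib.md5(data).hexdigest()


def reduce_files(files, known_irrelevant, start, end, keywords):
    """Apply hash, date-range and keyword filters in turn.

    files: iterable of (path, content_bytes, modified_datetime) tuples.
    Returns the paths that survive every filter.
    """
    lowered = [k.lower() for k in keywords]
    kept = []
    for path, content, modified in files:
        if md5_of(content) in known_irrelevant:
            continue  # known file, e.g. an operating system component
        if not (start <= modified <= end):
            continue  # outside the period of interest
        text = content.decode("utf-8", errors="ignore").lower()
        if lowered and not any(k in text for k in lowered):
            continue  # no keyword hit
        kept.append(path)
    return kept
```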

The motivation for this paper is to find out what currently exists in this area and what research has been done, to identify research areas of interest, and to investigate the viability of creating a tool to automatically analyse evidence.

Forensic Readiness

Forensic investigations are usually started when someone suspects that something suspicious has occurred, or to confirm that nothing untoward has happened; forensic analysis is then initiated to find out exactly what happened. The suspicious activity (or lack of activity) is either noticed manually by a person, who then initiates procedures to have it investigated, or detected automatically by a specialised computer monitoring system, which sends an alert so that a decision can be made about whether forensic analysis is required. If it is required, the evidence is acquired and analysis begins.

There are many different types of anomaly detection systems and methods for computers. Anti-virus software is used on most computers to detect and clean viruses (and other forms of malware, including worms, Trojan horses and spyware). An Intrusion Detection System (IDS) monitors network traffic and raises alerts when suspicious traffic is detected. Security Information and Event Management (SIEM) systems monitor the logs generated by computers (mostly servers), IDSs and network devices such as firewalls, routers and switches for suspicious activity. Anti-spam software is used to detect unsolicited commercial email, otherwise known as spam. All of these systems have methods for discerning legitimate content and behaviour from abnormal content or behaviour.

Forensic readiness is a model for the early detection and collection of evidence relating to suspicious activity (Tan 2001). When configured correctly, SIEM systems provide forensic readiness for the ICT infrastructure they monitor, especially through rule-based event detection and secure logging. They work by collecting logs from all servers and network infrastructure (switches, routers, IDSs), and sometimes also from workstations. As these logs come from different places they are in different formats: Windows event logs from Windows, syslog from Unix/Linux and, more commonly, various text-based delimited logs. The different formats are converted so that they can be combined into one aggregated log, which is then automatically examined for suspicious activity. Forensic readiness helps detect incidents that will need investigation by computer forensic analysis.
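The conversion step can be illustrated with a short Python sketch that turns a classic BSD-style syslog line and a delimited text log into records sharing timestamp and source fields, then merges them. The field names, function names and sample formats are assumptions for illustration; production SIEM parsers handle many more variants (for example RFC 5424 syslog and time zones).

```python
import csv
import io
import re
from datetime import datetime

# Classic BSD-style syslog line: "Jun 24 10:15:32 host process[pid]: message"
SYSLOG_RE = re.compile(
    r"^(?P<ts>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) (?P<host>\S+) "
    r"(?P<proc>[^:\[]+)(?:\[\d+\])?: (?P<msg>.*)$"
)


def parse_syslog(line, year):
    """Parse one syslog line; BSD timestamps omit the year, so it is supplied."""
    m = SYSLOG_RE.match(line)
    if m is None:
        return None
    ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
    return {"timestamp": ts, "source": "syslog", "host": m["host"],
            "event": m["proc"], "message": m["msg"]}


def parse_delimited(text, timestamp_field, fmt, source):
    """Parse a delimited log with a header row, e.g. a firewall CSV export."""
    events = []
    for row in csv.DictReader(io.StringIO(text)):
        ts = datetime.strptime(row.pop(timestamp_field), fmt)
        events.append({"timestamp": ts, "source": source, **row})
    return events


def aggregate(*event_lists):
    """Combine converted records into one log ordered by time."""
    return sorted((e for lst in event_lists for e in lst), key=lambda e: e["timestamp"])
```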

Forensic evidence acquisition

Once an event is detected, evidence must be acquired in a form suitable for analysis. Acquired evidence is a snapshot of the system at the point of acquisition (Rider, Mead & Lyle 2010). This could be a copy of a mobile phone, memory from a computer, or storage such as hard disk drives (HDDs) and USB flash drives (Sutherland et al. 2008). The evidence is acquired in a way that allows it to be verified afterwards, to confirm it has not been modified since the initial acquisition (NIST 2004). This is done with a cryptographic hash, usually MD5 or SHA-1.

Once the evidence has been acquired, a copy can be made of it: the master copy is stored securely and the working copy is used for analysis.
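Verification of the working copy can be sketched in a few lines of Python using the standard hashlib module. Both digests are computed in a single chunked pass so that large images need not fit in memory, and the result is compared with the values recorded at acquisition. The function names and the dictionary layout of the recorded hashes are assumptions.

```python
import hashlib


def hash_chunks(chunks):
    """Compute MD5 and SHA-1 over an iterable of byte chunks in one pass."""
    md5, sha1 = hashlib.md5(), hashlib.sha1()
    for chunk in chunks:
        md5.update(chunk)
        sha1.update(chunk)
    return {"md5": md5.hexdigest(), "sha1": sha1.hexdigest()}


def hash_file(path, chunk_size=1 << 20):
    """Hash an evidence file, reading 1 MiB at a time."""
    with open(path, "rb") as f:
        return hash_chunks(iter(lambda: f.read(chunk_size), b""))


def verify(path, recorded):
    """True if the working copy still matches the hashes recorded at acquisition."""
    return hash_file(path) == recorded
```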

Forensic Analysis

Analysis is always performed on the acquired evidence files. As the evidence is a static resource, it is easy to try different analysis methods, and there is time to compare tools to make sure the analysis results are repeatable. Most forensic analysis is performed manually by investigators using one of the three main forensic tools: Guidance Software's EnCase, AccessData's Forensic Toolkit (FTK) and X-Ways' WinHex. These tools have many automated features for processing evidence and extracting data of interest, but the actual analysis still has to be done by the investigator.

The automated features provided by these tools are very useful for removing irrelevant data, opening and viewing many different file types, viewing the contents of container files (such as .7z, .zip and .rar), parsing operating system files for metadata (e.g. .EVT, .EVTX and .LNK files and Internet history), recovering deleted files, data carving and searching for files (both raw and indexed searches). All these features help the investigator with the forensic analysis of evidence, yet none of them actually performs automated analysis of the evidence.

For example, to detect IP theft (Malik & Criminology 2008) the investigator will analyse the USB device history, .LNK files for removable device access, Internet history, shellbags and sequential access times of files, and correlate the results to find whether there are signs that data was copied to a USB flash drive. This is quite a manual process, yet it could be possible to automate it.
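The correlation step for IP theft can be expressed as a simple rule. In this Python sketch, in which all class and function names are hypothetical, a .LNK access is reported when it points to removable media whose volume serial number matches a USB device that was connected at that time. It assumes device connection periods and each device's volume serial number have already been recovered; in practice, linking a device to its volume serial requires additional artefacts.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class UsbConnection:
    # Hypothetical record built from setupapi logs and registry entries.
    serial: str         # device serial number
    volume_serial: str  # assumed already linked to the device
    connected: datetime
    disconnected: datetime


@dataclass
class LnkAccess:
    # Hypothetical record built from parsed .LNK files.
    target_path: str
    volume_serial: str
    drive_type: str     # e.g. "removable" or "fixed"
    accessed: datetime


def files_opened_from_usb(connections, lnk_accesses):
    """Report (device serial, target path, time) for removable-media accesses
    that fall within a matching device's connection period."""
    findings = []
    for lnk in lnk_accesses:
        if lnk.drive_type != "removable":
            continue
        for conn in connections:
            if (lnk.volume_serial == conn.volume_serial
                    and conn.connected <= lnk.accessed <= conn.disconnected):
                findings.append((conn.serial, lnk.target_path, lnk.accessed))
    return findings
```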

Automated analysis

The open source Intrusion Detection System (IDS) Snort uses a simple rule-based detection system to detect network packets of interest (Roesch 1999). The syntax of Snort's rule language is flexible enough that rules can be written to detect items of interest in protocols it was not originally designed for, e.g. SCADA Modbus communications over TCP/IP (Morris, Vaughn & Dandass 2012).
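To show what a single-packet rule of this kind checks, the sketch below approximates in Python the logic that a Snort rule for Modbus/TCP might express: destination port 502, a Modbus protocol identifier of zero in the MBAP header, and a write function code sent by a host that is not an authorised master. It is neither Snort rule syntax nor one of the rules from Morris, Vaughn & Dandass; the function name and the allow-list are hypothetical.

```python
import struct

MODBUS_PORT = 502
# Write Single Coil, Write Single Register, Write Multiple Coils, Write Multiple Registers
WRITE_FUNCTION_CODES = {0x05, 0x06, 0x0F, 0x10}


def modbus_write_alert(dst_port, payload, src_ip, allowed_masters):
    """Inspect one TCP payload in isolation and return an alert string or None."""
    if dst_port != MODBUS_PORT or len(payload) < 8:
        return None
    # MBAP header: transaction id, protocol id, length (2 bytes each), unit id (1 byte)
    _tid, proto_id, _length, _unit = struct.unpack_from(">HHHB", payload, 0)
    function_code = payload[7]  # first byte of the Modbus PDU
    if proto_id == 0 and function_code in WRITE_FUNCTION_CODES and src_ip not in allowed_masters:
        return f"Modbus write (function 0x{function_code:02X}) from unauthorised host {src_ip}"
    return None
```

As in Snort, each packet is judged on its own; nothing is remembered between calls.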

Snort's rule-based analysis is primarily packet by packet, and rules do not normally analyse more than one packet at a time. The network packets it analyses usually follow standardised and documented protocols, but the automated analysis of computer evidence involves much more variety. For a thorough analysis of evidence from a Windows computer we would at least need to examine the filesystem, event logs, the registry, parsed registry structures (e.g. shellbags, UserAssist and USB entries), the Internet history of all installed browsers and image EXIF metadata. These pieces of data are stored in different files and formats, so reading them all would be complicated: each format would need its own custom parser to extract intelligence, and the data from each file would then need to be combined.

As Snort's rule-based system lends itself to being adapted to monitor and analyse different protocols from the same packet dump, a similar rule-based system should also work over a simplified, standardised file format for recording metadata from evidence, provided that the format records the source of each entry.
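A minimal sketch of such a system is shown below in Python. Each record carries a source field, and each rule names the source it applies to plus the field values to match, so rules for different artefacts can share one record stream much as Snort rules share one packet stream. Rules are loaded from JSON text so that they could be shared between investigators. The rule format, field names and rule contents are assumptions for illustration; event ID 1102 is the Windows Security log event for clearing the audit log (Windows Vista and later; Windows XP logged this as event 517).

```python
import json

# Hypothetical shareable rule set.
RULES_JSON = """
[
  {"id": "usb-lnk-1", "message": "File opened from removable media",
   "source": "lnk", "match": {"drive_type": "removable"}},
  {"id": "evt-clear-1", "message": "Security event log cleared",
   "source": "evtx", "match": {"event_id": 1102}}
]
"""


def load_rules(text):
    return json.loads(text)


def apply_rules(rules, records):
    """Return (rule id, message, record) for every rule a record satisfies."""
    alerts = []
    for record in records:
        for rule in rules:
            if record.get("source") != rule["source"]:
                continue  # a rule only looks at the source it was written for
            if all(record.get(k) == v for k, v in rule["match"].items()):
                alerts.append((rule["id"], rule["message"], record))
    return alerts
```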

Despite all the forensic tools available today, we are still unable to find one that does more in the way of automated analysis than the 2007 versions of the same tools. Their functionality is mostly unchanged: they are largely scaled-up versions that deal with more data and parse more file types. Automated analysis has the potential to revolutionise digital forensics by building on existing tools and providing actual analysis of evidence. It would save the investigator from manually looking through evidence for areas of interest, as the results of automated analysis would immediately highlight those areas. The investigator could then spend more time validating the results of the automated analysis and collating findings, since the system would quickly direct them to areas of interest and save much labour. If the analysis system used rules, the rules could be shared, and investigators could write new rules to provide additional analysis features.

SIGNIFICANCE OF THE PROBLEM

Investigators currently spend a lot of time manually analysing evidence. In large cases with a great deal of data, it would make a big difference if analysis of the supplied evidence could be sped up so that investigators can quickly obtain a profile of the activity on each computer and easily find signs of suspicious activity. This would let them spend more time solving cases and less time extracting and analysing evidence.


Many police departments have backlogs of digital forensic analysis, and they would benefit from an automatic analysis system that analyses evidence and provides reports containing profiles of the computer and all its users, together with the results of automatic analysis showing what suspicious activity has occurred on the computer. As usual, investigators will need to back up any findings with proof, so the reports will include information explaining where each result came from. Hard disk drives are only getting bigger, so a way to quickly automate the analysis of evidence will be of great benefit to the digital forensic field.

Development of the log2timeline tool gave investigators a large jump in capability, as it enabled them to automatically extract file information and metadata where previously this was a manual task (Guðjónsson 2010). The plan for this thesis is to take the next big leap in forensic capability by building on the capabilities of log2timeline and adding automated analysis, so as to reduce the amount of analysis the investigator needs to perform.


SECTION 2 – RESEARCH METHODOLOGY

The research methodology will be similar to the Agile software development model, working iteratively through analysis, development, implementation and evaluation stages.

It will involve examining the data that the system records after certain suspicious actions are performed. Windows XP will be the operating system of choice, as it is very well understood by forensic tools and provides a wealth of data for parsing.

The methodology to address each of the sub-problems is proposed below.

Sub-Problem 1 – What existing tools are there for extracting relevant information from evidence, and what is the quality of the information they extract?

The log2timeline tool, the Plaso tool and various specialised tools for extracting file metadata will be compared. Plaso and log2timeline are not used in investigations as commonly as they could be. Integrating these tools with an automated analysis system will help reduce many of the issues that arise from using many different tools to extract metadata. The tools will be compared, and the one most suitable for integration with an automated analysis system will be used.

Sub-Problem 2 – What solutions are there for parsing the many undocumented file and metadata formats, including those yet to be discovered and documented, that could contain information of interest?

Microsoft and Apple will both continue to release new operating systems with new filesystems, file types and metadata to be extracted. Collaborating on the log2timeline and Plaso tools by adding support for new file types and metadata will help ensure that these can be parsed and understood by forensic tools. Forensic analysis systems would also need to be kept up to date, with new rules to deal with the new metadata.

Sub-Problem 3 – How can low false-positive and false-negative rates be ensured while keeping a high detection rate for relevant information?

Text-based analysis systems, rule-based analysis systems such as Snort, and statistical analysis systems will be researched and compared to discover which methods provide more reliable analysis, with higher detection rates and more reliable relevant data. Testing will determine which rules work better, and comparisons will identify the most reliable methods.

Sub-Problem 4 - How would a tool be implemented to validate the proposed automatic analysis method?

This will involve comparison with similar tools, such as Bayesian spam filters and primarily Snort and how its rule-based system works, as well as examining and comparing different analysis approaches, such as statistics, Markov chains, natural language processing and rule-based systems, and combinations of complementary features.
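For the comparison with Bayesian spam filters, a naive Bayes classifier can be applied to tokens extracted from evidence items instead of words from email. The Python sketch below is a textbook multinomial naive Bayes with add-one (Laplace) smoothing; the class name, labels and training tokens are hypothetical, and a real evaluation would train on labelled items from test images.

```python
import math
from collections import Counter


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of token frequencies
        self.doc_counts = Counter()  # label -> number of training items

    def train(self, tokens, label):
        self.word_counts.setdefault(label, Counter()).update(tokens)
        self.doc_counts[label] += 1

    def predict(self, tokens):
        vocab = set().union(*self.word_counts.values())
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Work in log space to avoid underflow from multiplying small probabilities.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(vocab)
            for tok in tokens:
                score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label
```

Unlike the rule-based sketch, the classifier learns from examples instead of explicit conditions, which is the trade-off Sub-Problems 3 and 4 propose to evaluate.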


SECTION 3 - TRIAL TABLE OF CONTENTS

LIST OF FIGURES ..... iv

LIST OF TABLES ..... v

GLOSSARY ..... vi

Abbreviations ..... vi

SUMMARY ..... viii

AUTHOR’S DECLARATION ..... ix

ACKNOWLEDGEMENTS ..... x

CHAPTER ONE - INTRODUCTION ..... 1

Background ..... 1

Research Issue ..... 1

Method ..... 1

CHAPTER TWO – Computer profiling ..... 2

Introduction ..... 2

Methods ..... 2

CHAPTER THREE – Log2timeline and Plaso ..... 4

Introduction ..... 4

Comparison ..... 5

CHAPTER FOUR – Analysis of different analysis systems ..... 6

Introduction ..... 6

Snort ..... 7

Markov chain analysis methods ..... 8

Further different analysis methods ..... 8

CHAPTER FIVE – Tests and implementations ..... 9

CHAPTER SIX - CONCLUSIONS AND FURTHER WORK ..... 9

REFERENCES ..... 10


SECTION 4 – LITERATURE REVIEW

The main aim of this research is to discover what systems exist for the automated analysis of computer evidence, and to research what is required to implement such a system.

An implemented analysis system would need to extract all the information relevant for analysis from the evidence, and provide analysts with the findings of its analysis.

Proposing an automatic evidence analysis system requires some compromises. Snort, an Intrusion Detection System (IDS) for network traffic, analyses network packets, which arrive serially. This makes analysis much simpler: every packet has an arrival time, and network traffic follows documented standards and protocols, which helps with analysis and with detecting anomalies. In the forensic analysis of computers, by contrast, data can be stored in many different places and in many different file formats, most of which are either not standardised or not open.

Automating processing of evidence

Richard and Roussev wrote a paper discussing ways of processing evidence that minimise the analysis load on investigators, motivated by the growth in the size of collected evidence (Golden G. Richard & Roussev 2006). They propose a distributed system that divides up the evidence so that multiple computers can process it. This is automated processing, not automated analysis, and it appears very similar to what the commercial product FTK does. They include many ways to help cull known irrelevant files, as well as ways to help reveal relevant data by extracting metadata and other additional information. This automated processing concept could be useful for automated analysis, as it is designed to scale processing across many systems and might help with large cases where resources are a concern.
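The divide-and-process idea can be sketched in Python by splitting an evidence image into fixed-size slices and hashing each slice in a worker pool. A local thread pool stands in here for the multiple computers of a distributed system; this is not Richard and Roussev's design, and the function names and chunk size are assumptions.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, chunk_size):
    """Divide the evidence into (offset, bytes) slices that can be processed independently."""
    return [(offset, data[offset:offset + chunk_size])
            for offset in range(0, len(data), chunk_size)]


def hash_chunk(item):
    offset, chunk = item
    return offset, hashlib.sha1(chunk).hexdigest()


def distributed_hash(data, chunk_size=4096, workers=4):
    """Hash every slice in parallel; returns {offset: sha1 hex digest}."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(hash_chunk, split_into_chunks(data, chunk_size)))
```

Because each slice is processed independently, the same split could be dispatched to other machines without changing the per-slice work.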

In his paper “A second generation computer forensic analysis system”, Ayers proposes a system for processing evidence that is very similar to the one proposed by Richard and Roussev (2006). As with theirs, it is mostly concerned with processing, searching and hashing evidence to reduce the time investigators spend analysing it; one point of difference is his focus on creating an audit trail of actions by the software and its users (Ayers 2009).
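One common way to make an audit trail tamper-evident is to chain entries by hash, so that altering any earlier entry invalidates the entries after it. The Python sketch below uses that technique as an illustration; it is not necessarily how Ayers implements his audit trail, the AuditTrail class is hypothetical, and timestamps are omitted for brevity.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    # sort_keys makes the serialisation, and therefore the hash, deterministic
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log in which each entry includes the hash of the previous entry."""

    def __init__(self):
        self.entries = []

    def record(self, actor, action):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {"actor": entry["actor"], "action": entry["action"], "prev": entry["prev"]}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```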

Farrell (2009), in his thesis, works from a similar premise: storage devices are getting cheaper and larger, so automatically processing evidence to create automated reports will help remove “some of the load” from law enforcement staff (Farrell 2009). His analysis method is fairly easy to implement, as it primarily collects statistics about various forms of data in the evidence and collates them into a report, e.g. the most used email addresses, web pages and recently accessed documents. A welcome feature is the creation of separate reports for different users, which is very useful for determining who on the computer performed which actions. Like Richard and Roussev, he mentions using hash functions combined with sets of known good and known bad files to decide which files to ignore and which to flag as important, helping with the extraction of relevant data and the removal of irrelevant data (e.g. DeNISTing). Statistics are a simple method of analysis to implement in an automatic analysis system, and they help provide a profile of the system.
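Per-user statistics of this kind are straightforward to compute. The Python sketch below assumes events have already been extracted and attributed to users, with hypothetical "user", "kind" and "value" keys, and reports the most frequent values of each kind for each user.

```python
from collections import Counter, defaultdict


def per_user_report(events, top_n=3):
    """events: iterable of dicts with 'user', 'kind' (e.g. 'email', 'url',
    'document') and 'value'. Returns {user: {kind: [(value, count), ...]}}."""
    stats = defaultdict(lambda: defaultdict(Counter))
    for e in events:
        stats[e["user"]][e["kind"]][e["value"]] += 1
    return {user: {kind: counter.most_common(top_n) for kind, counter in kinds.items()}
            for user, kinds in stats.items()}
```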

Elsaesser and Tanner discuss using abstract models to “guide” the analysis of a computer that has been attacked, to find details of the network intrusion (Elsaesser & Tanner 2001). Like the previous papers, theirs looks at ways to help the investigator deal with the large amount of data on computers, although in this case it specifically examines log files for signs of network intrusion. The abstract models they put forward could also be applied to the general analysis of evidence to determine the capabilities a user had, and from that which activities they could or could not have performed. For example, if new software was installed on the system but the user in question did not have the rights to install software, this should raise an alert for further analysis. These concepts are quite viable for detecting abnormal activity but may require more processing time.
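The software installation example reduces to a simple check once install events and group memberships have been extracted. In this Python sketch the input structures and function name are hypothetical, and the rule is deliberately simplified: on Windows an installation may run under another account (for example through elevation), so a hit should prompt further analysis rather than be treated as a finding.

```python
def privilege_anomalies(install_events, user_groups, required_group="Administrators"):
    """Flag software installs attributed to users who lacked the required group.

    install_events: list of dicts with 'user' and 'software' keys.
    user_groups: {user: set of group names}.
    """
    alerts = []
    for event in install_events:
        groups = user_groups.get(event["user"], set())
        if required_group not in groups:
            alerts.append(f"{event['user']} installed {event['software']} "
                          f"without membership of {required_group}")
    return alerts
```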

Regarding the use of general-purpose programming languages, Garfinkel's paper on the creation of fiwalk.py using the pyflag library shows how Python can be used to extract filesystem information and file metadata, and to output the processed information as easily parsable XML (eXtensible Markup Language) files, leaving the output readily available for others to parse and extend in other programs (Garfinkel 2009). This sort of system helps the development of other tools, as it presents the extracted information in an open format that is easy to modify and use.
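The benefit of open XML output is that any program can read it back with a standard parser. The Python sketch below writes and re-reads a simple document using xml.etree.ElementTree. The element names are loosely modelled on DFXML's fileobject, filename and filesize elements, but the schema here is simplified and should not be taken as the actual DFXML format or fiwalk's output.

```python
import xml.etree.ElementTree as ET


def to_xml(file_records):
    """Serialise file metadata records to a simple DFXML-inspired XML string."""
    root = ET.Element("dfxml")
    for rec in file_records:
        fo = ET.SubElement(root, "fileobject")
        for key in ("filename", "filesize", "mtime"):
            ET.SubElement(fo, key).text = str(rec[key])
    return ET.tostring(root, encoding="unicode")


def from_xml(text):
    """Read the records back, as a downstream tool would (all values as strings)."""
    root = ET.fromstring(text)
    return [{child.tag: child.text for child in fo} for fo in root.iter("fileobject")]
```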

Computer Profiling

Andrew Marrington was involved in writing several important papers on “computer profiling”. His premise is to simplify the analysis of evidence by generating a profile of it, giving the investigator a good idea of what activity has occurred without having to do the analysis themselves, and making it easy to decide whether a full analysis of the evidence is needed. This is a common theme: as storage grows, profiles of evidence will help investigators quickly see whether there may be findings of interest. This moves towards the concept of automatic digital forensic analysis.

In their work on the forensic reconstruction of computer activity using events, Marrington et al. identify four classes of objects found on a computer system, namely “Application, Content, Principal, and System”, and discuss the detection of relationships between them (Marrington et al. 2007). They note that finding relationships between objects is important but complicated on computers because of the many different data formats involved, and they put forward models and ideas on how this could be done. Their proposed profiling system uses information from the filesystem, event logs, file metadata (using libextractor), Word document metadata and user information from the registry, which together cover the most important data areas on a computer. However, there is no mention of using link files, jump lists, further registry artefacts (dates and times, UserAssist, shellbags, the shim cache, etc.) or internet history, all of which would broaden the information available for analysis. The analysis performed is profile-based and does not check for common items of interest, such as signs of IP theft or malware, but it does make it much easier for an analyst to get a good overview of the evidence.
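The four object classes and the idea of detecting relationships between them can be sketched as follows. The two relationship rules (a principal owns a content object; an application created it) and the `owner`/`creator` attributes are illustrative assumptions, not the rules used by Marrington et al. (2007).

```python
from dataclasses import dataclass
from enum import Enum


class ObjectClass(Enum):
    """The four classes of objects named by Marrington et al. (2007)."""
    APPLICATION = "application"
    CONTENT = "content"
    PRINCIPAL = "principal"
    SYSTEM = "system"


@dataclass(frozen=True)
class ComputerObject:
    name: str
    kind: ObjectClass
    owner: str = ""    # hypothetical: name of the principal that owns the object
    creator: str = ""  # hypothetical: name of the application that wrote the object


def find_relationships(objects):
    """Return (subject, relation, object) triples for content objects, using two illustrative rules."""
    by_name = {obj.name: obj for obj in objects}
    triples = []
    for obj in objects:
        if obj.kind is not ObjectClass.CONTENT:
            continue
        owner = by_name.get(obj.owner)
        if owner is not None and owner.kind is ObjectClass.PRINCIPAL:
            triples.append((owner.name, "owns", obj.name))
        creator = by_name.get(obj.creator)
        if creator is not None and creator.kind is ObjectClass.APPLICATION:
            triples.append((creator.name, "created", obj.name))
    return triples


if __name__ == "__main__":
    objects = [
        ComputerObject("alice", ObjectClass.PRINCIPAL),
        ComputerObject("WINWORD.EXE", ObjectClass.APPLICATION),
        ComputerObject("plan.doc", ObjectClass.CONTENT, owner="alice", creator="WINWORD.EXE"),
    ]
    print(find_relationships(objects))
```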

Andrew Marrington's PhD thesis expands on the previous paper, discussing computer profiling in more depth and examining related areas such as data mining, statistical analysis of files based on their extractable text and, in particular, different “computational models”. The thesis examines the computational models put forward by Brian Carrier and by Gladyshev and Patel, concludes that these models are not feasible without a method to automatically describe evidence in terms of “a finite state model” (Marrington 2009), and decides that a better approach is to model the computer's history using the event log as its foundation. Analysis of computer evidence is a complex problem because there are many different file formats, types of metadata and event logs to be processed and analysed. Marrington also notes that models are sometimes hard to translate to the real world, and that implementing a model is the best test of it.

The following paper on detecting inconsistencies in timelines shows what Marrington's model can achieve when implemented in software. Marrington et al. describe a specific automatic analysis for detecting changes to the computer clock, based on the fact that certain events and actions cannot occur before a user has logged on to the system (Marrington et al. 2011). Users must complete the login process before they can open applications; if events show a user opening applications at a time when they were not logged in, this indicates that the clock has been changed. To detect this, the authors correlate filesystem and document metadata with event log information, comparing user activity against logon and logoff events. This is an excellent system, which could be paired with further user activity information extracted from the user's registry hive (NTUSER.DAT), internet history and other sources to give more detail about user activity.
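A simplified sketch of the logon-session consistency check described above, assuming logon/logoff events and user activity have already been extracted into `(timestamp, user, ...)` tuples. It is not the CAT Detect implementation; the tuple layouts and the function names `build_sessions` and `inconsistent_activity` are hypothetical.

```python
from datetime import datetime


def build_sessions(logon_events):
    """Pair each user's logon with their next logoff, giving (user, start, end) sessions.

    logon_events: iterable of (timestamp, user, kind) tuples, kind being "logon" or "logoff".
    A logon with no later logoff is treated as still open (end is None).
    """
    open_logons = {}
    sessions = []
    for ts, user, kind in sorted(logon_events):
        if kind == "logon":
            open_logons[user] = ts
        elif kind == "logoff" and user in open_logons:
            sessions.append((user, open_logons.pop(user), ts))
    sessions.extend((user, start, None) for user, start in open_logons.items())
    return sessions


def inconsistent_activity(activity, sessions):
    """Return (timestamp, user, description) events that fall outside every session of that user."""
    return [
        (ts, user, desc)
        for ts, user, desc in activity
        if not any(
            u == user and start <= ts and (end is None or ts <= end)
            for u, start, end in sessions
        )
    ]


if __name__ == "__main__":
    logons = [
        (datetime(2013, 3, 1, 9, 0), "alice", "logon"),
        (datetime(2013, 3, 1, 17, 0), "alice", "logoff"),
    ]
    activity = [
        (datetime(2013, 3, 1, 10, 30), "alice", "opened report.doc"),
        (datetime(2013, 3, 1, 3, 0), "alice", "opened plan.xls"),
    ]
    # The 03:00 event precedes any logon, so it is reported as inconsistent.
    print(inconsistent_activity(activity, build_sessions(logons)))
```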

There are additional methods for detecting changes to the system time which could also have been mentioned in the paper, and their absence leaves gaps in its analysis. Windows event log files are themselves sequential (ring buffers), so a new entry should not be older than the entries written before it. This can easily be checked by parsing the event log files, sorting the entries by their offset in the file and comparing the timestamp of each entry with that of the previous one. Other sources with sequential entries can be examined in the same way to detect time changes, such as thumbs.db thumbnail database files, the NTFS USN journal, Windows restore points and volume shadow copies.
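The sequential-log check can be sketched as below, assuming entries have already been parsed into `(record_number, timestamp)` pairs. Ordering by record number is a substitution for the file-offset ordering described above, made because the wrap point of a ring buffer would otherwise look like a backward jump. The `tolerance_seconds` parameter, which allows for small legitimate clock corrections, is also an assumption.

```python
from datetime import datetime


def find_backward_jumps(entries, tolerance_seconds=0):
    """Flag places where a log entry is older than the entry written just before it.

    entries: iterable of (record_number, timestamp) pairs parsed from one log file.
    Returns (previous_record, previous_time, record, time) tuples for each backward
    jump larger than tolerance_seconds.
    """
    ordered = sorted(entries)  # sorts by record number, i.e. the order entries were written
    jumps = []
    for (prev_no, prev_ts), (no, ts) in zip(ordered, ordered[1:]):
        if (prev_ts - ts).total_seconds() > tolerance_seconds:
            jumps.append((prev_no, prev_ts, no, ts))
    return jumps


if __name__ == "__main__":
    log = [
        (1, datetime(2013, 3, 1, 9, 0)),
        (2, datetime(2013, 3, 1, 9, 5)),
        (3, datetime(2013, 2, 20, 8, 0)),  # clock apparently wound back about nine days
        (4, datetime(2013, 2, 20, 8, 1)),
    ]
    print(find_backward_jumps(log))
```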

Garfinkel, the creator of the bulk extractor tool, compares different computers using what he calls “cross-drive analysis” over the output of the bulk extractor program to find which computers are related (Garfinkel 2006). The bulk extractor tool processes a disk image at the sector level: it does not read the filesystem or parse any files, but simply processes the text it can extract from each sector. While this is not sophisticated in itself, it allows the tool to produce a rough profile of the computer's activity. Most tools are document- or filesystem-based and do not focus on analysing unallocated areas, which this tool does. The gathered profile data can then be compared with other computers' profiles to see whether there are any connections.

One weakness is that the tool cannot properly read fragmented files, although modern operating systems defragment their filesystems automatically, so this is less of an issue. The other weakness is its limited ability to read inside compressed files (e.g. PST mailboxes) or encrypted files. Even so, bulk extractor is impressive in that it can decompress and read some compressed file types, and judging from its roadmap this capability will only improve. Its statistical features, combined with the data it collects, show that simply reporting what occurs most often, such as the most visited URLs or the most frequent email addresses, will often reveal a profile of behaviour on the machine, and they demonstrate how useful statistics can be for quickly bringing information of interest out of a sea of irrelevant data. Analysis of user activities, however, is only lightly covered by this tool, as it does not parse the registry, link files, event logs or filesystem metadata.
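The statistical idea behind bulk extractor's histograms and cross-drive analysis can be illustrated with a short sketch that scans raw bytes for e-mail-like strings, counts them, and reports features that appear on more than one drive. The regular expression is a simplified assumption and far cruder than bulk extractor's own scanners, and counting shared features is a simplification of Garfinkel's (2006) cross-drive analysis.

```python
import re
from collections import Counter

# Simplified e-mail pattern; bulk extractor's own scanners are far more thorough.
EMAIL_RE = re.compile(rb"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def email_histogram(raw):
    """Count e-mail-like strings in raw bytes, with no knowledge of files or filesystems."""
    return Counter(match.group().lower() for match in EMAIL_RE.finditer(raw))


def shared_features(histograms):
    """Return {feature: number_of_drives} for features found on more than one drive.

    histograms: dict mapping a drive name to that drive's feature Counter.
    """
    drives_per_feature = Counter()
    for histogram in histograms.values():
        drives_per_feature.update(set(histogram))
    return {feature: n for feature, n in drives_per_feature.items() if n > 1}


if __name__ == "__main__":
    drive_a = b"\x00\x00bob@example.com junk BOB@example.com\xff alice@corp.org"
    drive_b = b"random bob@example.com bytes carol@other.net"
    hist_a = email_histogram(drive_a)
    print(hist_a.most_common(1))  # the most frequent address on drive A
    print(shared_features({"A": hist_a, "B": email_histogram(drive_b)}))
```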

Timeline analysis

To extract as much intelligence as possible about activities and their dates and times, investigators began creating timelines of computer activity to help with analysis. Initially they created timelines of filesystem activity for the analysis of incidents, and because this proved helpful, the method grew, with investigators parsing as many areas of a computer as possible to extract dates, times and signs of activity. This was originally done manually by combining, in Excel, the internet history, parsed event logs, registry entries and filesystem data gathered with many different tools. It was a laborious process, because the different outputs of these tools all had to be converted into a common format for analysis.

This manual and time-consuming job was automated by the log2timeline software, described in Guðjónsson's seminal 2010 paper on mastering the super timeline. The log2timeline software improved the creation of timelines by parsing many different areas of the computer for artefacts carrying dates and times, and combining them into a single format that is easy for analysts to use, which Guðjónsson calls the “Super Timeline”. The log2timeline tool also provided utilities for basic filtering.
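The core of a super timeline, converting each source's records into one common format and sorting them chronologically, can be sketched as below. The `TimelineEntry` fields, the source labels and the two parser functions are hypothetical stand-ins; log2timeline's real parsers and output formats are far richer.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True, order=True)
class TimelineEntry:
    # Field order matters: order=True compares timestamp first, so entries sort chronologically.
    timestamp: datetime
    source: str       # illustrative labels such as "FILE" or "EVT"
    description: str


def from_filesystem(rows):
    """Hypothetical parser: (path, modified_time) pairs from a filesystem listing."""
    return [TimelineEntry(mtime, "FILE", f"modified {path}") for path, mtime in rows]


def from_event_log(rows):
    """Hypothetical parser: (event_id, time_generated, message) tuples from an event log."""
    return [TimelineEntry(ts, "EVT", f"event {event_id}: {msg}") for event_id, ts, msg in rows]


def super_timeline(*sources):
    """Merge the normalised entries of every source into one chronological list."""
    return sorted(entry for source in sources for entry in source)


if __name__ == "__main__":
    files = from_filesystem([("C:/Users/alice/plan.doc", datetime(2013, 3, 1, 10, 0))])
    events = from_event_log([(4624, datetime(2013, 3, 1, 9, 0), "logon")])
    for entry in super_timeline(files, events):
        print(entry.timestamp.isoformat(), entry.source, entry.description)
```

Once every source is reduced to the same record type, adding a new artefact only requires a new parser; the merging and everything downstream stay unchanged.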

Timelines help with the analysis of incidents because an investigator can look at a time period of interest and examine all the activity recorded during it. For example, examining the timeline around the time a virus infected the system can help find all of the virus's changes to the filesystem and registry, pinpoint the infection vector, and discover how the virus starts and where it is stored. Creating timelines is much easier with the log2timeline tool, but the analyst still has to perform the analysis, which can be a burden given the vast amount of data these timelines often contain. To analyse a timeline fully, analysts need to understand what the entries mean and how they fit into the operation of a computer (Guðjónsson 2010), which requires a lot of knowledge.

Guðjónsson was concerned that the ability of log2timeline to extract so much detailed information raises the need for filtering to remove irrelevant entries and keep only the relevant ones. At present there is no simple automated way to do this, apart from focusing on known time periods of interest or using specific whitelist or blacklist keywords to refine the timeline. Being able to find suspicious entries easily would speed up investigations by quickly reducing the amount of information the investigator needs to wade through. This requires a quick and easy way to remove irrelevant or known-good entries so as to focus on the items of interest (Guðjónsson 2010), a task best automated. Graphing the number of entries over time makes the timeline easy to visualise, helps detect spikes and surges of activity, and gives an overall profile of activity on the computer. Easy visualisation of the timeline also helps with the presentation of reports, as it helps non-technical people such as lawyers and judges to understand the data. Most of the ideas proposed by Guðjónsson for simplifying the output of log2timeline are directly applicable to an automatic analysis system.
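Counting entries per hour gives the data behind an activity graph, and a simple statistical threshold can flag surges automatically. This is a minimal sketch: the hourly bucket size and the three-standard-deviation threshold are arbitrary assumptions that would need tuning against real timelines.

```python
from collections import Counter
from datetime import datetime, timedelta
from statistics import mean, pstdev


def entries_per_hour(timestamps):
    """Bucket timeline timestamps by hour: the data behind an activity graph."""
    return Counter(ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps)


def spikes(hourly_counts, threshold=3.0):
    """Return the hours whose count lies more than `threshold` standard deviations above the mean.

    Only hours containing at least one entry are considered; the default threshold is arbitrary.
    """
    counts = list(hourly_counts.values())
    if len(counts) < 2:
        return []
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:
        return []
    return sorted(hour for hour, count in hourly_counts.items() if (count - mu) / sigma > threshold)


if __name__ == "__main__":
    start = datetime(2013, 3, 1)
    # Two entries an hour for ten hours, then fifty in one hour (e.g. a mass file copy).
    stamps = [start + timedelta(hours=h, minutes=m) for h in range(10) for m in (5, 40)]
    stamps += [start + timedelta(hours=10, minutes=m) for m in range(50)]
    print(spikes(entries_per_hour(stamps)))
```

The same hourly counts can be passed straight to any plotting library to produce the activity graph itself.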

Analysis

As part of their model, Marrington et al. proposed “Four phases of analysis”, which fit the model's focus on the event log: discovery, content categorisation and extraction, relationship extraction, and event correlation. Together these form an excellent model for processing data into more manageable forms (Marrington et al. 2011). The last phase, “Event correlation”, is required to link all of the discovered data and relationships to the event log entries; a system that does not focus on log entries, for example one built on the output of Guðjónsson's log2timeline software (Guðjónsson 2010), could leave out this phase.

The log2timeline software is impressive in the breadth of metadata and logs it can extract. As mentioned above, Marrington's system extracted filesystem, metadata and log information from the most important places, but this coverage is small compared with the range of sources from which log2timeline extracts filesystem, metadata and log data. The result is a vast amount of data, but fortunately it is presented in a standardised format that eases analysis.

Super timelines make it much easier to visualise activity on the system at any point in time. Temporal analysis is the analysis of events around a specific time. For a malware infection, temporal analysis of the timeline around the time of infection can reveal the point of infection, all malware-related files and their locations, the malware's method of starting up, and other changes it may have made (e.g. disabling antivirus software). The timeline can also be analysed for spikes of activity, which often indicate events of interest, such as the copying or deletion of many files, an antivirus scan or a software installation.
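Temporal analysis reduces to selecting the entries within a window around the moment of interest. The sketch assumes the timeline is a list of `(timestamp, description)` tuples; the function name `around` and the 30-minute default window are arbitrary illustrations.

```python
from datetime import datetime, timedelta


def around(timeline, moment, minutes=30):
    """Return the (timestamp, description) entries within +/- `minutes` of a moment of interest."""
    window = timedelta(minutes=minutes)
    return [entry for entry in timeline if abs(entry[0] - moment) <= window]


if __name__ == "__main__":
    timeline = [
        (datetime(2013, 3, 1, 9, 0), "logon alice"),
        (datetime(2013, 3, 1, 13, 55), "web history: download setup.exe"),
        (datetime(2013, 3, 1, 14, 1), "file created C:/Users/alice/AppData/Roaming/svc.exe"),
        (datetime(2013, 3, 1, 14, 2), "registry Run key value added"),
        (datetime(2013, 3, 1, 18, 0), "logoff alice"),
    ]
    infection = datetime(2013, 3, 1, 14, 0)
    for ts, description in around(timeline, infection):
        print(ts.isoformat(), description)
```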

The log2timeline software does little analysis itself, but it collects and parses information from many areas and presents it in a simple format ready for analysis. It would be interesting to see the results if Marrington et al. were to combine the information that log2timeline extracts with their analysis system, and the additional detail and relationships their reporting tool would then be able to produce.

Peisert and Bishop discuss modelling system logs for the detection of actions by intruders. Their paper is mostly applicable to implementing forensic readiness, through the detection of suspicious incidents, rather than to digital forensic analysis. However, they do discuss an analysis model, which they label “Requires/Provides”, built on the concept of “Capabilities”: those needed to achieve a goal, and those provided once that goal has been achieved (Peisert & Bishop 2007). In forensic analysis this could be used, for example, to detect that software has been installed or used which provides capabilities that do not fit the types of activity expected of the user, such as peer-to-peer software like FrostWire, or even visits to certain types of websites.
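The “provides” half of this idea can be illustrated by mapping observed software to the capabilities it provides and reporting any capability outside the user's expected profile. The `PROVIDES` table, the capability names and the expected profile are hypothetical; Peisert and Bishop's model also covers the capabilities required to reach a goal, which this sketch omits.

```python
# Hypothetical mapping from observed programs (lower-case executable names) to capabilities they provide.
PROVIDES = {
    "frostwire.exe": {"peer-to-peer file sharing"},
    "winword.exe": {"document editing"},
}


def unexpected_capabilities(observed_programs, expected):
    """Return {program: sorted capabilities} for programs providing capabilities outside `expected`."""
    findings = {}
    for program in observed_programs:
        extra = PROVIDES.get(program.lower(), set()) - set(expected)
        if extra:
            findings[program] = sorted(extra)
    return findings


if __name__ == "__main__":
    seen = ["WINWORD.EXE", "FrostWire.exe", "notepad.exe"]
    print(unexpected_capabilities(seen, {"document editing"}))
```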

Carrier and Spafford used an automated analysis technique that looks for outlier files by discovering and classifying normal activity (Carrier & Spafford 2005). Outlier files or activities are those that are not normal, and they can be detected once rules have been created that whitelist normal activity. For example, executable files in the c:\windows\fonts directory are abnormal and should be detected as outlier activity. Categorising outlier files can be helpful for automatic analysis, but it depends on a good rule set of blacklisted and whitelisted files. The same approach is directly applicable to analysis of the registry for detecting outlier “Autoruns”. Autoruns are programs configured to run automatically at system startup, a mechanism commonly used by unwanted programs such as malware and viruses.
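A rule-based outlier check of the kind described above might look like the sketch below. The directory rule, the list of executable extensions and the autorun whitelist are hypothetical examples, and autorun commands are assumed to be bare paths without quotes or arguments; this is not Carrier and Spafford's implementation.

```python
from pathlib import PureWindowsPath

# Hypothetical rule set; a real one would be far larger and built from known-good systems.
EXECUTABLE_EXTENSIONS = {".exe", ".dll", ".scr", ".sys", ".com"}
DIRECTORIES_WITHOUT_EXECUTABLES = {r"c:\windows\fonts"}
KNOWN_GOOD_AUTORUNS = {r"c:\windows\system32\ctfmon.exe"}


def outlier_files(paths):
    """Flag executables stored in directories that should not contain any."""
    flagged = []
    for raw in paths:
        path = PureWindowsPath(raw)
        in_bad_dir = str(path.parent).lower() in DIRECTORIES_WITHOUT_EXECUTABLES
        if in_bad_dir and path.suffix.lower() in EXECUTABLE_EXTENSIONS:
            flagged.append(raw)
    return flagged


def outlier_autoruns(autoruns):
    """Flag (value_name, command) autorun entries whose command is not whitelisted.

    Commands are assumed to be bare executable paths without quotes or arguments.
    """
    return [(name, cmd) for name, cmd in autoruns if cmd.lower() not in KNOWN_GOOD_AUTORUNS]


if __name__ == "__main__":
    print(outlier_files([r"C:\Windows\Fonts\arial.ttf", r"C:\Windows\Fonts\svch0st.exe"]))
    print(outlier_autoruns([("ctfmon", r"C:\Windows\System32\ctfmon.exe"),
                            ("updater", r"C:\Users\bob\AppData\Roaming\upd.exe")]))
```

`PureWindowsPath` parses Windows paths on any host operating system, which suits analysis of a mounted or exported Windows image from a Linux workstation.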

PROPOSAL

In discussing future work in the area of computer timeline creation, Guðjónsson states that “The need for a tool that can assist with the analysis after the creation of the super timeline is becoming a vital part for this project to properly succeed.” (Guðjónsson 2010). This proposal concerns research into automated analysis of the super timeline, with the aim of implementing a tool that provides such automatic analysis.

The concept for implementing such a system is based on the open-source network intrusion detection system (IDS) Snort (Roesch 1999). Snort reads a standardised data source (in Snort's case, network packets), analyses it using user-created rules and raises alerts for packets of interest. Many good ideas already proposed in current research can help in the creation of an automatic analysis system.

Statistics and profiles of each user account will help the investigator get an idea of each user's activity on a computer, and combined they will provide a profile of the computer itself. A rule-based system like Snort could use the output of log2timeline as a standardised source of computer activity information for analysis. With a sufficiently flexible rule language, investigators will be able to easily write new rules to detect new and unforeseen activity.
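A Snort-like rule engine over timeline data could start as simply as the sketch below: each rule names a source type and a regular expression, and every matching entry raises an alert. The `Rule` format, the `source`/`description` entry fields and the example rules are hypothetical; they borrow only Snort's idea of a message and a signature ID, not Snort's actual rule syntax.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: a signature ID and message (as in Snort) plus match conditions."""
    sid: int
    msg: str
    source: str   # timeline source type to match, or "*" for any source
    pattern: str  # regular expression searched for in the entry description

    def matches(self, entry):
        return (self.source in ("*", entry["source"])
                and re.search(self.pattern, entry["description"], re.IGNORECASE) is not None)


def run_rules(rules, timeline):
    """Yield (sid, msg, entry) alerts for each timeline entry that a rule matches."""
    for entry in timeline:
        for rule in rules:
            if rule.matches(entry):
                yield rule.sid, rule.msg, entry


if __name__ == "__main__":
    rules = [
        Rule(1000001, "Peer-to-peer client executed", "*", r"frostwire\.exe"),
        Rule(1000002, "Security event log cleared", "EVT", r"\bevent 1102\b"),
    ]
    timeline = [
        {"source": "REG", "description": "UserAssist: C:/Program Files/FrostWire/FrostWire.exe run 3 times"},
        {"source": "EVT", "description": "event 1102: audit log cleared"},
        {"source": "FILE", "description": "modified C:/Users/alice/notes.txt"},
    ]
    for sid, msg, entry in run_rules(rules, timeline):
        print(sid, msg, "|", entry["description"])
```

Keeping rules as data rather than code is what would let investigators add detections for new activity without modifying the engine.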


SECTION 5 – EXAMINATION CRITERIA FULFILMENT

This is a Masters Minor Thesis; the assessment criteria and how they will be met by the execution and reporting of this

research are described below:

Requirement 1: An ordered critical and reasoned exposition of knowledge gained through the student’s efforts

The literature review, together with experience and additional research in this field, will be elaborated on to show the knowledge that has been gained and how it is being applied to the problems described above. The different approaches and directions taken by the reviewed papers will be analysed and discussed to show that the advantages and disadvantages of each have been considered.

Requirement 2: Evidence of awareness of the literature

The literature review above shows the current research and development in this area and reveals areas where research and testing are lacking. It also introduces ideas that provide directions for new research or development. As existing research in this area may complement the planned research, this information will be used to help develop a solution to the problems identified.


SECTION 6 – REFERENCES

AccessData 2005, Registry Quick Find Chart, p. 16.

Ayers, D 2009, ‘A second generation computer forensic analysis system’, Digital Investigation, vol. 6, pp.

S34–S42, viewed 6 March 2013, <http://linkinghub.elsevier.com/retrieve/pii/S1742287609000371>.

Brownstone, RD 2004, ‘Collaborative Navigation of the Stormy’, Technology, vol. X, no. 5.

Carrier, B 2005, File System Forensic Analysis, Addison-Wesley, viewed 20 April 2013, <http://scholar.google.com/scholar?hl=en&btnG=Search&q=intitle:file+system+forensic+analysis#0>.

Carrier, B & Spafford, EH 2005, ‘Automated digital evidence target definition using outlier analysis and existing evidence’, in Proceedings of the 2005 Digital Forensics Research Workshop, Citeseer, pp. 1–10, viewed 2 April 2011, <http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.81.1345&rep=rep1&type=pdf>.

Elsaesser, C & Tanner, M 2001, ‘Automated diagnosis for computer forensics’, The Mitre Corporation, pp.

1–16, viewed 20 April 2013,

<http://www.mitre.org/work/tech_papers/tech_papers_01/elsaesser_forensics/esaesser_forensics.pdf>.

Farrell, P 2009, ‘A Framework for Automated Digital Forensic Reporting’, Naval Postgraduate School, viewed 20 April 2013, <https://calhoun.nps.edu/public/handle/10945/4878>.

Garfinkel, SL 2006, ‘Forensic feature extraction and cross-drive analysis’, Digital Investigation, vol. 3, pp.

71–81, viewed 11 March 2013, <http://linkinghub.elsevier.com/retrieve/pii/S1742287606000697>.

Garfinkel, SL 2009, ‘Automating Disk Forensic Processing with SleuthKit, XML and Python’, in 2009 Fourth International IEEE Workshop on Systematic Approaches to Digital Forensic Engineering, IEEE, pp. 73–84.

Richard, GG, III & Roussev, V 2006, ‘Next-generation digital forensics’, Communications of the ACM, vol. 49, no. 2, pp. 76–80.

Guðjónsson, K 2010, ‘Mastering the super timeline with log2timeline’, SANS Institute, viewed 21 May 2013, <http://scholar.google.com/scholar?hl=en&btnG=Search&q=intitle:Mastering+the+Super+Timeline+With+log2timeline#0>.

Kent, K, Chevalier, S & Grance, T 2006, ‘Guide to integrating forensic techniques into incident response’,

NIST Special Publication, viewed 23 August 2011, <http://cybersd.com/sec2/800-86Summary.pdf>.

Malik, A 2008, Intellectual Property Crime and Enforcement in Australia, Australian Institute of Criminology, viewed 27 June 2013, <http://www.aic.gov.au/documents/B/D/0/{BD0BC4E6-0599-467A-8F64-38D13B5C0EEB}rpp94.pdf>.

Marrington, A 2009, ‘Computer profiling for forensic purposes’, Queensland University of Technology, viewed

20 April 2013, <http://eprints.qut.edu.au/31048>.

Marrington, A, Baggili, I, Mohay, G & Clark, A 2011, ‘CAT Detect (Computer Activity Timeline Detection): A tool for detecting inconsistency in computer activity timelines’, Digital Investigation, vol. 8, pp. S52–S61, viewed 2 April 2013, <http://linkinghub.elsevier.com/retrieve/pii/S1742287611000314>.


Marrington, A, Mohay, GM, Clark, AJ & Morarji, H 2007, ‘Event-based computer profiling for the forensic

reconstruction of computer activity’, vol. 2007, pp. 71–87, viewed 20 April 2013,

<http://eprints.qut.edu.au/15579>.

Morris, T, Vaughn, R & Dandass, Y 2012, ‘A Retrofit Network Intrusion Detection System for MODBUS RTU and ASCII Industrial Control Systems’, in 2012 45th Hawaii International Conference on System Sciences, IEEE, pp. 2338–2345, viewed 16 March 2013, <http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=6149298>.

National Institute of Justice 2004, Forensic Examination of Digital Evidence: A Guide for Law Enforcement, US Department of Justice, Office of Justice Programs, viewed 13 June 2013, <http://www.ncjrs.gov/App/abstractdb/AbstractDBDetails.aspx?id=199408>.

Peisert, S & Bishop, M 2007, ‘Toward models for forensic analysis’, UC Davis Previously Published Works,

viewed 20 April 2013, <http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4155346>.

Rider, K, Mead, S & Lyle, J 2010, ‘Disk Drive I/O Commands and Write Blocking’, International Federation

for Information …, vol. 242, pp. 163–177, viewed 27 June 2013,

<http://cs.anu.edu.au/iojs/index.php/ifip/article/view/11099>.

Roesch, M 1999, ‘Snort: lightweight intrusion detection for networks’, Proceedings of the 13th USENIX conference on …, viewed 9 April 2013, <http://static.usenix.org/publications/library/proceedings/lisa99/full_papers/roesch/roesch.pdf>.

Stevens, D 2006, Userassist, viewed 27 June 2013, <http://blog.didierstevens.com/programs/userassist/>.

Stevens, D 2010, New Format for UserAssist Registry Keys, December.

Stevens, D 2012, UserAssist Windows 2000 Thru Windows 8, July.

Sutherland, I, Evans, J, Tryfonas, T & Blyth, A 2008, ‘Acquiring volatile operating system data tools and techniques’, ACM SIGOPS Operating …, pp. 65–73, viewed 27 June 2013, <http://dl.acm.org/citation.cfm?id=1368516>.

Tan, J 2001, ‘Forensic readiness’, @stake, Cambridge, MA, pp. 1–23, viewed 16 October 2011, <http://isis.poly.edu/kulesh/forensics/forensic_readiness.pdf>.

Wong, L 2007, ‘Forensic analysis of the Windows registry’, Forensic Focus, viewed 26 June 2013, <http://www.forensictv.net/Downloads/digital_forensics/forensic_analysis_of_windows_registry_by_lih_wern_wong.pdf>.


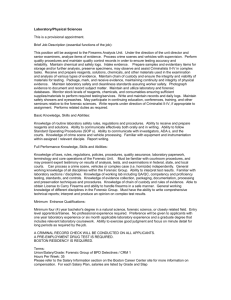

INFT 4017- 2008: Research Proposal

Marking Sheet Part 1: Supervisor’s Marking Guide

Title:

Student:

Student ID:

Supervisor:

Note to Supervisors:

• The proposal is worth 50% of the marks for INFT 4017 – and the supervisor’s mark is worth half of

that (i.e. the supervisor’s mark is worth 25% of the total marks for this course).

• The main purpose of this course is: (a) to ensure that students understand the principles of research

and the research methods relevant to their area of interest; as well as (b) to assist them to prepare an

appropriate research proposal for whichever thesis course they are enrolled in (whether this be an

Honours thesis, a Masters by Research thesis, a major research project for a Masters by coursework

degree, or a PhD thesis)

• The present research proposal submission is designed to give students feedback on their research

effort early enough in their project (ideally, before they start their empirical work) so as to: (a) ensure

they are reading in the right area for their problem; (b) identify any problems before they are built into

the student’s actual research work; and (c) to reassure students about the areas where they are

managing well.

• By the time you receive this marking guide, the student will be about to submit his/her proposal to me

as Course Coordinator of INFT 4017. These proposals will be sent to the appropriate supervisor as an

email attachment

• Please mark the proposal; and then return any annotated proposals + a copy of your completed form

to Jiuyong Li by 28th June at jiuyong.li@unisa.edu.au.

• Please feel free to discuss your feedback with the student

Criteria

Mark

Research question clearly defined and qualifies as a valid

research question within the specific area of research

(max. mark = 5)

Valid use of domain-specific literature – student has cited

the seminal literature in this field (max. mark = 5)

Motivation for selecting this topic presented and convincing (max. mark = 3)

Argument for potential contribution to knowledge in the

specific field of study (max. mark = 3)

Methodology appropriate to the specific domain of study

and clearly outlined (max. mark = 3)

Expected outcomes appropriate for the topic and clearly

described (max. mark = 3)

Scope and limits: the area to be studied is well defined

(max. mark = 3)

Supervisor’s comments


Comment

Overall comments:
