Multivariate Data Analysis – CSIC

advertisement

Multivariate Data Analysis

Session 0: Course outline

Carlos Óscar Sánchez Sorzano, Ph.D.

Madrid

Motivation for this course

2

Motivation for this course

3

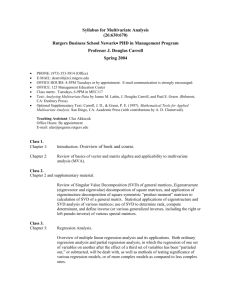

Course outline

4

Course outline: Session 1

1. Introduction

1.1. Types of variables

1.2. Types of analysis and technique selection

1.3. Descriptors (mean, covariance matrix)

1.4. Variability and distance

1.5. Linear dependence

2. Data Examination

2.1. Graphical examination

2.2. Missing Data

2.3. Outliers

2.4. Assumptions of multivariate analysis

5

Course outline: Session 2

3. Principal component analysis (PCA)

3.1. Introduction

3.2. Component computation

3.3. Example

3.4. Properties

3.5. Extensions

3.6. Relationship to SVD

4. Factor Analysis (FA)

4.1. Introduction

4.2. Factor computation

4.3. Example

4.4. Extensions

4.5. Rules of thumb

4.6. Comparison with PCA

6

Course outline: Session 3

5. Multidimensional Scaling (MDS)

5.1. Introduction

5.2. Metric scaling

5.3. Example

5.4. Nonmetric scaling

5.5. Extensions

6. Correspondence analysis

6.1. Introduction

6.2. Projection search

6.3. Example

6.4. Extensions

7. Tensor analysis

7.1 Introduction

7.2 Parafac/Candecomp

7.3 Example

7.4 Extensions

7

Course outline: Session 4

8. Multivariate Analysis of Variance (MANOVA)

8.1. Introduction

8.2. Computations (1-way)

8.3. Computations (2-way)

8.4. Post-hoc tests

8.5. Example

9. Canonical Correlation Analysis (CCA)

9.1. Introduction

9.2. Construction of the canonical variables

9.3. Example

9.4. Extensions

10. Latent Class Analysis (LCA)

10.1. Introduction

8

Course Outline

Theory

Theory

Theory

Theory

Practice

Theory

Theory

Theory

Theory

Practice

Theory

Practice

Practice

Practice

Practice

9

Suggested readings: Overviews

It is suggested to read (before coming):

• H. Abdi. Multivariate Analysis. In: Lewis-Beck M., Bryman, A., Futing

T. (Eds.) (2003). Encyclopedia of Social Sciences Research Methods.

Thousand Oaks (CA): Sage.

• S. Sanogo and X.B. Yang. Overview of Selected Multivariate Statistical

Methods and Their Use in Phytopathological Research. Phytopathology,

94: 1004-1006 (2004)

10

Resources

Data sets

http://www.models.kvl.dk/research/data

http://kdd.ics.uci.edu

Links to organizations, events, software, datasets

http://www.statsci.org/index.html

http://astro.u-strasbg.fr/~fmurtagh/mda-sw

http://lib.stat.cmu.edu

Lecture notes

http://www.nickfieller.staff.shef.ac.uk/sheff-only/pas6011-pas370.html

http://www.gseis.ucla.edu/courses/ed231a1/lect.html

11

Bibliography

•

D. Peña. Análisis de datos multivariantes, Mc GrawHill, 2002

•

B. Manly. Multivariate statistical methods: a primer. Chapman & Hall/CRC,

2004.

•

J. Hair, W. Black, B. Babin, R. Anderson. Multivariate Data Analysis (6th ed),

Prentice Hall, 2005.

•

B. Everitt, G. Dunn. Applied multivariate data analysis. Hodder Arnold, 2001

•

N. H. Timm. Applied multivariate analysis. Springer, 2004

•

L. S. Meyers, G. C. Gamst, A. Guarino. Applied multivariate research: design

and interpretation. Sage, 2005

•

J. L. Schafer. Analysis of incomplete multivariate data. Chapman & Hall/CRC,

1997

•

M. Bilodeau, D. Brenner. Theory of multivariate statistics. Springer, 2006

12

Multivariate Data Analysis

Session 1: Introduction and data examination

Carlos Óscar Sánchez Sorzano, Ph.D.

Madrid

Course outline: Session 1

1. Introduction

1.1. Types of variables

1.2. Types of analysis and technique selection

1.3. Descriptors (mean, covariance matrix)

1.4. Variability and distance

1.5. Linear dependence

2. Data Examination

2.1. Graphical examination

2.2. Missing Data

2.3. Outliers

2.4. Assumptions of multivariate analysis

2

1. Introduction

3

1.1 Introduction: Types of variables

Data

Discrete SexÎ{Male,Female}

No. SonsÎ{0,1,2,3,…}

Continuous TemperatureÎ[0,¥)

Metric

or

Quantitative

Nonmetric

or

Qualitative

Nominal/

Categorical

Scale

0=Male

1=Female

Ordinal

Scale

0=Love

1=Like

2=Neither like nor dislike

3=Dislike

4=Hate

Interval

Scale

Years:

…

2006

2007

…

Ratio

Scale

Temperature:

0ºK

1ºK

…

4

1.1 Introduction: Types of variables

Coding of categorical variables

Hair Colour

{Brown, Blond, Black, Red}

No order

Peter: Black

Molly: Blond

Charles: Brown

Company size

{Small, Medium, Big}

Company A: Big

Company B: Small

Company C: Medium

xBrown , xBlond , xBlack , xRed 0,1

4

Peter:

Molly:

Charles:

Implicit order

0, 0,1, 0

0,1, 0, 0

1, 0, 0, 0

xsize 0,1, 2

Company A: 2

Company B: 0

Company C: 1

5

1.2 Introduction: Types of analysis

Analysis

Y f (X )

f ( X ,Y ) 0

Dependence

Interdependence

A variable or set of variables is identified as the dependent variable to be

predicted or explained by other variables known as independent variables.

Example:

(No. Sons, House Type)=

f(Income, Social Status, Studies)

•Multiple Discriminant Analysis

•Logit/Logistic Regression

•Multivariate Analysis of Variance (MANOVA) and Covariance

•Conjoint Analysis

•Canonical Correlation

•Multiple Regression

•Structural Equations Modeling (SEM)

6

1.2 Introduction: Types of analysis

Analysis

Y f (X )

Dependence

f ( X ,Y ) 0

Interdependence

Example: Who is similar to whom?

(No. Sons, House Type, Income, Social Status, Studies, …)

Involves the simultaneous analysis of all variables in the set, without

distinction between dependent variables and independent variables.

•Principal Components and Common Factor Analysis

•Cluster Analysis

•Multidimensional Scaling (perceptual mapping)

•Correspondence Analysis

•Canonical Correlation

7

1.2 Introduction: Technique selection

• Multiple regression: a single metric variable is predicted by

several metric variables.

Example:

No. Sons=f(Income, No. Years working)

• Structural Equation Modelling: several metric variables are

predicted by several metric (known and latent) variables

Example:

(No. Sons, House m2)=f(Income, No. Years working, (No. Years Married))

8

1.2 Introduction: Technique selection

• Multiple Analysis of Variance (MANOVA): Several metric

variables are predicted by several categorical variables.

Example:

(Ability in Math, Ability in Physics)=f(Math textbook, Physics textbook, College)

• Discriminant analysis, Logistic regression: a single categorical

(usually two-valued) variable is predicted by several metric

independent variables

Example:

Purchaser (or non purchaser)=f(Income,No. Years working)

9

1.2 Introduction: Technique selection

• Canonical correlation: Several metric variables are predicted by

several metric variables

Example:

(Grade Chemistry, Grade Physics)=f(Grade Math, Grade Latin)

• Conjoint Analysis: An ordinal variable (utility function) is

predicted by several categorical/ordinal/metric variables

Example:

TV utility=f(Screen format, Screen size, Brand, Price)

• Classification Analysis: Predict categorical variable from several

metric variables.

Example:

HouseType=f(Income,Studies)

10

1.2 Introduction: Technique selection

• Factor analysis/Principal Component Analysis: explain the

variability of a set of observed metric variables as a function of

unobserved variables (factors)

Example:

(Grade Math, Grade Latin, Grade Physics)=f(Intelligence, Maturity)

• Correspondence analysis: similar to factor analysis but with

categorical data.

Example:

(Eye colour, Hair colour, Skin colour)=f(gene A, gene B)

• Cluster analysis: try to group individuals according to similar

characteristics

Example:

(Grade Math, Grade Latin, Grade Physics, Grade Philosophy, Grade History)

11

1.2 Introduction: Technique selection

• Multidimensional scaling: Find representative factors so that the

relative dissimilarities in the original space are as conserved as

possible

Example:

(x,y)=f(City gross income, health indexes,

population, political stability, … )

12

(Basic vector and matrix algebra)

xt

13

(Basic vector and matrix algebra)

Vector

Norm

x

x

x, x

N

Internal product

Dot product

x, y y , x x y xt y xi yi x y cos

i 1

y

x

Projx y

Orthogonality

x, y

x

ux

x, y

x

2

x x(xt x) 1 xt y

x y x, y 0

14

(Basic vector and matrix algebra)

field

Linear span

span x1 , x 2 ,..., x r x 1x1 2 x 2 ... r x r 1 , 2 ,..., r K

Linearly dependent, i.e.,

0 x 1x1 2 x 2 ... r x r 0

uz

span u y , u z

uy

ux

span u x span u y , u z

span u x C span u y , u z

Complementary spaces

15

(Basic vector and matrix algebra)

uz

y

Assuming that u x ,u y

uy

x

ux

E span u x , u y

is a basis of the spanned space

x ProjE y Proju x y Proju y y

X ( X t X ) 1 X t y

Basis vectors of E as columns

y x E y x C E

y x yx

2

2

2

16

1.3 Descriptors: Data representation

x1t x11

t

x 2 x21

X

... ...

t

x n xn1

x12

x22

...

xn 2

... x1 p

... x2 p

... ...

... xnp

Features

Individuals

X

x t2

17

1.3 Descriptors: Univariate analysis

x1t x11

t

x 2 x21

X

... ...

t

x n xn1

Sample mean

Sample standard

deviation

Sample variation

coefficient

x12

x22

...

xn 2

... x1 p

... x2 p

... ...

... xnp

1 n

x2 xi 2

n i 1

1 n

2

s2

x

x

i2 2

n i 1

VC2

x22

s22

m2

If outliers

Robust statistics

Sample median

Pr X 2 m2

1

Pr X 2 m2

2

MAD2 Sample Median

Absolute Deviation

Median x2 m2

18

1.3 Descriptors: Mean and covariance

x 1t x11

t

x 21

x

X 2

... ...

t

x n x n1

x12

...

x 22

...

...

...

xn 2

...

x1 p

x2 p

...

x np

x x1 x2 ... x p

1 t

x X 1 Sample mean

n

t

x 1 x t

x 2 x t

X

...

t

x

x

n

X X 1 x t

x x

x12 x 2 ... x1 p x p

1

11

x 21 x1 x 22 x 2 ... x 2 p x p

...

...

...

...

x n1 x1 x n 2 x 2 ... x np x p

Matrix of centered data

Vector of 1s

1 n

Sample covariance s jk xij x j xik xk

n i 1

Symmetric,

positive

semidefinite

s12

s

S 21

...

s p1

s12

s 22

...

sp2

...

...

...

...

s1 p

s2 p

...

s 2p

Measures how variables

j and k are related

n

1 t

t

1

X X

x

x

x

x

i

i

n

n

i 1

E x x x x t

19

1.3 Descriptors: Covariance

X x1

x2

X 1 N (0,1)

X 2 N (0,1)

X 3 N (0,1)

x 3 3 variables

13 E ( X 1 1 )( X 3 3 )

200 samples

0.9641

S 0.0678

0.0509

E X 1 X 3

0.0678

0.8552

0.0398

0.0509

0.0398

0.9316

Sample covariance

X 1 N (0,1)

X 2 X1

X 3 X1

X 1 N (0, 9)

X 2 X1

X 3 X1

0.9641

S 0.9641

0.9641

10.4146

S 10.4146

10.4146

1

0

0

0

0

1

0

1

0

Covariance

0.9641

0.9641

0.9641

0.9641

0.9641

0.9641

10.4146

10.4146

10.4146

10.4146

10.4146

10.4146

1

1

1

9

9

9

1

1

1

9

9

9

1

1

1

9

9

9

20

1.3 Descriptors: Covariance

X 1 N (1, 2)

X 2 N (2, 3)

X 3 X1 X 2

1.6338

S 0.0970

1.5368

0.0970

2.8298

2.7329

1 2

X1

X X 2 N 2 , 0

X

1 2

3

X 1 N ( 1 , 12 )

X 2 N ( 2 , 22 )

X 3 a1 X 1 a 2 X 2

1.5368

2.7329

4.2696

0

3

3

X N μ ,

0

22

a 2 22

1

2

0

3

3

2

3

5

2

3

5

2

1

X1

1

X X2 N

2

, 0

X

a a a 2

2 2 1 1

3

1 1

fX (x)

2

0

2

N

2

a1 12

a 2 22

a12 12 a 22 22

1

exp ( x μ ) t 1 ( x μ )

2

21

1.3 Descriptors: Covariance

X N μ ,

fX (x)

1

2

N

2

1

exp ( x μ ) t 1 ( x μ )

2

4

0

μ

0

1

0

0.2

2

0

1

X1

0.1

0

0

4

-2

2

0

-2

-4 -4

-2

0

2

4

X2

-4

-4

-2

0

2

4

X 1 and X 2 are independent

For multivariate Gaussians, covariance=0 implies independency

22

1.3 Descriptors: Covariance

X N μ ,

1

2

N

2

1

exp ( x μ ) t 1 ( x μ )

2

4

1

μ

0

1

0

f X (x)

0.2

2

0

3

0.1

0

0

4

-2

2

0

-2

-4 -4

-2

0

2

4

-4

-4

-2

0

2

4

X 1 and X 2 are independent

23

1.3 Descriptors: Covariance

X N μ ,

f X (x)

1

2

N

2

1

exp ( x μ ) t 1 ( x μ )

2

4

0.2

2

μ0

1

R

0

X1

0.1

0 t

R

3

0

0

4

-2

2

cos 60º

R

sin 60º

2.5

0.866

0.866

1.5

sin 60º

cos 60º

0

-2

-4 -4

-2

0

2

4

X2

-4

-4

-2

0

2

4

X 1 and X 2 are NOT independent

BUT there exist two independent variables

24

1.3 Descriptors: Covariance

Pitfalls of the covariance matrix

4

3

X 1 N (0,1)

X

X2 1

X 1

2

p 0.5

1 p 0.5

1

0

-1

-2

1

0

0

1

X 1 N (0,1)

X 2 X 12

1

0

0

2

-3

-4

-4

-3

-2

-1

0

1

2

3

4

C ov ( X 1 , X 2 ) 0 U ncorrelated Independent

C ov ( X 1 , X 2 ) 0 G aussian Independent

25

1.3 Descriptors: Covariance

Redundant variables

X 3 N (0,1)

1

0

0

X 1 N (0,1)

X 2 X1

X 3 X1

1

1

1

1

1

1

R

0

0 t

R

3

X 1 N (0,1)

X 2 N (0,1)

X 1 N (1, 2)

X 2 N (2, 3)

X 3 X1 X 2

2

0

2

0

0

1

0

1

0

1

0

3

3

eig () (1,1,1)

1

1

1

2

3

5

eig () (1, 0, 0)

eig () (3,1)

eig () (7.64, 2.35, 0)

26

1.4 Variability and distance

x t2

t

x10

How far are they?

How far are they from the mean?

d (xi , x j )

n

1-norm (Manhattan)

d (xi , x j ) xis x js

s 1

n

Most used

p-norm (Euclidean p=2)

Minkowski

Infinity norm

p

d (xi , x j ) xis x js

s 1

d (xi , x j ) max xis x js

1

p

s

27

1.4 Variability and distance

Height (m) Weight (kg)

Juan

John

Jean

d (xi , x j )

Juan

1.80

1.70

1.65

Juan

Height (cm) Weight (kg)

80

72

81

John

Juan

John

Jean

d (xi , x j )

Jean

----- 8.0004 1.0112

Juan

Mahalanobis distance

80

72

81

Juan

John

Jean

----- 11.3137 15.0333

M x x

d (x , x ) x x I x x x x x x

d (x , x ) x x x x

d (x , x ) k x x

t

Matrix-based distance d 2 ( xi , x j ) xi x j

Euclidean distance

180

170

165

1

i

t

2

i

j

i

t

i

j

t

1

j

2

i

j

i

j

i

j

i

j

i

j

1

j

N

Correntropy distance

i

j

k 1

ik

jk

28

1.4 Variability and distance

Mahalanobis distance d 2 ( xi , x j ) xi x j

2

height

r height weight

x x

t

1

i

j

r height weight 100 70

2

weight 70 100

height 10cm

weight 10kg

4

r 0.7

0

0

-2

-2

0

2

4

Juan

John

Jean

----- 0.7529 4.8431

4

2

-2

Juan

Independently of units!!

2

-4

-4

d (xi , x j )

-4

-4

-2

0

2

4

29

1.5 Linear dependence: Pair dependence

X 1 N ( 0 , 1)

X 2 X1

X

3

X1

X 1 N (0, 9)

X 2 X1

X

3

X1

0 .9 6 4 1

S 0 .9 6 4 1

0 .9 6 4 1

1 0 .4 1 4 6

S 1 0 .4 1 4 6

1 0 .4 1 4 6

0 .9 6 4 1

0 .9 6 4 1

0 .9 6 4 1

0 .9 6 4 1

0 .9 6 4 1

0 .9 6 4 1

1 0 .4 1 4 6

1 0 .4 1 4 6

1 0 .4 1 4 6

1 0 .4 1 4 6

1 0 .4 1 4 6

1 0 .4 1 4 6

1 r jk 1

s

s 21

S

...

s p1

2

1

s12

s 22

...

sp2

...

...

...

...

s1 p

s2 p

...

2

sp

1

s 21

R s 2 s1

...

s

s ps1

p1

s12

s1 s 2

...

1

...

...

...

sp2

s p s2

...

r jk

s jk

s j sk

s2 p

1

1

s2 s p

D 2 SD 2

...

s12 0 ...

0 s 22 ...

1

D

s1 p

s1 s p

...

0

...

0

...

...

r jk 1 x j a bx k

r jk Is invariant to linear

transformations of the

variables

0

0

...

s 2p

30

1.5 Linear dependence: Pair dependence

count =

Example:

11

7

14

11

43

11 9

13 11

17 20

13 9

51 69

Traffic count in three

different places

(thousands/day)

…

3

1

2

covariance=

643 980 1656

980 1714 2690

1656 2690 4627

More

traffic?

correlation=

1.0000 0.9331 0.9599

0.9331 1.0000 0.9553

0.9599 0.9553 1.0000

31

1.5 Linear dependence: Multiple dependence

ˆ

X 1 2 X 2 3 X 3 ... p X p βX

2 ,3,..., p

X̂ X Xˆ

1

1

1

β S 2,3,...,

p S1

1

n

Multiple

correlation

coefficient

2

R1.2

,3,... p

2

ˆ

(

)

x

x

1i 1

i 1

n

2

(

)

x

x

1i 1

Variance of X 1

explained by a linear

prediction

Total variance of X 1

s12

s 21

S

...

s p1

s12

s 22

...

sp2

...

...

...

...

s1 p

s2 p

...

s 2p

i 1

32

1.5 Linear dependence: Pair dependence

Example:

R1.3 0.9599

As seen before

R1.2 ,3 0.9615

Does X2 provide useful information

on X1 once the influence of X3 is

removed?

ˆ

ˆ

X 1 13 X 3 Y1 X 1 X 1

3

1

2

100

40

ˆ

ˆ

X 2 23 X 3 Y2 X 2 X 2

20

RY1 .Y2 0.1943

Y2

X2

50

0

-50

-50

0

p value 0.363

-20

0

50

X1

100

-40

-20

No!

-10

0

Y1

10

20

33

2. Data examination: Get acquainted with your data

34

2.1 Graphical examination

• Univariate distribution plots

• Bivariate distribution plots

• Pairwise plots

– Scatter plots

– Boxplots

• Multivariate plots

– Chernoff faces

– Star plots

35

2.1 Graphical examination: Univariate distribution

36

2.1 Graphical examination: Bivariate distributions

37

2.1 Graphical examination: Scatter plots

Coloured

by class

38

2.1 Graphical examination: Boxplots (Box-Whiskers)

Group 2 has substantially more dispersion

than the other groups.

Outlier = #13

11

75% Quartile

10

13

9

1.5IQR or min

8

X6 - Product Quality

1.5IQR or max

Inter Quantile Range (IQR)

25% Quartile

7

6

Median

5

4

N=

32

35

33

Less than 1 year

1 to 5 years

Over 5 years

X1 - Customer Type

39

2.1 Graphical examination: Multivariate plots

X 11

X1

X2

X 10

X3

X9

X4

X8

X5

X7

X6

Chernoff Faces

40

2.1 Graphical examination: Multivariate plots

X1

X2

X8

X7

X3

X6

X4

X5

Star plots

41

2.2 Missing data

Types of missing data:

• Missing Completely At Random (MCAR)

• Missing at Random (MAR)

Strategies for handling missing data:

• use observations with complete data only

• delete case(s) and/or variable(s)

• estimate missing values (imputation):

+ All-available

+ Mean substitution

+ Cold/Hot deck

+ Regression (preferred for MCAR): Linear, Tree

+ Expectation-Maximization (preferred for MAR)

+ Multiple imputation (Markov Chain Monte Carlo, Bayesian)

42

2.3 Multivariate outliers

Model: Centroid+noise

Univariate detection

xi median( x)

2.5

2

MAD( x)

1.5

4.5

1

Grubb’s statistic (assumes normality)

0.5

0

-0.5

max

-1

-1.5

xi x

sx

( N 1)

N

t 2

N

, N 2

N 2 t2

, N 2

N

-2

-2.5

-2.5

-2

-1.5

-1

-0.5

0

Multivariate detection

0.5

1

1.5

2

2.5

Number of

variables

Critical value of Student’s t

distribution with N-2 degrees of

freedom and a significance level

d 2 (xi , x ) (xi x )t S 1 (xi x ) p 3 2 p

43

2.3 Multivariate outliers

Model: Function+noise

1. For each dimension

a. Fit a model to data by regression

xˆ1 f ( x2 , x3 ,..., x p )

2.

b.

Label all those points with large residuals as

outliers

xˆ1i x1i ( p, N , )

c.

Go to step a until convergence

For each pair of dimensions

a. Fit a model to data by regression

( xˆ1 , xˆ2 ) f ( x3 ,..., x p )

b.

Label all those points with large residuals as

outliers

dist (( xˆ1i , xˆ2i ), ( x1i , x2i )) ( p, N , )

c.

Go to step a until convergence

The threshold is a function of the

number of variables, the number of

samples, and the significance level

44

2.4 Assumptions of multivariate analysis

• Normality: the multivariate variable follows a multivariate Gaussian

– Univariate variables, too

– Tests: Shapiro-Wilks (1D), Kolmogorov-Smirnov(1D), Smith-Jain (nD)

• Homoscedasticity (Homoskedasticity): the variance of the dependent

variables is the same across the range of predictor variables

– Tests: Levene, Breusch-Pagan, White

6

4

2

0

-2

-4

-6

-3

-2

-1

0

1

2

3

45

2.4 Assumptions of multivariate analysis

•

Linearity: All techniques based on correlation (multiple regression, logistic

regression, factor analysis, structure equation modelling, principal

component analysis, etc.) assume that the dependent variables depend

linearly on the independent ones.

– Test: Scatterplots

• Non-correlated errors: All prediction techniques assume that the prediction

residual is independent of the predictors. This may not be true all over the

predictor interval.

2

1.5

1

0.5

0

-0.5

-1

-1.5

-2

-3

-2

-1

0

1

2

3

46

2.4 Assumptions of multivariate analysis

•

The solution to most assumption violations is provided by data

transformations.

Y X

Y log X

Y

Table of sample pdfs and suggested transformation

Multivariate standardization

1

Y ( X 1Xt ) S X 2

1

X

47

Course outline: Session 1

1. Introduction

1.1. Types of variables

1.2. Types of analysis and technique selection

1.3. Descriptors (mean, covariance matrix)

1.4. Variability and distance

1.5. Linear dependence

2. Data Examination

2.1. Graphical examination

2.2. Missing Data

2.3. Outliers

2.4. Assumptions of multivariate analysis

48

Multivariate Data Analysis

Session 2: Principal Component Analysis and

Factor Analysis

Carlos Óscar Sánchez Sorzano, Ph.D.

Madrid

Course outline: Session 2

3. Principal component analysis (PCA)

3.1. Introduction

3.2. Component computation

3.3. Example

3.4. Properties

3.5. Extensions

3.6. Relationship to SVD

4. Factor Analysis (FA)

4.1. Introduction

4.2. Factor computation

4.3. Example

4.4. Extensions

4.5. Rules of thumb

4.6. Comparison with PCA

2

3.1 PCA: Introduction

Can we capture the information provided by the

original p variables with a fewer number of variables?

xt

3

3.1 PCA: Introduction

4

10

3

8

6

1

4

0

Y

Z

2

-1

2

-2

0

-3

-2

2

0

Y

-2

-4

-2

0

X

2

-4

-4

4

10

10

8

8

6

6

4

4

Z

Z

4

2

2

0

0

-2

-4

-3

-2

-1

0

Y

1

2

3

4

-2

-4

-3

-2

-1

0

X

1

2

3

4

-3

-2

-1

0

X

1

2

3

4

4

3.1 PCA: Introduction

3

10

2

8

1

Z

6

0

4

Principal axes

2

0

-2

4

-1

-2

5

2

0

0

-2

Y

-4

-5

X

-3

-3

-2

-1

0

X

1

2

3

5

3.1 PCA: Introduction

zi

Proja1 xi xi , a1 a1 zi a1

3

xi zi a1 ri

2

a1 a11 , a12 ,..., a1 p

Y

1

0

-1

xi

ri

-2

-3

-3

-2

Factor loadings

0

X

1

2

2

zi a1 ri

n

a arg min ri

*

1

a1

2

i 1

n

2

2

zi2 ri

2

n

arg min xi zi 2

a1

i 1

2

arg max zi 2 arg max Var Z

a1

Proja1 xi zi

-1

xi

i 1

a1

3

6

3.2 PCA: Component computation

Computation of the first component

z1 x1 , a1 x1t a1

z1 x1t

t

z2 x 2 a

... ... 1

t

zn x n

z2 x 2 , a1 xt2a1

…

zn x n , a1 xtna1

Z Sample mean:

Z

Sample variance:

a1* arg max sz2

a1

s.t.

Data matrix

z Xa1

Sample

covariance

matrix

x 0 z xa1 0

1 t

1 t t

s z z a1 X Xa1 a1t S x a1

n

n

2

z

Largest eigenvalue

arg max a1t S xa1 a1t a1 1

2

a1 1

a1

F

F

0 S xa1* a1*

a1

a1*t S xa1*

7

3.2 PCA: Component computation

arg max a1t S xa1 at2 S x a 2 1 a1t a1 1 2 at2a 2 1

a1* , a*2 arg max sz21 sz22

a1 ,a 2

2

a1 ,a2

s.t. a1 1

2

F

a 1

2

S xa1* 1a1*

10

8

Z

a

a*3

6

S xa*2 2a*2

*

1

4

Largest two eigenvalues

a*2

2

1 a1*t S xa1*

2 a*2t S xa*2

0

-2

4

5

2

0

0

-2

Y

-4

-5

X

*

In general, a i are the eigenvectors sorted by eigenvalue descending order

8

3.2 PCA: Component computation

xt

z1 Xa1*

z 2 Xa*2

z p Xa*p

…

Largest variance

of all z variables

Smallest variance

of all z variables

Z XA*

New data matrix (size=nxp)

Matrix with the projection directions as columns

Data matrix (size=nxp)

9

3.3 PCA: Example

Nine different indices of the quality of life in 329 U.S. cities. These are climate, housing, health,

crime, transportation, education, arts, recreation, and economics. For each index, higher is better

economics

recreation

arts

education

transportation

crime

health

housing

climate

0

1

2

3

Values

4

5

4

x 10

10

3.3 PCA: Example

Factor loadings

a1*

climate

housing

health

crime

transportation

education

arts

recreation

economics

a*2

0.2064

0.3565

0.4602

0.2813

0.3512

0.2753

0.4631

0.3279

0.1354

All positive, i.e.,

weighted average

0.2178

0.2506

-0.2995

0.3553

-0.1796

-0.4834

-0.1948

0.3845

0.4713

a*3

-0.6900

-0.2082

-0.0073

0.1851

0.1464

0.2297

-0.0265

-0.0509

0.6073

Difference between

(economics, recreation,

crime, housing, climate)

vs (education, health)

Difference between (economics,

education) vs (housing, climate)

11

3.3 PCA: Example

4

Low (economics,

recreation, crime, housing,

climate) and High

3

(education, health)

High (economics, recreation,

crime, housing, climate) and

Low (education, health)

Low (economics, education)

and High (housing climate)

2

3rd Principal Component

San Francisco, CA

1

Los Angeles, Long Beach, CA

0

-1

Outliers?

Boston, MA

New York, NY

-2

Washington, DC-MD-VA

-3

-4

-4

High (economics, education)

and Low (housing climate)

Chicago, IL

-2

0

2

4

6

2nd Principal Component

8

10

12

14

12

3.3 PCA: Example

0.5

a

climate

housing

health

crime

transportation

education

arts

recreation

economics

*

2

0.2178

0.2506

-0.2995

0.3553

-0.1796

-0.4834

-0.1948

0.3845

0.4713

recreation

crime

0.4

a

0.3

climate

0.2

Component 2

*

1

0.2064

0.3565

0.4602

0.2813

0.3512

0.2753

0.4631

0.3279

0.1354

economics

housing

0.1

0

-0.1

transportation

arts

-0.2

health

-0.3

-0.4

education

-0.5

-0.1

0

0.1

0.2

0.3

0.4

Component 1

0.5

0.6

13

3.4 PCA: Properties

% of variance explained

40

tr S x sx21 sx22 ... sx2p

2

zp

20

1 2 ... p

10

s s ... s

2

z1

2

z2

100

30

1

p

i

i 1

0

1

Total variance

2

3

4

5

6

Eigenvalue number

7

8

9

Cumulative % of variance explained

100

l

100

80

j 1

j

p

i 1

60

i

40

20

1

2

3

4

5

6

Eigenvalue number

7

8

9

14

3.4 PCA: Properties

Data restoration

p

x zi a*i

i 1

PCA

z1

z2

...

15

3.4 PCA: Properties

Data compression and denoising

p'

x zi a*i

i 1

% of variance explained

40

30

20

PCA

10

0

1

2

3

z1

4

5

6

Eigenvalue number

7

z2

8

9

...

16

3.4 PCA: Properties

Criteria:

• Explained variance of a single eigenvalue below a threshold

• Total accounted variance above a threshold

% of variance explained

40

30

20

10

0

1

2

3

4

5

6

Eigenvalue number

7

8

9

Cumulative % of variance explained

100

80

60

40

20

1

2

3

4

5

6

Eigenvalue number

7

8

9

17

3.5 PCA: Extensions

•

•

•

•

•

•

•

•

•

•

•

•

•

Use the correlation matrix instead of the covariance matrix (in this way the

influence of a variable with a extreme variance is avoided).

PCA of binary data: PCA is not well suited to non real data

Sparse PCA: Produce sparse factor loadings

Noisy PCA or Robust PCA: Input vectors contaminated by additive noise

Incremental PCA: Recompute easily the PCA as new data comes in

Probabilistic PCA: Consider the probability distribution of the input data

vectors

Assymetric PCA: Consider the unbalanced distribution of the samples

Generalized PCA: Use nonlinear projections

Kernel PCA: Use any kernel instead of the covariance matrix

Principal curves analysis: Use projections onto any curve instead of a line

Projection pursuit: Find interesting (non-Gaussian) projection directions

Correlational PCA: based on Correlational Embedding Analysis

Localized PCA: PCA of local neighbourhoods

18

3.6 Relationship to SVD

…

X

=

=

=

W

D

ଵ

ே

D

௧

௧

ଵ

ே

19

3.6 Relationship to SVD

…

ଵ ଶ

ே

ଵ ଶ

ଵ

ଶ

ଵ

ଶ

ଵ

ଶ

…

ଵ ଶ

ଵ ଶ

ଵ

ଶ

…

ே

…

≈

ெ

ெ

X

≈

W’

D’

௧

(SVD)

௧

(PCA)

m

X

≈

A*

X

N

m

20

Course outline: Session 2

3. Principal component analysis (PCA)

3.1. Introduction

3.2. Component computation

3.3. Example

3.4. Properties

3.5. Extensions

3.6. Relationship to SVD

4. Factor Analysis (FA)

4.1. Introduction

4.2. Factor computation

4.3. Example

4.4. Extensions

4.5. Rules of thumb

4.6. Comparison with PCA

21

4.1 FA: Introduction

X1

X2

X1 ,..., X 13 f gene1 , gene2 , food

X3

X4

X5

X8

Observable

variables

Non-observable

(latent) variables

X6

X9

X7

X 10

X 11

X 12

X μ X f ε

Average

Load

matrix

Noise

X 13

22

4.1 FA: Introduction

X μ X f ε

N p (μ X , X )

N m (0, I ) N p (0, )

m

12

22

X 1 1 11

X

p 2 2 21

... ... ...

...

X

13 13 13,1 13,2

Properties:

• Factors are uncorrelated/independent

• Factors and noise are uncorrelated

• The load matrix is the covariance between the

observed variables and the factors

• The variance of the observed variables can be

explained by the loading matrix and the variance of

the noise

13

23

F1

p F2

...

F

3

13,3

E εF 0

E ( X μ

1

m 2

...

13

E FF t I

t

X

)F t

Commonality

X

t

m

X2 ij2 2 hi2 2

i

j 1

i

i

23

p

4.1 FA: Introduction

X μ X f ε

Properties:

• The load matrix and factors are not uniquely

specified: any rotation of the factors can be

compensated by the load matrix

X μ X f ε μ X (H t )( Hf ) ε

Any orthogonal matrix

Solution:

t

1.

Impose that is a diagonal matrix: Principal factor method

2.

Impose that t 1 is a diagonal matrix: Maximum-Likelihood method

This matrix provides the possibility of rotating the components so that

we achieve a certain property (having the maximum number of zeros, …)

that helps us to understand the factors. There are several criteria offered

by the programs: varimax, equamax, parsimax, quartimax, orthomax, …

24

4.1 FA: Introduction

10

8

2

p

p

4

2

min bij bij

bij

p i 1

f 1 i 1

b

2

ij

a

6

Z

m

a1*

*

3

4

a*2

2

ij2

m

ik2

k 1

0

-2

4

5

2

0

0

-2

-4

-5

X

Y

Orthomax

Orthogonal rotation that maximizes a criterion based on the variance of the

loadings.

Parsimax

Special case of the orthomax rotation

Quartimax

Minimizes the number of factors needed to explain a variable. Special case

of orthomax.

Varimax

Maximizes the variance of the squared loadings of each factor. Special case

of orthomax.

Equimax

Compromise between quatimax and varimax. Special case of orthomax.

Promax

Allows for oblique factors (they are less interpretable)

pp ( mm1)2

0

1

m

2

25

4.2 FA: Factor computation

Principal factor method

X t

Solve for

in S X ˆ t s.t. t is diagonal

Option a: ˆ 0

i

Option b: regression residual

2

This step is also known as commonality

estimation since once the variance of the noise

is known, the commonalities are also known

X2 hi2 2

i

i

s X2 i hi2 ˆ 2i

hi2 s X2 i ˆ2i

26

4.2 FA: Factor computation

Maximum Likelihood method

X N μ X , X

Likelihood of observing a single individual

fX (x)

1

2

X

N

2

1

exp ( x μ X ) t X1 ( x μ X

2

)

Likelihood of observing all individuals

fX ( X )

n

i 1

f X (xi )

Log-likelihood of observing all individuals

pn

n

1

LX ( X )

log 2 log X

2

2

2

n

(x

i 1

i

μ X ) t X1 ( x i μ X )

X t

27

4.2 FA: Factor computation

Maximum Likelihood method

LX ( X )

0

LX ( X )

0

1.

Estimate an initial guess for ̂

2.

Estimate ˆ with the current guess of ̂

3.

ˆ ˆt

ˆ S X

Estimate ̂ with the current guess of

Solve for ̂ in

ˆ

ˆ 12 ( S I )ˆ 12 ˆ 12

4.

ˆ .

ˆ

ˆ t ˆ 1

ˆ

ˆ 12

Return to Step 2 till convergence

28

4.3 FA: Example

120 students have each taken five exams, the first two covering mathematics, the next two on

literature, and a comprehensive fifth exam. It seems reasonable that the five grades for a

given student ought to be related. Some students are good at both subjects, some are good at

only one, etc. The goal of this analysis is to determine if there is quantitative evidence that the

students' grades on the five different exams are largely determined by only two types of

ability.

Loadings =

0.6289

0.6992

0.7785

0.7246

0.8963

NoiseVar =

0.3485

0.3287

-0.2069

-0.2070

-0.0473

A factor distinguishing

those good in mathematics

and bad in literature or

viceversa

An overall factor affecting to all exams (mainly the

comprehensive, then literature and finally mathematics).

This can be interpreted as a general intelligence factor.

0.4829

0.4031

0.3512

0.4321

0.1944

The comprehensive

exam is the easiest to

predict with these two

factors

Normalized to 1 (1=no variance

reduction obtained by

commonalities; 0=all variance is

explained by commonalities)

29

4.3 FA: Example

count =

Example:

11

7

14

11

43

11 9

13 11

17 20

13 9

51 69

Traffic count in three

different places

(thousands/day)

…

3

1

2

Loadings =

NoiseVar =

0.9683

0.9636

0.9913

0.0624

0.0714

0.0172

As expected X3 is highly related to the

main factor, and it mostly explains the

traffic in X1 and X2. The noise is

coming from the red arrows.

30

4.4 FA: Extensions

• Multiple factor analysis (MFA): Study common factors along

several datasets about the same individuals

• Hierarchical FA and MFA (HFA and HMFA), Higher order FA:

Apply FA to the z scores obtained after a first FA

• Nonlinear FA: Nonlinear relationship between factors and

observed variables.

• Mixture of Factor Analyzers: formulation of the problem as a

Gaussian mixture.

31

4.5 Rules of thumb

• At least 10 times as many subjects as you have variables.

• At least 6 variables per expected factor (if loadings are low, you

need more)

• At least 3 variables should correlate well with each factor

• Each factor should have at least 3 variables that load well.

• If a variable correlates well with several factors, you need more

subjects to provide significance.

• The size of commonalities is proportional to the number of

subjects.

32

4.6 Comparison with PCA

Principal Component Analysis:

Factor analysis:

•

Selection of number of factors a

posteriori

•

Selection of the number of

factors a priori

•

Linear model

•

Linear model

•

Rotation is possible at the end

•

Rotation is possible at the end

•

Output variables are orthogonal

•

•

Many extensions

Output variables may not be

orthogonal

•

Few extensions

•

Assumption of normality

33

Course outline: Session 2

3. Principal component analysis (PCA)

3.1. Introduction

3.2. Component computation

3.3. Example

3.4. Properties

3.5. Extensions

3.6. Relationship to SVD

4. Factor Analysis (FA)

4.1. Introduction

4.2. Factor computation

4.3. Example

4.4. Extensions

4.5. Rules of thumb

4.6. Comparison with PCA

34

Multivariate Data Analysis

Session 3: Multidimensional scaling,

correspondence analysis, tensor analysis

Carlos Óscar Sánchez Sorzano, Ph.D.

Madrid

Course outline: Session 3

5. Multidimensional Scaling (MDS)

5.1. Introduction

5.2. Metric scaling

5.3. Example

5.4. Nonmetric scaling

5.5. Extensions

6. Correspondence analysis

6.1. Introduction

6.2. Projection search

6.3. Example

6.4. Extensions

7. Tensor analysis

7.1 Introduction

7.2 Parafac/Candecomp

7.3 Example

7.4 Extensions

2

5.1 MDS: Introduction

Multidimensional Scaling

• Does not require Gaussianity

• Any distance among individuals

Factor Analysis

• Requires input Gaussianity

• Distance between individuals

through the covariance matrix

• Individuals are directly observed

x1 (...)

x 2 (...)

x3 (...)

• Individuals are not observed, but

their relative distances are

d (xi , x j ) x1 x 2 x3

x1 0 0.3 2

x 2 0.3 0 1.5

x3

2

1.5

0

It may not be a true (in the

mathematical sense) distance

measure but a dissimilarity

measure.

3

5.2 MDS: Metric scaling

Zero-mean

Data Matrix

X

Dissimilarity

(Euclidean distance)

Matrix

Similarity

Matrix

X

Data

matrix

t

Q XX

MDS=PCA

dij2 qii q jj 2qij

D

qij xti x j

xi x j qij

xi x j qij

Equal up to a

rotation

Q is positive semidefinite iff D has been

built with the Euclidean distance

1

1

1

Q I 11t D I 11t

n

n

2

Assuming Q is

positive semidefinite

1

Y PD 2

Q PDP PD

t

1

2

PD

1

2

t

4

t

5.3 MDS: Example

cities = {'Atl','Chi','Den','Hou','LA','Mia','NYC','SF','Sea','WDC'};

D = [

0 587 1212 701 1936 604 748 2139 2182 543;

587

0 920 940 1745 1188 713 1858 1737 597;

1212 920

0 879 831 1726 1631 949 1021 1494;

701 940 879

0 1374 968 1420 1645 1891 1220;

1936 1745 831 1374

0 2339 2451 347 959 2300;

604 1188 1726 968 2339

0 1092 2594 2734 923;

748 713 1631 1420 2451 1092

0 2571 2408 205;

2139 1858 949 1645 347 2594 2571

0 678 2442;

2182 1737 1021 1891 959 2734 2408 678

0 2329;

543 597 1494 1220 2300 923 205 2442 2329

0]

5

5.3 MDS: Example

6

5.4 MDS: Nonmetric scaling

(xi , x j ) x1 x 2 x3

d (xi , x j ) x1 x 2 x3

x1 0 0.3 2

x 2 0.3 0 1.5

x3

2

1.5

(xi , x j ) f d (xi , x j )

0

Nonlinear, monotonically

increasing and unknown

x1

x2

0.4

x3

1.8 1.2

STRESS Objective function

n

dˆij* arg min

dˆij

n

(

i 1 j i 1

n

n

ˆ )2

d

ij

ij

i 1 j i 1

Solution:

xˆ

( k 1)

i

xˆ

(k )

i

dˆij2

dˆij

1

n 1 j 1, j i ij

n

0

0.4 1.8

0

1.2

0

MDS

dˆ (xi , x j ) x1 x 2 x3

x1

x2

(k )

(xˆ j xˆ i( k ) )

x3

7

5.4 MDS: Nonmetric scaling

Example: Sort (aunt, brother, cousin, daughter, father, granddaughter, grandfather, grandmother,

grandson, mother, nephew, niece, sister, son and uncle) according to their “meaning”

8

5.5 MDS: Extensions

• Classical MDS: D is computed using Euclidean distance.

• Metric MDS: D is computed using a distance but may be not

Euclidean

• Nonmetric MDS: D is not a distance but provides the right ranks

• Replicated MDS: Several experts are consulted to build D

• Weighted MDS: It allows experts to apply different nonlinear

transformations.

• ISOMAP: Uses geodesic distance

• MDS by local patches: approximate data locally by small patches

and embed these patches in a low dimensional space.

9

Course outline: Session 3

5. Multidimensional Scaling (MDS)

5.1. Introduction

5.2. Metric scaling

5.3. Example

5.4. Nonmetric scaling

5.5. Extensions

6. Correspondence analysis

6.1. Introduction

6.2. Projection search

6.3. Example

6.4. Extensions

7. Tensor analysis

7.1 Introduction

7.2 Parafac/Candecomp

7.3 Example

7.4 Extensions

10

6.1 CA: Introduction

8-dimensional vector: which topics are closer?

12-dimensional vector: which

years are more similar?

F Frequency matrix summing up to 1

11

6.2 CA: Projection search

1.

We should consider the structure of the rows.

For instance, the following two rows should be

equivalent.

0.05 0.05

0.45 0.45

I

0.10 f i .

0.90 Row mass

For achieving this we devide by the row sum

J

0.50 0.50

0.50 0.50

In matrix form, we define a new frequency

matrix

1

R Drow

F

Where Drow is a diagonal matrix with the

row sums.

0.1 0

Drow

0 0.9

12

6.2 CA: Projection search

2.

I

A change in probability from 0.6001 to 0.6101

is not that much as a change from 0.0001 to

0.0101, i.e., we have to weight (divide)

attributes by the relative frequency of the

attribute.

0.6001 0.0001 0.3998

0.6101 0.0101 0.3798

1.2101 0.0102 0.7796

J

After division we have

f. j

Column mass

0.4959 0.0098 0.5128

0.5041 0.9902 0.4872

Dcol

0

0

1.2101

0

0.0102

0

0

0

0.7796

We will later use the diagonal matrix Dcol

whose values are the column sums.

13

6.2 CA: Projection search

Distance between two rows

d 2 (ra , rb ) ra rb D

1

col

t

2

d

2

Euclidean

ra rb d

2

Euclidean

12

col a

12

col b

(D r , D r )

2

12 f a

f aj fbj 1

12 fb

, Dcol

Dcol

f

f

f

f

j 1 a .

a.

b.

b . f. j

J

We may transform directly the matrix F into some other matrix

whose rows are the ones needed for the Eucliden distance

F

1

row

12

col

Y D FD

2

d 22 (ra , rb ) d Euclidean

(y a , y b )

yij

fij

1

f i . f. j 2

14

6.2 CA: Projection search

Search of a projection direction

PCA

Y

I

a arg max y i , a1

*

1

a1

2

i 1

arg max a1tY tYa1

a1

Equal weight to all rows. These are

directions of maximum inertia

(instead of maximum variance)

Data matrix

I

a arg max fi. y i , a1

CA

*

1

3

a1

i 1

2

arg max a1tY t DrowYa1

a1

2

Y

1

a1 a11 , a12 ,..., a1J

0

Factor loadings

yi

-1

yi , a1

-2

-3

-3

-2

-1

0

X

1

2

This analysis can also be

done by columns, instead of

rows

3

15

6.3 CA: Example

United States population

16

6.4 CA: Extensions

• Multiple CA: Extension to more than two variables.

• Joint CA: Another extension to more than two variables.

• Detrended CA: Postprocessed CA to remove the “arch” effect

17

Course outline: Session 3

5. Multidimensional Scaling (MDS)

5.1. Introduction

5.2. Metric scaling

5.3. Example

5.4. Nonmetric scaling

5.5. Extensions

6. Correspondence analysis

6.1. Introduction

6.2. Projection search

6.3. Example

6.4. Extensions

7. Tensor analysis

7.1 Introduction

7.2 Parafac/Candecomp

7.3 Example

7.4 Extensions

18

7.1 Tensor analysis: Introduction

Examples:

• Scores of n subjects, at m tests, at p time points.

• Scores of n air quality indicators on m time points at p locations.

• Scores of n judges on m quality indicators for p food products.

Score=f(food, judge, indicator)

3-way tensor

food

indicator

judge

19

7.1 Tensor analysis: Introduction

Traditional analysis

• Unfolding

food

indicator

judge

food

…

indicator indicator

1

2

judge

judge

judge

judge

• PCA Analysis

20

7.2 Tensor analysis: PARAFAC

SVD (PCA) revisited

m

X USV t s1u1 v1t s2u 2 v t2 ... smu m v tm sk u k v k

k 1

M

M

1

1

Outer product

v2

v1

s2

s1

u1

u v ij ui v j

...

u2

21

7.2 Tensor analysis: PARAFAC

PARAFAC

m

X sk u k v k w k

k 1

M

uk M , vk N , w k P

u v w ijk ui v j wk

Outer product

w1

w2

v1

v2

s2

s1

u1

...

u2

22

7.2 Tensor analysis: PARAFAC

SVD(PCA) vs PARAFAC

• PARAFAC cannot be found analytically.

• PARAFAC has to be found numerically (Alternating Least

Squares).

• The best rank-m approximation may not exist.

• The m components are not ordered.

• The best rank-(m-1) approximation is not a subset of the

rank-m solution.

• PARAFAC does not maximize the explained variance.

• PARAFAC minimizes the approximation error.

• PARAFAC solution is unique (PCA is not, rotations).

• PARAFAC factorization may be real or complex-valued.

23

7.2 Tensor analysis: PARAFAC

Preprocessing for SVD (PCA)

X USV t

M

M individuals, N variables

Center and standardize columns of X

xij

xij x j

j

Why center? Scores are normally relative (origin is arbitrary).

Why normalize? Assures equal influence of each variable.

Preprocessing for PARAFAC

More possibilities, depending on interest

xijk

xijk x jk

jk

xijk

xijk x k

k

…

24

7.2 Tensor analysis: PARAFAC

How to choose the number of components

m

X sk u k v k w k

k 1

X Xˆ E

R

2

X

2

F

E

X

2

F

2

F

m

1

2

3

4

5

R2

0.102

0.164

0.187

0.189

0.191

Chosen m

25

7.3 Tensor analysis: Example

5 different kinds of breads are judged by 8 judges on 11 attributes.

There are 2 replicates (→10 breads).

2

X sk a k b k c k

k 1

M

judges

breads

c1

c2

b1

b2

=8

10

s2

s1

=11

attributes

a1

a2

26

7.3 Tensor analysis: Example

27

7.3 Tensor analysis: Example

28

7.3 Tensor analysis: Example

29

7.4 Tensor analysis: Extensions

PARAFAC

m

X sk u k v k w k

k 1

uk M , vk N , w k P

Tucker model

m1

m2

m3

X sk1k2 k3 u k1 v k2 w k3

k1 1 k2 1 k3 1

Principal Tensor Analysis (Multiway, multivariate analysis)

General name for this kind of algorithms to analyze tensors.

30

7.4 Tensor analysis: Extensions

Difference with ANOVA:

In ANOVA, interactions are modeled as ijk while in PARAFAC,

they are modeled as ai b j ck . ANOVA allows more general

interaction models, but if the multiplicative model is true, then

PARAFAC can be better interpreted.

31

Course outline: Session 3

5. Multidimensional Scaling (MDS)

5.1. Introduction

5.2. Metric scaling

5.3. Example

5.4. Nonmetric scaling

5.5. Extensions

6. Correspondence analysis

6.1. Introduction

6.2. Projection search

6.3. Example

6.4. Extensions

7. Tensor analysis

7.1 Introduction

7.2 Parafac/Candecomp

7.3 Example

7.4 Extensions

32

Multivariate Data Analysis

Session 4: MANOVA, Canonical Correlation,

Latent Class Analysis

Carlos Óscar Sánchez Sorzano, Ph.D.

Madrid

Course outline: Session 4

8. Multivariate Analysis of Variance (MANOVA)

8.1. Introduction

8.2. Computations (1-way)

8.3. Computations (2-way)

8.4. Post-hoc tests

8.5. Example

9. Canonical Correlation Analysis (CCA)

9.1. Introduction

9.2. Construction of the canonical variables

9.3. Example

9.4. Extensions

10. Latent Class Analysis (LCA)

10.1. Introduction

2

8.1 MANOVA: Introduction

Several metric variables are predicted by several categorical variables.

Example:

(Ability in Math, Ability in Physics)=f(Math textbook, Physics textbook, College)

1.

2.

3.

4.

What are the main effects of the

independent variables?

What are the interactions among

the independent variables?

What is the importance of the

dependent variables?

What is the strength of association

between dependent variables?

Math Text A

Math Text B

(7,7) (4,5)

College A

(9,9) (7,9)

(10,6) (6,7)

(10,10) (9,9)

Physics Text A

(3,1) (5,5)

(6,7) (8,7)

College B

(5,5) (5,5)

(8,8) (9,8)

Physics Text B

(2,8) (9,10)

(9,6) (5,4)

College A

(10,10) (6,9)

(1,3) (8,8)

Physics Text B

(10,8) (7,5)

(6,6) (7,7)

College B

(5,5) (6,5)

(8,3) (9,7)

Physics Text A

3

8.1 MANOVA: Introduction

1-way

Math Text A

(9,9) (7,9)

(10,6) (6,7)

3-way

Math Text B

(10,10) (9,9)

Math Text A

Physics Text A

(9,9) (7,9)

(10,6) (6,7)

Math Text B

(7,7) (4,5)

College A

(9,9) (7,9)

(10,6) (6,7)

(10,10) (9,9)

Physics Text A

(3,1) (5,5)

(6,7) (8,7)

College B

(5,5) (5,5)

(8,8) (9,8)

Physics Text B

(2,8) (9,10)

(9,6) (5,4)

College A

(10,10) (6,9)

(1,3) (8,8)

Physics Text B

(10,8) (7,5)

(6,6) (7,7)

College B

(5,5) (6,5)

(8,3) (9,7)

(7,7) (4,5)

2-way

Physics Text A

Math Text A

Math Text B

(7,7) (4,5)

Physics Text A

(10,10) (9,9)

(3,1) (5,5)

(6,7) (8,7)

(5,5) (5,5)

(8,8) (9,8)

4

8.1 MANOVA: Introduction

Analysis technique selection guide

Number of Dependent Variables

Number of Groups in

One

Two or More

Independent Variable

(Univariate)

(Multivariate)

Two Groups

Student’s t-test

Hotelling’s T2 test

Two or More Groups

Analysis of Variance

(Generalized Case)

(ANOVA)

Multivariate Analysis of

Variance

(Specialized Case)

(MANOVA)

5

8.1 MANOVA: Introduction

Why not multiple ANOVAs?

1. Independent ANOVAs cannot “see” covariation

patterns among dependent variables.

2. MANOVA may identify small differences while

independent ANOVAs may not

3. MANOVA is sensitive to mean differences, the

direction and size of correlations among

dependents.

4. Running multiple ANOVAs results in increasing

Type I errors (multiple testing)

6

8.1 MANOVA: Introduction

Assumptions:

• Normality: Data is assumed to be normally distributed. However,

MANOVA is rather robust to non-Gaussian distributions (particularly

so if cell size>20 or 30). Outliers should be removed before applying

MANOVA.

• Homogeneity of covariances: Dependent variables have the same

covariance matrix within each cell. MANOVA is relatively robust if the

number of samples per cell is relatively the same.

• Sample size per group is a critical issue (design of experiments):

must be larger than the number of dependent variables,

approximately equal number of samples per cell. Large samples

make MANOVA more robust to violations.

7

8.1 MANOVA: Introduction

Assumptions:

• Linearity: MANOVA assumes linear relationships among all pairs of

dependent variables, all pairs of covariates, and all pairs of

dependent variable-covariate within a cell.

– MANOVA works best if depedent variables are moderately

correlated.

– If the variables are not correlated, use independent ANOVAs– If the variables are too correlated (>0.8), the covariance matrix

becomes nearly singular and calculations are ill-conditioned.

(Remove collinearity by PCA or similar; then run independent

ANOVAs)

8

8.2 MANOVA: Computations (1-way)

Measurements

Cell 1 from

Cell 2 from

Cell k from

N p (μ1 , )

N p (μ 2 , )

N p (μ k , )

x11

x12

x 21

x 22

x k1

xk 2

x1n

x 2n

x kn

…

…

…

Measurement model

xij μ α i ε ij μ i ε ij

k

α

i

i1

Cell mean

Total mean

1 n

xi. xij

n j 1

1 k n

x.. xij

kn i 1 j 1

What are the main effects of the

independent variables?

αˆ i xi. x..

MANOVA Hypothesis Testing

H 0 : μ1 μ 2 ... μ k

H1 : At least two means are different

9

0

8.2 MANOVA: Computations (1-way)

There are three other ways of

testing the MANOVA hypothesis:

• Pillai’s test

• Lawley and Hotelling’s test

• Roy’s greatest root

MANOVA Hypothesis Testing

H 0 : μ1 μ 2 ... μ k

H1 : At least two means are different

S H E

Total

covariance

Within cell

covariance

Among

cells

covariance

We reject

H 0 if

E

S

p , , H , E

t

1 k n

S y ij y.. y ij y..

kn i j 1

1 k

t

H y i. y.. y i. y..

k i

t

1 k n

E y ij y i. y ij y i.

kn i j 1

H k 1

Wilks’ lambda

E

E

nk

k

E k ( n 1)

10

8.2 MANOVA: Computations (1-way)

11

8.2 MANOVA: Computations (1-way)

Are there dependent variables that are

not affected by the independent

variables?

If H 0 : μ1 μ 2 ... μ k is rejected,

ANOVA tests can be performed on each

component individually

What is the strength of association

between dependent variables and

independent variables?

What is the relationship among the cell

averages?

μ1 μ 3

Example: μ 2

R2 1

2

H 0 : μ1 2μ 2 μ 3 0

H1 : μ1 2μ 2 μ 3 0

H 0 : c1μ1 c2μ 2 ... ck μ k 0

n k

k

'

y

y

H k

c

c

i i. i i.

2 i 1

i 1

c

i

t

i 1

We reject

H 0 if

E

EH

'

p , ,1, E

12

8.3 MANOVA: Computations (2-way)

Measurements

A=1

A=a

Cell averages

A=1

A=a

B=1

B=2

B=b

x111

x121

x1b1

x11n

x12 n

x1bn

…

…

…

x a11

x a 21

x ab1

x a1n

xa 2n

x abn

B=1

B=2

B=b

x11.

x12.

x1b.

…

…

…

xa1.

xa 2.

xab.

xa..

x.1.

x.2.

x.b.

x...

…

…

…

…

…

…

Measurement model

xij μ α i βi γ ij ε ijk

Independent Interaction

effects

a

b

a

α β γ

i 1

i

j 1

j

i 1

b

ij

γ ij 0

j 1

x1..

13

8.3 MANOVA: Computations (2-way)

Cell averages

A=1

A=a

B=1

B=2

B=b

x11.

x12.

x1b.

…

…

…

xa1.

xa 2.

xab.

xa..

x.1.

x.2.

x.b.

x...

What are the main effects of the

independent variables?

What are the interactions among

independent variables?

x1..

αˆ i xi.. x... βˆ j x. j . x...

γˆ ij xij . ( x... αˆ i βˆ j )

14

8.3 MANOVA: Computations (2-way)

T H A H B H AB E

Total

variation

Noise

variation

Variation Variation Variation

due to A due to B due to

interactions

15

8.3 MANOVA: Computations (2-way)

H 0 : α1 α 2 ... α a

H 0 : β1 β 2 ... βb

H 0 : γ11 γ12 ... γ ab

What is the strength of association

between dependent variables and

independent variables?

What is the relationship among the cell

averages?

R2 1

H 0 : c1..μ1.. c2..μ 2.. ... ca μ a.. 0

16

8.3 MANOVA: Other computations

Considers differences over all the characteristic roots:

•

Wilks' lambda: most commonly used statistic for overall significance

•

Hotelling's trace

•

Pillai's criterion: more robust than Wilks'; should be used when sample size

decreases, unequal cell sizes or homogeneity of covariances is violated

Tests for differences on only the first discriminant function

•

Roy's greatest characteristic root: most appropriate when DVs are strongly

interrelated on a single dimension (only one source of variation is expected). Highly

sensitive to violation of assumptions - most powerful when all assumptions are met

17

8.4 MANOVA: Post-hoc tests

What to do once we know there are significant

differences among cells?

• Resort to univariate tests.

– Detect which component makes the

difference with ANOVA H 0 : 1l 2l ... kl

– For the component making the difference,

detect which pair of averages are different:

Scheffé, Fisher LSD (Least Significant

Difference), Dunnet, Tukey HSD (Honest

Significant Difference), Games-Howell.

• Resort to two-cell tests.

– Hotelling T2. H 0 : μ i μ j

• Use Linear Discriminant Analysis.

11 21

k1

12

22

k

2

...

... ...

...

1

p

2

p

kp

H 0 : il jl

18

8.5 MANOVA: Example

1-way

High

education

Medium

education

Low

education

(words/ad, words/sentence, #words with >=3 syllables)

Scientific

American

(203,20,21),…

Fortune

…

The New

Yorker

…

Sports

Illustrated

…

Newsweek

…

People Weekly

…

National

Enquirer

…

Grit

…

True

Confessions

(205,9,34)

H 0 : μ ScientificAmerican μ Fortune ... μTrueConfessions

0.371

pvalue 0.004 Not all magazines are

equal in the three variables

ANOVA words/ad:

H 0 : words / ad ScientificAmerican words / ad Fortune ...

F 4.127

…

pvalue 0.001

ANOVA words/sentence:

H 0 : words / sentence ScientificAmerican ...

F 1.643 pvalue 0.140

ANOVA #words with >=3 syllables:

H 0 : words 3 syll ScientificAmerican ...

F 3.694 pvalue 0.002

There are differences in the number

of words per ad and the number of

words with 3 or more syllables

19

8.5 MANOVA: Example

ANOVA words/ad:

H 0 : words / ad ScientificAmerican words / ad Fortune ...

H 0 : words / ad ScientificAmerican words / ad NewYorker

H 0 : words / ad Fortune words / ad NewYorker

H 0 : words / ad ScientificAmerican words / ad Grit

ANOVA #words with >=3 syllables:

H 0 : words 3 syll ScientificAmerican ...

H 0 : # words 3 syll ScientificAmerican # words 3 syll NewYorker

Very few significant differences were seen between magazines for two of the dependent variables

(total words per ad and number of 3 syllable words per ad), and all magazines were statistically

non-different for one (number of words per sentence). Therefore, one cannot state that magazines

placed into the high education group have significantly more words per advertisement than

magazines in either medium or low education groups.

20

8.5 MANOVA: Example

1-way

H 0 : μ ScientificAmerican μ Fortune ... μTrueConfessions

High

education

Medium

education

Low

education

(205,9,34)

(203,20,21)

…

Restart

…

…

21

Course outline: Session 4

8. Multivariate Analysis of Variance (MANOVA)

8.1. Introduction

8.2. Computations (1-way)

8.3. Computations (2-way)

8.4. Post-hoc tests

8.5. Example

9. Canonical Correlation Analysis (CCA)

9.1. Introduction

9.2. Construction of the canonical variables

9.3. Example

9.4. Extensions

10. Latent Class Analysis (LCA)

10.1. Introduction

22

9.1 CCA: Introduction

House Structure

• Number of people (X1)

• Number of people above 14 years old (X2)

• Education of the main family person (X3)

• Number of working people (X4)

Latent variables

X 1* , X 2* , X 3* , X 4*

Maximum

correlation

House Spendings

• Food (Y1)

• Clothes (Y2)

• Houseware (Y3)

• Transport and Communications (Y4)

• Free time and Education (Y5)

X N p X (0, X )

XY

Y N pY (0, Y )

Latent variables

Y1* , Y2* , Y3* , Y4*

X* AX

Maximum correlation

Y * BY

23

9.2 CCA: Construction of Canonical Variables

First canonical variables

x N p X (0, X )

x1* α1t x

XY

y N pY (0, Y )

Two

samples

*

1

*

1

Corr x , y

y β y

*

1

E x1* x1*t

t

1

*

1

α1 ,β1

s.t.

Sol:

*

1

*

1

α

*

1

2

*

1

*

1

*

1

2

t

1

α1 ,β1

2

2

1

X

XY

X

1

Y

XY

β1

1

t

1

YX

*

1

* *t

1 1

α α β β

t

1

X

t

1

1

Y

1

2

arg max α α β β α

Var x Var y 1

Corr x , y α α

α , β arg max Corr x , y

*

1

Ey y

α1t XY β1

t

1

E x1* y1*t

t

1

Y

t

α

β

1

X 1

1Y β1 1

1

2

*

1

YX X1 XY β1* 2β1*

1

Y

Eigenvectors associated

to the largest eigenvalues

24

9.2 CCA: Construction of Canonical Variables

Second canonical variables

Var x Var y 1

Corr x , x Corr y , y 0

α , β arg max Corr x , y

*

2

*

2

α 2 ,β 2

s.t.

*

2

*

2

*

2

2

*

2

*

1

*

2

*

1

Sol:

The following largest eigenvalue

*

2

β

XY Y1YX α *2 22α *2

1

X

1

Y

YX X1 XY

*

2

22β*2

Up to r min p X , pY canonical variables

Sol: All eigenvalues in descending order

Hypothesis testing

H 0 : i 0 i 1, 2,..., s; s 1 ... r 0

H1 : i 0 i 1, 2,..., s; s 1 ... r 0

r

l n ( p X pY 3) log(1 i2 )

1

2

l

2

( p X s )( pY s )

i s 1

25

9.3 CCA: Example

House Structure

• Number of people (X1)

• Number of people above 14 years old (X2)

• Education of the main family person (X3)

• Number of working people (X4)

House Spendings

• Food (Y1)

• Clothes (Y2)

• Houseware (Y3)

• Transport and Communications (Y4)

• Free time and Education (Y5)

1

0.55

1

RX

0.11 0.04

1

0.53

0.11

0.00

1

1

0.29

RY 0.13

0.23

0.33

Number of samples n 75

Individually, the two more correlated

variables are the eduction of the main

person of the family and the spending in

houseware.

RXY

0.46

0.03

0.22

0.40

1

0.25 1

0.23 0.35 1

0.32 0.22 0.36 1

0.34 0.05

0.33 0.29

0.18 0.02 0.13 0.17

0.32 0.51

0.26 0.23

0.14 0.02 0.25 0.17

26

9.3 CCA: Example

Number of people (X1)

Number of people above 14 years old (X2)

Education of the main family person (X3)

Number of working people (X4)

Correlation between the

two canonical variables

α1*

α *2

α *3

α *4

0.810

0.501

0.348

0.286

0.101

1.175 0.825

0.586

0.077

0.788 0.010 0.245

0.303 0.093 1.411

1.413

0.663 0.457 0.232 0.109

β1*

Food (Y1)

Clothes (Y2)

Houseware (Y3)

Transport and Communications (Y4)

Free time and Education (Y5)

β*2

β*3

β*4

0.592 0.459 0.757

0.119

0.332 0.057

0.544 0.799

0.241 0.971

0.407

0.088

0.293 0.300 0.287 0.726

0.032 0.032

0.581 0.248

27

9.3 CCA: Example

0.663 0.457 0.232 0.109

TotalVariance 0.6632 0.457 2 0.232 2 0.1092 0.714

Variance explained by the

first canonical variable

0.6632

61.6%

0.714

H 0 : 1 0; 2 , 3 , 4 0

Variance explained by the

first two canonical variables

0.6632 0.457 2

90.8%

0.714

l 75 12 (5 4 3) log(1 0.457 2 ) log(1 0.2322 ) log(1 0.1092 ) 20.81

l (42 1)(51) Pr l 20.81 0.0534

H 0 : 1 , 2 0, 3 , 4 0

We reject H0

l 75 12 (5 4 3) log(1 0.232 2 ) log(1 0.109 2 ) 4.64

l (42 2)(5 2) Pr l 4.64 0.5907

We cannot reject H0

28

9.3 CCA: Extensions

•

•

•

•

•

•

•

•

Kernel CCA: input variables are first mapped by a kernel onto a larger

dimensional space.

Generalized CCA: Study more than two groups of variables

Rotated CCA: Rotate the basis vectors of CCA

Nonlinear CCA: Use nonlinear projections of the input variables

Sparse CCA and CCA for Multilabel classification: Sparse loadings