Information extraction

advertisement

Information extraction

Lecture 8:

Information Extraction

from unstructured sources

Semantic IE

Reasoning

You

are

here

Fact Extraction

Is-A Extraction

singer

Entity Disambiguation

singer Elvis

Entity Recognition

Source Selection and Preparation

Overview

• Fact extraction from text

• Difficulties

• POS Tagging

• Dependency grammars

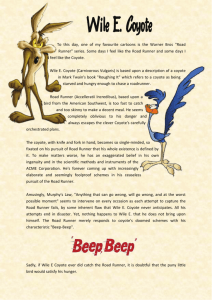

Reminder: Fact Extraction

Fact extraction is the extraction

of facts about entities from a corpus.

Coyote chases Roadrunner.

chases(Coyote, Roadrunner)

Def: Extraction Pattern

An extraction pattern for a binary relation r

is a string that contains two place-holders

X and Y, indicating that two entities stand

in relation r.

Extraction patterns for chases(X,Y):

• “X chases Y”

• “X runs after Y”

• “X’s chase for Y”

• “Y is chased by X”

task>

Task: Extraction Pattern

Define extraction patterns for

• wasBornInCity(X,Y)

• isDating(X,Y)

Def: Extraction Pattern Match

A match for an extraction pattern p in

a corpus c is a substring s of c such that

σ

there exists a substitution from

X and Y

to words such that

σ(p) = s

“X’s chase for Y”

Coyote’s chase for RR rarely succeeds.

Def: Extraction Pattern Match

A match for an extraction pattern p in

a corpus c is a substring s of c such that

σ

there exists a substitution from

X and Y

to words such that

σ(p) = s

“X’s chase for Y”

σ = {X → Coyote, Y → RR}

Coyote’s chase for RR rarely succeeds.

Def: Extraction Pattern Match

A match for an extraction pattern p in

a corpus c is a substring s of c such that

σ

there exists a substitution from

X and Y

to words such that

σ(p) = s

“X’s chase for Y”

σ = {X → Coyote, Y → RR}

match

Coyote’s chase for RR rarely succeeds.

Def: Pattern Application

Given a pattern p for a relation r, and a

corpus, pattern application produces,

for every match of p with substitution , σ

the fact r(σ(X), σ(Y ))

“X’s chase for Y” is pattern for chases()

σ = {X → Coyote, Y → RR}

Coyote’s chase for RR rarely succeeds.

Def: Pattern Application

Given a pattern p for a relation r, and a

corpus, pattern application produces,

for every match of p with substitution , σ

the fact r(σ(X), σ(Y ))

“X’s chase for Y” is pattern for chases()

σ = {X → Coyote, Y → RR}

Coyote’s chase for RR rarely succeeds.

chases(Coyote,RR)

Disambiguation is required!

Coyote’s chase for RR rarely succeeds.

chases(Coyote, RR)

?

Strictly speaking, we produce the fact

r(d(σ(X)), d(σ(Y )))

where d is a disambiguation function.

Where do we get the patterns?

• Option 1:

Manually compile patterns.

Where do we get the patterns?

• Option 2:

Manually annotate patterns in text

While Coyote chases Roadrunner, he

experiences certain inconveniences.

“X chases Y”

Where do we get the patterns?

• Option 3: Pattern Deduction

Retrieve patterns by help of known facts.

Known facts:

• chases(Obama,Osama)

Obama chases Osama since 2009.

Pattern for chases(X,Y):

“X chases Y”

Def: Pattern Deduction

Given a corpus, and given a KB, pattern

deduction is the process of finding

extraction patterns that produce facts

of the KB when applied to the corpus.

KB

chases

Corpus

+ Obama chases Osama.

Def: Pattern Deduction

Given a corpus, and given a KB, pattern

deduction is the process of finding

extraction patterns that produce facts

of the KB when applied to the corpus.

KB

chases

Corpus

+ Obama chases Osama.

=> “X chases Y” is a pattern for chases(X,Y)

Def: Pattern iteration/DIPRE

Pattern iteration (also: DIPRE) is the

process of repeatedly applying pattern

deduction and pattern application,

thus continuously augmenting the KB.

Known facts

Patterns

New facts

Patterns

Example: DIPRE

Obama chases Osama. Coyote runs

after RR. Coyote chases RR.

chases

Example: DIPRE

Obama chases Osama. Coyote runs

after RR. Coyote chases RR.

chases

“X chases Y”

Example: DIPRE

Obama chases Osama. Coyote runs

after RR. Coyote chases RR.

chases

chases

“X chases Y”

Example: DIPRE

Obama chases Osama. Coyote runs

after RR. Coyote chases RR.

chases

“X chases Y”

chases

“X runs after Y”

...

Task: DIPRE

Given this KB,

wantsToEat

apply DIPRE to this corpus:

Obama likes Michelle.

Coyote wants to eat Roadrunner.

Harry wants to eat cornflakes.

Harry likes cornflakes for breakfast.

Overview

• Fact extraction from text

• Difficulties

• POS Tagging

• Dependency grammars

We use labels to find patterns

KB

label

chases

“Obama”

label

“Osama”

Corpus

+ Obama chases Osama.

=> “X chases Y” is a pattern for chases(X,Y)

Ambiguity is a problem

KB

label

chases

“Obama”

label

loves

label

“Osama”

Corpus

+ Obama chases Osama.

Ambiguity is a problem

KB

label

chases

“Obama”

label

loves

label

“Osama”

Corpus

+ Obama chases Osama.

=> “X chases Y” is a pattern

for loves(X,Y) or for chases(X,Y)?

Disambiguation helps

KB

chases

label

“Obama”

loves

label

label

“Osama”

Corpus

+ Obama chases Osama.

=> “X chases Y” is a pattern

for chases(X,Y)

Phrase structure can be a problem

KB

label

chases

“Obama”

label

“Osama”

Corpus

+ Obama hat Osama gejagt.

Phrase structure can be a problem

KB

label

chases

“Obama”

label

“Osama”

Corpus

+ Obama hat Osama gejagt.

=> “X hat Y” is a pattern

for chases(X,Y)?

Multiple links are a problem

KB

label

“Obama”

chases

shot

label

“Osama”

Corpus

+ Obama chases Osama.

Multiple links are a problem

KB

label

“Obama”

chases

shot

label

“Osama”

Corpus

+ Obama chases Osama.

=> “X chases Y” is a pattern

for chases(X,Y) or for shot(X,Y)?

Confidence of a pattern

The confidence of an extraction pattern

is the number of matches that produce

known facts divided by the total number

of matches.

Pattern produces mostly new facts

=> risky

Pattern produces mostly known facts

=> safe

Simple word match is not enough

Coyote invents

a wonderful machine.

+

“X invents a Y”

=

invents(Coyote,wonderful)

Patterns may be too specific

Coyote invents

a wonderful machine.

+

“X invents a great Y”

=

invents(Coyote,machine)

Overview

• Fact extraction from text

• Difficulties

• POS Tagging

• Dependency grammars

Def: POS

A Part-of-Speech (also: POS, POS-tag,

word class, lexical class, lexical category)

is a set of words with the same

grammatical role.

• Proper nouns (PN): Wile, Elvis, Obama...

• Nouns (N): desert, sand, ...

• Adjectives (ADJ): fast, funny, ...

• Adverbs (ADV): fast, well, ...

• Verbs (V): run, jump, dance, ...

Def: POS (2)

• Pronouns (PRON): he, she, it, this, ...

(what can replace a noun)

• Determiners (DET): the, a, these, your,...

(what goes before a noun)

• Prepositions (PREP): in, with, on, ...

(what goes before determiner + noun)

• Subordinators (SUB): who, whose, that,

which, because...

(what introduces a sub-sentence)

Def: POS-Tagging

POS-Tagging is the process of assigning

to each word in a sentence its POS.

new machine.

Wile tries a

PN V DET ADJ N

task>

Task: POS-Tagging

POS-Tag the following sentence:

The coyote falls into a canyon.

• N, V, PN, ADJ, ADV, PRON

• Determiners (DET): the, a, these, your,...

(what goes before a noun)

• Prepositions (PREP): in, with, on, ...

(what goes before determiner + noun)

• Subordinators (SUB): who, whose, that,...

(what introduces a sub-sentence)

1

2

3

4

Probabilistic POS-Tagging

Introduce random variables for

words and for POS-tags:

(visible)

X1

Wile

X2

runs

Wile

runs

Wile

...

runs

(hidden)

Y1 Y2

PN V

ADJ

V

Prob.

PN PREP

...

P (w3) = 0.1

P (w1) = 0.7

P (w2) = 0.1

probabilities>

Independence Assumptions

Every tag depends just on its predecessor

P (Yi|Y1, ..., Yi−1) = P (Yi|Yi−1)

Every word depends just on its tag:

P (Xi|X1, ..., Xi−1, Y1, ..., Yi) = P (Xi|Yi)

(visible)

X1

Wile

X2

runs

Wile

runs

Wile

...

runs

(hidden)

Y1 Y2

PN V

ADJ

V

Prob.

PN PREP

...

P (w3) = 0.1

P (w1) = 0.7

P (w2) = 0.1

Homogeneity Assumption 1

P (Yi|Y1, ..., Yi−1) = P (Yi|Yi−1) = P (Yk |Yk−1) ∀ i, k

Homogeneity Assumption 1

P (Yi|Y1, ..., Yi−1) = P (Yi|Yi−1) = P (Yk |Yk−1) ∀ i, k

Example: The probability that a verb

comes after a noun is the same at

position 3 and at position 9:

P (Y3 = V erb|Y2 = N oun) = P (Y9 = V erb|Y8 = N oun

Homogeneity Assumption 1

P (Yi|Y1, ..., Yi−1) = P (Yi|Yi−1) = P (Yk |Yk−1) ∀ i, k

Example: The probability that a verb

comes after a noun is the same at

position 3 and at position 9:

P (Y3 = V erb|Y2 = N oun) = P (Y9 = V erb|Y8 = N oun

Let’s denote this probability by

P (V erb|N oun)

Homogeneity Assumption 1

P (Yi|Y1, ..., Yi−1) = P (Yi|Yi−1) = P (Yk |Yk−1) ∀ i, k

Example: The probability that a verb

comes after a noun is the same at

position 3 and at position 9:

P (Y3 = V erb|Y2 = N oun) = P (Y9 = V erb|Y8 = N oun

Let’s denote this probability by

P (V erb|N oun)

“Transition probability”

P (v|w) := P (Yi = v|Yi−1 = w) (f or any i)

Homogeneity Assumption 2

P (Xi|X1, ..., Xi−1, Y1, ..., Yi) = P (Xi|Yi) = P (Xk |Yk ) ∀ i, k

Homogeneity Assumption 2

P (Xi|X1, ..., Xi−1, Y1, ..., Yi) = P (Xi|Yi) = P (Xk |Yk ) ∀ i, k

Example: The probability that a PN

is “Wile” is the same at position 3 and 9:

P (X3 = W ile|Y3 = P N ) = P (X9 = W ile|Y9 = P N )

Homogeneity Assumption 2

P (Xi|X1, ..., Xi−1, Y1, ..., Yi) = P (Xi|Yi) = P (Xk |Yk ) ∀ i, k

Example: The probability that a PN

is “Wile” is the same at position 3 and 9:

P (X3 = W ile|Y3 = P N ) = P (X9 = W ile|Y9 = P N )

Let’s denote this probability by

P (W ile|P N )

Homogeneity Assumption 2

P (Xi|X1, ..., Xi−1, Y1, ..., Yi) = P (Xi|Yi) = P (Xk |Yk ) ∀ i, k

Example: The probability that a PN

is “Wile” is the same at position 3 and 9:

P (X3 = W ile|Y3 = P N ) = P (X9 = W ile|Y9 = P N )

Let’s denote this probability by

P (W ile|P N )

“Emission Probability”

P (v|w) := P (Xi = v|Yi = w) (f or any i, word v)

Def: HMM

A (homogeneous) Hidden Markov Model

(also: HMM) is a sequence of random

(We use random variables

variables, such that

P (< x1, ..., xn, y1, ..., yn >) =

Words of the

sentence

POS-tags

...where y0 is fixed as

X1,...,Xn,Y1,...,Yn that span

the possible worlds)

Q

i

P (xi|yi) × P (yi|yi−1)

Emission

probabilities

Transition

probabilities

y0 := Start

HMMs as graphs

P (< x1, ..., xn, y1, ..., yn >) =

Q

i

P (xi|yi) × P (yi|yi−1)

Transition

probabilities

P (P N |Start)

PN

Start

P (DET |Start)

P (V |P N )

P (V |N )

DET P (N |DET ) N

V

P (EN D|V )

END

HMMs as graphs

P (< x1, ..., xn, y1, ..., yn >) =

Q

i

P (xi|yi) × P (yi|yi−1)

Emission

probabilities

P (P N |Start)

PN

P (V |P N )

Start

P (DET |Start)

Transition

probabilities

P (V |N )

DET P (N |DET ) N

P (a|DET )

P (the|DET )

the a

V

P (EN D|V )

END

HMMs as graphs

P (< x1, ..., xn, y1, ..., yn >) =

Q

i

P (xi|yi) × P (yi|yi−1)

Emission

probabilities

P (P N |Start)

PN

P (V |P N )

Start

P (DET |Start)

Transition

probabilities

P (V |N )

V

P (EN D|V )

DET P (N |DET ) N

P (a|DET )

P (W ile|N )

END

.

P (coyote|N )

P (the|DET )

P (RR|N )

Wile

the a

RoadRunner

P (bird|N )

coyote

bird

eats

sleeps

Example: HMMs as graphs

P (< x1, ..., xn, y1, ..., yn >) =

Q

i

P (xi|yi) × P (yi|yi−1)

P (< the, bird, sleeps, .,

, EN D >) =0.2 × 0.5 × 1 × 0.7 × 1 × 0.9

× 1× 1

DET, N , V

Start

1

PN

0.8

0.2

DET

1

1

V

1

END

.1

N

0.1

0.5

0.4

0.2

0.9

0.5

0.7

0.6

Wile

the a

RoadRunner

coyote

bird

eats

sleeps

task>

Task: HMMs

Write the product of values for

P (< W ile, sleeps, ., P N, V, EN D >)

P (< a, coyote, eats, ., DET, N, V, EN D >)

Start

1

PN

0.8

0.2

DET

1

1

V

1

END

.1

N

0.1

0.5

0.4

0.2

0.9

0.5

0.7

0.6

Wile

the a

RoadRunner

coyote

bird

eats

sleeps

All other probabilities are 0!

P (< W ile, bird, P N, V >) = 0

The graph is just

one way to visualize

P (< W ile, sleeps, V, V >) = 0

the emisssion and

transistion probabilities!

0

Start

1

PN

0.8

0.2

DET

1

0.5

0.4

1

1

V

N

END

.1

0

0.1

0.2

0.9

0.5

0.7

0.6

Wile

the a

RoadRunner

coyote

bird

eats

sleeps

Estimate probabilities

Start with manually annotated corpus

The laws of gravity apply selectively

DET N PREP N

V

ADV

P (tagi|tagj ) =

# cases where tagi f ollows tagj

# appearances of tagj

P (ADV |V ) =

P (wordi|tagj ) =

1

1

P (V |N ) =

1

2

...

# cases where wordi has tagj

# appearances of tagj

P (the|DET ) =

1

1

P (laws|N ) =

1

2

...

task>

Task: Estimate probabilities

Estimate the transition and emission probs:

Wile runs many runs .

START PN V

ADJ N END

P (P N |Start) =

P (W ile|P N ) =

P (V |P N ) =

P (runs|V ) =

...

...

Draw the HMM as a graph.

Task: Compute probabilities

Using the probabilities from the last task,

compute the following probabilities:

P (< W ile, runs, ., P N, V, EN D >)

P (< W ile, runs, ., P N, N, EN D >)

Def: Probabilistic POS Tagging

Given a sentence and transition and

emission probabilities, Probabilistic POS

Tagging computes the sequence of tags

that has maximal probability (in an HMM).

~ = Wile runs.

X

P (W ile, runs, P N, N ) = 0.01

P (W ile, runs, V, N ) = 0.01

P (W ile, runs, P N, V ) = 0.1

...

winner

POS Tagging is not trivial

Several cases are difficult to tag:

• the word “blue” has 4 letters.

• pre- and post-secondary

• look (a word) up

• The Duchess was entertaining

last night.

Wikipedia/POS tagging

POS Taggers

There are several free POS taggers:

• Stanford POS tagger

• hunpos

• MBT: Memory-based Tagger

• TreeTagger

• ACOPOST (formerly ICOPOST)

• YamCha

• ...

Stanford NLP/Resources

Try it out!

POS taggers achieve very good precision.

POS Tagging helps pattern IE

We can choose to match the placeholders with only nouns or proper nouns:

“X invents a Y”

Coyote invents a catapult

invents(Coyote,catapult)

Coyote invents a really cool thing.

invents(Coyote,really)

POS-tags can generalize patterns

“X invents a ADJ X”

Coyote invents a cool catapult

Coyote invents a great catapult

Coyote invents a

super-duper catapult.

match

match

match

Phrase structure is a problem

“X invents a ADJ X”

Coyote invents a

very great catapult.

Coyote, who is very hungry,

invents a great catapult.

no

match

no

match

We will see next time how to solve it.

Semantic IE

Reasoning

You

are

here

Fact Extraction

Is-A Extraction

singer

Entity Disambiguation

singer Elvis

Entity Recognition

Source Selection and Preparation

References

Brin: Extracting Patterns and Relations from the WWW

Agichtein: Snowball

Ramage: HMM Fundamentals

Web data mining class