Intership - Generation time in matrix population models (2013)

advertisement

École Normale Supérieure

Bachelor Internship

Laboratory Ecology and Evolution (UMR 7625)

Eco-Evolutionary Mathematics unit

Generation Time in

Matrix Population Models

Supervisor:

Intern:

François Bienvenu

Licence de biologie

École Normale Supérieure

Stéphane Legendre

Équipe ‘Éco-Évolution mathématique’

École Normale Supérieure (UMR 7625)

legendre@ens.fr

francois.bienvenu@ens.fr

3 June - 26 July 2013

Contents

Presentation of the laboratory

The Ecology and Evolution laboratory (UMR 7625) . . . . . . . . . . . . . . . . . . . . . .

The Eco-Evolutionary Mathematics unit . . . . . . . . . . . . . . . . . . . . . . . . . . . .

0

0

0

Thanks

0

Introduction

1

Methods

Graph theory . . . . . . . . . . . . . . . . . .

Vocabulary . . . . . . . . . . . . . . . .

Adjacency matrices . . . . . . . . . . . .

Primitivity . . . . . . . . . . . . . . . .

The Perron-Frobenius theorem . . . . .

Matrix population models . . . . . . . . . . .

The model . . . . . . . . . . . . . . . . .

Eigen-elements . . . . . . . . . . . . . .

Sensitivities and elasticities . . . . . . .

Markov chains . . . . . . . . . . . . . . . . .

The model . . . . . . . . . . . . . . . . .

Stationary distribution and return time

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

1

1

1

2

2

2

2

2

3

3

3

3

4

Results

Markovization . . . . . . . . . . . . . . .

Motivation and principle . . . . . .

Interpretations . . . . . . . . . . .

Mathematical expression . . . . . .

Stationary probability distribution

Line graph . . . . . . . . . . . . . . . .

Motivation . . . . . . . . . . . . .

Construction . . . . . . . . . . . .

Properties . . . . . . . . . . . . . .

Generation time . . . . . . . . . . . . .

The A = R + S decomposition . .

e . . . . .

The transformed graph P

Distribution of T . . . . . . . . . .

Mean of T . . . . . . . . . . . . . .

Other results . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

4

4

4

5

5

5

6

6

6

7

9

9

9

10

10

10

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Discussion

11

Existing measures of the generation time . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Perspectives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Conclusion

13

References

14

Appendix

A.1 The age-classified model .

A.2 The standard size-classified

A.3 More on the line graph . .

Notations (cheat-sheet) . . . .

. . . .

model

. . . .

. . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

I

.

I

. II

. IV

. VI

Presentation of the laboratory

The Ecology and Evolution laboratory (UMR 7625)

The aim of the UMR 7625 is to bring together the different levels of study of ecological processes,

which range from the study of the gene to that of the ecosystem. Most of the domains of modern

ecology are represented in the laboratory, with a strong emphasis on evolutionary ecology. The

questions addressed are of theoretical importance, but they are tackled with real-world problems

in mind (global change, conservation, emerging infectious diseases) and the UMR 7625 aims at

developing interdisciplinary work with the relevant fields. Given the variety of the topics, the

laboratory is organized in five research units :

• Evolution of Animal Societies

• Global Change and Adaptive Processes

• Eco-Evolutionary Mathematics

• Evolutionary Physiology

• Populations and Communities Ecology

The Eco-Evolutionary Mathematics unit

The Eco-Evolutionary Mathematics unit develops mathematical tools to better understand ecoevolution. It is composed of mathematicians, physicists and biologists who work together to link

theory and practice. Although not apparent in this report – which focuses on a deterministic, linear

model – a strong focus is put on stochastic and non-linear models, and the interaction between both.

Research subjects include speciation, small population dynamics, bio-ecological oscillations, trophic

networks, epidemiology, genome dynamics and eco-evolutionary dynamics in turbulent fluids. The

question typically encountered are: ‘How did this pattern evolve ?’, ‘What does it tell us about the

current dynamic of the system ?’, ‘Can we use this information to better manage it ?’

In this context, the work of Stéphane Legendre (who incepted and supervised this work) has

focused on population dynamics (small populations), conservation biology, evolutionary dynamics,

trophic networks, the metabolic theory of ecology and the link between these topics. His scientific

activity also consists in writing software aimed at ecologists.

Thanks

First of all I would like to thank Stéphane Legendre, who gladly presented me his work before

offering me an internship; who made sure that I had a desk and proper working conditions; who

accepted to take quite some time to talk about the subject of the intership, even when we had very

different opinions; and finally who made a careful correction of this manuscript.

I would also like to thank Renaud De Rosa, whose Idées et Théories en Biologie lesson gave me

the occasion to re-read the work of Richard Dawkins and who answered a few questions I asked

him (alas, forcing me to face reality).

Finally, I would like to thank all my friends and everyone with whom I even remotedly discussed

this work. I also thank BIOEMCO for the warm and intellectually stimulating atmosphere it

provided during my intership, and I deplore the fact that my successors won’t be able to benefit from

it as the whole laboratory was recently told to leave the École Normale Supérieure on appalingly

short notice.

0

found on arXiv [6]. This version, written mainly by Stéphane

Legendre, is aimed at making the results look as natural as

possible for people working with matrix population models,

whereas the aim of the present report is to be as general and

rigorous as possible when describing the methods used, since

they can be generalized to other models described by weighted

directed graphs.

Introduction

When they want to convert generations into years, molecular

biologists as well as historians use a quantity called the generation time. Intuitively, this is the difference in age between

the generation of an individual and that of his parents (about

20 to 30 years in human populations). Its many applications

and the resulting need for solid estimates justifies theoretical

studies of the generation time, but there are other important motivations: indeed, the generation time is a fundamental descriptor of organisms and populations. For instance,

it has allometric scaling with other biological descriptors [1]

(a mathematical formulation of the fact that smaller organisms tend to have shorter generation times). This makes it

a challenge for the metabolic theory of ecology, which aims

at explaining such relations from the metabolic rate of organisms. The generation time is also the most natural candidate

for a time-scale when describing processes at the level of the

population. Finally, it is arguably a valuable proxy for the

timing of evolution.

Surprisingly for such a basic concept, there is no single definition of the generation time – and the way in which the existing definitions relate is poorly understood. Three definitions

have been commonly used [2], and mathematical expressions

for each of them have been derived for several demographic

models, notably matrix population models [3, 4]. However,

these formula are rather complicated and hard to interpret.

Here, we provide a surprisingly simple and general formula

which we show to encompass the classic and intuitive notion

of the generation time as difference in age between an individual and its parents.

We focused on matrix population models because they are

among the most simple population models and yet provide a

great variety of information. Moreover, they are widely used

in conservation biology. Rather than trying to simplify existing formulas, we started from a new definition which takes

advantage of the cyclic nature of the life cycle to define the

generation time as the time between two reproductive events

in a realization of the life cycle. To perform the mathematical

calculation, we used Markov chains and graph theory. Markov

chains were used to model the life cycle in a way which makes

apparent its cyclic nature – we call this process of building

a Markov matrix out of the classic representation of the life

cycle as a population projection matrix markovization and

it was first introduced by Demetrius [5]. Graph theory was

used to avoid the ambiguous definition of newborn stages by

focusing on reproductive events rather than individuals. This

technique greatly eases the calculation and has to our knowledge never been used in matrix population models.

We also illustrate the interest of our formula by generalizing a result know as Lebreton’s formula and by reflecting on

the link between the generation time and another important

biological descriptor known as population entropy. However,

this topic calls for more work.

Much of the reasoning of this work was made possible by

thinking in terms of graphs rather than matrices. Because

of this, this document was written using the formalism of

graphs. A more classic, ‘matrix’, version of this work can be

Methods

In this section we present the mathematical tools used. Although we mostly rely on elementary linear algebra (the most

elaborate result we use is the Perron-Frobenius theorem),

graph theory, matrix population models, and Markov chains

each have their own vocabulary and conventions, which can

make even the simplest reasoning hard to follow for someone

not acquainted with them.

The following notations will hold for the rest of the document: we denote scalars with lowercase letters, such as p.

Matrices are in uppercase bold letters (e.g. A) and vectors –

row and column – in lowercase bold letters (e.g. v). Finally,

it is implicit that A = (aij ) and v = (vj ). A cheat-sheet

aimed at easing the reading of this document can be found in

the appendix.

Graph theory

Here we do not aim at providing an introduction to graph

theory but only at defining the vocabulary we use and giving

the main results we will need.

Vocabulary

A graph is a set of vertices, or nodes, linked by edges, or

arcs. Graphs can be directed or not, depending on whether

their edges denote a symetric relation between the vertices

they link (non-directed) or not (directed). Directed graphs

are sometimes called digraphs, but since we work exclusively

with directed graphs we will simply refer to them as ‘graphs’.

To follow what is usually done when working with directed

graphs, we will prefer the terms nodes and arcs over the terms

vertices and edges.

The node from which an arc starts is called its tail, and the

node to which it leads its head. We note t and h the functions

which map an arc to its tail and head, respectively. The arcs

coming to a node will be refered to as its in-arcs and those

leaving it as its out-arcs.

We work with weighted graphs, which means that each arc

has a weight, a scalar representing the intensity of the relation

between the head and the tail of the arc. We note WG the

weighting function of graph G, i.e. the function which map

an arc to its weight.

A node i leads to a node j if and only if there is an arc

going from i to j, in which case we note i → j. Similarily

we can say that an arc (i → j) leads to (k → l) if and only

if j = k. A path from i to k is an ordered sequence of arcs

{(i → j1 ), (j1 → j2 ), ..., (jn → k)}. When there exists a path

from i to k, we say that k is reached by i and we note i

k.

A path from a node i to itself is called a cycle (including the

1

case of the self-loop (i → i)). The length of a path / cycle

is simply the number of arcs composing it (therefore, a selfloop has length one). Finally, a graph is said to be strongly

connected if for any pair of nodes (i, j), i

j.

Nothing in those definitions imply that there is only one arc

going from one node to another. However, graphs are often

assumed to have this property. Those that do not are called

multigraphs. Here, we will use ‘graph’ when it does not matter

whether or not a graph is a multigraph, and precise ‘simple’

and ‘multi’ only when we want to emphasize its nature.

Let G be a primitive matrix. There exists an eigenvalue λ

of G so that :

• λ ∈ R, λ > 0

• ∀µ ∈ Sp G,

|λ| > |µ|

• λ is simple, i.e. has multiplicity one.

λ is called the dominant eigenvalue of G. Its right and left

eigenvectors are called the dominant eigenvectors of G, and

they are positive (i.e. all their entries are > 0).

As stated, this formulation of the Perron-Frobenius theorem is quite imprecise, but it is sufficient for our needs. For

a more detailed presentation of the results, see [7].

Adjacency matrices

It is possible to represent a graph with an adjacency matrix.

If G is a simple graph, its adjacency matrix is the matrix G

such that:

WG (i → j) if i → j

gij =

0 else

Matrix population models

Matrix population models were first introduced by Leslie as

age-classified models [8]. They were later generalized to other

type of classes (such as size or developmental stages) by

Lefkovitch [9]. Due to their simplicity and richness, theses

models have been extensively studied and used (especially in

conservation studies). The most complete introduction to the

subject is probably Caswell’s Matrix Population Models [10],

from which the following results were taken.

In that case, G contains all the information about G. But if G

is a multigraph, it cannot be represented unequivocally by a

single matrix. Nevertheless, we can still define its adjacency

matrix. Here, we do so by using the same definition as before

except we adapt it so that gij is the sum of the weights of

every arc going from i to j. The resulting definition coincide

with the classic one for simple graphs.

Of course, defining gij as a function of (i → j) rather than

(j → i) is an arbitrary convention – and unfortunatly this

convention is not respected in matrix population models. One

can deal with this problem either by keeping one definition

of adjacency matrices and working with transposes, or by

adapting the definition of the adjacency matrix when studying

matrix population models. We will use the latter possibility.

The model

The simplest demographic model one can imagine is arguably

the discrete-time malthusian growth model :

n(t + 1) = λ n(t)

Where n is the size of the population and λ is its growth rate.

However, this model supposes that the vital rates of all

individuals are identical, which is obviously false even when

leaving aside individual variability: indeed, in most cases the

population can be divided in stages (age-classes, size-classes,

instars...) with potentially very different fertilities and/or

survival probability. A simple way to take this into account

is to use a matrix population model :

Primitivity

Primitivity is the property we will rely on the most, as

it makes it possible to use the Perron-Frobenius theorem.

Primitivity can be broken down into three properties: nonnegativity, irreducibility and aperiodicity.

The matrix G is non-negative if and only if ∀(i, j), gij ≥ 0.

It is irreducible if and only if the graph it represents when

interpreted as an adjacency matrix is strongly connected. Finally, the period of a graph is the greatest common divisor

(GCD) of the lengths of all its cycle and a graph is said to

be aperiodic if and only if it has period one (therefore, any

graph containing a self-loop is aperiodic). As with irreducibility, the aperiodicity of a matrix is equivalent to that of the

corresponding graph.

There exist purely matricial characterizations of irreducibility (not similar by permutation to a block upper triangular

matrix), aperiodicity (gcd {m | (Gm )ii > 0} = 1) and primitivity (G ≥ 0 and ∃m, Gm > 0) but the graph definitions

are more intuitive and often easier to verify.

n(t + 1) = An(t)

Here, n is a population vector whose entries are the number

of individuals in each class. A is the projection matrix of the

population.

With this model, each class has a different contribution to

the composition of the population at time t + 1. However, all

individuals inside a class are supposed to have identical vital

rates, and there is no density dependence. It can therefore

be said that this model is a natural, simple but powerful,

extension of the malthusian growth model.

An good way to interpret this model is to see it as a discrete

time compartment model and think in term of graph. The

projection matrix is indeed the adjacency matrix (sensu lato,

see adjacency matrices) of a graph whose nodes are the ‘compartments’ containing individuals in each class, and whose

arcs denote the flows between compartments. This graph is

what is usually called the life cycle of the population. Figure 1 is an example of a life cycle and its associated projection

matrix.

The Perron-Frobenius theorem

What is known as the Perron-Frobenius theorem is actually

a set of results proved by Oskar Perron and Georg Frobenius

concerning the eigen-elements of positive and non-negative

square matrices. We will only give the results we need.

2

Sensitivities and elasticities

As the asymptotic growth rate, λ, is a valuable indicator of

the population’s welfare, it is natural to ask how reliable it

is. This is one of the motivation for sensitivity analysis. The

sensitivity of the growth rate to an entry aij of A is :

f3

f2

1

s1

g1

2

s2

g2

3

s1

g1

0

f2

s2

g2

f3

0

s3

sλ (aij ) =

s3

Of course, the sensitivity of any variable to any parameter can

be defined. If not precised, the term ‘sensitivity’ will refer to

the sensibilities of the growth rate, sλ .

The sensitivites to the entries of A are given by :

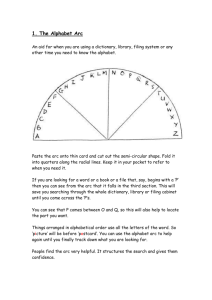

Figure 1: A typical matrix population model. The life

cycle graph is on the left and the projection matrix of the

population is on the right.

The quantity of information which can be obtained from

such a simple model is impressive but need careful interpretation. The first thing to keep in mind is that matrix population models are usually best not used as predictive models.

Indeed, as we will see, under assumptions which are almost

always verified, the population dynamic converges toward an

exponential growth, ever increasing or decreasing. Rather,

matrix population models must be seen as a way to obtain

information about the current population.

We now provide a basic set of results about matrix population models, without proving them. The proofs, as well as

various examples, can be found in Caswell [10].

sλ (aij ) =

vi w j

vw

Since λ can be viewed as an integrated measure of fitness at

the population level, the sensitivies are of particular interest

when studying evolution. However, they have some shortcomings when studying the life cycle: for instance, non-existent

transitions (null entries in the matrix) can have non-null sensitivities – which might be of great interest if studying how

new transitioned evolved in the life cycle, but is not in many

cases (e.g: if that transition is biologically impossible). This

is why it is sometimes more desirable to work with proportional (rather than absolute) contributions, and this is why

elasticities were introduced. The elasticity of λ to aij is :

Eigen-elements

Projection matrices are non-negative, and they are usually

irreducible, aperiodic, hence primitive. The Perron-Frobenius

theorem therefore applies, and the matrix has a dominant

eigenvalue λ with associated right and left eigenvectors w

and v, respectively. When the matrix is not irreducible, this

is usually due to a post-reproductive class or a source-sink

metapopulation, in which case although v will contain a null

entry, w will still be positive. When the projection matrix is

periodic with period h, there are h dominant eigenvalues and

the population oscillates with period h. However, it is possible

to consider the matrix Ah (which maps the population from

time t to time t + h) to come down to the primitive case.

We therefore assume in the rest of the document that A is

primitive.

For t large enough, we have :

n(t + 1) ∼ λn(t) ∝ w

∂λ

∂aij

eλ (aij ) =

aij ∂λ

λ ∂aij

It is given by :

aij vi wj

(2)

λ vw

The elasticities of λ to the entries of A sum to one, and

the elasticities to null entries are null. They are therefore

interpreted as ‘the relative contribution of a transition to λ’.

As we will see, it is possible to give another interpretation

for them.

eλ (aij ) =

We now give a very brief overview of the last tool used in

this work: finite-state discrete-time markov chains.

(1)

Markov chains

That is, the population vector converges toward the

eigenspace generated by w and then behaves exponentially,

increasing if λ > 1, decreasing if λ < 1 (the case λ = 1, although mathematically negligible, is of biological interest and

correspond to a population at demographic equilibrium).

λ can therefore be interpreted as the asymptotic growth rate

of the population, and w as its stable distribution: assuming

it is scaled so that its entries sums to one, its entries are the

fraction of individuals in each class.

The interpretation of v is less direct, but several independent arguments point to interpreting it as the reproductive

value of the population – that is, its entries indicate the relative contribution of each class to the overall size of the population.

The literature on Markov chains is huge, and once again we

simply recall a few results that we will need. These results

were taken from Amaury Lambert’s lesson LV388 at the École

Normale Supérieure: ‘What a biologist should not ignore’, of

which there is to my knowledge no written version. However,

these are basic results which are to be found in any introductory textbook on Markov chains.

The model

By ‘Markov chains’ we actually mean ‘Finite-stage discrete

time Markov chains’. They model a stochastic system occupying different states numbered {1, ..., n} according to the

following rule: the state of the system at time t + 1, X(t + 1),

depends only on its state at time t, X(t).

3

The formalism of Markov chains is very much like that

of matrix population models, except for a transpose when

writing the system:

both. This has notably been done by Cochran and Ellner,

and by Cushing and Yican [3, 4]. Both paper use the same

technique, which consist in separing the reproductive transitions from the survival ones. A complete presentation of this

technique is given in Caswell’s chapter 5, although he uses

the terms ‘fertilities’ and ‘transitions’ (and therefore different notations). The results derived in this report also depend

on the reproductive-survival decomposition, but for different

purposes than the markovization of the life cycle, which does

not rely on it. Therefore, we discuss it later in paragraph the

A = R + S decomposition.

x(t + 1) = x(t) P

Here, x is the probability distribution of the system among

the different states at time t (i.e. xi = P [X(t) = i]) and

P is the transition matrix, whose entries are the transition

probabilities:

pij = P [X(t + 1) = j | X(t) = i]

Motivation and principle

The term life cycle is rarely questioned. But from an individual’s perspective, it is not justified as life is but a linear

journey from birth to death. I therefore started my reflection

by trying to find what was cyclic about the life cycle. I was

greatly helped in this by the concepts that Richard Dawkins

popularized under the terms replicator and vehicle [11]. But

as it happens, the resulting method does not rely on these

concepts:

Stationary distribution and return time

The transition matrix entries, pij , are probabilities. As a result, P is non-negative. Moreover, {X(t) = i | i ∈ {1, ..., n}}

is a complete system of events, so the rows of P sum to one.

Provided P is irreducible aperiodic, the Perron-Frobenius theorem applies, and we can assert that P has a dominant eigenvalue λ with corresponding right and left eigenvectors e and

π, respectively. Since x is bounded and x(t) ∼ λt x(0), we

have λ ≤ 1. But the fact that the rows of P sum to one

gives that 1 is eigenvalue of P. Therefore, λ = 1, with corresponding right eigenvector e> = (1, ..., 1) (e> denoting the

transpose of e). Finally, π is interpreted as the stationary

probability distribution of X.

The return time of state i is the random variate Ti equal to

the time it takes the system to reach state i, having started

from it:

Ti = min {t ≥ 1 | X(t) = i} ,

0.6

1

X(0) = i

0.15

The mean of T is given by E [Ti ] = π1i . Indeed, in k steps,

we will have visited state i on average πi k times. The average

time between two visits is therefore πki k = π1i . More generally,

the return time to a set of states S is :

1

E [TS ] = P

πs

2

A

0.35

2

0.2

0.7

3

0.75

0.15

4

0.5

B

(3)

s∈S

time

Results

This work is based on the idea (which was suggested to me

by Stéphane Legendre) of defining the generation time as a

return time. To do so, I have made use of two techniques: the

first consists in transforming a population projection matrix

in a Markov chain transition matrix and was first found by

Demetrius [5]; the second consists in transforming the life cycle graph in order to study the properties of its arcs by studying the properties of the nodes of the transformed graph. This

has to my knowledge never been used in matrix population

models.

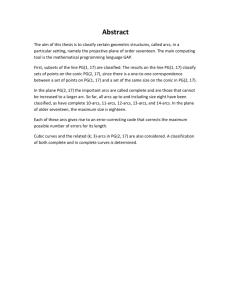

Figure 2: A, fictive life cycle (with a post-reproductive

class) – B, demographic process compatible with A represented as a branching process. See text for more precisions.

Figure 2.B is an example of a one-sex (or no-sex) demographic process followed at the individual level and represented as a branching process. Oblique lines mark births,

and with the vertical line that follow they correspond to a

given individual. The death of the individual occurs when

the vertical line stops. Different stages correspond to different colors, and Figure 2.A is a life cycle compatible with the

Markovization

Given the facts that: a. Markov chains and matrix population models share a common formalism, and b. the literature

on Markov chains is much more vast than that on matrix population models, it is natural to try to make the link between

4

demographic process depicted in Figure 2.B. This diagram

contains all the information about the demographic parameters of the population.

What we would like is to convert a matrix population model

into a model that would enable us to study the properties of

diagrams such as that of Figure 2.B This might seem impossible, as a matrix population model is built at the population

level whereas the branching diagram necessitates information

at the individual level, but it can actually be done assuming

that the vital rates of an individual depend only on its class –

which is the working hypothesis of matrix population models.

To do this, we work on genealogies, i.e. we trace down

the ancestors of individuals rather than their descendants. In

other words, we inverse the time in Figure 2.B so as to go up

instead of down. As a consequence, the process becomes much

easier to study as every individual comes from one and only

one individual (this is because matrix population models do

not take sex into account: when they are not used for asexual

organisms, only one sex is taken into account. It is then

almost always the female sex as it is often easier to determine

the offspring of a female than that of a male. The model

is then said to be female-based. There exist two-sex models,

but they include frequency-dependence – and therefore nonlinearity – and are much less used). However, by doing this we

also leave out the dead-end branches. This is not a problem

given that the absence of interaction between individuals is a

working hypothesis of matrix population models: as a result,

these post-reproductive individuals do not have any impact

on the population.

To model these genealogies, we use a Markov chain whose

system is an abstract particle following the branches of the

genealogy as it moves back in time, and whose states are the

classes of the population model. Before giving the mathematical expression describing this Markov chain, we give possible

interpretations of this abstract particle.

tation suffers from the same problem as the replicator-based

one when it comes to one-sex models.

Finally, one need not have any opinion on evolution to interpret the results obtained by markovization as they are rigorously identical (though simpler and more complete) to those

obtained when defining the generation time as ‘the mean age

of mothers at birth’, which is a commonly used measure [2].

Indeed, the quantity we compute in this work is directly be

interpreted as ‘the time between two births when tracing a genealogy’ and is therefore exactly the age of mothers at birth.

We will further discuss this point and compare our results to

those existing in the discussion section.

Mathematical expression

We now give the mathematical expression of markovization.

In order to be as independent as possible of any biological

context, we will talk of individuals rather than replicators or

germ-line cells in what follows.

We want to know the probability pij that an individual in class

P i comes from class j. At time t, there are

ni (t) =

k aik nk (t − 1) individuals in class i, aij nj (t − 1)

of which come from class j. Therefore:

pij =

aij nj (t − 1)

ni (t)

Assuming the population is at its stable stage distribution,

n(t) ∼ λn(t − 1) ∝ w (eq. 1). Replacing in the previous

expression,

aij wj

pij =

(4)

λwi

The resulting expression is independent of t, and the matrix

P = (pij ) is the Markov matrix we want (the fact

P that its

rows sum to one is trivial when replacing λwi by j aij wj in

P

P

1

j pij = λwi

j aij wj ) Since it is assumed that w > 0 (see

paragraph eigen-elements), the formula is always valid. Note

that the apparent absence of transposition is due to the fact

that two transpositions have been performed: one to reverse

the time, and the other to switch from the formalism of the

matrix population models to that of the Markov chains.

This formula had been found by Demetrius [5]. Further

exploration of the literature on Markov chains also made me

notice that this formula is very similar to that of what is

known as the ‘reverse Markov chain’ However, this technique

does not seem to have been used to markovize other matrices.

Interpretations

As already stated, I addressed the problem of generation time

with an evolutionary rather than demographic point of view,

and I was initially strongly influenced by the concepts of replicator and vehicle [11]. I wanted to define the generation

time as ‘the time a replicator spends in a vehicle’ and use

Markov chains to model the moves of a replicator from vehicle to vehicle. For a no-sex model, this aim is achieved by the

markovization technique. However, for a one-sex model, the

situation is a bit more complicated: indeed, we only take into

account replicators that stay into the sex of interest. But a

replicator is most likely to shift from vehicle of one sex to the

other. If the time spent in vehicles of both sexes is different,

then the computed quantity will not be ‘the time spent in a

vehicle’. It could still, however, be interpreted as ‘the time a

mitochondrial replicator spends in a vehicle’, given that for

many organisms paternal mitochondrial DNA is usually not

transmitted.

However, one need not embrace the ideas of replicator and

vehicle to interpret markovization: some people such as my

supervisor, Stéphane Legendre, might prefer to interpret it

as the average time spent before germ-line cells (rather than

replicators) experience reproduction. However, this interpre-

Stationary probability distribution

The stationary probability distribution of the previously defined Markov chain (eq. 4) is given by:

πi =

vi w i

vw

(5)

Proof:

pIt is obvious that the entries of π sum to one.

We now

check that π is a left eigenvector of P:

X

j

5

πj pij =

X vi wi aij wj

j

vw λwi

=

vi X

aij wj

λvw j

But since w P

is a right eigenvector of A associated with

eigenvalue λ, j aij wj = wi . Substituting into the previous

expression,

X

vi wi

= πi

πj pij =

vw

j

6

322.38

y

Hence the result.

0.872

30.17

0.750

3.448

0.023

Line graph

0.167

0.125

In this section we describe the second method we use to study

the generation time. This method enables the creation of an

object called the line graph. Although the line graph was already know in graph theory, the fact that it was mostly studied in a different context (non-directed, non-weighted graph)

and for different reasons (namely, per se) made it virtually

impossible to find the needed results, which had to be found

again. Simple though they are, these results and the corresponding proofs are therefore given here. To my knowledge,

this is the first time the line graph has been used in matrix

population models.

1

0.238

0.013

3

0.125

0.007

4

0.245

5

0.038

0.008

0.966

0.010

0.007

0.008

2

Motivation

Now that we have markovized the projection matrix of the

population, it is tempting to define the generation time as the

return time to one (or a subset) of its nodes – those that correspond to newborns. However, there are several cases in which

the newborns are ambiguously defined and are therefore not

an adequate choice: for instance, in certain models (e.g: sizeclasses, instar-classes), the new individuals can spend several

year in the newborn class before growing/maturing into another class (Figure 3.A). In that case, there is a self-loop on

the newborn node which as a survival component. Another

case which can be encountered is that of retrogression in sizeclass models (Figure 3.B). Finally, complex life cycle can also

lead to such situations, as exemplified by Figure 4.

Figure 4: A real-life example of a complex life cycle including survival into ‘newborn’ stages. The organism is

the teasel (Dipsacus sylvestris) and the classes are: 1.

dormant seed year 1, 2. dormant seed year 2, 3. small

rosette, 4. medium rosette, 5. large rosette, 6. flowering

plant. Taken from [10] (corrected from [12])

By contrast, reproductive arcs can be defined unambiguously, although there might be both a reproductive and a survival arc leading from a node to another (see the A = R + S

decomposition). It would therefore be desirable to be able to

work with arcs in the same way as we work with nodes. To

this end, we build a new graph whose nodes correspond to

the arcs of the original graph.

A

1

2

Construction

3

e as follow:

Let G be a graph. We build its line graph, noted G,

e Crucially,

• To each arc of G corresponds one node of G.

if there are several arcs of G going from one node to an

other, each of them will be associated with a distinct

e

node of G.

B

1

2

• A node a of Ge corresponding to arc (i → j) of G leads to

node b of Ge corresponding to arc (k → l) of G if and only

if j = k.

3

• The weight of an arc (a → b) of Ge is the weight of the

arc of G to which corresponds b.

Figure 3: Theoretical examples illustrating how survival

into ‘newborn’ classes might occur. The red arcs are reproductive arcs, the blue ones correspond to survival into the

newborn class. A, standard size-classified model – B, sizeclassified model with retrogression (an example of multigraph).

Figure 5 gives an example of a simple graph and its line

graph.

6

A

b

a

1. Connectedness:

Assuming G contains no isolated nodes,

b

c

d

c

d

G strongly connected ⇐⇒ Ge strongly connected

(9)

Proof:

e with corresponding arcs

pLet a and b be two nodes of G,

B

a

a

a

b

1

c

a

2

in G (i → j) and (k → l), respectively. If G is strongly

connected,

b

b

c

e

d

d

e

b

(i → j) → (m1 → m2 ) → ... → (mn−1 → mn ) → (k → l)

c

d

i.e. a

Conversely, if G is not strongly connected, ∃(j, k), j 6 k.

Since it is assumed that G has no isolated nodes, there exists

an arc containing j and an arc containing k (either as head

e

or tail). Let a and b be the corresponding nodes of G,

respectively. Then a 6 b. Indeed, if we had a

b, we

could explicit a path from a to b and proceed as before to

exhibit a path from j to k. As a result,

Properties

The following properties and their proofs can be written using the formalism of adjacency matrices. Although this might

seem the most natural option to someone used to matrix population models, it is much easier to reason in terms of graphs

– and the corresponding notations are much lighter. Indeed,

if we wanted to work with adjacency matrices and be as rigorous as possible without losing any generality, we would have

to introduce quite heavy notations to deal with the fact that

the graph we are working on can be a multigraph (i.e. can

contain more than one arc going from one node to another),

as will become apparent in paragraph the A = R + S decomposition. We therefore introduce some notations and give a

characterization of matrix products and left eigenvectors ‘in

terms of graphs’:

We note WG the weighting function of graph G, i.e. the

function which maps an arc to its weight. By a slight abuse

of notation, we also define the weighting function so as to map

a node of the line graph Ge to the weight of the corresponding

arc in G. We introduce the function t which maps an arc to

its tail. Finally, we note χ(a) the set of the in-arcs of a. With

these notations, the weighting function of Ge is defined by :

WGe(β) = WG (a)

b. As a result,

G strongly connected =⇒ Ge strongly connected

Figure 5: Constructions of line graphs. The letters indicate the weights of the arcs, and an arc and its corresponding node are the same color. A, a subgraph and its

corresponding subgraph in the line graph – B, a graph and

its line graph. This example shows that a multigraph is

transformed into a simple graph.

∀β ∈ χ(a),

j → m1 → ... → mn → k

It is then clear that

e

e

k, i.e. ∃(m1 , ..., mn ),

j

G not strongly connected =⇒ Ge not strongly connected

Hence the equivalence.

2. Period

G and Ge have the same period

y

(10)

Proof:

that

pTo show this, we show a stronger but obvious result:

e

each cycle in G has a corresponding cycle in G of same

length (and vice versa). This is because a cycle, which can

always be uniquely defined by its arcs, can also be uniquely

defined by its nodes when there is at most one arc going

e Thus, a

from one node to another, as is the case for G.

cycle of G described by its arcs defines a unique cycle of Ge

by considering the corresponding nodes, and vice-versa. As

the number of arcs of a cycle equals its number of nodes,

the cycles all have the same lengths. A fortiori, the GCD

of the lengths are identical.

y

3. Primitivity

(6)

G primitive ⇐⇒ Ge primitive

For any adjacency matrix G representing graph G, a product by a row vector x can be written:

X

(xG)b =

xt(a) WG (a)

(7)

(11)

Proof:

pThis is a direct consequence of (eq. 9) and (eq. 10)

y

4. Sums of the rows

Let Sums denote the set of the sums of the rows of the adjacency matrix of a graph. Then,

a∈χ(b)

Thus, the fact that x is a left eigenvector of G with respect

to the eigenvalue µ translates into:

X

xt(a) WG (a) = µxb

(8)

e

Sums(G) = Sums(G)

a∈χ(b)

7

But since x is a left eigenvector of G associated with respect

to eigenvalue µ, we have from (eq. 8)

X

xt(b) WG (b) = µ xa

Proof:

pThe weights of the out-arcs of node a in Ge are exactly those

of out-arcs of the head of the corresponding arc in G. Theree associated with node a

fore, the entries of the row of G

are those of the row of G associated with the head of a

(completed with zeros for columns associated with nodes to

which a doesn’t lead). Hence the result.

b∈χ(t(a))

Finally,

y

Corollary:

e

eG

x

= µ xa WG (a) = µ x

ea

a

e is a Markov matrix

G is a Markov matrix ⇐⇒ G

Corollary :

Let P be a Markov matrix with stationary probability distrie is a Markov matrix with stationary probability

bution π. P

distribution:

π

ea = πt(a) WG (a)

(14)

Proof:

pAssuming WG ≥ 0, this corresponds to the case where:

Sums(G) = {1, ..., 1}

y

Proof:

e is a Markov matrix from (eq. 12). From (eq. 11), P

e

P

is primitive. Thus, the Perron-Frobenius theorem applies

and the left eigenvector associated with eigenvalue λ = 1

whose entries sum to one can be interpreted as the statione Since π is associated with

ary probability distribution of P.

e from (eq. 13). Thus, all we have

eigenvalue λ = 1, so is π

e sum to one.

to do is check that the entries of π

X

X

X

X

π

ea =

πt(a) WP (a) =

πi

WP (b)

5. Eigen-elements

The question of eigen-elements is slightly more complex and

has not been fully explored during this intership. However,

the only result we need is the stationary distribution of the

line graph of a Markov chain, and this can be found easily.

More general results have been derived, but as they are of no

use for the study of the generation time, they are not given

here. They can, however, be found in appendix A.3.

Let µ be an eigenvalue of G, and x be a left eigenvector of

G associated with eigenvalue µ. Then:

p

a

• µ is an eigenvalue of Ge

e, whose entries are given by:

• row vector x

x

ea = xi WG (a),

where i is the tail of a

is a left eigenvector of Ge associated with µ

y

Which is the desired result.

(12)

a

i

b∈out(i)

Where out(i) is the set of the out-arcs of i. But as P represents a Markov chain,

X

WP (b) = 1

(13)

Proof:

b∈out(i)

pFrom (eq. 7), we have:

Therefore,

X

X

e

eG

x

=

x

et(β) WGe(β)

a

a

β∈χ(a)

As for every arc β pointing to a we have WGe(β) = WG (a)

e a:

(eq. 6), we can substitute both expressions in that of (e

xG)

X

e

eG

x

=

x

eb WG (a)

y

i

But we also have:

• P [X = i] = πi

h

i W (a) if i = t(a)

P

e

• P X=a|X=i =

0 else

b∈χ(t(a))

Substituting x

eb by xt(b) WG , we get

X

e

eG

x

=

xt(b) WG (b) WG (a)

a

πi = 1

i

The proof above isn’t satisfactory as it relies on (eq. 13)

which shows that the stated expression is correct without explaining where it comes from. Here is a less rigorous but more

intuitive justification: π is the stationary probability distribution of the nodes of P. We want the stationary probability

distribution on its arcs. Noting [X = i] event ‘being on node

e = a] event ‘using arc a’, we have:

i’ and [X

h

i X h

i

e =a =

e = a | X = i P [X = i]

P X

P X

b∈χ(t(a))

a

X

because π is a probability distribution.

Since (by construction) Ge is not a multigraph, for every arc

β of Ge pointing to node a, there is a unique node b of G so

that β = (b → a). And since the set of theses nodes for

every β pointing to a corresponds exactly to the set of the

arcs of G pointing to the tail of a,

X

X

function of t(β) =

function of b

β∈χ(a)

π

ea =

h

i

e = a = πt(a) WP (a) if i = t(a). This is how

As a result, P X

the expression of (eq. 13) was initially found.

b∈χ(t(a))

8

Generation time

instance, one must decide (depending on the particular population studied) whether to include vegetative reproduction

in the reproductive arcs.

This decomposition has been frequently used in matrix population models, because R is a convergent matrix which can

be interpreted as describing the moves of individuals from

class to class (by adding a ‘dead’ class, R can be transformed

into a transient Markov matrix which describes the fate of an

individual before being absorbed into the ‘dead’ state). The

fact that R is convergent (i.e. limk→∞ Rk = (0)) makes it

possible to compute expressions such the geometric series of

R and its derivatives, making this technique a powerful tool.

See Cochran and Ellner [3] or Cushing and Yican [4] for detailed example, or Caswell [10] for a more general discussion

of the technique.

However, we do not use this decomposition to this end.

We only use it to partition the nodes of the line graph into

‘reproductive’ and ‘survival’. This done, the generation time

can be defined as:

We now have all the mathematical tools we need to study

the generation time. All we have to do is to apply them to

the projection matrix of the population. The procedure is as

follow :

1. Identify the reproductive arcs

2. Markovize the life cycle

3. Build the line graph of the Markov chain

4. The generation time is then defined as the return time to

the nodes of the line graph corresponding to reproductive

arcs.

Interestingly enough, 2. and 3. need not be done in that

order as the markovized line graph of the life cycle is the

same as the line graph of the markovized life cycle (a proof of

this in the diagonalizable case can be found in appendix A.3).

By contrast, it is important to identify the reproductive arcs

before building the line graph because a reproductive arc and

a survival arc having the same tail and head will correspond

to disctinct nodes in the line graph.

At this point, the reader may wonder how to markovize a

multigraph, as (eq. 4) was given in terms of the adjacency

matrix of the graph. The general formula for markovization

is :

WA (a)wt(a)

(15)

WP (α) =

λwh(a)

T = min {t ≥ 1 | X(t) ∈ R}

(16)

Where X is the random variate of the Markov chain associated with the graph. In other word, the A = R + S decomposition, the markovization and the construction of the line

graph have enabled us to translate the initial definition of the

generation time into a mathematical definition which we can

compute. We now perform the calculation.

e

The transformed graph P

The definition of the transformed graph can be easy to apply

formally. Two examples of constructions can be found in the

appendix: that for the age-classified model (A.1) and that

for the standard size-classified model (A.2). Even when a

formal expression is hard to obtain, it is always possible to

get a numerical expression, as the numerical calculation of

the transformed graph is easy to program. About half of

my internship was spend writing a program performing this

calculation (as well as various others). The resulting code can

be found at [13].

e to find its stationHowever, one need not even compute P

ary probability distribution. Indeed, from (eq. 5), the stationary probability distribution π of the markovized graph P

i wi

. From (eq. 14), the stationary distribuis given by πi = vvw

tion of the associated line graph is given by π

ea = πt(a) WP (a).

Substituting WP (a) from (eq. 15),

Where α is the arc of markovized graph P corresponding to

arc a of A; h and t are the functions mapping an arc to its

head and tail, respectively; and w is the right (or left, if not

using the formalism of matrix population models) eigenvector

of A with respect to the dominant eigenvalue λ. This formula

reduces to (eq. 4) when A is not a multigraph. We did not

give it in the first place so as to avoid introducing to many

notations at once. We hope that after having familiarized

with reasoning on graphs rather than matrices in section Line

graph, the reader will find it obvious.

We now detail the steps described above.

The A = R + S decomposition

The arcs of the graph representing the life-cycle can be partitioned into two sets: R, which contains arcs associated with

the creation of new individuals, and S, whose elements correspond to the survival of individuals either in the same stage

or in a different one (growth, migration in multi-site models...). Even if there were several arcs of one type going from

one node to another, it would still be possible to aggregate

them into a single arc whose weight is the sum of the weights

of aggregated arcs, because we are only interested in whether

the arcs are reproductive or not.

Therefore, it is possible to decompose the adjacency matrix

A as A = R + S, where R and S are the partial adjacency

matrices associated with the sets of arcs R and S, respectively. Note that this does not correspond to decomposing

the graph into two subgraphs, as the nodes are shared.

Of course, the decomposition is dependent on the biological context and the definition of ‘reproduction’ adopted. For

π

ea =

=

vt(a) wt(a) WA (a)wh(a)

vw

λwt(a)

vt(a) wh(a) WA (a)

λvw

(17)

Stéphane Legendre noticed that from (eq. 2), this can also be

written:

π

ea = eλ (a)

(18)

It is very surprising that nobody has found this result before,

as it has important consequences for the interpretation of the

elasticities. Indeed, a direct interpretation of (eq. 18) is that

the elasticity of λ to aij is the frequency of traversal of the

arc (j → i).

9

Distribution of T

Homo sapiens

0.6

0.4

0.2

(19)

0.20

0.0

e SS

P

0.10

e SR

P

!

0.00

e RS

P

Density

e=

P

e RR

P

10

eRR contains the weights of the arcs

Where the submatrix P

going from R to R (similar notations hold for the other submatrices). Similarly, the stationary probability distribution

can be written:

50

4

6

8

10

12

Astrocaryum mexicanum

0.012

0.030

0.006

(20)

e R can be scaled so that it sums to one. We note the reπ

sulting vector ω. It is interpreted as a stationary probability

distribution on R, i.e. ω a = P [X = a | X ∈ R]

0

50 100

200

300

0

Generation time (years)

100

300

500

Generation time (years)

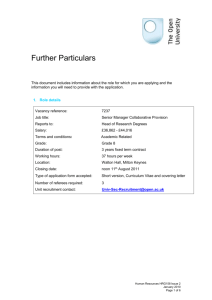

Figure 6: Distributions of T for various models. The

model for H. sapiens being age-classified with a maximum

cycle length of 10, its generation time has a finite support.

This is not the case for the other models. O. orca and

A. mexicanum are examples of standard size-class models, which typically exhibit a ‘negative binomial-shaped’

distribution. The model for D. sylvestris is less classic

and show an example of a generation time with infinite

support but which in practice takes only a few values. The

data used for these calculation can be found in [10]

The distribution of T is given by:

e RR e,

ωR P

t−2 P [T = t] =

ω

e

e RR

e SR e,

P

P

R PRS

2

0.000

40

0.015

eS

π

30

0.000

eR

π

20

Orcinus orca

Density

e=

π

Dipsacus sylvestris

0.30

e can be written:

The adjacency matrix of P

t=1

t≥2

(21)

where e is a column vector of the same length as ω full of

ones (it only sums the entries of the row vector by which it

is multiplied)

Mean of T

The distribution of T given in (eq. 21) is probably the most

complete information one can have about the generation time.

But it is hard to interpret and therefore of little interest in

itself (it can be used to compute other quantities though, see

section other results).

However, it is possible to compute the mean of T . Indeed,

combining (eq. 3) and (eq. 18), we have:

Proof:

pThe rigorous demonstration of (eq. 21) is simple but tedious. Therefore, we only give the main idea without detailing the calculus:

• T = 1 means that we have gone from a reproductive node to another. The probability of this is the

sum (hence e) of the probabilies of leaving a particular

node in R (hence ω R ) times the probability of using an

arc going from that node to another node of R (hence

e RR )

P

T = E [T ] = P

1

eλ (a)

(22)

a∈R

This is, with (eq. 18), the main result of this work. However,

this expression is only valid in the simple graph case. But

one can also use (eq. 17) to compute T. By noting that ∀a ∈

R, WA = rt(a)h(a) (where r comes from A = R + S), we have:

• T = t ≥ 2 means that we have left R (one projection interval), then spent t − 2 projection intervals in S

before returning to R (one projection interval) the vectors and matrices interpretation is as straightforward

as that of the case T = 1.

T=

λvw

vRw

This expression in valid in the general case.

Other results

The implications of the previous results have not yet been

fully explored. In this section, we give two results which might

benefit from further investigation.

1. Lebreton’s formula

This paragraph is due to Stéphane Legendre as I had no

y

The formula given in (eq. 21) makes it possible to compute

the distribution of T numerically. Some examples are given

in Figure 6.

10

Existing measures of the generation time

knowledge of Lebreton’s formula for age-classified models.

Here we generalize this formula to any model described by

a simple graph: Let c be a parameter multiplying the weights

of all reproductive arcs. Then:

eλ (c) =

The generation time is intuitively thought of as ‘the time

between two generations’. However, this definition might be

interpreted in more that one way. The demographist Coale is

generally credited for clarifying this definition [2]. He defined

three measures of the generation time:

1

T

Similarly, if d is a parameter multiplying the non-reproductive

arcs:

1

eλ (d) = 1 −

T

Proof:

• The difference in age between mothers and

daughters in the population. When the population

is at its stable age distribution, this is the age of mothers

at birth. Of course, it is also possible to define another

measure as ‘the mean difference in age between fathers

and sons’. But, as most models are female-based, this is

of less interest.

pSince c multiplies the weights of all reproductive arcs, we

have aij = c rij + sij . The fact that A is a not a multigraph

translates into rij 6= 0 =⇒ sij = 0 and rij 6= 0 =⇒ sij =

0 Hence,

(

∂λ ∂aij

∂λ ∂aij

if rij 6= 0

aij c

=

∂aij ∂c

0

else

• The age of mothers at birth in a given cohort.

This definition is very similar to the previous one, but

the population need not be at its stable age distribution.

• The time it takes for the population to grow by a

factor of its net reproductive rate. The net reproductive rate is the mean of the number of offspring an

individual is expected to produce during its life. Thus,

given the net reproductive rate, it is possible to map the

population from one ‘generation’ to another, rather than

from one time interval to another. The time scale of this

process is obtained by taking the ratio of the logarithm

of the growth rate by that of the net reproductive rate.

This can be interpreted as a measure of the generation

time.

As a result,

X

c X ∂λ ∂aij

c X ∂λ aij

c ∂λ

=

=

=

eλ (a)

λ ∂c

λ i,j ∂aij ∂c

λ

∂aij c

rij 6=0

a∈R

From (eq. 22),

1

T

The corresponding identity for eλ (d) can be derived by using the fact that the elasticities sum to one (this classic

result can be easily derived from the fact that as λ is a homogenous function ofP

degree one of the aij ’s, it follows from

∂λ

= λ)

Euler’s formula that

aij ∂a

ij

eλ (c) =

As of today, these are still the three common measures of the

generation time. The first and the second ones can be viewed

as formalizing the concept of ‘distance between generations’,

whereas the third is more similar to a ‘renewal rate’ of the

population.

For matrix population models, mathematical expressions

are available for each of these definitions. The mean age of

mothers at birth as been known for age-classified models since

Leslie [16]:

m

X

T=

iφi λ−i

y

2. Variance, entropy...

(eq. 21) makes it possible to perform numerical computation

of other values characterizing T , such as its variance, etc.

Although in some cases it is possible to get a formal explicit

expression of some of these quantities (see, for instance A.1

and A.2), the resulting expressions are rather obscure and

hard to interpret (but this might simply be due to a failure

to simplify them).

One of these quantities is the Shannon Entropy [14] of T ,

defined as:

X

S (T ) = −

P [T = t] log (P [T = t])

(23)

i=1

Where m is the number of age-classes of the model, and φi is

the net fertility of age-class i, i.e. the product of the survival

to class i times the fertility of class i.

However, a formula for the general case was unknown before

1992, until Cochran and Eller’s paper [3]. Their expression of

the mean age of mothers at birth is given by:

t

This quantity is intriguing because it as the same interpretation as the population entropy defined by Demetrius [15],

but is different from it for non age-classified models. We will

discuss this further in the discussion.

Pm

yi wi γi

Pi=1

m

i=1 wi γi

Discussion

Where m is the number of classes of the model, w is the stable

stage distribution, y is the distribution of ages in classes and

γ is the fecundity in ‘newborn equivalents’ of the classes. y

and γ are given by:

In this section, we first compare our expression of the generation time to the existing ones. We then discuss ways in which

this work could be extended.

11

Pm

(I − λ−1 S)−2

((I −

λ−1 S)−1 )

b

ij j

ij bj

(Rw)j

, where bj = Pm

i=1 (Rw)i

Comparison of the three measures of

generation time

300

yi =

j=1

Pm

j=1

and

250

●

●

100

(v, R and S having the same meaning as before).

150

200

(vR)i

, where vref is a newborn stage of reference

vref

Years

γi =

mu

tau

T

50

The interpretation of this quantity being the same as that

we computed, we expect both expressions to be identical.

And indeed, although this hasn’t been shown analytically,

numerical computations show that it is the case. As a result, we have found a much simpler expression of an already

known quantity. Our derivation is very different from that of

Cochran and Ellner. It is also more general at it envisions

the generation time as a random variate and provide its full

distribution. Moreover, our method is independent of any biological context and might be applied to other models than

matrix population models, as we will discuss in the next section. However, Cochran and Ellner’s method enabled them to

derive an expression for the mean age of mothers at birth in a

given cohort, as well as the time it take the population to grow

by a factor of its net reproductive rate (although with respect

to this quantity, both Cushin and Yican’s expression [4] and

its derivation are more simple and intuitive).

●

●

0

●

a

O.

●

●

orc

A.

xic

me

ii

s

is

ule

um

siz

ien

str

ca

an

as

ve

ap

l

a

g

s

y

.

a

.

s

C

H

G.

D.

Figure 7: Comparison of the three measures of the generation time for six organisms. mu is the age of ‘mothers’

at birth in a newborn, tau is the time it takes for the

population to grow by a factor of its net reproductive rate,

and T is the quantity which we calculated and showed to

equal the mean age of mothers at birth in the stable-stage

population. This illustrates the fact that the three values

can be quite different and that Coale’s relation does not

hold in the general case.

At this point, one might wonder how these three quantities

relate to one another, or whether one of them is superior in

anyway to the others, or might be better suited to a particular

use.

Perspectives

Equation 18 and the new interpretation of elasticities it leads

to are very intriguing. Although this is only valid in the

simple graph case, this is not a real limitation as far as matrix population models are concerned since multigraphs are

exceptional in this field (but this might in part be due to a

bias in the construction of projection matrices, as it is easy to

imagine situations best described by multigraphs). It is therefore very surprising that this result had never been found, as

elasticities and their interpretation have been a major subject of study [17,18]. As a result, it remains to be determined

whether this new interpretation of elasticities as the frequency

of use of arcs (rather than the classic, more indirect ‘relative

contribution to the growth rate’) can lead to novel interpretations of existing results.

Another point which will have to be studied further is the

question of the entropy of T . Demetrius [1, 15] defined a

quantity he calls population entropy as

Z ∞

S=−

p(x) log p(x) dx

As of how the quantities relate to one another, this remains

to be explored. However, Coale says that in humans, they are

very similar, and that the time it takes for the population to

grow by a factor of its reproductive rate is approximately the

mean age of mothers of a given cohort at birth and the average age of mothers at birth at the stable stage distribution [2].

Caswell backs up this assertion [10], but we found this assertion to be erroneous in the general case: the discrepancies

between the values can be huge, and the alleged relation does

not hold, as show by Figure 7.

It is harder to say that one of these quantity is better suited

for a particular use than the others. However, the mean age

of mothers at birth in a given cohort has the advantage that

it does not rely on the population being at its stable stage

distribution, a very strong and often questionable hypothesis

– But it is also more challenging to interpret. As of the mean

age of mother at birth in the population and the time it takes

for the population to grow by a factor of its net reproductive

rate, although we have no definitive argument nor experimental support for this, the way they were derived suggests that

the former might be more adapted when studying evolution,

and the latter better suited from a purely demographical perspective.

0

where p is the probability distribution of the age of mothers

at birth – that is, of the random variate we have called T . As

a result, it seems natural to adapt this definition to matrix

population models by using the Shannon entropy (the discrete

analogue of the differential entropy) of T and define S as

12

we did in (eq. 23). However, for matrix population models,

population entropy has been defined as

X

S = −T

πi pij log pij

be used to test for allometric relations in ecosystems in an

effort to scale-up the metabolic theory of ecology to the level

of the ecosystem.

i,j

The S = log(T) relation

(P being the previously defined Markov chain and π its stationary probability distribution).

Although it is easy to show that both definitions coincide

for age-classified models (see appendix A.1), numerical computations show that they are different for other models. It is

therefore interesting to compare both definitions, but this is

outside the scope of this document: population entropy relies

on far more complex mathematical concepts than those used

here, and there is a vast literature about its biological significance [1, 15, 19]. However, it is worth noting a few points:

150

100

0

• Many studies restricted themselves to the age-classified

case – as a consequence, many result about population

entropy apply to the Shannon entropy of the generation

time.

●

●

50

Population entropy: S = HT

●

● ●●●

●● ●

●

●●●

●● ●

●●●●

●●

● ●●

●●

● ●●● ●●

●

●

●

● ● ●

●●

●

●●

●

●

● ●

●

● ●

●

●

3

4

● ●

● ●

●●

●

●●

●

●●

●●

●

●●

●

●

●

●

●

●

●

●

●

1

• A 2009 paper [1] gave theoretical as well as experimental

support for the relation S = a log T + b. But this relation

is best observed when using the Shannon entropy of the

generation time, as shown by Figure 8.

●

●●

●

2

Shannon entropy of T

• The interpretation as the uncertainty over the genealogies of individuals also holds for the Shannon entropy of

the generation time, and is arguably more straightforward. Moreover, the Shannon entropy of the generation

time seems a more natural translation of the population