
Review of elementary linear algebra: part 2

Linear transformations and matrix multiplication

A linear transformation T : V → W is a function that respects linear combinations:

T (c1 v1 + · · · + cn vn ) = c1 T (v1 ) + · · · + cn T (vn ),

where the vi ∈ V and the ci are scalars. Think of differentiation and integration: they are both linear.

If V and W are finite dimensional, then T can be represented by a matrix. For this representation

we must choose bases, say v1 , . . . , vn for V and w1 , . . . , wm for W . Given this choice we can write each

T (vj ) as a linear combination of the wi :

\[ T(v_j) = \sum_{i=1}^{m} a_{ij} w_i . \]

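Set M to be the m × n matrix [aij ]. If v = x1 v1 + · · · + xn vn and T (v) = y1 w1 + · · · + ym wm then we can

compute the coefficients yi using multiplication by M :

\[
\begin{pmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & & \vdots \\ a_{m1} & \cdots & a_{mn} \end{pmatrix}
\begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix}
=
\begin{pmatrix} y_1 \\ \vdots \\ y_m \end{pmatrix}.
\]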

Note that each column of M records the coordinates of the image under T of the corresponding basis

vector vj .
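As a small illustration of "each column records the image of a basis vector", here is a sketch that builds the matrix of differentiation on polynomials of degree at most 3. The choice of example, the monomial bases 1, x, x^2, x^3 (for V) and 1, x, x^2 (for W), and the helper names `differentiate`, `matrix_of`, and `mat_vec` are all assumptions made for this illustration, not part of the notes.

```python
def differentiate(coeffs):
    """Derivative of c0 + c1 x + c2 x^2 + ... given as the list [c0, c1, c2, ...]."""
    return [k * coeffs[k] for k in range(1, len(coeffs))]

def matrix_of(T, n, m):
    """m x n matrix of T: column j holds the coordinates of T(v_j)."""
    columns = []
    for j in range(n):
        basis_vector = [1 if k == j else 0 for k in range(n)]
        image = T(basis_vector)
        image = image + [0] * (m - len(image))  # pad to m coordinates
        columns.append(image)
    # turn the list of columns into a list of rows
    return [[columns[j][i] for j in range(n)] for i in range(m)]

def mat_vec(M, x):
    """Matrix-vector product M x."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]

M = matrix_of(differentiate, 4, 3)
p = [5, -1, 4, 2]            # coordinates of 5 - x + 4x^2 + 2x^3

print(M)                     # [[0, 1, 0, 0], [0, 0, 2, 0], [0, 0, 0, 3]]
print(mat_vec(M, p))         # [-1, 8, 6], i.e. -1 + 8x + 6x^2
```

Multiplying the coordinate vector of p by M gives the same result as differentiating p directly, which is the statement M [v] = [T (v)] in coordinates.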

Let’s summarize this in a somewhat abstract formulation. If B is the basis for V and C is the basis

for W then we write [v]B and [w]C for the vectors of coordinates with respect to these bases, and if

[T ]B,C denotes the matrix representation of T with respect to these bases, then

[T ]B,C [v]B = [T (v)]C .

We use this formulation mostly to keep track of the dependence on bases. If we change bases (as we will

want to do in many situations) then the matrix representation and the coordinate vectors change. More

on change of basis anon.

Here are two conventions concerning matrix representations. If V = W and B = C we write [T ]B

rather than [T ]B,C . Also, if we do not specify any basis then we are implicitly using the standard

basis:

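\[
e_1 = \begin{pmatrix} 1 \\ 0 \\ 0 \\ \vdots \end{pmatrix}, \quad
e_2 = \begin{pmatrix} 0 \\ 1 \\ 0 \\ \vdots \end{pmatrix}, \quad
e_3 = \begin{pmatrix} 0 \\ 0 \\ 1 \\ \vdots \end{pmatrix}, \quad \dots
\]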

Kernel, image, and nullity

Two of the most important objects associated with a linear transformation are its image (or range):

T (V ) = im(T ) = {T (v) | v ∈ V } ⊂ W,

and its kernel:

ker(T ) = {v ∈ V | T (v) = 0} ⊂ V.

Let me emphasize that im(T ) is a subspace of W , while ker(T ) is a subspace of V . The subspace im(T )

is an abstraction of the column space for a matrix. The matrix version of the notion of kernel is called

the nullspace:

nullspace of M = {v | M v = 0}.

The dimension of the kernel or the nullspace (as appropriate) is called the nullity.

The fundamental fact about this parameter is that

rank + nullity = dim(V ),

or

number of lead variables + number of free variables = number of columns.
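The count of lead and free variables can be checked on a concrete matrix. The following is a minimal sketch, assuming exact rational arithmetic via `fractions.Fraction`; the function names `rref` and `nullspace_basis` and the sample matrix are hypothetical choices for illustration. It row-reduces the matrix, records the pivot (lead) columns, and builds one nullspace vector per free column.

```python
from fractions import Fraction

def rref(M):
    """Return the reduced row echelon form of M and the list of pivot columns."""
    A = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(A), len(A[0])
    pivots = []
    r = 0  # index of the next pivot row
    for c in range(cols):
        if r == rows:
            break
        pivot = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot here: column c gives a free variable
        A[r], A[pivot] = A[pivot], A[r]
        lead = A[r][c]
        A[r] = [a / lead for a in A[r]]
        for i in range(rows):
            if i != r and A[i][c] != 0:
                factor = A[i][c]
                A[i] = [a - factor * b for a, b in zip(A[i], A[r])]
        pivots.append(c)
        r += 1
    return A, pivots

def nullspace_basis(M):
    """One basis vector of the nullspace for each free column."""
    R, pivots = rref(M)
    cols = len(M[0])
    free = [c for c in range(cols) if c not in pivots]
    basis = []
    for f in free:
        v = [Fraction(0)] * cols
        v[f] = Fraction(1)
        for row_index, p in enumerate(pivots):
            v[p] = -R[row_index][f]
        basis.append(v)
    return basis

M = [[1, 2, 3, 4],
     [2, 4, 6, 8],
     [1, 0, 1, 0]]
R, pivots = rref(M)
basis = nullspace_basis(M)
print(len(pivots), len(basis), len(M[0]))   # 2 2 4
```

Here the rank is the number of pivot columns, the nullity is the number of basis vectors returned, and the two add up to the number of columns.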

1

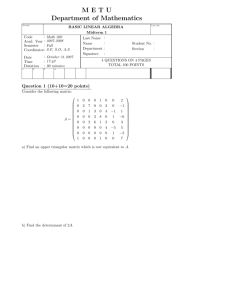

Elementary matrices and LDU factorization

Let’s return to our elementary row operations, and examine their matrix representations:

Type I: multiply row i by r. The matrix of this operation is diagonal, with i-th diagonal entry

equal to r, and all other diagonal entries equal to 1. Note that this is the result of performing the row

operation on the identity matrix. All of the other elementary matrices are found in the same way.

Type II: transpose (swap) rows i and j. The matrix of this operation is the same as the identity

matrix except that the i-th and j-th rows have been swapped. More generally, a permutation matrix

is one obtained by permuting the rows of the identity matrix.

Type III: add r times row i to row j. Again this looks just like the identity matrix, except that there

is the value r in the ji-entry.
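The three types can be sketched in code by applying each row operation to the identity matrix and then multiplying on the left. The function names below are hypothetical, and rows are indexed from 0 (unlike the 1-based indexing in the text).

```python
def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def scale_row(n, i, r):
    """Type I: multiply row i by r."""
    E = identity(n)
    E[i][i] = r
    return E

def swap_rows(n, i, j):
    """Type II: swap rows i and j."""
    E = identity(n)
    E[i], E[j] = E[j], E[i]
    return E

def add_multiple(n, i, j, r):
    """Type III: add r times row i to row j (r goes in the ji-entry)."""
    E = identity(n)
    E[j][i] = r
    return E

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

M = [[1, 2],
     [3, 4],
     [5, 6]]

print(mat_mul(scale_row(3, 1, 10), M))        # [[1, 2], [30, 40], [5, 6]]
print(mat_mul(swap_rows(3, 0, 2), M))         # [[5, 6], [3, 4], [1, 2]]
print(mat_mul(add_multiple(3, 0, 2, -5), M))  # [[1, 2], [3, 4], [0, -4]]
```

In each case, left multiplication by the elementary matrix performs the corresponding row operation on M.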

Now to reduce a matrix M to echelon form U we multiply by a sequence of elementary matrices:

Ek · · · E2 E1 M = U.

Usually we do not require permutation matrices, and we can leave factoring out the leading entry

from each nonzero row until the last step:

Lk · · · L2 L1 M = DU,

where the Li are of type III, and D is diagonal. Moreover, usually we can work systematically in M ,

clearing columns left-to-right and top-to-bottom. This is why we used the letters “L” in the formula

above: when j > i the elementary matrix which adds r times row i to row j is a lower triangular

matrix — that is, all of the entries above the main diagonal equal 0.

Now the product and the inverses of lower triangular matrices are lower triangular, hence, if we never

have to permute rows, then we can factor

\[ M = LDU = (L_1^{-1} L_2^{-1} \cdots L_k^{-1}) D U . \]

If we do have to permute rows then it turns out that we can perform all of the permutations at the

beginning:

P M = LDU.
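For the case where no row permutations are needed, the factorization can be sketched as below. This is a minimal illustration, assuming a square matrix whose pivots are all nonzero; the function name `ldu` and the sample matrix are hypothetical. It relies on the fact that the inverse of "add −r times row i to row j" is "add r times row i to row j", so the multipliers can be written directly into L.

```python
from fractions import Fraction

def ldu(M):
    """Factor a square matrix as M = L D U, assuming no row swaps are needed."""
    n = len(M)
    A = [[Fraction(x) for x in row] for row in M]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for i in range(n):
        if A[i][i] == 0:
            raise ValueError("zero pivot: a row permutation would be required")
        for j in range(i + 1, n):
            r = A[j][i] / A[i][i]
            # type III operation: add -r times row i to row j
            A[j] = [a - r * b for a, b in zip(A[j], A[i])]
            L[j][i] = r  # the inverse operation contributes +r to L
    # factor the leading entry out of each row of the echelon form
    D = [[A[i][i] if i == j else Fraction(0) for j in range(n)] for i in range(n)]
    U = [[A[i][j] / A[i][i] for j in range(n)] for i in range(n)]
    return L, D, U

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

M = [[2, 1, 1],
     [4, 3, 3],
     [8, 7, 9]]
L, D, U = ldu(M)
assert mat_mul(mat_mul(L, D), U) == M
```

For this sample matrix, L has the multipliers 2, 4, 3 below its diagonal of 1s, D has diagonal entries 2, 1, 2, and U has 1s on its diagonal.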

This factorization leads to a simple algorithm for solving a system M x = b, since x = U^{-1} D^{-1} L^{-1} b. Here we take M to be m × m and invertible, so that L and U both have 1s on the diagonal:

1. Solve Lz = b by forward substitution:

\[ \text{for } i = 1, 2, \dots, m: \quad z_i = b_i - \sum_{j=1}^{i-1} L_{ij} z_j . \]

2. Solve Dy = z simply by scaling each zi (D is diagonal!):

\[ \text{for } i = 1, 2, \dots, m: \quad y_i = D_{ii}^{-1} z_i . \]

3. Solve U x = y by back substitution:

\[ \text{for } i = m, m-1, \dots, 1: \quad x_i = y_i - \sum_{j=i+1}^{m} U_{ij} x_j . \]
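The three steps above can be sketched as follows, assuming M is square and invertible so that L and U have 1s on the diagonal. Each substitution uses the entries of z (respectively x) that have already been computed. The function name `solve_ldu` and the sample factors are hypothetical; the factors shown multiply out to the matrix with rows (2, 1, 1), (4, 3, 3), (8, 7, 9).

```python
from fractions import Fraction

def solve_ldu(L, D, U, b):
    """Solve (L D U) x = b for unit-triangular L, U and diagonal D."""
    n = len(b)
    # 1. forward substitution: L z = b
    z = [Fraction(0)] * n
    for i in range(n):
        z[i] = Fraction(b[i]) - sum(L[i][j] * z[j] for j in range(i))
    # 2. scaling: D y = z
    y = [z[i] / Fraction(D[i][i]) for i in range(n)]
    # 3. back substitution: U x = y
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        x[i] = y[i] - sum(U[i][j] * x[j] for j in range(i + 1, n))
    return x

half = Fraction(1, 2)
L = [[1, 0, 0], [2, 1, 0], [4, 3, 1]]
D = [[2, 0, 0], [0, 1, 0], [0, 0, 2]]
U = [[1, half, half], [0, 1, 1], [0, 0, 1]]
b = [4, 10, 24]

x = solve_ldu(L, D, U, b)
assert x == [1, 1, 1]
```

Each step costs on the order of m^2 operations or fewer, which is why the factorization is worth computing once when several right-hand sides b must be solved for.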

The change of basis formula

If we have a linear transformation T and two different bases B and E then we have two different matrix

representations, M and N . How are these matrices related? If S is the change of basis matrix from

B to E — that is, the matrix such that S[v]B = [v]E — then SM = N S.

\[
\begin{array}{ccc}
x = [v]_B & \longrightarrow & Mx = [T(v)]_B \\
\downarrow & & \downarrow \\
y = Sx = [v]_E & \longrightarrow & NSx = SMx = [T(v)]_E
\end{array}
\]


Since S is invertible we also have

\[ M = S^{-1} N S, \qquad N = S M S^{-1} . \]

In this case we say that M and N are similar matrices.

Let’s look at an example. Let E be the standard basis in R2 :

\[ E = \{e_1, e_2\} = \left\{ \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \begin{pmatrix} 0 \\ 1 \end{pmatrix} \right\} . \]

Thus, the coordinates of a column vector in R2 are its usual coordinates:

\[ \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = x_1 e_1 + x_2 e_2 . \]

For a second basis let

\[ B = \{b_1, b_2\} = \left\{ \begin{pmatrix} 1 \\ 2 \end{pmatrix}, \begin{pmatrix} 1 \\ -1 \end{pmatrix} \right\} . \]

Now let T : R2 → R2 be the linear transformation such that

\[ T(e_1) = \begin{pmatrix} 3 \\ -1 \end{pmatrix}, \qquad T(e_2) = \begin{pmatrix} 0 \\ 2 \end{pmatrix} . \]

From this information we can easily write down the matrix of T in the standard basis:

\[ N = [T]_E = \begin{pmatrix} 3 & 0 \\ -1 & 2 \end{pmatrix} . \]

We can also easily write down S, the change of basis matrix from B to E:

\[ S = \begin{pmatrix} 1 & 1 \\ 2 & -1 \end{pmatrix} . \]

Since it is not hard to compute S^{-1}, the change of basis matrix from E to B, we could find M = [T ]B by

computing S^{-1} N S.

However, this is not so efficient in more than 2 dimensions, so instead we find M by Gauss-Jordan

reduction. Let’s first recall what our goal is. If M = [aij ] then

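\[
T(b_1) = \begin{pmatrix} 3 \\ -1 \end{pmatrix} + 2 \begin{pmatrix} 0 \\ 2 \end{pmatrix} = \begin{pmatrix} 3 \\ 3 \end{pmatrix} = a_{11} b_1 + a_{21} b_2 ,
\]

\[
T(b_2) = \begin{pmatrix} 3 \\ -1 \end{pmatrix} - \begin{pmatrix} 0 \\ 2 \end{pmatrix} = \begin{pmatrix} 3 \\ -3 \end{pmatrix} = a_{12} b_1 + a_{22} b_2 .
\]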

Thus, we need to solve the system

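\[
\begin{pmatrix} 1 & 1 \\ 2 & -1 \end{pmatrix}
\begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}
=
\begin{pmatrix} 3 & 3 \\ 3 & -3 \end{pmatrix} .
\]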

This we do by a sequence of elementary row operations:

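\[
\left(\begin{array}{rr|rr} 1 & 1 & 3 & 3 \\ 2 & -1 & 3 & -3 \end{array}\right)
\to
\left(\begin{array}{rr|rr} 1 & 1 & 3 & 3 \\ 0 & -3 & -3 & -9 \end{array}\right)
\to
\left(\begin{array}{rr|rr} 1 & 1 & 3 & 3 \\ 0 & 1 & 1 & 3 \end{array}\right)
\to
\left(\begin{array}{rr|rr} 1 & 0 & 2 & 0 \\ 0 & 1 & 1 & 3 \end{array}\right)
\]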

That is,

\[ M = S^{-1} N S = \begin{pmatrix} 2 & 0 \\ 1 & 3 \end{pmatrix} , \]

or

T (b1 ) = 2b1 + b2 , T (b2 ) = 3b2 .

(Check this!)
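One way to carry out the check is numerically. The sketch below uses N = [T ]E with columns (3, −1) and (0, 2), and S with columns b1 = (1, 2) and b2 = (1, −1); the helper names `mat_mul` and `inverse_2x2` are hypothetical. It verifies both M = S^{-1} N S and the equivalent relation SM = N S.

```python
from fractions import Fraction

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def inverse_2x2(A):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

N = [[3, 0], [-1, 2]]   # [T]_E: columns are T(e1), T(e2)
S = [[1, 1], [2, -1]]   # columns are b1, b2 in standard coordinates

M = mat_mul(mat_mul(inverse_2x2(S), N), S)
assert M == [[2, 0], [1, 3]]           # columns: T(b1) = 2 b1 + b2, T(b2) = 3 b2
assert mat_mul(S, M) == mat_mul(N, S)  # S M = N S
```

The columns of S M (equivalently N S) are T (b1 ) = (3, 3) and T (b2 ) = (3, −3) in standard coordinates, matching the computation in the example.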


Homework problems: due Friday, 20 January

6 Let E, B, and T be as in the example above, and set F = {e2 , b2 }

a Show that F is a basis.

b Find D = [T ]F .

c Find Q and R such that N = Q D Q^{-1} and M = R D R^{-1}.

7 Let U be the 5 × 5 matrix such that

\[ U_{ij} = \begin{cases} 1, & \text{if } i \le j; \\ 0, & \text{if } i > j. \end{cases} \]

So, U is upper triangular.

a Explain why U has full rank. What is the column space of U ? What is its nullity?

b Let B be the columns of U . Find the change of basis matrix from B to E, the standard basis.

8 For each of the following parameters m, n, r, ν, either find an m × n matrix of rank r and nullity

ν, or else explain why no such matrix exists.

a m = 5, n = 3, r = 4, ν = 1.

b m = 5, n = 4, r = 3, ν = 1.

c m = 5, n = 4, r = 1, ν = 3.

d m = 3, n = 5, r = 3, ν = 2.

9 If i > j let Lij be the 4 × 4 elementary matrix of type III which adds j − i + (j − 1)(j − 8)/2 times

row j to row i.

a Find L_{ij}^{-1}.

b Compute L_{21}^{-1} L_{31}^{-1} L_{41}^{-1} L_{32}^{-1} L_{42}^{-1} L_{43}^{-1}.

10 Let U be the matrix from problem 7, let L be its transpose, let M = LU , and finally let b be the

vector

\[ b = \begin{pmatrix} 1 \\ 2 \\ 3 \\ 4 \\ 5 \end{pmatrix} . \]

Solve the system M x = b by the procedure described above, using forward and backwards substitution.
