
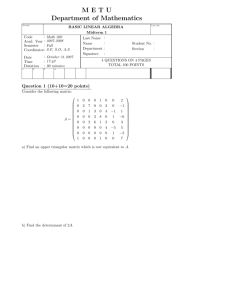

Much like the talking points that were published for this week, this week’s summary will probably be a little light on substance, since we spent a good (and much-needed) portion of class reviewing last week’s talking points, which dealt primarily with linear algebra. It was quite interesting to see how much the solution of a linear system can change when the coefficient matrix $A$ is a Hilbert matrix. What I mean by this is that a minor change to the original Hilbert matrix produces a significant change in the solution vector.

Next, we went over what it means for a set of vectors to be linearly independent: $\vec{x}$, $\vec{y}$, and $\vec{z}$ are linearly independent if the only constants $a$, $b$, and $c$ satisfying $a\vec{x} + b\vec{y} + c\vec{z} = \vec{0}$ are $a = b = c = 0$. Otherwise, the set of vectors is linearly dependent.

We then reviewed some computational methods for finding the determinant of a matrix (namely 2x2 and 3x3 matrices). Another method we discussed, which I had never used before in Linear Algebra, is to turn the original matrix into a triangular matrix using elementary row operations; the determinant of a triangular matrix is simply the product of its diagonal entries. I was more familiar with using elementary row operations to find the inverse of a matrix: augment the original matrix with the identity matrix and work towards reduced row echelon form. In class, we came up with a neat little chart of what each elementary row operation does to the determinant:

| Elementary row operation | Effect on the determinant |
|---|---|
| Interchange two rows | Changes sign |
| Multiply a row by a constant | Multiplies the determinant by that constant |
| Add a multiple of one row to another row | No change |

When discussing inverse matrices, you showed us a way to “stack” two matrices together to perform the multiplication operation. This method was unfamiliar to me, and although I can follow the operation when I see it in action, I am still a little uneasy using it at this point. This is merely a comfort-level thing that I need to work on!
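To see the Hilbert-matrix sensitivity concretely, here is a minimal sketch in plain Python. It uses exact `Fraction` arithmetic so that rounding error cannot be blamed for the change. The helper names `hilbert` and `solve`, the 5x5 size, and the size of the perturbation are my own illustrative choices, not values from class.

```python
from fractions import Fraction

def hilbert(n):
    """Return the n x n Hilbert matrix H[i][j] = 1/(i+j+1) as exact fractions."""
    return [[Fraction(1, i + j + 1) for j in range(n)] for i in range(n)]

def solve(A, b):
    """Solve A x = b by Gaussian elimination with exact Fraction arithmetic."""
    n = len(A)
    # Build the augmented matrix [A | b] so the inputs are not modified.
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into place.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate every entry below the pivot.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    # Back substitution on the upper-triangular system.
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

n = 5
H = hilbert(n)
# Right-hand side chosen so that the exact solution is the all-ones vector.
b = [sum(row) for row in H]
x = solve(H, b)

# Perturb one entry of H by 0.00001 (about 0.009% of H[4][4] = 1/9).
H_perturbed = [row[:] for row in H]
H_perturbed[4][4] += Fraction(1, 100_000)
x_perturbed = solve(H_perturbed, b)

print([float(v) for v in x])
print([round(float(v), 4) for v in x_perturbed])
```

With these choices, changing the bottom-right entry `H[4][4]` by only $10^{-5}$ moves the fourth component of the solution from 1 to roughly 1.61, which is exactly the kind of outsized change described above.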
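The triangular-matrix approach to determinants can be sketched in a few lines of Python. This is my own illustration, not code from class; the function name `det_by_row_reduction` and the example matrices are hypothetical. It only uses row interchanges (which flip the sign) and adding a multiple of one row to another (which changes nothing), then multiplies the diagonal of the triangular result. Because a square matrix has a nonzero determinant exactly when its rows are linearly independent, the same function also doubles as an independence check.

```python
from fractions import Fraction

def det_by_row_reduction(A):
    """Determinant via reduction to upper-triangular form.

    Uses only two elementary row operations:
    - interchanging two rows flips the sign of the determinant;
    - adding a multiple of one row to another leaves it unchanged.
    The determinant of the triangular result is the product of its diagonal.
    """
    M = [[Fraction(v) for v in row] for row in A]
    n = len(M)
    sign = 1
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no nonzero pivot: the matrix is singular
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            sign = -sign  # row interchange: determinant changes sign
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            # Add a multiple of the pivot row: determinant unchanged.
            M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    product = Fraction(1)
    for i in range(n):
        product *= M[i][i]
    return sign * product

A = [[2, 1, 3],
     [0, 4, 1],
     [1, 2, 0]]
print(det_by_row_reduction(A))  # cofactor expansion also gives -15

# Checking the chart of row-operation effects on the same matrix:
swapped = [A[1], A[0], A[2]]                                 # sign flips
scaled = [[3 * v for v in A[0]], A[1], A[2]]                 # multiplied by 3
added = [A[0], [a + 2 * c for a, c in zip(A[1], A[0])], A[2]]  # unchanged
print(det_by_row_reduction(swapped), det_by_row_reduction(scaled),
      det_by_row_reduction(added))

# Linearly dependent rows (row 3 = 2 * row 2 - row 1) give a zero determinant.
print(det_by_row_reduction([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```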
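The familiar inverse method (augment with the identity, then row-reduce to reduced row echelon form) looks like this as a minimal Python sketch. It is my own illustration under the assumption of exact `Fraction` arithmetic; the name `inverse_gauss_jordan` and the sample matrix are hypothetical.

```python
from fractions import Fraction

def inverse_gauss_jordan(A):
    """Invert A by row-reducing the augmented matrix [A | I] to [I | A^-1]."""
    n = len(A)
    # Augment each row of A with the matching row of the identity matrix.
    M = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular and has no inverse")
        M[col], M[pivot] = M[pivot], M[col]   # interchange rows
        p = M[col][col]
        M[col] = [v / p for v in M[col]]      # scale the pivot row to a leading 1
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                # Subtract a multiple of the pivot row to clear the column.
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    # The right half of the reduced augmented matrix is the inverse.
    return [row[n:] for row in M]

print(inverse_gauss_jordan([[2, 1], [5, 3]]))  # [[3, -1], [-5, 2]] as Fractions
```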
We wrapped up the linear algebra review by revisiting characteristic polynomials: how to find them and how to use them to find the eigenvalues of a matrix. Of the newer material that we looked at briefly, the power method seemed pretty interesting, but I am not sure yet what its exact uses will be. The algebra behind the scenes was actually straightforward and led us to the conclusion that

$$\lim_{k \to \infty} \frac{1}{\lambda_1^k} A^k \vec{x} = c_1 \vec{x}_1.$$

This holds because the theorem assumes that $\lambda_1$ is the dominant eigenvalue: writing $\vec{x} = c_1\vec{x}_1 + c_2\vec{x}_2 + \dots + c_n\vec{x}_n$ in terms of eigenvectors, each remaining term $c_i(\lambda_i/\lambda_1)^k\vec{x}_i$ with $i \geq 2$ goes to zero as $k \to \infty$.

We concluded class with some basics on population growth models and quickly tried to express them as matrices. The idea here was that each new birthing cycle is the sum of the previous cycles: the newborns $y_0$ come from the older groups $x_1$, $x_2$, and $x_3$. So the change in population from one month, $\vec{x}$, to the next, $\vec{y}$, can be represented by:

$$y_0 = x_1 + x_2 + x_3, \qquad y_1 = x_0, \qquad y_2 = x_1, \qquad y_3 = x_3$$

Converting this back to matrix form gives us $\vec{y} = A\vec{x}$, or $\vec{x}_{n+1} = A\vec{x}_n$, so that $\vec{x}_n = A^n\vec{x}_0$.
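As a small worked example of finding eigenvalues from the characteristic polynomial (the matrix is my own illustrative choice, not one from class):

$$A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad \det(A - \lambda I) = (2 - \lambda)^2 - 1 = \lambda^2 - 4\lambda + 3 = (\lambda - 1)(\lambda - 3)$$

Setting the polynomial equal to zero gives $\lambda = 1$ and $\lambda = 3$, so $\lambda_1 = 3$ is the dominant eigenvalue of this matrix.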
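Here is a minimal sketch of the power method in plain Python, my own illustration rather than code from class. The names `power_method` and `scaled_iterate`, the test matrix, and the iteration counts are assumptions chosen for the example. `power_method` rescales $A\vec{x}$ at every step so its largest-magnitude entry is 1, which avoids needing $\lambda_1$ in advance. `scaled_iterate` instead divides $A^k\vec{x}$ by $\lambda_1^k$ exactly, mirroring the limit $\lim_{k\to\infty} \frac{1}{\lambda_1^k} A^k \vec{x} = c_1\vec{x}_1$. Both assume a single dominant eigenvalue and a starting vector with $c_1 \neq 0$.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def power_method(A, x0, iterations=50):
    """Estimate the dominant eigenvalue and eigenvector of A.

    Each step computes A x and divides by its largest-magnitude entry, so the
    vector stays bounded while its direction settles on the dominant
    eigenvector. Assumes A x never becomes the zero vector.
    """
    x = [float(v) for v in x0]
    estimate = 0.0
    for _ in range(iterations):
        y = mat_vec(A, x)
        estimate = max(y, key=abs)  # approaches lambda_1 as x converges
        x = [yi / estimate for yi in y]
    return estimate, x

def scaled_iterate(A, x0, lam, k):
    """Compute (1 / lam^k) * A^k x0 exactly with Fractions."""
    x = [Fraction(v) for v in x0]
    for _ in range(k):
        x = mat_vec(A, x)
    return [v / Fraction(lam) ** k for v in x]

A = [[2, 1],
     [1, 2]]  # eigenvalues 3 and 1, so lambda_1 = 3 is dominant
x0 = [1, 0]
lam, v = power_method(A, x0)
print(lam, v)
print([float(c) for c in scaled_iterate(A, x0, 3, 40)])
```

For this matrix, $[1, 0] = \tfrac12[1, 1] + \tfrac12[1, -1]$, so $c_1\vec{x}_1 = [\tfrac12, \tfrac12]$, which is what `scaled_iterate` approaches; the vector from `power_method` points in the same direction, normalized to $[1, 1]$.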
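The step from the four update equations to $\vec{x}_n = A^n\vec{x}_0$ can be checked numerically. This is a minimal sketch in plain Python (my own illustration): the rows of the hypothetical matrix `A` are read directly off the equations exactly as written in these notes, and the starting population `x0` and the helper names are assumptions. It applies the equations one month at a time and confirms each result matches multiplying the starting vector by $A^n$.

```python
def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def mat_mul(A, B):
    """Multiply two square matrices given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def mat_pow(A, n):
    """Compute A^n by repeated multiplication (A^0 is the identity)."""
    size = len(A)
    result = [[int(i == j) for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, A)
    return result

# Each row encodes one update equation as written in the notes:
# y0 = x1 + x2 + x3, y1 = x0, y2 = x1, y3 = x3.
A = [
    [0, 1, 1, 1],
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
]

def step(x):
    """Apply the four update equations directly, without a matrix."""
    return [x[1] + x[2] + x[3], x[0], x[1], x[3]]

x0 = [1, 0, 0, 0]  # hypothetical starting population
x = x0
for n in range(1, 11):
    x = step(x)                              # x_n from x_{n-1}
    assert x == mat_vec(mat_pow(A, n), x0)   # agrees with x_n = A^n x_0
print(mat_vec(mat_pow(A, 5), x0))
```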