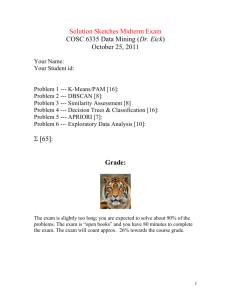

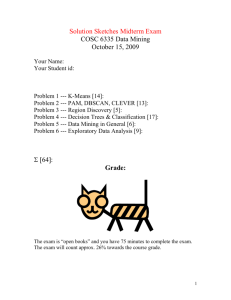

Data Mining Exam with Solutions


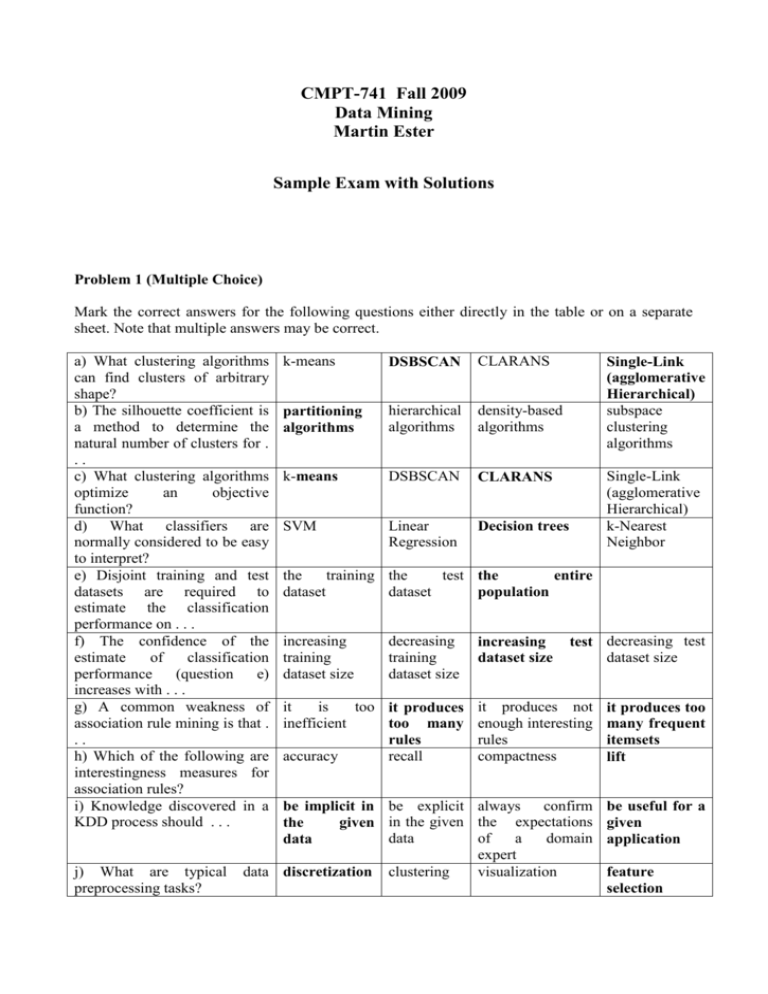

CMPT-741 Fall 2009, Data Mining, Martin Ester

Sample Exam with Solutions

Problem 1 (Multiple Choice)

Mark the correct answers for the following questions either directly in the table or on a separate sheet. Note that multiple answers may be correct.

| Question | Option 1 | Option 2 | Option 3 | Option 4 |
|---|---|---|---|---|
| a) What clustering algorithms can find clusters of arbitrary shape? | k-means | DBSCAN | CLARANS | Single-Link (agglomerative hierarchical) |
| b) The silhouette coefficient is a method to determine the natural number of clusters for ... | partitioning algorithms | hierarchical algorithms | density-based algorithms | subspace clustering algorithms |
| c) What clustering algorithms optimize an objective function? | k-means | DBSCAN | CLARANS | Single-Link (agglomerative hierarchical) |
| d) What classifiers are normally considered to be easy to interpret? | SVM | Linear Regression | Decision trees | k-Nearest Neighbor |
| e) Disjoint training and test datasets are required to estimate the classification performance on ... | the training dataset | the test dataset | the entire dataset | the population |
| f) The confidence of the estimate of classification performance (question e) increases with ... | increasing training dataset size | decreasing training dataset size | increasing test dataset size | decreasing test dataset size |
| g) A common weakness of association rule mining is that ... | it is too inefficient | it produces too many rules | it produces not enough interesting rules | it produces too many frequent itemsets |
| h) Which of the following are interestingness measures for association rules? | accuracy | recall | compactness | lift |
| i) Knowledge discovered in a KDD process should ... | be implicit in the given data | be explicit in the given data | always confirm the expectations of a domain expert | be useful for a given application |
| j) What are typical preprocessing tasks? | data discretization | clustering | visualization | feature selection |

Problem 2 (Association Rules)

Consider the following transaction database:

| TransID | Items |
|---|---|
| T100 | A, B, C, D |
| T200 | A, B, C, E |
| T300 | A, B, E, F, H |
| T400 | A, C, H |

Suppose that minimum support is set to 50% and minimum confidence to 60%.

a) List all frequent itemsets together with their support.

| Size | Frequent itemsets (support) |
|---|---|
| 1 | {A} 100%, {B} 75%, {C} 75%, {E} 50%, {H} 50% |
| 2 | {A,B} 75%, {A,C} 75%, {A,E} 50%, {A,H} 50%, {B,C} 50%, {B,E} 50% |
| 3 | {A,B,C} 50%, {A,B,E} 50% |

b) Which of the itemsets from a) are closed? Which of the itemsets from a) are maximal?

Closed: {A}, {A,B}, {A,C}, {A,H}, {A,B,C}, {A,B,E}

Maximal: {A,H}, {A,B,C}, {A,B,E}

c) For all frequent itemsets of maximal length, list all corresponding association rules satisfying the requirements on (minimum support and) minimum confidence together with their confidence.

The frequent itemsets of maximal length are {A,B,C} and {A,B,E}. The rules A → B,C and A → B,E have a confidence of only 50% and are therefore excluded.

| Itemset | Rule | Confidence |
|---|---|---|
| {A,B,C} | A,B → C | 67% |
| {A,B,C} | A,C → B | 67% |
| {A,B,C} | B,C → A | 100% |
| {A,B,C} | B → A,C | 67% |
| {A,B,C} | C → A,B | 67% |
| {A,B,E} | A,B → E | 67% |
| {A,B,E} | A,E → B | 100% |
| {A,B,E} | B,E → A | 100% |
| {A,B,E} | B → A,E | 67% |
| {A,B,E} | E → A,B | 100% |

d) The lift of an association rule is defined as follows: lift = confidence / support(head). Compute the lift for the association rules from c).

| Rule | Lift |
|---|---|
| A,B → C | (2/3) / 0.75 = 0.89 |
| A,C → B | (2/3) / 0.75 = 0.89 |
| B,C → A | 1.0 / 1.0 = 1.00 |
| B → A,C | (2/3) / 0.75 = 0.89 |
| C → A,B | (2/3) / 0.75 = 0.89 |
| A,B → E | (2/3) / 0.5 = 1.33 |
| A,E → B | 1.0 / 0.75 = 1.33 |
| B,E → A | 1.0 / 1.0 = 1.00 |
| B → A,E | (2/3) / 0.5 = 1.33 |
| E → A,B | 1.0 / 0.75 = 1.33 |

Why are only those association rules interesting that have a lift (significantly) larger than 1.0?

The lift measures the ratio of the confidence to the expected confidence, assuming independence of the body and the head of the association rule. If body and head were independent, the expected support of body ∪ head would be support(body) · support(head), so the expected confidence would be support(body) · support(head) / support(body) = support(head). Hence:

$$\text{lift} = \frac{\text{confidence}}{\text{support(head)}} = \frac{\text{confidence}}{\text{support(body)} \cdot \text{support(head)} / \text{support(body)}} = \frac{\text{confidence}}{\text{expected confidence}}$$

Only those association rules are interesting for which the actual confidence is significantly larger than the expected confidence.

Problem 3 (Clustering Mixed Data)

The EM clustering algorithm is usually applied to numerical data, assuming that the points of a cluster follow a multi-dimensional Normal distribution.
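For reference, the following plain-Python sketch evaluates the d-dimensional Normal density that EM assumes for a numerical cluster. It also performs the expectation step for a single point, where membership probabilities are proportional to cluster weight times density. The helper names, the (weight, mean, covariance) cluster layout and the example parameters are assumptions for illustration only. A practical implementation would typically rely on a numerical library instead.

```python
import math


def det_and_inverse(matrix):
    """Return (determinant, inverse) of a square matrix via Gauss-Jordan elimination."""
    n = len(matrix)
    # Augment the matrix with the identity: [A | I]
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    det = 1.0
    for col in range(n):
        # Partial pivoting: use the row with the largest entry in this column
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        if pivot != col:
            aug[col], aug[pivot] = aug[pivot], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        p = aug[col][col]
        det *= p
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [rv - factor * cv for rv, cv in zip(aug[r], aug[col])]
    return det, [row[n:] for row in aug]


def normal_density(x, mu, sigma):
    """d-dimensional Normal density P(x | c_j) with mean mu and covariance sigma."""
    d = len(x)
    det, inv = det_and_inverse(sigma)
    diff = [xi - mi for xi, mi in zip(x, mu)]
    # Quadratic form (x - mu)^T Sigma^{-1} (x - mu)
    quad = sum(diff[i] * inv[i][j] * diff[j] for i in range(d) for j in range(d))
    return math.exp(-0.5 * quad) / math.sqrt((2 * math.pi) ** d * det)


def responsibilities(x, clusters):
    """E-step for one point: P(c_j | x) is proportional to weight_j * P(x | c_j)."""
    scores = [w * normal_density(x, mu, sigma) for w, mu, sigma in clusters]
    total = sum(scores)
    return [s / total for s in scores]


# Hypothetical two-cluster model: (weight, mean, covariance)
clusters = [
    (0.5, [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]),
    (0.5, [4.0, 4.0], [[1.0, 0.5], [0.5, 1.0]]),
]
print(normal_density([0.0, 0.0], [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))  # about 0.1592, i.e. 1 / (2*pi)
print(responsibilities([0.5, 0.2], clusters))  # almost all weight on the first cluster
```

Gauss-Jordan elimination keeps the sketch dependency-free. For larger d, a Cholesky factorization of the covariance matrix is the numerically preferred route.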
With a modified cluster representation (probability distribution), the algorithm can also handle data with both numerical and categorical attributes. Suppose that data records have the format $(x_1, \ldots, x_d, x_{d+1}, \ldots, x_{d+e})$, where the first $d$ attributes are numerical and the remaining $e$ attributes are categorical.

a) How can you represent the (conditional) probability distribution of a single categorical attribute $A_{d+i}$ with $k$ values $a_1, \ldots, a_k$ (given class $c_j$)?

Use a simple histogram of the relative frequencies of the $k$ values within class $c_j$: $\text{Frequency}(a_1), \ldots, \text{Frequency}(a_k)$. Then

$$P(x_{d+i} \mid c_j) = \text{Frequency}(x_{d+i})$$

b) The $d$ numerical attributes are still assumed to follow a $d$-dimensional Normal distribution, i.e.

$$P(x_1, \ldots, x_d \mid c_j) = \frac{1}{\sqrt{(2\pi)^d \, |\Sigma|}} \, e^{-\frac{1}{2} \left((x_1, \ldots, x_d) - \mu\right)^T \Sigma^{-1} \left((x_1, \ldots, x_d) - \mu\right)}$$

where $\mu$ and $\Sigma$ are the mean vector and covariance matrix of class $c_j$. What assumption on the dependency of the numerical attributes and the categorical attributes can you make that allows you to compute the (conditional) joint probability distribution of all $d + e$ attributes?

Assumption: each categorical attribute is conditionally (given a certain class) independent of the $d$ numerical attributes and of the other categorical attributes.

c) With the solutions to a) and b), what is the (conditional) joint probability distribution of all $d + e$ attributes given class $c_j$?

$$P(x_1, \ldots, x_d, x_{d+1}, \ldots, x_{d+e} \mid c_j) = P(x_1, \ldots, x_d \mid c_j) \cdot \prod_{i=1}^{e} P(x_{d+i} \mid c_j)$$

Problem 4 (XML Classification)

Consider the task of classifying XML documents. Compared to simple text documents, XML documents have not only textual content but also a hierarchical structure represented by the XML tags. An XML document can be modeled as a labeled, ordered and rooted tree, where a node represents an element, an attribute, or a value. We simplify the situation by ignoring attributes. Thus, inner nodes of an XML tree represent elements, and leaf nodes represent values, i.e. the textual content (a string).
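The tree model can be made concrete with Python's standard `xml.etree.ElementTree` module. The minimal sketch below uses a hypothetical helper `leaf_values` that walks the element tree and ignores attributes, as assumed above. It pairs every value leaf with the path of element tags leading to it. The input is a fragment of the exam's example paper, and mixed content (tail text) is ignored for simplicity.

```python
import xml.etree.ElementTree as ET


def leaf_values(element, path=()):
    """Yield (tag path, text) pairs for the value leaves below `element`.

    Inner nodes are elements; an element's non-empty text is treated as its
    value leaf. Attributes (element.attrib) and tail text are ignored.
    """
    path = path + (element.tag,)
    text = (element.text or "").strip()
    if text:
        yield path, text
    for child in element:
        yield from leaf_values(child, path)


# A fragment of the exam's example paper
doc = """<paper>
  <title>TTTTTTT1 TTTTTT2</title>
  <author>AAAAA</author>
  <section>
    <title>RRRR1 RRRRRRR2</title>
    <text>SSS1 SSSS2 SSS3 SSS4</text>
  </section>
</paper>"""

for path, text in leaf_values(ET.fromstring(doc)):
    print("/".join(path), "->", text)
# paper/title -> TTTTTTT1 TTTTTT2
# paper/author -> AAAAA
# paper/section/title -> RRRR1 RRRRRRR2
# paper/section/text -> SSS1 SSSS2 SSS3 SSS4
```

Splitting each value into terms turns these pairs into (element, term) or (path, term) combinations.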
The following is an (incomplete) example of an XML document representing a scientific paper:

```xml
<paper>
  <title>TTTTTTT1 TTTTTT2</title>
  <author>AAAAA</author>
  <author>BBBBBB</author>
  <section>
    <title>RRRR1 RRRRRRR2</title>
    <text>SSS1 SSSS2 SSS3 SSS4</text>
  </section>
  <section>
    <title>UUUU1 UUUUUUU2</title>
    <text>VVVV1 VVV2 VVVV3</text>
  </section>
  <references>WWW1 WW2 WW3 W4</references>
</paper>
```

The XML structure defines the context of a given term, and one and the same term can appear in different contexts (elements) with different meanings. For example, it makes a difference whether a term appears within the title of the paper or within the body of one of its sections. As another example, it makes a difference whether a term appears within one of the author elements or within the references element (as the author of a referenced paper).

a) In order to apply standard classification methods to XML documents, we want to represent each XML document by a feature vector. The features shall capture the content (terms) as well as the structure (elements in which the terms appear). Define an appropriate set of features and provide some illustrating examples.

We define one feature for every combination of an element tag and a term, provided that the combination appears in at least a certain percentage of the documents. E.g., (`<author>`, AAAAA), (`<author>`, BBBBBB), (`<title>`, TTTTTTT1).

b) Suppose that we have 1000 relevant terms and 50 relevant XML elements (tags). How many features (dimensions) do you generate? What problems does such a dimensionality of the feature vectors create? What standard classification method do you recommend? Explain your answers.

There are up to 1000 × 50 = 50,000 features. Note that we only consider combinations of an element and a term that actually appear in the document set, so the actual number is usually smaller. Most features have the value zero for most of the XML documents.
Text feature vectors are typically sparse anyway, but the problem gets worse with our XML feature transformation, since it further multiplies the number of features. SVMs are most appropriate, since they deal well with very high-dimensional, sparse data.

c) Suppose that you want to consider the entire context of terms. For example, you want to distinguish between a term appearing in the title of the paper and the same term appearing in the title of one of the sections. How can you extend your feature set (solution to a) to accommodate this requirement? Provide some illustrating examples.

We define one feature for every combination of a path of element tags (starting from the root element) and a term. E.g., (`<title>`, TTTTTTT1), (`<section><title>`, RRRR1), (`<section><text>`, SSS1).

Problem 5 (Clustering and Classification Algorithms)

To compare the capabilities of some popular clustering and classification algorithms, provide sample datasets that cannot be handled accurately by one algorithm but can be by the other. In every case, explain why one of the algorithms fails to discover the correct clusters or classes.

a) Draw a 2-dimensional dataset with two clusters that can be discovered with 100% accuracy by DBSCAN, but not by k-means.

[Figure: sample dataset with two clusters; solid: k-means clusters, dashed: DBSCAN clusters]

k-means represents a cluster by its centroid (a point) and assigns every other point to the closest centroid. Its clusters are therefore convex (the Voronoi cells of the centroids), so it cannot recover non-convex or elongated clusters, which DBSCAN finds by following density-connected points.

b) Draw a 2-dimensional dataset with two clusters that can be discovered with 100% accuracy by k-means, but not by DBSCAN.

[Figure: sample dataset with two clusters; solid: k-means clusters, dashed: DBSCAN clusters]

The two clusters have a dense core and a sparser periphery. With a high MinPts value, DBSCAN finds only the dense cores and treats the periphery as noise.
With a low MinPts value, DBSCAN merges both clusters into one, since the periphery of the two clusters has the same density as the bridge between them.

c) Draw a 2-dimensional dataset with two classes that can be classified with 100% accuracy (on the training dataset) by a decision tree, but not by a linear SVM.

[Figure: '+' and '-' training examples; solid: decision tree splits, dashed: linear SVM]

These two classes are not linearly separable, but they can easily be separated by a first vertical and a second horizontal split.

d) Draw a 2-dimensional dataset with two classes that can be classified with 100% accuracy (on the training dataset) by a linear SVM, but not by a 3-Nearest Neighbour classifier.

[Figure: '+' and '-' training examples; solid circle: 3NN neighbourhood, dashed line: SVM hyperplane]

The two classes are linearly separable. The 3NN classifier misclassifies the positive training example in the solid circle. Its three nearest neighbours are negative training examples that lie below the separating hyperplane but are closer to the positive example than all other training examples.
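The argument in d) can be checked numerically. The following plain-Python sketch uses a hypothetical training set modelled on the figure: one positive example just above the separating line and three negative examples directly below it. As an assumption, no linear SVM is trained here. Instead, the hand-picked line y = 0.8 stands in for the hyperplane, since any line that separates the two classes reaches 100% training accuracy. The 3NN classifier counts each training point among its own neighbours, so the critical positive example votes for itself and is still outvoted 2 to 1.

```python
import math
from collections import Counter

# Hypothetical 2-D training set: (x, y, label)
train = [
    (0.0, 1.0, "+"),  # positive example just above the separating line
    (-1.0, 5.0, "+"), (0.0, 5.0, "+"), (1.0, 5.0, "+"),
    (-0.3, 0.6, "-"), (0.0, 0.5, "-"), (0.3, 0.6, "-"),  # negatives right below it
    (0.0, -3.0, "-"),
]


def linear_predict(x, y, threshold=0.8):
    """Stand-in for a linear SVM: the hand-picked separating line y = threshold."""
    return "+" if y > threshold else "-"


def knn_predict(x, y, data, k=3):
    """Majority vote among the k nearest training points (Euclidean distance).

    The query point itself is part of `data`, so it counts as one of its own neighbours.
    """
    nearest = sorted(data, key=lambda p: math.dist((x, y), (p[0], p[1])))[:k]
    return Counter(label for _, _, label in nearest).most_common(1)[0][0]


def accuracy(predict):
    """Fraction of training points whose label is reproduced by `predict`."""
    return sum(predict(x, y) == label for x, y, label in train) / len(train)


print("linear:", accuracy(linear_predict))                      # 1.0
print("3NN:", accuracy(lambda x, y: knn_predict(x, y, train)))  # 0.875
print("3NN on (0, 1):", knn_predict(0.0, 1.0, train))           # -, i.e. misclassified
```

Only the positive example at (0, 1) is misclassified by 3NN. All other points are classified correctly by both methods.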