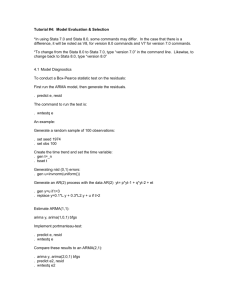

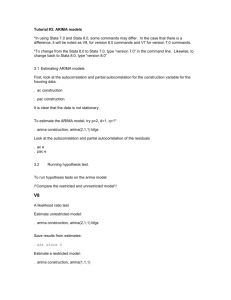

The ARIMA Procedure
