Brain-Computer Interfacing and Games

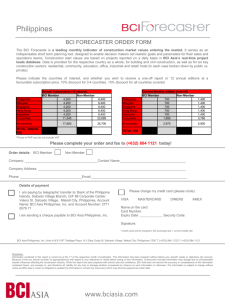
