g-emba-stat-lec06-mlr-winter-2013

advertisement

Correlation & Regression – (Significance)

XBUS 6240 – Understanding Managerial Statistics – Fall 2012

M.D. Harper, Ph.D.

Agenda

*Regression Significance

*Sum of Squares

*ANOVA

*Test of Hypothesis

*Inferential Statistics

*Coefficient of Determination

*Multiple Linear Regression

*Correlation Matrix

*Correlation Significance

Page 1 of 14

(Review)

Given paired data

A survey was administered to 5 subjects containing the following items.

[A questionnaire was given to 5 people containing the following questions.]

1. How many miles did you travel to get here today? _____

2. How many minutes did it take to get here today? _____

3. Please indicate your gender. (Circle one) Male Female

4. What was the temperature in Fahrenheit on your travel here today? _____

5. Please indicate your classification. (Circle one) Fresh Soph Jr Sr

Disagree

Agree

6. It was very difficult for me to travel here today. 1

2

3

4

5

.

The results:

Subject

Miles Minutes Gender Temp oF Class Difficult

1

1

4

M

68

Jr

2

2

3

6

F

58

Fresh

1

3

3

20

M

48

Soph

2

4

5

15

F

50

Jr

3

5

8

20

F

65

Soph

2

.

Consider Miles and Minutes.

Miles Minutes

Subject

X

Y

X*X Y*Y X*Y

1

1

4

1

16

4

2

3

6

9

36

18

3

3

20

9

400

60

4

5

15

25

225

75

5

8

20

64

400 160

SS=Sum of Squares

SSXX = 108–20*20/5 = 28

Sum

20

65

Sum 108 1077 317

SSYY = 1077–65*65/5 = 232

SS

28

232

57

SSXY = 317–20*65/5 = 57

SS = Sum of Squares of Error = Sum of Squared Errors

SSXX= ( X –X )2 = X2–(X)*(X)/n = 108–20*20/5 = 28

SSYY= ( Y –Y )2 = Y2–(Y)*(Y)/n = 1077–65*65/5 = 232

SSXY= ( X –X )*( Y –Y ) = X*Y–(X)*(Y)/n = 317–20*65/5 = 57

Summary

n=5

SS=Sum of Squares

2

SSXX = 108–20*20/5 = 28

X=20

X =108

2

SSYY = 1077–65*65/5 = 232

Y=65

Y =1077

SSXY = 317–20*65/5 = 57

X*Y=317

Page 2 of 14

(Review)

Summary

*Correlation. Describes relationship between two variables.

*Regression. Uses one variable to predict another variable.

*Estimation.

Correlation: Determine coefficient of correlation.

Regression: Determine regression model.

*Significance.

Correlation: Make inference that correlation is significantly different from zero.

Regression: Make inference that least-squares regression slope is significantly

different from zero.

(Summary)

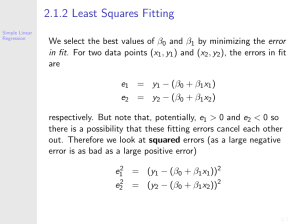

Given „n‟ pairs of data labeled „X‟ and „Y‟.

Mean of X is X = X/n

Mean of Y is Y = Y/n

Sum of Squared Errors about the Mean (SS):

SS of Variable Y: SSYY=( Y –Y )2=Y2–(Y)2/n

SS of Variable X: SSXX=( X –X )2=X2–(X)2/n

SS between variables: SSXY=( X –X )*( Y –Y ) =XY–(X)*(Y)/n

Estimation.

Coefficient of Correlation: R=SSXY/√(SSXX*SSYY)

For Regression, let Y=Dependent Variable & X=Independent Variable.

Regress Y on X.

Least-squares Regression Model: Ŷ=b0+b1*X

where b1 = SSXY/SSXX & b0 =Y – b1 *X

Significance.

Given Level of Significance = .

Correlation. Let tt = √[ (n–2)*R2/(1–R2) ]. Then, using the Excel function,

when 2*(1–T.DIST( tt , n–2 , 1 ) ) < , the correlation is significant.

Least-Squares Regression. Let Ft = (n–2)*R2/(1–R2) = tt2 . Then, using the Excel

function, when 1–F.DIST( Ft , 1 , n–2 , 1 ) < , the regression is significant.

Page 3 of 14

Concept of Regression Model Significance

1. Regression Line: Ŷ=b0+b1*X

2. Regression NOT Significant

3. Slope is Zero

4. b1=0

5. Intercept isY

6. b0 =Y

[Since b1=0 and b0=Y – b1 *X ,

b0 =Y ]

7. Independent variable, X, does not

contribute in estimating the dependent

variable, Y.

8. Since X does not contribute, the estimate

of Y is Y, the sample mean of Y

which is independent of X.

9. Since X does not contribute in

estimating Y, don‟t use it. Just useY.

1. Regression Line: Ŷ=b0+b1*X

2. Regression IS Significant

3. Slope is NOT Zero

4. b1≠0

5. Intercept is NOTY

6. b0 ≠Y

[Since b1≠0 and b0=Y – b1 *X ,

b0 =Y – b1 *X ]

7. Independent variable, X, contributes in

estimating the dependent variable, Y.

8. Since X contributes in estimating Y, the

estimate of Y is Ŷ=b0+b1*X, the

conditional estimate of the population

mean of Y given X or E[Y|X].

9. Since X contributes in estimating Y, use

the regression equation Ŷ=b0+b1*X.

When does a regression become significant?

How is significance determined?

How is significance stated?

(Test of Hypothesis)

Null Hypothesis: Ho: 1=0

↑

Alternative Hypothesis: Ho: 1≠0 ↑

(Inferential Statistics)

Page 4 of 14

Regression Definitions and Terminology

Subject

1

2

3

4

5

Miles Minutes Estimate

X

Y

Ŷ=5+2X

1

4

7

3

6

11

3

20

11

5

15

15

8

20

21

Error

=(Ŷ–Y)

3

5

–9

0

1

Y=Minutes

Consider the data and the regression line, Ŷ=5+2*X.

20

15

10

5

0

0

2

4

6

8

X=Miles

10

Consider the definitions and terminology.

The regression line: Ŷ=5+2*X

Now, the mean of Y isY=Y/n=13

But the estimate for E[Y|X] for X=3 is:

Model (Ŷ=11): Ŷ=b0+b1*X=5+2*3=11

Error, = (Ŷ – Y) between Model and Data

Data for X=3 is (Y=6): Y=0+1*X+

Y=Minutes

20

15

10

5

0

0

2

4 6 8

X=Miles

Model of Data: Y=0+1*X+

(Example: Data sets above.)

Model of Fit: Ŷ=b0+b1*X

(Example: Ŷ=5+2*X )

(Regression Model)

Mean of Y: isY=Y/n

(Example:Y=

Y=0+1*X+

Ŷ=b0+b1*X

10

0 and 1 are Parameters. is an error term.

b0 and b1 are Estimates of the Parameters.

E[Y|X] is the Parameter, population mean of Y|X.

Ŷ=b0+b1*X is the Estimate of the Parameter.

Y is the Dependent Variable representing Data

X is the Independent Variable representing Data

0 is the “Intercept” parameter

1 is the “Slope” parameter.

is the error term

Ŷ is the estimate of E[Y|X], the population mean of Y

conditioned on X representing the value from a

regression equation for a given value of X.

b0 is the estimate of 0 (the intercept)

b1 is the estimate of 1 (the slope)

Y is the sample mean

or the estimate of E[Y] not conditioned on X

or the estimate of the mean of Y independent of X

Regression Model of Data

Regression Equation

Regression Model of the Fit of the Data

Page 5 of 14

Sum of Squares

Consider the regression line, Ŷ= b0+b1*X.

Y=Minutes

20

Regression Line: Ŷ=b0+b1*X

15

Y:Y =Y/n

Sample Mean

Ŷ: Ŷ=b0+b1*X

Regression Estimate

10

5

0

0

2

4 6 8

X=Miles

10

Y: Y=0+1*X+

Data Value

For example, given the regression line, Ŷ=5+2*X.

Y=13 , Sample Mean

For X=3:

Ŷ=11 , Estimate

Y=6 , Data Point

Definitions.

Model of Data: Y=0+1*X+

Least-squares Regression Model: Ŷ=b0+b1*X , for b1=SSXY/SSXX and b0=Y – b1 *X

Sample mean of Data :Y = Y/n

Total Sum of Squares, TSS = (Y –Y)2 = SSYY = 232

Sum of Squares of Model, SSM = (Ŷ –Y )2 = (SSXY)2/SSXX = (572/28) ≈ 116.04

(Sum of Squares of Regression)

Sum of Squares of Error, SSE = (Y – Ŷ )2 = SSYY – (SSXY)2/SSXX ≈ 115.96

(Sum of Squares of Residuals)

From Example: SSYY = 232; SSXX = 28; SSXY = 57

Identities.

Total

TSS

(Y –Y)2

SSYY

=

Model

=

SSM

=

(Ŷ –Y )2

= [ (SSXY)2/SSXX ]

+

+

+

+

Error

SSE

(Y – Ŷ )2

[ SSYY – (SSXY)2/SSXX ]

1. Total variation is explained by either the variation due to the model or variation due to

random error.

2. As Ŷ approachesY, SSM approaches zero and SSE approaches TSS.

3. As Ŷ approachesY, the total variation is explained less by the model and more by

random error.

4. As Ŷ approachesY, the regression slope approaches zero and the correlation

coefficient approaches zero. Consider the estimate of the regression line,

Ŷ=b0+b1*X = (Y – b1 *X ) + b1 * X , as b1 approaches zero, Ŷ approachesY.

Page 6 of 14

ANOVA – Analysis of Variance – Regression Significance

Consider the values: Subject

1 2 3 4 5 Sum

SSXY =57

X=Miles

1 3 3 5 8

20

SSXX =28

Y=Minutes 4 6 20 15 20 65

SSYY =232

ANOVA Table

SOURCE

DF SS

MS=SS/DF

Ft

R2

MODEL

1

SSM MSM=SSM/1

MSM/MSE SSM/TSS

(Regression)

ERROR

n-2 SSE MSE=SSE/(n-2)

(Residuals)

TOTAL

n-1 TSS

(Corrected Total)

Using Values:

TSS = (Y –Y)2 = SSYY = 232

SSM = (Ŷ –Y )2 = (SSXY)2/SSXX = (572/28) ≈ 116.04

SSE = (Y – Ŷ )2 = SSYY – (SSXY)2/SSXX ≈ 115.96

ANOVA Table

SOURCE DF SS

MS=SS/DF Ft=MSM/MSE R2=SSM/TSS P-value

MODEL 1

116.04 116.04

3.00

0.50

0.182

ERROR

3

115.96 38.65

TOTAL

4

232

Let = Level of Significance. Then regression is significant when P-value < .

P-value is determined using Excel, =1 – F.DIST( Ft , 1, DFE=(n–2) , 1 )

For this example, P-value = 1 – F.DIST(3,1,3,1) ≈ 0.182

For =0.10, Regression is not significant since P-value>.

Significant regression implies slope is not zero.

Regression is not significant implies slope is zero.

As slope, b1, increases, the test statistic, Ft, increases, and the P-value, P[F>Ft], decreases.

When P-value is less than , model is significant at level of significance.

0

F

Ft=3.00

Page 7 of 14

R2 – Coefficient of Determination

Total

TSS

(Y –Y)2

SSYY

=

Model

=

SSM

=

(Ŷ –Y )2

= [ (SSXY)2/SSXX ]

+

+

+

+

Error

SSE

(Y – Ŷ )2

[ SSYY – (SSXY)2/SSXX ]

Divide by TSS:

TSS/TSS =

SSM/TSS

+

SSE/TSS

2

1

= [ (SSXY) /(SSXX *SSYY ) ] + [ 1 – (SSXY)2/(SSXX *SSYY )]

4. Define: Coefficient of Determination, R2= SSM/TSS

Thus:

1

=

R2

+

[ 1 – R2 ]

Coefficient of Determination

R2= SSM/TSS = (SSXY)2/(SSXX*SSYY)

0 ≤ R2 ≤ 1

1. R2 = SSM/TSS represents the percent of total variation explained by the model.

2. 1–R2 = SSE/TSS represents the percent of total variation explained by random error.

The correlation coefficient, r = sqrt(R2) = SSXY/sqrt( SSXX*SSYY ), –1 ≤ r ≤ +1

Using Algebra to combine relationships:

Coefficient of Determination, R2 = SSM/TSS = (SSXY)2/(SSXX*SSYY) ≈ 0.5

Total Sum of Squares, TSS = (Y –Y)2 = SSYY = 232

Sum of Squares of Model, SSM = (Ŷ –Y )2 = (SSXY)2/SSXX = TSS*R2≈ 116.04

Sum of Squares of Error, SSE=(Y–Ŷ)2=SSYY–(SSXY)2/SSXX = TSS*(1–R2) ≈ 115.96

ANOVA Table

SOURCE DF SS

MS=SS/DF

Ft

R2

MODEL 1

SSM=TSS*R2

MSM=SSM/1

MSM/MSE SSM/TSS

2

ERROR

n-2 SSE=TSS*(1–R ) MSE=SSE/(n-2)

TOTAL

n-1 TSS=SSYY

ANOVA Table

SOURCE DF SS

MS=SS/DF Ft=MSM/MSE R2=SSM/TSS P-value

MODEL 1

116.04 116.04

3.00

0.50

0.182

ERROR

3

115.96 38.65

TOTAL

4

232

P-value = 1 – F.DIST(3,1,3,1) ≈ 0.182

Page 8 of 14

MLR (Multiple Linear Regression)

MLR: Assumptions

.

Linear Model: Y = 0 + 1 X 1 + - - - +k X k +

~ N(0,2)

is Normal i.i.d.

(independent & identically distributed)

Regression Equation: Ŷ = b 0 + b 1 X 1 + - - - + b k X k

i = Yi – Ŷi

.

ANOVA Table

SOURCE

DF

SS

MS=SS/DF

Ft

R2

MODEL

k

SSM MSM=SSM/k

MSM/MSE SSM/TSS

(Regression)

ERROR

n-k-1 SSE MSE=SSE/(n-k-1)

(Residuals)

TOTAL

n-1

TSS

(Corrected Total)

P-value = 1 – F.DIST( Ft , DFM=k , DFE=(n–k–1) , 1 )

Adjusted R2 = 1 – ( SSE/(n-k-1) )/(TSS/(n – 1 ) )

MLR: Violations

.

* Non-Linearity.

The model must be a linear combination of independent variables.

The model must contain linearity in the coefficients.

* Heteroscedasticity.

The variance of the error term must be constant.

* Non-Normality.

The error terms must be normally distributed.

* Autocorrelation.

The error terms must be independent.

* Multicollinearity.

The independent variables must not contain large redundant correlations.

.

Page 9 of 14

Page 10 of 14

Correlation Concepts

Y=Minutes

Does there seem to be a relationship between the two variables?

Miles Minutes

Subject

20

X

Y

15

1

1

4

10

2

3

6

5

3

3

20

0

0

2

4

6

8

4

5

15

X=Miles

5

8

20

n=5

X=20

Y=65

X =108

Y2=1077

X*Y=317

2

10

SS=Sum of Squares

SSXX = 108–20*20/5 = 28

SSYY = 1077–65*65/5 = 232

SSXY = 317–20*65/5 = 57

1. As „Miles‟ increases, „Minutes‟ increases. Thus, there is a positive relationship or

positive association between „Miles‟ and „Minutes‟. If „Minutes‟ decreased as

„Miles‟ increased, the relationship would be negative.

2. To represent the relationship between the two variables, consider the coefficient of

correlation,

R = SSXY / √( SSXX*SSYY )

Range of Sample Correlation is { –1 ≤ R ≤ +1 }

Using Excel: =Correl( X-array , Y-array )

R = 57 / √( 28*232) ≈ 0.7072

3. R=0 implies no linear correlation

4. R close to ±1 implies strong linear correlation

5. R close to 0 implies weak linear correlation

.

Note:

Sample Covariance is defined as

Cov(X,Y)=SSXY/(n–1)

=57/(5–1) = 14.25

Sample Variances are defined as

SX2=SSXX/(n–1)

SY2=SSYY/(n–1)

=28/(5–1) = 7

=232/(5–1) = 58

Sample Standard Deviations are

defined as

SX=√[SSXX/(n–1)] &

SY=√[SSYY/(n–1)]

=√(7) ≈2.64575

=√(58) ≈7.61577

Thus, Correlation is often defined as R=Cov(X,Y)/[ SX * SY] =14.25/√(7*58)≈0.7072

(See Excel Examples)

Correlation: Relationship vs. Causation

Page 11 of 14

Matrix Representations

Consider three sets of data, A, B, & C, along with their univariate and bivariate measures.

Univariate Measures:

Index:1,2,3,4,5 Sum = X X2 SS = X2 – (X)2/n S2

A: 0,2,1,2,1

6

10

2.8

0.7

B: 4,1,2,0,2

9

25

8.8

2.2

C: 2,0,0,2,0

4

8

4.8

1.2

Bivariate Measures:

Variance/

Correlation

Sum(XY)

SSxy

Covariance

r

Sxy

Index:1,2,3,4,5 A B C A

B

C

A

B

C A

B

C

A: 0,2,1,2,1 10 6 4 2.8 –4.8 –0.8 0.7 –1.2 –0.2

–0.967 –0.218

B: 4,1,2,0,2

25 8

8.8 0.8

2.2 0.2

0.123

C: 2,0,0,2,0

8

4.8

1.2

Correlation Matrix

A

B

C

A

-0.967 -0.218

B

0.123

C

Covariance Matrix

A

B

C

A

-1.2 -0.2

B

0.2

C

Variance Matrix

A B C

A 0.7

B

2.2

C

1.2

Variance/Covariance/Correlation Matrix

A

B

C

A

0.7

–0.967

–0.218

B

–1.2

2.2

0.123

C

–0.2

0.2

1.2

(See Excel Example)

Page 12 of 14

Correlation Significance

Consider three sets of data, A, B, & C, along with their univariate and bivariate measures.

Univariate Measures:

Index:1,2,3,4,5 Sum = X X2 SS = X2 – (X)2/n S2

A: 0,2,1,2,1

6

10

2.8

0.7

B: 4,1,2,0,2

9

25

8.8

2.2

C: 2,0,0,2,0

4

8

4.8

1.2

Bivariate Measures:

Variance/

Correlation

Sum(XY)

SSxy

Covariance

r

Sxy

Index:1,2,3,4,5 A B C A

B

C

A

B

C A

B

C

A: 0,2,1,2,1 10 6 4 2.8 –4.8 –0.8 0.7 –1.2 –0.2

–0.967 –0.218

B: 4,1,2,0,2

25 8

8.8 0.8

2.2 0.2

0.123

C: 2,0,0,2,0

8

4.8

1.2

Given Level of Significance = .

Let tt = √[ (n–2)*R2/(1–R2) ].

Then, determine P-value using Excel,

P-value = 2*(1–T.DIST( tt , n–2 , 1 ) )

If P-value < /2 , correlation is significant (correlation is not zero).

If P-value > /2 , correlation is not significant (correlation is essentially zero).

Correlation Matrix

A

B

C

A

-0.967* -0.218

B

0.123

C

R2

A

B

C

A

0.935* 0.048

B

0.015

C

*significant at =0.10

Let =0.05

RAB is significant

RAC is not significant

RBC is not significant

A

A

B

C

P-value

B

C

0.0072* 0.7244

0.8437

Let =0.10

RAB is significant

RAC is not significant

RBC is not significant

Page 13 of 14

Page 14 of 14