NAPLAN Data Analysis Guide


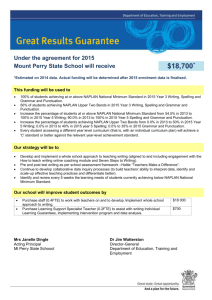

DEPARTMENT OF EDUCATION

2014 NAPLAN Data Analysis Guide

National Assessment Program Literacy and Numeracy

CONTENTS

Introduction
Process for using data
1. Data collection and storage
2. Analyse, interpret and discuss data
- Level 1 – top level interrogation
- Level 2 – sub-group interrogation
- Level 3 – item level (Numeracy, Reading, Language Conventions, Writing)
- Collaborative Data Review
3. Reflect, gain new understandings and decide on actions
4. Make decisions and commit to action
5. Feedback to students and classroom/school changes
Appendices
References

Contacts:

See your regional team in the first instance for data analysis assistance. For NAPLAN or RAAD queries contact:

Sarah Belsham
Senior Manager National Assessment
Curriculum, Assessment and Standards
Ph: 8944 9239
Email: sarah.belsham@nt.gov.au

Gay West
Numeracy Assessment
Curriculum, Assessment and Standards
Ph: 8944 9246
Email: gabrielle.west@nt.gov.au

Assessment and Reporting August 2014

Introduction

An assessment culture that involves the transformation of quality assessment data into knowledge and ultimately effective teaching practice is critical to advancing students’ literacy and numeracy achievement. This booklet provides a framework or process for the efficient use of data – collecting, analysing, discussing and deciding on actions in response to student achievement data.

The examples used in this booklet are NAPLAN reports, from the RAAD tool, that inform teachers about how to assist students to improve their learning outcomes. This valuable information supports whole school accountability processes and enables strategic and operational planning at the school level and also at the classroom level.
The RAAD application and the User Guide are available on the DET website: www.education.nt.gov.au/teachers-educators/assessment-reporting/nap

Effective use of data

A shift is occurring in the way educators view data and its potential to inform professional learning needs, intervention requirements and resource allocation. Data can be used to focus discussion on teaching, learning, assessment, teacher pedagogy, and monitoring of progress.

School improvement requires more than just presenting the data and assuming it will automatically transform teachers’ thinking. Rather, teachers need sensitive coaching and facilitation to study the data and make connections between data and instructional decision making. School leaders, as data coaches, need to ask purposeful questions to promote thoughtful discussion, which should be followed by targeted action.

Effective data use has to become part of a school’s culture. A culture where:
- there is a shared belief in the value of data
- data literacy capacity and skills are proactively developed
- there are planned times to collaboratively interrogate the data
- effective change in classroom practice is achieved in a supportive environment.

A culture change requires people to believe in the data, get excited by it and use it as a focus for future planning. Holcomb (2004) sees the process involving three Ps: people, passion and proof – everyone’s responsibility, commitment and ownership of the process – and carried out with three Rs: reflection, relentlessness and resilience.

The process outlined below can be used or adjusted by schools to suit their individual context and the different data sources that they are interrogating. In this booklet NAPLAN data, as presented in the RAAD, will be used exclusively to elucidate and expand each of the steps involved in the process of effective data use.

Process for using data

Step 1.
Collect and store data

Gather and collect good quality data from multiple sources. Develop a process whereby data is well-organised in a central location to allow easy retrieval and review at any time now and in the future.

Step 2. Analyse, interpret and discuss the data

Interrogate the data in a supportive group environment. Aggregate and disaggregate the data in different ways and ask probing questions that reveal information about the students, the teaching, the resources and the school structures.

Step 3. Reflect, gain new understandings and decide on actions

Reflection after the data interrogation and discussions, and further student questioning, will reveal new knowledge and understandings about the sequence of learning involved in concept development. This will guide decisions about changes or adjustments to the types of classroom approaches, strategies and resources already in use.

Step 4. Make decisions and commit to action

Leadership teams, in collaboration with teachers, will decide on which parts of the curriculum to target. From this, a whole school action plan that identifies, prioritises and schedules the professional development, structural changes and resource allocation must be organised. Leadership teams must drive the process and set up regular feedback to keep the plan on track.

Step 5. Feedback to students: include them in the process

Students have to be part of the effective data use process. Provide them with constructive, explicit feedback and support them to develop SMART learning goals (see Section 5, page 30).

Repeat the cycle

This cycle is a never-ending process in that the effect of any change in practice should be monitored. Begin the process again: Collect data …

1.
Data collection and storage

Reliable data from good quality assessments can range from teacher in-class specific skill observation and student reflection information (qualitative) at one end of the spectrum to teacher constructed assessment and standardised diagnostic assessment results (quantitative) at the other. Results from NAPLAN, commercial standardised tests or any of the online diagnostic assessments described on the website shown here provide good examples of quantitative data: www.education.nt.gov.au/teachers-educators/assessment-reporting/diagnostic-assessments

Multiple sources of data allow triangulation and verification of student achievement levels. Putting as many of the assessment jigsaw pieces together as possible will produce a clearer picture of what is happening in the classroom and the school and what specific actions are required to improve results.

Data storage cannot be ad hoc. Files, reports, assessments and subsequent action plans need to be stored systematically, in easy-to-access, clearly labelled digital or hard copy folders. This allows trouble-free retrieval and review of achievement data along with records of previous data analysis sessions. New staff or regional officers should be able to access previous data and discussions. Data should be accessible, but it also needs to be treated confidentially.

In addition to student academic achievement data, parallel school data sets need to be gathered and analysed to inform what changes must occur to make it possible for student learning to improve. Measures such as student demographics, attendance, perceptions, staff and community attitudes, expectations and expertise, school processes, programs and protocols contribute to building a comprehensive picture of a school’s culture.
Understanding these data sets, and the interplay between them, will allow schools to build a big picture view so that teachers and school leaders can reflect on their practices, decide on and instigate change to improve their performance.

2. Analyse, interpret and discuss the data

At its core, effective data use requires a collaborative group of educators coming together regularly to interrogate the data sets and discuss future actions. Presenting data in a variety of formats, aggregating and disaggregating results, filtering down to specific subgroups, and drilling down to the smallest grain size (individual student responses to questions) allows thorough analysis and is essential to effective data use.

By questioning, discussing, deciding and implementing using three levels of data interrogation, a quality set of assessment results can be transformed into an instructional tool to shape strategic professional development and future programming and planning.

Level 1

The top level of data interrogation looks at the school’s overall achievement data for each year level. For example, in NAPLAN each area of testing – numeracy, reading, writing, spelling, grammar and punctuation – could be analysed and discussed using the following questions:
• Does the school mean differ significantly from the national mean?
• How does the school distribution of student achievement compare to the national range (highest 20%, middle 60% & lowest 20%)?
• Who are the students below, at and above the National Minimum Standard?
• Does this data provide information on the impact of specific programs or approaches that teachers are using?
• In what areas of testing did we do well/poorly?
- Did this vary between year levels or was it across the board?
- Does this data validate teachers’ A–E assessment of the students against the Northern Territory or National Curriculum achievement standards?
- Are there any surprises in the overall results?
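The first and third Level 1 questions can also be explored outside the RAAD with a short script. The following is a minimal sketch only: the student records, the national mean and the band treated as the national minimum standard are all invented values for illustration, and the comparison is a simple difference rather than a test of statistical significance.

```python
from statistics import mean

# Hypothetical records: (student, scale score, national band). Not real NAPLAN data.
students = [
    ("Student A", 352, 2),
    ("Student B", 410, 3),
    ("Student C", 298, 1),
    ("Student D", 455, 4),
    ("Student E", 388, 3),
]

NATIONAL_MEAN = 400.0  # assumed value for illustration
NMS_BAND = 2           # assumed band representing the national minimum standard


def nms_group(band, nms_band=NMS_BAND):
    """Classify a band as below, at or above the national minimum standard."""
    if band < nms_band:
        return "below"
    if band == nms_band:
        return "at"
    return "above"


def summarise(records, national_mean=NATIONAL_MEAN):
    """Return the school mean, its gap to the national mean, and students grouped by NMS status."""
    school_mean = mean(score for _, score, _ in records)
    groups = {"below": [], "at": [], "above": []}
    for name, _, band in records:
        groups[nms_group(band)].append(name)
    return school_mean, school_mean - national_mean, groups


school_mean, gap, groups = summarise(students)
print(f"School mean: {school_mean:.1f} (gap to national mean: {gap:+.1f})")
for status, names in groups.items():
    print(f"{status}: {', '.join(names) or 'none'}")
```

A list of names per group, rather than a count, keeps the focus on the question "Who are the students?" so that follow-up can be planned for individuals.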
The Reporting and Analysing Achievement Data (RAAD) program takes the NAPLAN data and presents it in a variety of data analysis displays. At the top level, the display shows:
- achievement across the year level where students can be ordered according to results of below, at or above the national minimum standard
- the spread of the students across the national achievement bands
- student year level achievement as compared to the top 20%, middle 60% and bottom 20% of Australian results.

Year level/cohort data analysis in the RAAD display screens

[Figure: tabular display and graphical display, labelled with means; top 20%, middle 60% and bottom 20%; and above, at and below the national minimum standard]

Achievement distribution across the bands shows whether students are clustering at certain NAPLAN national bands and if their placement is skewed to the lower or upper areas of achievement. This data can be used to validate classroom observations and assessment information.

Schools should reflect on the programs and resources used to drive improvement of the ‘at risk’ students, below minimum standard, and those that are at or just above the minimum standard. It is also important to look at the extensions offered to students who cluster just under or in the top 20% achievement category. Reviewing and rethinking the programs offered to all students will form part of the discussion, with questions that include:
• Why are students clustering in certain bands?
• What do we need to do to move our students on?
• What strategies can we put in place for the students above the national minimum standard but below the Australian mean?
• How do we extend those students who are well above the national minimum standard?

NAPLAN means can be recorded for previous years at a school, region, territory or national level and compared to the 2014 results. Using Excel, the means can be plotted for a number of years and the resulting visual charts can help staff to identify trends.
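The same year-on-year comparison can be done numerically before any chart is drawn. This is a sketch under stated assumptions, not the Excel approach the guide describes: the means below are invented, and `gap_trend` and `is_gap_closing` are hypothetical helper names.

```python
# Hypothetical numeracy means by year: (school mean, Australian mean).
means_by_year = {
    2011: (372.0, 398.0),
    2012: (378.0, 396.0),
    2013: (381.0, 397.0),
    2014: (390.0, 402.0),
}


def gap_trend(means):
    """Return (year, school mean minus Australian mean) pairs in year order."""
    return [(year, school - national)
            for year, (school, national) in sorted(means.items())]


def is_gap_closing(trend):
    """True if the final gap is closer to zero than the first gap."""
    return abs(trend[-1][1]) < abs(trend[0][1])


trend = gap_trend(means_by_year)
for year, gap in trend:
    print(f"{year}: gap to Australian mean {gap:+.1f}")
print("Gap closing over the period:", is_gap_closing(trend))
```

Tracking the gap, rather than the raw school mean, separates a genuine school trend from movement in the national results.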
The graphs below show some of the ways that means can be tracked within a school and compared to the Australian, NT or Regional means. The same cohorts can be tracked every 2 years e.g. 2010 Year 3 to 2012 Year 5 to 2014 Year 7 cohort.

Data analysis over time

These examples show trends over time:
- numbers of students within a year group level that achieved below, at and above the national minimum standard over a 3 year period in the NAPLAN spelling test
- school means for a year level compared to the Australian means over a 4 year period in the NAPLAN numeracy test
- a four-way comparison of school, region, NT and Australian means for reading over a 2 year period (panels: Reading 2013 and Reading 2014).

Staff discussions will include questions like:
• Are results improving at a satisfactory rate over time?
• Can we use these results to prove or disprove the effectiveness of our school program in a certain area?
• Can we use these results to support the need for a change in our school program in a certain area?
• What other evidence can we use to validate the programs we use in our school?

A template for recording and comparing Australian and school means is shown in Appendix G and a variety of ready-made analysis graphs are available on the DoE NT website, see Appendix D page 32.

Level 2

The second level of data interrogation involves disaggregating the results and looking at specific subgroup achievement within the year level for particular assessments. The RAAD, using NAPLAN data from each test area, provides a variety of student filters that can be used to disaggregate the data into subgroups such as class, gender, Indigenous status, LBOTE or ESL groups. It also allows schools to customise filters and create subgroups using specific program codes (see the RAAD User Guide).

Questions similar to the ones above need to be asked for specific groups of students:
• Does the subgroup mean differ significantly from the national mean?
• How does the spread of this subgroup differ from the whole year group?
• What are the specific strengths and weaknesses of this subgroup?

Data analysis focussing on a subgroup

This Assessment Data display shows the question statistics and responses for the females in one class group.

[Figure: Filtered subgroup display]

This disaggregated data set will reveal the particular questions that proved difficult for this group of students and point to curriculum areas of concern. The patterns of response to each of the questions will also show up any common misconceptions within this group. Another type of display – Student Distribution – will show the mean and the spread of this group in graphical form.

Discussion using this data will focus on the special needs of this specific subgroup:
• What specific programs or teaching approaches will produce the best results?
• Are there extra resources that would support these programs?
• What community members have the skills that will influence this group of students?
• Were there any surprises in the results for this group of students?

Data analysis comparing the spread of two subgroups

[Figure: NAPLAN distribution comparison, with 32% of males and 6% of females in the top 20%]

These two distribution displays show the disaggregated achievement data for a Year 5 group of males and females for Numeracy. This subgroup filtering into male and female groups shows the contrasting spread of achievement that the whole year level distribution would not show up. This comparison shows the poor achievement of the Year 5 females in numeracy compared to the males e.g. 32% of males compared to 6% of females are in the top 20% of Australian achievement.

Questions for discussion using this data could be:
• Does the class assessment align with this data?
• How can we engage the young females at this level in relevant mathematical investigations?
• What special approaches or content will tap into their interests?
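A comparison like the one above (the share of each subgroup in the top 20% of Australian achievement) can be reproduced from exported results. The sketch below uses invented scores and an assumed cut score for the top 20%; it does not reproduce the 32% and 6% figures quoted above and is not how the RAAD computes its displays.

```python
from collections import defaultdict

TOP_20_CUT = 520  # assumed scale score marking the top 20% of Australian results

# Hypothetical Year 5 numeracy records: (subgroup, scale score).
records = [
    ("male", 540), ("male", 495), ("male", 530), ("male", 470),
    ("female", 525), ("female", 480), ("female", 460), ("female", 450),
]


def share_in_top_band(rows, cut=TOP_20_CUT):
    """Return {subgroup: percentage of students scoring at or above the cut}."""
    totals = defaultdict(int)
    in_top = defaultdict(int)
    for subgroup, score in rows:
        totals[subgroup] += 1
        if score >= cut:
            in_top[subgroup] += 1
    return {group: 100 * in_top[group] / totals[group] for group in totals}


shares = share_in_top_band(records)
for group, pct in shares.items():
    print(f"{group}: {pct:.0f}% in the top 20%")
```

The subgroup label can be any filter a school uses, such as class or program code, as long as each record carries it.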
A variety of visual presentations of the data, for whole cohort or subgroup analysis, can be very effective when used as springboards for staff discussions about the efficacy of programs being used at the school.

Results from the NAPLAN tests can be exported to an Excel spreadsheet and graphed in a variety of ways. This pie chart visual representation of the data can be used to start discussions about the efficacy of programs and teaching methods being used with this cohort of students.

[Pie chart: National Minimum Standard, showing % above, % at, % below and % absent]

Level 3

The third level of data interrogation looks at the students’ responses to individual questions or items within an assessment. Using NAPLAN data, individual question responses in the numeracy, reading, spelling, punctuation and grammar tests and the criteria scores for writing can be examined in detail.

From this close examination, we can obtain detailed evidence about the students’ conceptual understanding and skill level and discover what may have been previously unknown misconceptions in specific areas of the curriculum. This level of interrogation also gives the classroom teacher insights into his/her instructional practice and programming – the strong points and the gaps.

Analysing the question data can show common areas of the curriculum where students are having difficulty or conversely achieving success. Individual criteria scores will show up areas of poor achievement in specific components of writing in general. Educators can find patterns or trends in student performance by asking questions like:
• Were there specific strands in the Numeracy test where all students or specific groups of students experienced difficulty or success?
• What elements within each sub-strand showed the strongest or poorest performance?
• Were there specific text types – narrative, information, procedural, letter, argument, poem – in the Reading test where students experienced difficulty/success?
• What type of question – literal, inferential, applied – was most problematic for the group?
• Were there particular spelling words or spelling patterns that the group had problems with?
• What aspects of punctuation and grammar did students have trouble answering correctly?
• Which writing criteria did the students perform well in or not so well in?
• What areas of the curriculum did my higher level/lower level students find particularly difficult?

The NAPLAN test papers, answers, writing marking guides and the ‘NAPLAN analysis using a curriculum focus’ support documents are vital resources to have on hand when analysing the Assessment Data reports in the RAAD. It is essential to be able to refer to each individual question, reading text, spelling word or writing criteria description when interrogating the data and diagnosing areas of strength (see Appendix D for these resources).

Numeracy analysis

In any numeracy assessment it is important to resist the urge to look only at the overall score; instead, look at individual item responses. The teacher becomes a detective and looks for common errors which provide clues about shared misconceptions or lack of problem solving skills in certain areas.

In the RAAD tool, numeracy question responses are divided into sub-strands in the Assessment Data screen for easier analysis. Results for a year level, class or other subgroup can be analysed directly from the screen or saved to a clipboard picture or Excel spreadsheet. The question statistics and response details are also provided.

Numeracy data analysis at sub-strand and question level

This Assessment Data screen for the Year 3 2008 Numeracy test shows the Number sub-strand questions and the student responses.
[Figure: Assessment Data screen, with rows labelled Correct answers, Australian % correct, School % correct and Expected % correct]

The three red highlighted questions are all associated with money concepts in Number. The red indicates that the students performed below what was expected for these questions. This points to an area of difficulty for that curriculum area. The green coloured questions indicate areas where the students performed above what was expected and point to an area of strength.

Questions to start group discussion:
• What approaches are used to teach this concept in Years 1, 2 and 3?
• What are the early learning stages in the development of this concept?
• What mathematical language is needed to access these questions?
• What other elements of the Number sub-strand are closely related to these understandings?
• Are teachers confident when teaching this sub-strand?
• What resources support the teaching of this concept?

Numeracy individual question analysis

[Figure: Question 4, 2008 Year 3]

This is question 4 from the Assessment Data screen above. The correct answer is d and the three other alternatives are called distractors. These distractors are chosen deliberately to ‘distract’ from the correct answer, thus testing deep understandings that aren’t swayed by alternatives. This is not to put students off or trick them but to draw out misconceptions and reveal lack of comprehension or understanding.

In many of the numeracy questions, particular distractors show different conceptual misunderstandings while other distractors are chosen by the students due to minor arithmetic errors. In the question above, students who choose the first response, $0.98, are taking the dollar coin to be worth one cent. This is a major misconception compared to that of students who choose $3.45, which indicates that they did not add in the 50 cent coin. This last error could point to a lack of problem solving skills e.g. crossing off the coins as you count them or rechecking your answer.
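The distractor reasoning above can be supported by a simple tally of which option each student chose. This is a minimal sketch with invented responses; the option letters follow the a to d convention used in this guide, and `tally` is a hypothetical helper name.

```python
from collections import Counter

# Hypothetical responses to one multiple-choice question; "" marks no answer.
responses = ["d", "a", "d", "c", "a", "d", "a", "b", "d", ""]
CORRECT = "d"


def tally(answers, correct):
    """Count responses per option and identify the most commonly chosen distractor."""
    counts = Counter(answer if answer else "missing" for answer in answers)
    pct_correct = 100 * counts[correct] / len(answers)
    wrong = {option: n for option, n in counts.items()
             if option not in (correct, "missing")}
    top_distractor = max(wrong, key=wrong.get) if wrong else None
    return counts, pct_correct, top_distractor


counts, pct_correct, top_distractor = tally(responses, CORRECT)
print(f"Correct: {pct_correct:.0f}%")
print(f"Most common distractor: {top_distractor} ({counts[top_distractor]} students)")
```

Once the most common distractor is known, the test paper shows which misconception that option was designed to reveal.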
Questions to start group discussion:
• Which students chose a, b, or c in this question?
• What misconceptions can be concluded from the students who answered a, b or c?
• What problem solving strategies would be useful for this type of problem?
• What follow-up classroom activities will enable the teacher to verify and delve more deeply into this misconception?

Sometimes a general look at the Assessment Data screen will show up a strand where many questions are highlighted in red. This may indicate a weakness in this strand e.g. Year 7 Space, so this would be a good place to start analysis of the items. Other strands which show many green highlighted questions may indicate areas of strength in teacher pedagogy and programs.

The clipboard picture of the item responses shown below is one way for groups of teachers to evaluate their students’ performance. A printed hard copy of this report enables teachers to highlight, circle and question mark any areas of concern. It is ideal for analysis sessions as it shows the big picture for each test so that patterns and trends are easy to identify. It is important to have the test papers on hand to view the questions in detail.

Numeracy analysis with Clipboard Display and Comparison Graphs

[Figure: Clipboard Display]

The RAAD Comparison Graphs are stacked bar graphs that show the questions ordered from left to right by degree of difficulty, from easiest to most difficult. The green part of the bar shows the number of students who answered correctly. The other coloured parts of the bar show the number of incorrect a, b, c or d responses chosen by the students. The red part of the bar shows the missing or incorrect short answers.

Teachers can identify common problems that may also show up across year levels. Schools can categorise the questions and begin to inquire into the methods and strategies used to teach the different sub-strands.
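One way to categorise questions by sub-strand is to compare the school percentage correct with the Australian percentage correct for each question and then average the differences within each sub-strand. The sketch below uses invented figures. It compares against the Australian percentage because how the RAAD derives its "expected % correct" is not described here, so the result is only a rough stand-in for the red and green highlighting.

```python
# Hypothetical rows: (question, sub-strand, Australian % correct, school % correct).
questions = [
    (1, "Number", 85, 88),
    (4, "Number", 62, 41),
    (9, "Space", 70, 52),
    (12, "Measurement", 55, 57),
    (15, "Space", 48, 30),
]


def differences(rows):
    """Return (question, sub-strand, school minus Australian % correct), poorest first."""
    with_diff = [(q, strand, school - aus) for q, strand, aus, school in rows]
    return sorted(with_diff, key=lambda row: row[2])


def weakest_substrand(rows):
    """Return the sub-strand with the lowest average difference."""
    by_strand = {}
    for _, strand, diff in differences(rows):
        by_strand.setdefault(strand, []).append(diff)
    return min(by_strand, key=lambda s: sum(by_strand[s]) / len(by_strand[s]))


for question, strand, diff in differences(questions):
    print(f"Q{question} ({strand}): {diff:+d} percentage points")
print("Sub-strand to look at first:", weakest_substrand(questions))
```

With only a handful of questions per sub-strand, an average can be swayed by a single item, so the per-question list is worth reading alongside it.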
Individual questions may show that many of the students chose a similar incorrect response, which could indicate a common misconception.

Questions to start group discussion:
• For a specific question, was there a commonly selected incorrect distractor?
• What can this error analysis show both in terms of student conceptual development and teaching pedagogy?

Another way to analyse the NAPLAN numeracy results is to use the ‘NAPLAN analysis using a curriculum focus’ (2008-10) Excel spreadsheets found on the Learning Links site with the past tests. Schools can insert their percentage correct for each question and sort the spreadsheet as required. These documents identify the main mathematical skill for each question and provide extension ideas to which teachers can add.

Numeracy analysis using percentage and extended activities

This is a section of the Year 3 Numeracy ‘NAPLAN analysis using a curriculum focus’ spreadsheet. The Australian percentage correct is in the second column and the school’s percentage correct can be inserted into the third column. The next column can be set up to show the difference between the Australian percentage and the school percentage if required.

The 2011 and 2012 analysis documents align the questions to the Australian Curriculum and extend the questions by relating the skills to the four mathematics proficiencies – Fluency, Understanding, Problem Solving and Reasoning. The different strands and sub-strands are colour coded for easy reference. The percentage correct for the school can be inserted into the spreadsheet and compared to the national percentage correct. The questions can be sorted by strand and ordered from the most poorly answered to the best answered questions if required.

Numeracy analysis using percentage correct and proficiencies

The 2014 numeracy analysis documents take the form of a set of PowerPoints with screen shots of each of the NAPLAN questions.
These are grouped into the strands and sub-strands of mathematics e.g. Number and Algebra – Fractions and decimals. The notes view contains focussing questions, extension ideas, statistics, analysis and resources relating to each question.

Numeracy questions for class discussion

This data can stimulate reflection on classroom approaches and practice and act as a focus for collegial discussion about ways to open up everyday problems and improve students’ understanding and thinking. Reflective questions might include:
• How can we develop our understanding of the four mathematics proficiencies?
• What do they look like in the classroom? How can they help with concept development?
• How can we help students to develop effective problem solving strategies and the specific mathematical language needed to explain their understandings?
• What activities will allow students the chance to connect mathematical ideas then explain and justify their reasoning?

Reading analysis

When analysing any reading comprehension data it is important to note what type of text is being used and what kinds of questions are being asked. The NAPLAN questions are divided by text type in the Assessment Data screen of the RAAD tool for easy analysis. As with Numeracy, results for a year level, class or other subgroup can be analysed directly from the screen or saved to a clipboard picture or Excel spreadsheet. The clipboard picture of the item responses is the recommended way for groups of teachers to evaluate their students’ performance in the test.

Reading analysis using text type and question data

[Figure: Reading texts]

Comparing results for different text types

The Assessment Data screen for Reading shows the students’ responses for the first two texts in the test. The first one is an advertisement and the second, a narrative. All the questions were poorly answered in the first text, as shown by the number of a, b, c and d incorrect distractors listed.
The red highlighted expected percentage correct figures and the school percentage correct totals are well below the national percentages correct. Comparisons can be made with the students’ results for the other texts, like the narrative shown here, which shows better results.

This group of students had difficulty answering the comprehension questions for this persuasive advertisement text. The three questions highlighted above and shown below illustrate different levels of comprehension skills from literal to inferential. As the students were able to answer these question types in the other text genres, this could indicate that the genre of the text was the problem rather than the type of questions asked.

Questions from Helping our waterways, with their question descriptors:
- Question 1: What is the main message of these posters? Descriptor: Infers the main message of an advertisement.
- Question 4: How do leaves and clippings kill aquatic life? Descriptor: Locates directly stated information in an advertisement.
- Question 5: According to Poster 2, what should we compost or mulch? Descriptor: Connects information across sentences in an advertisement.

When examining these results, questions to start group discussion would include:
• Have students been exposed to a variety of text types?
• Have students been exposed to the various forms of each text type e.g. advertisements, arguments, discussions (persuasive genre)?
• What strategies do the students use when reading and understanding various text types?
• Why did such a large proportion of the students choose a particular incorrect distractor, like b in question 3?
• Did the students understand the words and language used in the test questions?
• What follow up classroom activities could improve student understandings of this specific type of text?
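The text type comparison described above can be checked with a small calculation that averages the school percentage correct across the questions for each text type. This is a sketch with invented percentages, not figures from the Assessment Data screen, and `mean_by_text_type` is a hypothetical helper name.

```python
from statistics import mean

# Hypothetical rows: (text type, question number, school % correct).
results = [
    ("advertisement", 1, 30), ("advertisement", 2, 42), ("advertisement", 3, 36),
    ("narrative", 4, 68), ("narrative", 5, 74), ("narrative", 6, 62),
]


def mean_by_text_type(rows):
    """Return {text type: mean school % correct across its questions}."""
    grouped = {}
    for text_type, _, pct in rows:
        grouped.setdefault(text_type, []).append(pct)
    return {text_type: mean(values) for text_type, values in grouped.items()}


by_type = mean_by_text_type(results)
for text_type, pct in by_type.items():
    print(f"{text_type}: {pct:.0f}% correct on average")
```

A large gap between text types, with similar question types in each, would support the reading that the genre rather than the question type is the difficulty.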
Reading analysis using text type and question data

[Figure: Year 7 Reading Assessment Data screen for the texts Helping our waterways and A Special Day]

Comparing results for different question types

The Assessment Data screen for Year 7 Reading shows the students’ responses for the first two texts on the test. The first one is an advertisement and the second, a narrative. As previously explained, the question statistics and the student results are shown for each question.

For each text, students answered a variety of questions ranging from literal to applied. The data highlights which particular questions across the genre types (e.g. 1, 5, 7 and 8) were answered poorly. These questions involve higher level critical thinking skills – inference and interpretation – rather than literal questions where the answer is found directly in the text. Examining the responses to common question types allows teachers to find areas of strength and weakness in reading comprehension skill levels.

Reading analysis by question type

[Figure: Year 7 Reading questions]

Question 7 asks the students to interpret the significance of an event in a narrative text. The author’s meaning was not clear to these students, as only 24% chose the correct answer c. Question 8 asks the students to infer a reason for a character’s thinking in a narrative text. Again only 24% of the students chose the correct answer b. Reflection on classroom practices will determine the level of exposure students have to this type of thinking.

Reading Analysis Guide for 2014

Comprehension of any reading text involves the understanding of literal facts directly stated in the passage and inferential meanings implied but not directly stated by the author. For younger students this literal understanding of the text can be termed reading ‘on the lines’ while inferential meaning of the text can be termed reading ‘between the lines’.
The reader also takes the information, evaluates it, then makes connections and applies this to their existing schema. They can then create new knowledge and understandings. The Reading Analysis Guide supports teachers to use the meta-cognitive instructional strategies to improve the reading comprehension levels of all students.

NAPLAN reading tests contain many different types of comprehension questions and it is important to reflect on what level of questioning the students experience in your school. Questions to start group discussion include:
• Is there a pattern of poor response in the literal or inferential types of questions?
• Are the students exposed to a variety of comprehension question types in class?
• What strategies will help students to understand the underlying meaning in texts?
• Do they have regular practice in answering, constructing and classifying different levels of questions – literal, inferential, evaluative and applied?

This data can stimulate reflection on classroom approaches and practice and act as a focus for collegial discussion about effective classroom practice.

Language Conventions analysis

The language conventions of spelling, grammar and punctuation are a critical part of effective writing. Many everyday in-class writing activities and formal assessments such as NAPLAN allow teachers to look for patterns of misconception within a group of students.

In the punctuation questions in the NAPLAN test, students have to choose the correct placement of punctuation marks or the correct multiple choice option as shown in the question below.

Year 9 Language Conventions

37. Which sentence has the correct punctuation?
a) “We need to start rehearsing, said Ms Peters so get your skates on.”
b) “We need to start rehearsing” said Ms Peters “so get your skates on.”
c) “We need to start rehearsing,” said Ms Peters, “so get your skates on.” (correct answer)
d) “We need to start rehearsing,” said Ms Peters, “So get your skates on.” (most students chose d)

The students who chose the incorrect response, d, show a relatively simple punctuation misconception. This could be remedied by explaining that the sentence continues after the speech tag, so a capital S is not needed. Reading this sentence aloud will reinforce the notion of it being one complete sentence rather than two sentences. The students could also look for examples of this rule when reading class texts. Teachers could also set up authentic writing tasks that involve this skill so that, rather than an isolated rule, students see it as a real skill to be mastered and used. Students who chose the incorrect response, a, however, have a more fundamental misconception about direct speech and will need more support and reinforcement than the students who chose d.

In the NAPLAN tests there are a variety of grammar questions. In the item below the students had to choose the correct verb to complete the sentence.

Year 5 Language Conventions

33. Which word correctly completes the sentence?
Sam -------- his homework before he went to soccer training.
a) did
b) done
c) does
d) doing

The correct answer is a, but many students answered b and c. The students’ responses indicated that they had difficulty with the concept of verb tense and agreement. The teachers analysing this data would need to look for other similar errors across the test and follow up with questioning and explicit teaching of this concept.

The Language Conventions Analysis Guide is divided up into Spelling and Grammar and Punctuation. The guide helps teachers to analyse a question that students had difficulty with and provides insight into how to correct this error in understanding.
All the analysis guides are available on the Learning Links site shown in Appendix C.

Language Conventions analysis guide

Questions to start group discussion would include:
• Is there a pattern of responses that highlights similar misconceptions about certain concepts in punctuation and grammar?
• Are the students exposed to and made aware of the variety, form and function of punctuation and grammar used in different texts?
• What sort of effective teaching strategies could we incorporate into our program?

In the NAPLAN Spelling tests, link words appear in more than one year level test e.g. a particular spelling word will appear in the Year 3 and the Year 5 test. This enables comparison of different year level responses to the same spelling word. For example, in 2009 the spelling tests for Year 3 and Year 5 both contained the word ‘stopped’. The results for one school were as follows:
- Year 3 result for spelling ‘stopped’ was 20% correct
- Year 5 result for spelling ‘stopped’ was 47% correct.

Some relevant questions to ask here might be:
• Is there a whole school program for the teaching of spelling?
• Do teachers share and discuss different teaching strategies?
• Are there simple, easy-to-remember spelling rules being used in every classroom? [e.g. ‘When there’s a lonely vowel next to me, then you have to double me.’]
• What are some new approaches and programs – commercial packages, games, computer sites, iPad applications – that are showing improved results?

Note: Numeracy, Reading and Language Conventions NAPLAN tests include link questions – common questions that appear in consecutive year level tests e.g. Year 3 questions appear in the Year 5 test, and Year 5 questions appear in the Year 7 test etc. This provides teachers with the chance to compare the responses of different year levels to the same test items, and monitor improvement in some curriculum areas.
Some questions to be asked of these results are:
• Have the students made sufficient progress in certain areas of the curriculum over the 2 year period?

When using the RAAD, the spelling results for the whole year level or class level can be compared to the Australian results. By hovering over a question number the spelling word appears in a box as shown below.

Spelling analysis using percentage correct

In this school, the Year 3 group on the whole had difficulty spelling the word ‘carefully’ – 21% were correct.

Language Conventions analysis guide – Spelling

In the analysis guide, the spelling words have been organised into lists for each test year level according to the four areas of spelling knowledge – Etymological, Morphemic, Phonological and Visual. Teachers can use this resource to supplement their existing spelling programme. They may wish to use the lists as a starting point and find activities to match the skills listed.

Some relevant questions to ask here might be:
• What spelling derivation activities occur in our classrooms?
• Do teachers share different classroom teaching methods to improve vocabulary and spelling skills e.g. break up and expand words by cutting up word cards and pegging on a clothesline?

The reading, grammar and spelling test item responses can be viewed using the RAAD Comparison Graph. This colourful visual profile helps teachers to make use of the detailed item response data and spot common errors at a glance.

Reading analysis using the Comparison Graph

The green dotted line above shows an approximation of the national profile of performance. The red arrows indicate questions where the students performed lower than the national level and conversely, the green arrows indicate questions where the students achieved a better result than the national profile.
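For schools that export item data from the RAAD to a spreadsheet, the same comparison can be sketched in a few lines of Python. This is only an illustration: the function name `flag_questions`, the 5 percentage point margin and all the percentages are hypothetical and are not taken from the RAAD or from any school's results.

```python
def flag_questions(school_pct, national_pct, margin=5.0):
    """Split question numbers into those well below and well above the national result.

    school_pct and national_pct map question number -> percentage correct.
    margin is an assumed threshold (in percentage points) for a notable gap.
    """
    below, above = [], []
    for question in sorted(school_pct):
        difference = school_pct[question] - national_pct[question]
        if difference <= -margin:
            below.append(question)   # would show as a red arrow on the graph
        elif difference >= margin:
            above.append(question)   # would show as a green arrow on the graph
    return below, above


# Hypothetical percentages correct for five reading questions
school = {1: 35.0, 2: 80.0, 5: 40.0, 7: 24.0, 8: 24.0}
national = {1: 55.0, 2: 78.0, 5: 52.0, 7: 47.0, 8: 45.0}

below, above = flag_questions(school, national)
print("Below national profile:", below)
print("Above national profile:", above)
```

The list of questions below the national profile is a natural starting point for the group discussion, since these are the items where error analysis is most likely to reveal a shared misconception.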
Questions to start group discussion on this data would include:
• What questions did the class or group have difficulty with compared to the national performance?
• For a specific question, was there a common incorrect distractor (a, b, c, or d) that many of the students chose?
• What can this error analysis show both in terms of student conceptual development and teaching pedagogy?

Writing analysis

The Writing task for NAPLAN is the same for all year levels. There is a common stimulus and a common rubric where students are scored using the same 10 criteria. This allows direct comparison between year levels and monitoring of student progress over the 2 years. The NAPLAN persuasive and narrative rubrics can be used in schools for writing tasks and the data can be compared in the same way as shown below.

Writing criteria analysis using mean comparison

The Year 3 writing results in the Assessment Data screen show:
- the Maximum Score for each of the 10 writing criteria
- the Mean (average) score for the whole of Australia at Year 3
- the Mean score for this Year 3 school group.

In Paragraphing and Punctuation this group has performed better than the Australian average. In Sentence Structure, this group has performed below the Australian average.

Sentence structure average: Australian = 2.03, School = 1.86

Questions to start group discussion on this data would include:
• What did the students score in each writing criterion?
• In which criteria did they achieve well or perform poorly compared to the Australian average?
• What do the criteria descriptors for each score level tell us about the features of the students’ writing?
• What strategies, resources and professional learning will improve our teachers’ capability and the school outcomes?

The RAAD Comparison Graphs for Writing show the number of students achieving a certain score for each of the 10 criteria.
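The two views described above (the mean comparison and the count of students at each score) can be reproduced from a list of exported scores. The sketch below is illustrative only: `score_profile` is a hypothetical helper, and the seven student scores are invented so that their mean matches the school figure of 1.86 quoted above; only the Australian mean of 2.03 comes from this guide.

```python
from collections import Counter


def score_profile(scores):
    """Return the score distribution, the most common score and the mean for one criterion."""
    counts = Counter(scores)
    # Most common score; if two scores tie on count, the lower score is reported.
    spike = max(counts, key=lambda score: (counts[score], -score))
    mean_score = round(sum(scores) / len(scores), 2)
    return dict(sorted(counts.items())), spike, mean_score


# Hypothetical Sentence Structure scores for a small Year 3 group
sentence_structure = [1, 1, 2, 2, 2, 2, 3]
australian_mean = 2.03  # Australian mean quoted in this guide

distribution, spike, school_mean = score_profile(sentence_structure)
gap = round(school_mean - australian_mean, 2)

print("Students at each score:", distribution)
print("Most common score:", spike)
print("School mean:", school_mean, "Gap to Australian mean:", gap)
```

A negative gap together with a spike at a low score suggests that most of the group, not just a few students, needs explicit teaching in that criterion.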
Questions to start group discussion would include:
• What score within each criterion should my students be achieving?
• On which score has the group spiked?
• What teaching strategies will help our students move from simple sentences to good quality compound and complex sentences in their writing?
• How can we progress our students onto the next level of writing?

Writing criteria analysis using Comparison Graphs

This visual representation will indicate at a glance the most common score and spread of scores for a group and can be used to ask reflective questions about where students are at in their writing skills.

The RAAD has the ability to export data to Excel spreadsheets and these can be collated to show how results are trending over time. The same cohort could be tracked (on most of the criteria) since 2008. The marking rubrics for Narrative and Persuasive writing differ, but the results for many of the criteria can be compared over time – vocabulary, sentence structure and punctuation.

Writing criteria analysis over time

This spreadsheet allows for a comparison of writing results over a 3 year period for the criterion of Sentence Structure. The different colours represent 2008, 2009 and 2010. This is a visual way to monitor the performance of the students over time. These students are clustering at a score of 2 for this criterion. This can be adjusted to show the 2012-2014 results.

Writing analysis

Teachers can use the Persuasive and Narrative Marking Guides and NAPLAN Writing Analysis Guides to compare samples with their own students’ writing. This document will assist them to plan future programmes for teaching areas of the Australian Curriculum associated with writing. It has been divided up into the 10 writing criteria with one or two samples provided for each category (or marking level).
Comparing trend data over time allows schools to ask questions like:
• Have the programs we are using improved the performance over time?
• What does this mean for the way we teach writing (especially sentence structure)?
• Is a whole school approach needed across all grade levels?

Collaborative data review

Guiding questions for collaborative data review, where school leaders and classroom teachers come together to ask questions about student performance, promote purposeful evidence-based discussion. This is central to maintaining a cycle of inquiry that focuses on improved standards of student achievement. The matrix in Appendix A gives an overview of the questions that collaborative groups of educators need to work through.

Interpretation of data needs to be a highly consultative process that involves all key stakeholders. Data analysis supports teachers to set school and classroom targets, identify strategies for improvement, focus resources, and strategically monitor progress towards those targets.

A collaborative data review process gives teachers a safety net for implementing change – taking risks and improving their craft – and is aimed at creating a no blame culture. Teaching should not be a ‘private profession’ where teachers spend long periods alone with their classes; rather, teachers should be encouraged to collaborate with colleagues in most dimensions of their work including data analysis. A supportive group environment is needed to interrogate the data thoroughly and unlock the real reasons for the students’ results. Many heads mean many solutions. This calls for regular mentoring discussions, not one-off sessions.

Collaboration benefits include:
- Experience and shared skills – a bigger group means more experience, a broader consideration of issues and generation of a wider range of alternative strategies to address issues. The total skill set in a group is greater than the skill set of any one person.
Members of groups learn from each other and with each other.
- Perspective – colleagues may look at each other’s programs, teaching and data from a different perspective e.g. culture, language, family, location, social background.
- Objectivity – colleagues can be more objective than the teacher who is immersed in the culture and emotion of the classroom. Working collaboratively can control bias.
- Emotional support – the evaluative dimension of teaching can be emotionally draining as people may feel they are personally ‘under the microscope’. Support and encouragement can alleviate some of this pressure.
- Shared responsibility – no one teacher can be held accountable for the learning of students. Learning for all students is a shared responsibility.
- Taking ‘blame’ off the agenda – in a collaborative environment there is less risk that people will feel blamed for student under-achievement. A group is more likely to ask: What is happening here? How can we address the issue? Rather than: Who’s to blame? (See the Data Rules in Appendix B)

3. Reflect, gain new understandings and decide on actions

During and after the data interrogation and collaborative discussion, teachers must be open to and responsible for reflecting on their own practice. It is the simple acknowledgement that learning has not occurred (or has not occurred to the desired degree). The teacher response to this must be adjustments or changes in their teaching methods (pedagogy) and programs to address the issues that were evident in the data.

‘One of the most powerful ideas in evidence-based models of teaching and learning is that teachers need to move away from achievement data as saying something about the student, and start considering achievement data as saying something about their teaching.
If students do not know something, or cannot process the information, this should be cues for teacher action, particularly teaching in a different way.’ (Hattie, 2005)

The questions used in this reflection might be:
• What does this data mean for my teaching?
• What is it saying about the students’ learning (or failure to learn)?
• How will I apply this new knowledge and understanding to my teaching?
• What could I change or adjust?
• What could I do differently?

As the ‘senior partner’ in the teaching/learning process the teacher has a professional obligation to determine why the desired learning did not occur and implement changes to improve outcomes. Any teacher who collects achievement data and does not use it to guide further intervention is losing valuable opportunities to improve their own teaching/coaching and help their students reach higher levels of achievement.

The changes in teaching that are likely to be effective will depend on what has been revealed in the data and the reflection and thinking that is part of data analysis and improvement planning. As part of a collaborative group of educators, teachers reflect, formulate a plan and then take action on such factors as:
- The design of the learning environment
- Classroom planning and programming – setting goals/targets
- Changes/adjustments to pedagogy and the associated professional learning required
- Grouping of students and explicit teaching tailored for these groups
- Use of resources, IT and manipulatives
- Use of paraprofessionals
- Community involvement – using the varied skill sets to improve student engagement

4. Make decisions and commit to action

Leadership teams, in collaboration with teachers, decide on which parts of the curriculum to target. From this, a whole school action plan that identifies, prioritises and schedules professional development, structural changes and resource allocation must be organised.
Leadership teams must drive the process and set up regular feedback to keep the plan on track.
• What is it that we need to work on most?
• Why did we get these student results?

Data-driven decisions and whole school action plans require:
- an active, effective leadership team with vision
- the careful examination, discussion and analysis of all data sets
- particular priority areas of the curriculum to be chosen.

Action plan includes:
- Goals/targets
- Teachers/students
- Strategies/approaches
- Resources/programs
- Dates and times
- Feedback loops

A limited number of areas are chosen at one time and reviewed at the end of a set period of time (term, semester or year depending on the areas). Schools should only focus on a few specific core challenges – that’s where the change will begin!

Example:
- Reading – inferential comprehension of texts
- Writing – sentence structure and punctuation
- Mathematics – geometry concepts or fraction and place value understandings.

Immediate attention and commitment needs to be given by ALL staff to address these focus areas. Students also need to know the targeted areas for improvement.

School leaders have the responsibility to facilitate and drive:
- Collection and storage of data
- Analysis, interpretation, collaborative discussion and action
- Clarification of issues and target-setting (bring it all together)
- Mentoring support, professional development and resource allocation
- Follow up meetings/reviews/discussions to check progress.

School leaders have to become data ‘coaches’. Instructional improvement requires more than just presenting the data and expecting it to automatically transform teachers’ thinking. Rather, teachers may need sensitive coaching and facilitation to study their data and to build bridges between data and instructional decision making. Leaders can’t just ‘tell staff what to change’; rather, they need to focus thoughtful discussion and move it into action. Coaches ask such questions as:
- How are we doing?
What are we doing best? What do these assessments tell us?
- What are we missing? Is this the best we can do? Where should we place more emphasis?
- What do we already do that we can do more of? Is there something we can do sooner in the year? Where can we place less emphasis?

Data capacity and culture

Leaders have to build skill sets and put in place time to collaboratively interrogate the data effectively e.g. ‘data teams’ work together at regular intervals. They have to get people to believe in the data and get excited by it. The 3 Ps – people, passion and proof – mean that everyone has the responsibility, commitment and ownership of the data decision making process. Data use determines professional learning needs, intervention requirements, and resource allocation. It focuses discussions about teaching, learning and assessment, guides teacher instruction and monitors progress. Teachers and administration teams have a shared belief in its value.

5. Feedback to students and classroom/school changes

Explicit feedback delivered face-to-face opens possibilities for powerful learning. This is more potent when the process includes teaching the student how to analyse and use data generated from previous learning. When students know and understand what it is that they are expected to learn (preferably through a model or exemplar), how this learning connects to previous learning, how learning will be assessed and how they can participate in the process, they are more likely to be actively engaged in learning.

‘The most powerful single moderator that enhances achievement is feedback.’ Hattie (2005)

Peer assessment, peer tutoring and peer teaching can enhance the level of student engagement. Insisting that students use data in these processes helps maintain rigor and discipline. Effective teachers teach students how to use data to inform their own learning.
These students can:
- look objectively at personal performances and self-correct where appropriate
- use exemplars, performance criteria and standards to guide performance
- set SMART learning targets or goals and coach and mentor others.

SMART:
S – Specific
M – Measurable
A – Achievable
R – Realistic
T – Time-related

Effective feedback helps students to learn and improve. It is recommended that feedback is:

Non-threatening: Feedback should be part of the classroom culture, where it is seen as a positive and necessary step in any learning. It should be viewed as encouragement and improve confidence and self-esteem. This can establish good communication based on trust. Resources such as Tribes and The Collaborative Classroom may be useful.

Constructive and purposeful: Feedback should reflect the steps achieved so far towards the learning goal as well as highlighting the strengths and weaknesses of a piece of work or performance. It should set out ways in which the student can improve the work. It encourages students to think critically about their work and to reflect on what they need to do to improve.

Timely: Feedback is given while the work is still fresh in a student’s mind throughout the semester rather than just at the end. Regular timely feedback enables students to incorporate feedback into later assessment tasks.

Meaningful and clear: Feedback should target individual needs and be linked to specific achievement standards. It should ask the questions to help make connections and prompt new ideas and/or suggest alternative strategies to solve a problem or produce a product. Prioritise and limit feedback to include 2-3 specific recommendations. Teachers should ask students about what feedback or types of feedback they find most helpful and what feedback is not so helpful.

Acted upon: How students receive, interact with and act on feedback is critical to the development of their learning. They should become collaborative partners in the feedback and learning process.
Teachers need to incorporate effective feedback into their planning and everyday classroom practice.

Questions about when to give feedback, what sort and how much feedback will include:
• What constructive feedback can I provide to learners?
• How should this be presented?
• How can I make feedback effective and avoid comparisons with other students’ performances?
• How can rubrics help students to improve their performance and/or deepen understanding of the concepts?
• How can the data be used to help students become more deeply engaged in their learning?
• How can I involve the students in this?

The Individual Profile screen in the RAAD will allow students to look at their own performance on the NAPLAN tests. The example below shows the individual student’s name and scaled score, which is the dark line. More importantly, the question descriptors and numbers for a particular test are listed in order of difficulty, with the correctly answered questions in green and those answered incorrectly in red.

Individual Profile of NAPLAN results

By using this report and copies of the test papers, students will be able to review their responses. They can work out which incorrect answers were the result of minor errors and which were the result of fundamental misconceptions. This analysis can be done independently or jointly with the teacher or assistant teacher. Similarly, if students become familiar with the 10 criteria in the writing marking rubric they can review their piece of writing against the scores and descriptors for each criterion and use this information to highlight areas where their skills require improvement. The writing marking guide is available on the Learning Links site, see Appendix C.

Repeat the cycle

This cycle is a never-ending process in that the effect of any change in practice should be monitored.
Begin the process again: Collect data …and start all over again…

Appendix A

Data sources* and question matrix

Driving questions

What?
- What do learners already know?
- What concepts are already in place?
- What ‘gaps’ are evident?

So What?
- Where do learners need and want to be?
- What skills do students need to develop?
- What are they ready to learn next?

Now What?
- How do learners best learn?
- What approach is working?
- What strategies can we put in place?
- What professional learning is needed to improve practice?
- What resources does the school need?

Data sources
- NAPLAN test results
- NT and Australian Curriculum: Teacher observations, tests, projects, performances, tasks etc.
- Commercial or web-based test results: PAT, Torch, RAZ Reading, OnDemand, On-line Maths Interview, CMIT (SENA 1 & 2)
- Students’ learning logs, reflections and self-assessment rubrics
- Continuum monitoring: First Steps, T–9 Net etc.

*Data sources can be quantitative, with associated marks, scores, grades, levels, and percentages e.g. tests, projects, assignments, or qualitative, as in teacher anecdotal notes, continuum tracking, surveys, rubric descriptions, interview responses, student reflection and learning logs etc. All are valid and enable triangulation of data to get a better ‘fix’ on student development.

Appendix B

Data Rules

Here are two sets of data rules that can be put into a chart or card and be used for collaborative data analysis sessions. Staff may amend/add/delete details to make their own Data Rules.
Data Rules
- This is a safe room
- There is no rank in this room
- All ideas are valid
- Each person gets a chance to speak
- Each person gets a chance to listen
- We are here to focus on the future
- Our purpose is improvement, not blame
Victoria Bernhardt (2007)

Rules for using Data
- Let the data do the talking
- We will not use data to blame or shame ourselves or others
- We’re all in this together
- Keep the focus on the kids

Appendix C

Support documents

Copies of NAPLAN support and analysis documents plus past test papers and answers from 2008 to 2014 can be found on the DoE Learning Links portal shown below:
http://ed.ntschools.net/ll/assess/naplan/Pages/default.aspx

A CD containing the 2014 test papers and analysis documents was sent to every school.
- Writing, Reading and Language Conventions analysis documents provide curriculum links, statistics and teaching ideas for every year level.
- Numeracy PowerPoints with screenshots of the questions and notes pages – teaching ideas, resource links and statistics.

Appendix D

National Reports

The National Summary Report and student reports are released at the end of Term 3. This report provides the percentage of students achieving at each band for each year level test for all states and territories.

The full report – National Assessment Program Literacy and Numeracy Achievement – will be published in December 2014. This report builds on the National Summary Report and provides detailed student achievement for all states and territories by geolocation, gender, Indigeneity and Language Background other than English.

The National Summary Report and the full report will be available on the DET website: www.education.nt.gov.au/teachers-educators/assessment-reporting/nap and the ACARA website: www.nap.edu.au/Test_Results/National_reports/index.html

Student reports

Schools and parents are provided with information in the student report.
The student reports show achievement of individual students, together with graphic representation of the student’s performance in comparison to other students in the same year group across Australia (national mean) on the common achievement band scale.

The 2014 student reports for some NT schools will include the school average. It was agreed nationally to include this additional information in the student report so that parents have not only the national average to compare their child’s achievement but also the school average. However, analysis of the averages has shown that for some NT schools the school mean is not reliable. The accuracy of the school average is influenced by both the student cohort size and the range of student performance for that year level in a school. To maintain valid and accurate reporting to parents, it was decided by the Minister that inclusion of the school average will only occur for schools where there is confidence in the accuracy and validity of the information being reported.

Year 5 Report – labels on the sample report:
- If a student’s result is here, they are well above the expected level for that year.
- Student’s result
- National mean for Year 5
- Light blue area represents the middle 60% of students
- School mean for this student
- National minimum standard is Band 4 for Year 5. Students in Band 3 are below the national minimum standard for Year 5.

Appendix E

NAPLAN BANDS

For each assessed area a NAPLAN scale of achievement has been developed. The performance of Years 3, 5, 7 and 9 students is measured on a single common achievement scale. It is divided into 10 achievement bands representing increasing demonstrations of proficiency in skills and understandings. This also enables individual achievement to be compared to the national average. Every raw score for a test is converted into a NAPLAN scaled score out of 1000.
For example, an average Year 3 student’s score is around 400, while an average Year 9 score would be around 580. This is a vertical scale, so a student’s score in 2010 can be compared to their 2012 and 2014 scores to gauge progress (although not in writing, as the genre has changed).

Student results are reported against the NAPLAN achievement bands. There are 5 separate scales: one each for reading, writing, spelling, grammar and punctuation, and numeracy. A score in one assessed area is not comparable with a score in another test domain.

The achievement scale’s 10 bands are represented across the four year groups, with each year group being reported across 6 bands. This allows for tracking and monitoring of student progress over time. Refer to the graphical representation of the achievement scale below.

The second bottom band for each year level represents the national minimum standard (orange). This standard represents the minimum range of acceptable performance for the given year level as demonstrated on the assessment. Students whose achievement places them in the band below are identified as below the national minimum standard (red). For a more detailed description of the national minimum standards go to: http://www.nap.edu.au/results-and-reports/student-reports.html

Students who are below the national minimum standard should receive focussed intervention and support to help them achieve required literacy and numeracy skills. Students who are at the national minimum standard are at risk of slipping behind and need close monitoring and additional support.

Appendix F

Means summary sheet

The RAAD Student Distribution – NAPLAN screen shows the school and Australian means. This template can be used to collate and compare all the means. This top level analysis data may provide a starting point for closer analysis and action in certain areas of the curriculum for each year level.
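Once the means have been collated, the comparison the template supports can also be sketched in Python. This is a hedged illustration only: `rank_mean_gaps` is a hypothetical helper and every mean below is an invented placeholder, not an actual Australian or school result.

```python
def rank_mean_gaps(school_means, australian_means):
    """Return (domain, gap) pairs ordered from the largest to the smallest absolute gap.

    gap = school mean minus Australian mean, in NAPLAN scale points.
    """
    gaps = {
        domain: round(school_means[domain] - australian_means[domain], 1)
        for domain in school_means
    }
    return sorted(gaps.items(), key=lambda item: abs(item[1]), reverse=True)


# Placeholder Year 3 means (invented for illustration)
australian_means = {
    "Reading": 418.0,
    "Writing": 402.0,
    "Spelling": 412.0,
    "Punctuation and Grammar": 426.0,
    "Numeracy": 402.0,
}
school_means = {
    "Reading": 380.0,
    "Writing": 395.0,
    "Spelling": 415.0,
    "Punctuation and Grammar": 390.0,
    "Numeracy": 398.0,
}

ranked = rank_mean_gaps(school_means, australian_means)
for domain, gap in ranked:
    print(f"{domain}: {gap:+.1f}")
```

Ordering by the size of the gap puts the domains that differ most from the Australian mean at the top of the list, which is where closer Level 2 and Level 3 analysis would usually begin.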
In what areas did our school mean vary greatly from the Australian mean?

2014 NAPLAN Means           Year 3             Year 5
                            Aust    School     Aust    School
Reading
Writing
Spelling
Punctuation and Grammar
Numeracy

2014 NAPLAN Means           Year 7             Year 9
                            Aust    School     Aust    School
Reading
Writing
Spelling
Punctuation and Grammar
Numeracy

Appendix G

Analysis and Action Record Sheets

Schools may create documents that record the analysis of a particular set of assessment data and the resultant decisions about what professional learning, adjustments to practice, change in programming and allocation of resources are required to improve results.

Example record sheets:
- Student-by-student needs analysis record
- Group needs analysis record
- Professional learning goals of an individual teacher or team
- Assessment and analysis sheet and instructional plan

Ghiran Byrne in the Darwin Regional team (8999 5603) and Leanne Linton in the Assessment and Reporting unit (8944 9248) have developed the example resources shown here.

References

Articles from Educational Leadership Dec 2008/Jan 2009 Vol 66 Number 4 www.ascd.org/

Bernhardt, Victoria L. (2009) Data, Data Everywhere Larchmont, NY: Eye On Education

Bernhardt, Victoria L. (2007) Translating Data into Information to Improve Teaching and Learning Larchmont, NY: Eye On Education

Burke, P. et al (2001) Turning Points Transforming Middle Schools: Looking Collaboratively at Student and Teacher Work Boston MA: Center for Collaborative Education www.turningpts.org

Department of Education Queensland (2009) Various Guidelines on Curriculum Leadership, Curriculum Planning, Data Use, Assessment, Moderation and Reporting. education.qld.gov.au/curriculum/framework/p-12/curriculum-planning.html

Foster, M. (2009) Using assessment evidence to improve teaching and learning PowerPoint presentation at the ACER 2009 Assessment Conference

Glasson, T. (2009) Improving Student Achievement: A practical guide to assessment for learning Vic: Curriculum Corporation

Hattie, J (2005) What is the Nature of Evidence that Makes a Difference to Learning? Paper and PowerPoint presented at the 2005 ACER ‘Using Data to Support Learning’ Conference research.acer.edu.au/research_conference_2005/

Holcomb, E. (2004) Getting Excited about Data: Combining People, Passion and Proof to Maximize Student Achievement California: Corwin Press

Ikemoto, G. & Marsh, J. (2005) Cutting through the “Data-Driven” Mantra: Different Conceptions of Data-Driven Decision Making Chicago, IL: National Society for the Study of Education

Perso, Dr Thelma (2009) Data Literacy and learning from NAPLAN PowerPoint (NT workshop presentation)

Timperley, H. (2009) Using assessment data for improving teaching practice Paper presented at the 2009 ACER Assessment Conference research.acer.edu.au/research_conference/RC2009/17august/

Wellman, B and Lipton, L (2008) Data-Driven Dialogue: A Facilitator’s Guide to Collaborative Inquiry Sherman, CT: Mira