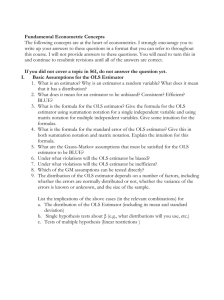

Introduction to Econometrics Fall 2008 Mid-Term Exam 2


Please answer all questions and show your work. Clearly state your answer to each problem. Answers without justification/explanation will not be given credit.

Problem 1. (total 25 points) Using a sample of 1801 black individuals, the following earnings equation has been estimated:

$$\widehat{\ln(\text{earnings})} = \underset{(.135)}{7.059} + \underset{(.008)}{0.147}\,\text{educ} + \underset{(.007)}{0.049}\,\text{experience} - \underset{(.036)}{0.201}\,\text{female}$$

$$R^2 = 0.179, \quad n = 1801$$

where the standard errors are reported in parentheses.

(a) Interpret the coefficient estimate on female.

In answering parts (b)-(c), you must write down: (i) the null and alternative hypotheses; (ii) the test statistic; (iii) the rejection rule.

(b) Test the hypothesis that there is no difference in expected earnings between black women and black men. Test this hypothesis against a two-sided alternative, using the 5% significance level.

(c) Dropping experience and female from the equation gives:

$$\widehat{\ln(\text{earnings})} = \underset{(.182)}{6.703} + \underset{(.012)}{0.151}\,\text{educ}$$

$$R^2 = 0.153, \quad n = 1801$$

Are experience and female jointly significant in the original equation at the 5% significance level?

Answer:

(a) Holding education and experience fixed, the average earnings of females are approximately $0.201 \times 100\% = 20.1\%$ lower than those of males.

(b) The hypotheses are

$$H_0: \beta_{female} = 0, \qquad H_1: \beta_{female} \neq 0$$

The test statistic is

$$t = \frac{b_{female}}{se(b_{female})} \overset{H_0}{\sim} t_{n-K-1}$$

The degrees of freedom: $n - K - 1 = 1801 - 4 = 1797$.

Reject if: $|t| > t_{critical} = 1.96$.

The value of the t-statistic is

$$|t| = \frac{0.201}{0.036} = 5.583 > 1.96$$

and hence we reject the null hypothesis of no difference in the expected earnings between black females and males.

(c) The hypotheses are

$$H_0: \beta_{exper} = \beta_{female} = 0, \qquad H_1: H_0 \text{ is not true}$$

The test statistic is

$$F = \frac{(R^2_{ur} - R^2_{r})/q}{(1 - R^2_{ur})/(n-K-1)} \overset{H_0}{\sim} F_{q,\,n-K-1}$$

The degrees of freedom: $q = 2$ and $n - K - 1 = 1801 - 4 = 1797$.

Reject if: $F > F_{critical} = 3.0$.

The value of the test statistic is

$$F = \frac{(0.179 - 0.153)/2}{(1 - 0.179)/1797} = \frac{0.026}{0.821}\,(898.5) = 28.45 > 3$$

and hence experience and female are jointly significant at the 5% significance level.

Problem 2.
(total 15 points) Consider the following regression model:

$$y_i = \beta_0 + \beta_1 x_i + \beta_2 z_i + u_i, \quad i = 1, \dots, n$$

where $(y_i, x_i, z_i)$ are i.i.d. and

$$E(u_i \mid x_i, z_i) = 0, \qquad E(u_i^2 \mid x_i, z_i) = \sigma^2 x_i^4$$

How would you obtain the Best Linear Unbiased Estimator (BLUE) for this regression? In answering this question, you must write down any required transformation and explain why you need it.

Answer: The disturbances in this model are mean-independent but heteroscedastic. To obtain the GLS estimator, which is the BLUE in this model, we need to induce an equal variance across all observations. This can be achieved by dividing the $i$-th equation by $\sqrt{x_i^4} = x_i^2$:

$$\frac{y_i}{x_i^2} = \beta_0 \frac{1}{x_i^2} + \beta_1 \frac{1}{x_i} + \beta_2 \frac{z_i}{x_i^2} + \frac{u_i}{x_i^2}, \quad i = 1, \dots, n$$

Rewrite the transformed model as:

$$y_i^* = \beta_0 w_i + \beta_1 x_i^* + \beta_2 z_i^* + u_i^*, \quad i = 1, \dots, n \tag{1}$$

where $y_i^* = y_i/x_i^2$, $w_i = 1/x_i^2$, $x_i^* = 1/x_i$, $z_i^* = z_i/x_i^2$ and $u_i^* = u_i/x_i^2$. The transformed error is homoscedastic, since $E(u_i^{*2} \mid x_i, z_i) = \sigma^2 x_i^4 / x_i^4 = \sigma^2$. Then the OLS estimator of the transformed model (1) is the GLS estimator of the original model.

Problem 3. (total 60 points) Consider the linear probability model:

$$y_i = X_i \beta + u_i, \quad i = 1, \dots, n$$

where $y_i$ is a binary variable equal to 1 or 0; $X_i$ is the vector of observed explanatory variables; and $u_i$ is a zero-mean error term such that $E(u_i u_j \mid X_i, X_j) = 0$ for $i \neq j$.

(a) (15 points) What is $P(y_i = 1 \mid X_i)$? Derive the conditional distribution of $u_i \mid X_i$. Show that it has mean 0 and find its variance.

(b) (15 points) Show that the OLS estimator of $\beta$ is unbiased and find its variance.

(c) (10 points) Is the OLS estimator the BLUE of $\beta$? If not, give the expression for the BLUE of $\beta$.

(d) (5 points) Discuss the drawbacks/problems of the linear probability model.

(e) (15 points) What alternative models/specifications could one use to avoid the problems associated with the linear probability model? Write down the optimization problem that needs to be solved to estimate those alternative models.
Answer:

(a) $P(y_i = 1 \mid X_i) = X_i\beta$. Conditional on $X_i$, if $y_i = 1$ then $u_i = 1 - X_i\beta$ with probability $X_i\beta$, and if $y_i = 0$ then $u_i = -X_i\beta$ with probability $1 - X_i\beta$. Then, we have

$$E(u_i \mid X_i) = (1 - X_i\beta)\,X_i\beta + (-X_i\beta)(1 - X_i\beta) = 0 \tag{2}$$

$$Var(u_i \mid X_i) = E(u_i^2 \mid X_i) = (1 - X_i\beta)^2 X_i\beta + (-X_i\beta)^2 (1 - X_i\beta) = (1 - X_i\beta)\,X_i\beta$$

Hence, $E(u \mid X) = 0$ and

$$Var(u \mid X) = \Omega = \begin{bmatrix} (1 - X_1\beta)X_1\beta & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & (1 - X_n\beta)X_n\beta \end{bmatrix}$$

Thus, the disturbances are heteroscedastic.

(b) The OLS estimator is given by

$$b = (X'X)^{-1}X'y = \beta + (X'X)^{-1}X'u$$

Taking conditional expectations gives:

$$E(b \mid X) = \beta + (X'X)^{-1}X'E(u \mid X) = \beta$$

since $E(u \mid X) = 0$; by the law of iterated expectations, $E(b) = \beta$ as well. The variance of the OLS estimator is

$$Var(b \mid X) = E\left[(b - \beta)(b - \beta)' \mid X\right] = (X'X)^{-1}X'E(uu' \mid X)X(X'X)^{-1} = (X'X)^{-1}X'\Omega X(X'X)^{-1}$$

(c) The OLS estimator is not the BLUE of $\beta$, since the conditional homoscedasticity assumption is violated. The BLUE of $\beta$ is the GLS estimator given by:

$$\tilde{\beta} = (X'\Omega^{-1}X)^{-1}X'\Omega^{-1}y$$

(d) The main drawback of the linear probability model is that $X_i\beta$ is not constrained to lie between 0 and 1, so both the implied probability $P(y_i = 1 \mid X_i) = X_i\beta$ and the implied variance $Var(u_i \mid X_i) = (1 - X_i\beta)X_i\beta$ may be negative.

(e) To avoid these problems one could instead use the following specification:

$$P(y_i = 1 \mid X_i) = G(X_i\beta)$$

where $G(z)$ is some cumulative distribution function; for example, $G(z)$ is the c.d.f. of a normal distribution or the c.d.f. of a logistic distribution. To estimate this model, one maximizes the likelihood or log-likelihood function:

$$\max_{\beta}\; L(\beta; X) = \prod_{i=1}^{n} \left[G(X_i\beta)\right]^{y_i} \left[1 - G(X_i\beta)\right]^{1 - y_i}$$

or

$$\max_{\beta}\; \ln L(\beta; X) = \sum_{i:\,y_i = 1} y_i \ln\left[G(X_i\beta)\right] + \sum_{i:\,y_i = 0} (1 - y_i) \ln\left[1 - G(X_i\beta)\right]$$

The resulting estimators are called probit for the normal c.d.f. and logit for the logistic c.d.f.
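
The log-likelihood in part (e) has no closed-form maximizer, so in practice it is maximized numerically. The following is a minimal Python sketch of that idea and is not part of the original exam solution: the function names, the toy data, and the choice of Newton-Raphson for the logit case are all illustrative assumptions. It uses only the standard library and handles a model with an intercept and one regressor.

```python
import math

def logistic_cdf(z):
    """Logistic c.d.f., the choice of G that gives the logit model."""
    return 1.0 / (1.0 + math.exp(-z))

def normal_cdf(z):
    """Standard normal c.d.f., the choice of G that gives the probit model."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def log_likelihood(beta, X, y, G):
    """ln L = sum_i { y_i ln G(X_i beta) + (1 - y_i) ln[1 - G(X_i beta)] }."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        p = G(sum(b * x for b, x in zip(beta, x_i)))
        total += y_i * math.log(p) + (1 - y_i) * math.log(1.0 - p)
    return total

def fit_logit(X, y, steps=25):
    """Newton-Raphson for a logit with two coefficients (intercept, slope)."""
    b0, b1 = 0.0, 0.0
    for _ in range(steps):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for (c, x), y_i in zip(X, y):
            p = logistic_cdf(b0 * c + b1 * x)
            w = p * (1.0 - p)
            g0 += (y_i - p) * c      # score with respect to the intercept
            g1 += (y_i - p) * x      # score with respect to the slope
            h00 += w * c * c         # entries of the 2x2 information matrix
            h01 += w * c * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: beta <- beta + (information matrix)^{-1} * score
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return [b0, b1]

# Hypothetical toy data (not from the exam): each row of X is (1, x_i).
X = [(1.0, 0.5), (1.0, 1.0), (1.0, 1.5), (1.0, 2.0),
     (1.0, 2.5), (1.0, 3.0), (1.0, 3.5), (1.0, 4.0)]
y = [0, 0, 1, 0, 1, 0, 1, 1]

beta_hat = fit_logit(X, y)
print("logit estimates:", beta_hat)
print("maximized log-likelihood:", log_likelihood(beta_hat, X, y, logistic_cdf))
```

Passing `normal_cdf` instead of `logistic_cdf` to `log_likelihood` evaluates the probit objective for the same data; maximizing it would need a different score and Hessian than the logit-specific ones hard-coded in `fit_logit`.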