Introduction to Statistical Methods Descriptive Statistics

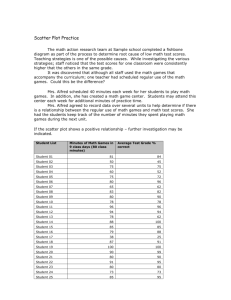

advertisement