chapter 4 asymptotic theory for linear regression models with iid

advertisement

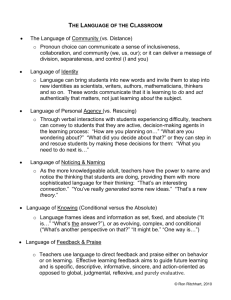

CHAPTER 4 ASYMPTOTIC THEORY FOR LINEAR REGRESSION MODELS WITH I.I.D. OBSERVATIONS

Key words: Asymptotics, Almost sure convergence, Central limit theorem, Convergence in distribution, Convergence in quadratic mean, Convergence in probability, I.I.D., Law of large numbers, the Slutsky theorem, White’s heteroskedasticity-consistent variance estimator.

Abstract: When the conditional normality assumption on the regression error does not hold, the OLS estimator no longer has a finite sample normal distribution, and the t-test and F-test statistics no longer follow a Student t-distribution and an F-distribution in finite samples, respectively. In this chapter, we show that under the assumption of i.i.d. observations with conditional homoskedasticity, the classical t-test and F-test are approximately applicable in large samples. However, under conditional heteroskedasticity, the t-test and F-test statistics are not applicable even when the sample size goes to infinity. Instead, White’s (1980) heteroskedasticity-consistent variance estimator should be used, which yields asymptotically valid hypothesis test procedures. A direct test for conditional heteroskedasticity due to White (1980) is presented. To facilitate asymptotic analysis in this and subsequent chapters, we first introduce some basic tools in asymptotic analysis.

Motivation

The assumptions of classical linear regression models are rather strong, and one may have a hard time finding practical applications where all these assumptions hold exactly. For example, it has been documented that most economic and financial data have heavy tails, and so are not normally distributed. An interesting question now is whether the estimators and tests which are based on the same principles as before still make sense in this more general setting. In particular, what happens to the OLS estimator and the t- and F-tests if any of the following assumptions fails:

strict exogeneity ($E(\varepsilon_t|X) = 0$)?
normality ($\varepsilon|X \sim N(0, \sigma^2 I)$)?
conditional homoskedasticity ($\mathrm{var}(\varepsilon_t|X) = \sigma^2$)?
serial uncorrelatedness ($\mathrm{cov}(\varepsilon_t, \varepsilon_s|X) = 0$ for $t \neq s$)?

When classical assumptions are violated, we no longer know the finite sample statistical properties of the estimators and test statistics. A useful tool for understanding the properties of estimators and tests in this more general setting is to pretend that we can obtain a limitless number of observations. We can then ask how estimators and test statistics would behave when the number of observations increases without limit. This is called asymptotic analysis. In practice, the sample size is always finite. However, the asymptotic properties translate into results that hold approximately in finite samples, provided that the sample size is large enough. We now need to introduce some basic analytic tools for asymptotic theory. For a more systematic introduction to asymptotic theory, see, for example, White (1994, 1999).

Introduction to Asymptotic Theory

In this section, we introduce some important convergence concepts and limit theorems. First, we introduce the concept of convergence in mean squares, which is a distance measure of a sequence of random variables from a random variable.

Definition [Convergence in mean squares (or in quadratic mean)]: A sequence of random variables/vectors/matrices $Z_n$, $n = 1, 2, \ldots$, is said to converge to $Z$ in mean squares as $n \to \infty$ if

$$E\|Z_n - Z\|^2 \to 0 \quad \text{as } n \to \infty,$$

where $\|\cdot\|$ is the sum of the absolute value of each component in $Z_n - Z$.

Remarks: (i) When $Z_n$ is a vector or matrix, convergence can be understood as convergence in each element of $Z_n$.
(ii) When $Z_n - Z$ is an $l \times m$ matrix, where $l$ and $m$ are fixed positive integers, we can also define the squared norm as

$$\|Z_n - Z\|^2 = \sum_{t=1}^{l}\sum_{s=1}^{m} [Z_n - Z]^2_{(t,s)}.$$

Example 1: Suppose $\{Z_t\}$ is i.i.d.$(\mu, \sigma^2)$, and $\bar{Z}_n = n^{-1}\sum_{t=1}^{n} Z_t$. Then $\bar{Z}_n \xrightarrow{q.m.} \mu$.

Solution: Because $E(\bar{Z}_n) = \mu$, we have

$$E(\bar{Z}_n - \mu)^2 = \mathrm{var}(\bar{Z}_n) = \mathrm{var}\left(n^{-1}\sum_{t=1}^{n} Z_t\right) = \frac{1}{n^2}\,\mathrm{var}\left(\sum_{t=1}^{n} Z_t\right) = \frac{1}{n^2}\sum_{t=1}^{n}\mathrm{var}(Z_t) = \frac{\sigma^2}{n} \to 0 \quad \text{as } n \to \infty,$$

where the fourth equality uses independence. It follows that $E(\bar{Z}_n - \mu)^2 = \sigma^2/n \to 0$ as $n \to \infty$.

Next, we introduce the concept of convergence in probability, which is another popular distance measure between a sequence of random variables and a random variable.

Definition [Convergence in probability]: $Z_n$ converges to $Z$ in probability if for any given constant $\varepsilon > 0$,

$$\Pr[\|Z_n - Z\| > \varepsilon] \to 0 \quad \text{as } n \to \infty,$$

or equivalently

$$\Pr[\|Z_n - Z\| \le \varepsilon] \to 1 \quad \text{as } n \to \infty.$$

Remarks: (i) We can also write $Z_n - Z \xrightarrow{p} 0$ or $Z_n - Z = o_P(1)$.
(ii) When $Z = b$ is a constant, we can write $Z_n \xrightarrow{p} b$, and $b = \mathrm{p\,lim}\, Z_n$ is called the probability limit of $Z_n$.
(iii) The notation $o_P(1)$ means that $Z_n - b$ vanishes to zero in probability.
(iv) Convergence in probability is also called weak convergence or convergence with probability approaching one.
(v) When $Z_n \xrightarrow{p} Z$, the probability that the difference $\|Z_n - Z\|$ exceeds any given small constant $\varepsilon$ is rather small for all $n$ sufficiently large. In other words, $Z_n$ will be very close to $Z$ with very high probability when the sample size $n$ is sufficiently large.

Lemma [Weak Law of Large Numbers (WLLN) for I.I.D. Sample]: Suppose $\{Z_t\}$ is i.i.d.$(\mu, \sigma^2)$, and define $\bar{Z}_n = n^{-1}\sum_{t=1}^{n} Z_t$, $n = 1, 2, \ldots$. Then

$$\bar{Z}_n \xrightarrow{p} \mu \quad \text{as } n \to \infty.$$

Proof: For any given constant $\varepsilon > 0$, we have by Chebyshev’s inequality

$$\Pr(|\bar{Z}_n - \mu| > \varepsilon) \le \frac{E(\bar{Z}_n - \mu)^2}{\varepsilon^2} = \frac{\sigma^2}{n\varepsilon^2} \to 0 \quad \text{as } n \to \infty.$$

Hence, $\bar{Z}_n \xrightarrow{p} \mu$ as $n \to \infty$.

Remark: This is the so-called weak law of large numbers (WLLN). In fact, we can weaken the moment condition.

Lemma [WLLN for I.I.D. Random Sample]: Suppose $\{Z_t\}$ is i.i.d. with $E(Z_t) = \mu$ and $E|Z_t| < \infty$. Define $\bar{Z}_n = n^{-1}\sum_{t=1}^{n} Z_t$. Then $\bar{Z}_n \xrightarrow{p} \mu$ as $n \to \infty$.

Question: Why do we need the moment condition $E|Z_t| < \infty$?

Answer: A counterexample: Suppose $\{Z_t\}$ is a sequence of i.i.d. Cauchy$(0, 1)$ random variables, whose moments do not exist. Then $\bar{Z}_n \sim$ Cauchy$(0, 1)$ for all $n \ge 1$, and so it does not converge in probability to any constant as $n \to \infty$.

We now introduce a related useful concept:

Definition [Boundedness in Probability]: A sequence of random variables/vectors/matrices $\{Z_n\}$ is bounded in probability if for any small constant $\delta > 0$, there exists a constant $C < \infty$ such that

$$P(\|Z_n\| > C) \le \delta \quad \text{as } n \to \infty.$$

We denote $Z_n = O_P(1)$.

Interpretation: As $n \to \infty$, the probability that $\|Z_n\|$ exceeds a very large constant is small. Or, equivalently, $\|Z_n\|$ is smaller than $C$ with a very high probability as $n \to \infty$.

Example: Suppose $Z_n \sim N(\mu, \sigma^2)$ for all $n \ge 1$. Then $Z_n = O_P(1)$.

Solution: For any $\delta > 0$, we can always find a sufficiently large constant $C = C(\delta) > 0$ such that

$$P(|Z_n| > C) = 1 - P(-C \le Z_n \le C) = 1 - P\left(\frac{-C - \mu}{\sigma} \le \frac{Z_n - \mu}{\sigma} \le \frac{C - \mu}{\sigma}\right) = 1 - \Phi\left(\frac{C - \mu}{\sigma}\right) + \Phi\left(-\frac{C + \mu}{\sigma}\right) \le \delta,$$

where $\Phi(z) = P(Z \le z)$ is the CDF of $N(0, 1)$. [We can choose $C$ such that $\Phi[(C - \mu)/\sigma] \ge 1 - \frac{1}{2}\delta$ and $\Phi[-(C + \mu)/\sigma] \le \frac{1}{2}\delta$.]

A Special Case: What happens to $C$ if $Z_n \sim N(0, 1)$? In this case,

$$P(|Z_n| > C) = 1 - \Phi(C) + \Phi(-C) = 2[1 - \Phi(C)].$$

Suppose we set $2[1 - \Phi(C)] = \delta$; that is, we set

$$C = \Phi^{-1}\left(1 - \frac{\delta}{2}\right),$$

where $\Phi^{-1}(\cdot)$ is the inverse function of $\Phi(\cdot)$. Then we have $P(|Z_n| > C) = \delta$.

Remark: $Z_n - Z \xrightarrow{q.m.} 0$ implies $Z_n - Z \xrightarrow{p} 0$ (why?), but the converse may not be true. We now give a counterexample.

Example: Suppose

$$Z_n = \begin{cases} 0 & \text{with prob. } 1 - \frac{1}{n}, \\ n & \text{with prob. } \frac{1}{n}. \end{cases}$$

Then $Z_n \xrightarrow{p} 0$ as $n \to \infty$ but $E(Z_n - 0)^2 = n \to \infty$. Please verify it.

Solution: (i) For any given $\varepsilon > 0$ and all $n > \varepsilon$, we have

$$P(|Z_n - 0| > \varepsilon) = P(Z_n = n) = \frac{1}{n} \to 0.$$

(ii)

$$E(Z_n - 0)^2 = \sum_{z_n \in \{0, n\}} (z_n - 0)^2 f(z_n) = (0 - 0)^2 (1 - n^{-1}) + (n - 0)^2 n^{-1} = n \to \infty.$$

Next, we provide another convergence concept called almost sure convergence.

Definition [Almost Sure Convergence]: $\{Z_n\}$ converges to $Z$ almost surely if

$$\Pr\left[\lim_{n\to\infty} \|Z_n - Z\| = 0\right] = 1.$$

We denote $Z_n - Z \xrightarrow{a.s.} 0$.

Interpretation: Recall the definition of a random variable: any random variable is a mapping from the sample space $\Omega$ to the real line, namely $Z: \Omega \to \mathbb{R}$. Let $\omega$ be a basic outcome in the sample space $\Omega$. Define a subset of $\Omega$:

$$A^c = \{\omega \in \Omega : \lim_{n\to\infty} Z_n(\omega) = Z(\omega)\}.$$

That is, $A^c$ is the set of basic outcomes on which the sequence $\{Z_n(\cdot)\}$ converges to $Z(\cdot)$ as $n \to \infty$. Then almost sure convergence can be stated as $P(A^c) = 1$. In other words, the convergent set $A^c$ occurs with probability one.

Example: Let $\omega$ be uniformly distributed on $[0, 1]$, and define $Z(\omega) = \omega$ and $Z_n(\omega) = \omega + \omega^n$ for all $\omega \in [0, 1]$. Is $Z_n - Z \xrightarrow{a.s.} 0$?

Solution: Consider

$$A^c = \{\omega \in \Omega : \lim_{n\to\infty} |Z_n(\omega) - Z(\omega)| = 0\}.$$

For any given $\omega \in [0, 1)$, we always have

$$\lim_{n\to\infty} |Z_n(\omega) - Z(\omega)| = \lim_{n\to\infty} |(\omega + \omega^n) - \omega| = \lim_{n\to\infty} \omega^n = 0.$$

In contrast, for $\omega = 1$, we have

$$\lim_{n\to\infty} |Z_n(1) - Z(1)| = 1^n = 1 \neq 0.$$

Thus, $A^c = [0, 1)$ and $P(A^c) = 1$. We also have $P(A) = P(\omega = 1) = 0$.

Remark: Almost sure convergence is closely related to pointwise convergence (almost everywhere). It is also called strong convergence.

Lemma [Strong Law of Large Numbers (SLLN) for I.I.D. Random Samples]: Suppose $\{Z_t\}$ is i.i.d. with $E(Z_t) = \mu$ and $E|Z_t| < \infty$. Then

$$\bar{Z}_n \xrightarrow{a.s.} \mu \quad \text{as } n \to \infty.$$

Remark: Almost sure convergence implies convergence in probability but not vice versa.

Lemma [Continuity]: (i) Suppose $a_n \xrightarrow{p} a$ and $b_n \xrightarrow{p} b$, and $g(\cdot)$ and $h(\cdot)$ are continuous functions. Then

$$g(a_n) + h(b_n) \xrightarrow{p} g(a) + h(b), \quad \text{and} \quad g(a_n)h(b_n) \xrightarrow{p} g(a)h(b).$$

(ii) Similar results hold for almost sure convergence.

The last convergence concept we will introduce is called convergence in distribution.

Definition [Convergence in Distribution]: Suppose $\{Z_n\}$ is a sequence of random variables with CDF $\{F_n(z)\}$, and $Z$ is a random variable with CDF $F(z)$. Then $Z_n$ converges to $Z$ in distribution if

$$F_n(z) \to F(z) \quad \text{as } n \to \infty$$

for all continuity points of $F(\cdot)$, namely all points $z$ where $F(z)$ is continuous. The distribution $F(z)$ is called the asymptotic distribution or limiting distribution of $Z_n$.

Remark: For convergence in mean squares, convergence in probability and almost sure convergence, we can view the convergence of $Z_n$ to $Z$ as occurring element by element (that is, each element of $Z_n$ converges to the corresponding element of $Z$).
For convergence in distribution of $Z_n$ to $Z$, however, element by element convergence does not imply convergence in distribution of $Z_n$ to $Z$, because element-wise convergence in distribution ignores the relationships among the components of $Z_n$. However, $Z_n \xrightarrow{d} Z$ implies that each element of $Z_n$ converges in distribution to the corresponding element of $Z$; namely, convergence in joint distribution implies convergence in marginal distribution.

It should be emphasized that convergence in distribution is defined in terms of the closeness of the CDF $F_n(z)$ to the CDF $F(z)$, not the closeness of the random variable $Z_n$ to the random variable $Z$. In contrast, convergence in mean squares, convergence in probability and almost sure convergence all measure the closeness between the random variable $Z_n$ and the random variable $Z$.

The concept of convergence in distribution is very useful in practice. Generally, the finite sample distribution of a statistic is either complicated or unknown. Suppose we know the asymptotic distribution of $Z_n$. Then we can use it as an approximation to the finite sample distribution and use it in statistical inference. The resulting inference is not exact, but the accuracy improves as the sample size $n$ becomes larger.

With the concept of convergence in distribution, we can now state the Central Limit Theorem (CLT) for i.i.d. random samples, a fundamental limit theorem in probability theory.

Lemma [Central Limit Theorem (CLT) for I.I.D. Random Samples]: Suppose $\{Z_t\}$ is i.i.d.$(\mu, \sigma^2)$, and $\bar{Z}_n = n^{-1}\sum_{t=1}^{n} Z_t$. Then as $n \to \infty$,

$$\frac{\bar{Z}_n - E(\bar{Z}_n)}{\sqrt{\mathrm{var}(\bar{Z}_n)}} = \frac{\bar{Z}_n - \mu}{\sqrt{\sigma^2/n}} = \frac{\sqrt{n}(\bar{Z}_n - \mu)}{\sigma} \xrightarrow{d} N(0, 1).$$

Proof: Put $Y_t = (Z_t - \mu)/\sigma$ and $\bar{Y}_n = n^{-1}\sum_{t=1}^{n} Y_t$. Then

$$\frac{\sqrt{n}(\bar{Z}_n - \mu)}{\sigma} = \sqrt{n}\,\bar{Y}_n.$$

The characteristic function of $\sqrt{n}\,\bar{Y}_n$ is

$$\begin{aligned}
\phi_n(u) &= E[\exp(iu\sqrt{n}\,\bar{Y}_n)], \quad i = \sqrt{-1}, \\
&= E\left[\exp\left(\frac{iu}{\sqrt{n}}\sum_{t=1}^{n} Y_t\right)\right] \\
&= \prod_{t=1}^{n} E\exp\left(\frac{iu}{\sqrt{n}} Y_t\right) \quad \text{by independence} \\
&= \left[\phi_Y\left(\frac{u}{\sqrt{n}}\right)\right]^n \quad \text{by identical distribution} \\
&= \left[\phi_Y(0) + \phi_Y'(0)\frac{u}{\sqrt{n}} + \frac{1}{2}\phi_Y''(0)\frac{u^2}{n} + \cdots\right]^n \\
&= \left[1 - \frac{u^2}{2n} + o(n^{-1})\right]^n \\
&\to \exp\left(-\frac{u^2}{2}\right) \quad \text{as } n \to \infty,
\end{aligned}$$

where $\phi_Y(0) = 1$, $\phi_Y'(0) = 0$, $\phi_Y''(0) = -1$, $o(n^{-1})$ denotes a remainder term that vanishes to zero faster than $n^{-1}$ as $n \to \infty$, and we have also made use of the fact that $\left(1 + \frac{a}{n}\right)^n \to e^a$.

More rigorously, applying L'Hôpital's rule twice, we can show

$$\begin{aligned}
\lim_{n\to\infty} \ln \phi_n(u) &= \lim_{n\to\infty} n \ln \phi_Y\left(\frac{u}{\sqrt{n}}\right) = \lim_{n\to\infty} \frac{\ln \phi_Y(u/\sqrt{n})}{n^{-1}} \\
&= \frac{u}{2}\lim_{n\to\infty} \frac{\phi_Y'(u/\sqrt{n})/\phi_Y(u/\sqrt{n})}{n^{-1/2}} \\
&= \frac{u^2}{2}\lim_{n\to\infty} \frac{\phi_Y''(u/\sqrt{n})\,\phi_Y(u/\sqrt{n}) - [\phi_Y'(u/\sqrt{n})]^2}{\phi_Y^2(u/\sqrt{n})} \\
&= -\frac{u^2}{2}.
\end{aligned}$$

It follows that

$$\lim_{n\to\infty} \phi_n(u) = e^{-\frac{1}{2}u^2}.$$

This is the characteristic function of $N(0, 1)$. By the uniqueness of the characteristic function, the asymptotic distribution of $\sqrt{n}(\bar{Z}_n - \mu)/\sigma$ is $N(0, 1)$. This completes the proof.

Lemma [Cramer-Wold Device]: A $p \times 1$ random vector $Z_n \xrightarrow{d} Z$ if and only if for any nonzero $\lambda \in \mathbb{R}^p$ such that $\lambda'\lambda = \sum_{j=1}^{p} \lambda_j^2 = 1$, we have

$$\lambda' Z_n \xrightarrow{d} \lambda' Z.$$

Remark: This lemma is useful for obtaining asymptotic multivariate distributions.

Slutsky Theorem: Let $Z_n \xrightarrow{d} Z$, $a_n \xrightarrow{p} a$ and $b_n \xrightarrow{p} b$, where $a$ and $b$ are constants. Then

$$a_n + b_n Z_n \xrightarrow{d} a + bZ \quad \text{as } n \to \infty.$$

Question: If $X_n \xrightarrow{d} X$ and $Y_n \xrightarrow{d} Y$, is $X_n + Y_n \xrightarrow{d} X + Y$?

Answer: No. We consider two examples:

Example 1: $X_n$ and $Y_n$ are independent $N(0, 1)$. Then $X_n + Y_n \xrightarrow{d} N(0, 2)$.

Example 2: $X_n = Y_n \sim N(0, 1)$ for all $n \ge 1$. Then $X_n + Y_n = 2X_n \sim N(0, 4)$.

In both examples the marginal limits are $N(0, 1)$, yet the sums have different limits, so marginal convergence in distribution alone does not determine the limit of the sum.

Lemma [Delta Method]: Suppose $\sqrt{n}(\bar{Z}_n - \mu)/\sigma \xrightarrow{d} N(0, 1)$, and $g(\cdot)$ is continuously differentiable with $g'(\mu) \neq 0$. Then as $n \to \infty$,

$$\sqrt{n}[g(\bar{Z}_n) - g(\mu)] \xrightarrow{d} N(0, [g'(\mu)]^2\sigma^2).$$

Remark: The Delta method is a Taylor series approximation in a statistical context. It linearizes a smooth (i.e., differentiable) nonlinear statistic so that the CLT can be applied to the linearized statistic. Therefore, it can be viewed as a generalization of the CLT from a sample average to a nonlinear statistic. This method is very useful when more than one parameter makes up the function to be estimated and more than one random variable is used in the estimator.
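The Delta method statement can be checked numerically. The following is a minimal simulation sketch, not part of the original notes: the exponential distribution with mean $\mu = 2$ (so $\sigma^2 = 4$), the function $g(z) = z^2$, the sample size, the number of replications and the seed are all illustrative assumptions. It compares the simulated variance of $\sqrt{n}[g(\bar{Z}_n) - g(\mu)]$ with the Delta-method value $[g'(\mu)]^2\sigma^2$.

```python
import math
import random
import statistics


def delta_method_check(n=400, reps=2000, mu=2.0, seed=0):
    """Compare the simulated variance of sqrt(n) * (g(Zbar) - g(mu)) with the
    Delta-method value [g'(mu)]^2 * sigma^2 for g(z) = z**2 (assumed example)."""
    rng = random.Random(seed)
    sigma2 = mu ** 2  # an exponential variable with mean mu has variance mu^2
    draws = []
    for _ in range(reps):
        # sample mean of n i.i.d. exponential draws with mean mu
        zbar = sum(rng.expovariate(1.0 / mu) for _ in range(n)) / n
        draws.append(math.sqrt(n) * (zbar ** 2 - mu ** 2))
    simulated = statistics.variance(draws)
    theoretical = (2.0 * mu) ** 2 * sigma2  # g'(mu) = 2 * mu
    return simulated, theoretical


simulated, theoretical = delta_method_check()
print(f"simulated variance   : {simulated:.2f}")
print(f"Delta-method variance: {theoretical:.2f}")
```

The two numbers should be close but not identical, since the simulated value carries both Monte Carlo noise and a finite-$n$ approximation error.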
Proof: First, because $\sqrt{n}(\bar{Z}_n - \mu)/\sigma \xrightarrow{d} N(0, 1)$ implies $\sqrt{n}(\bar{Z}_n - \mu)/\sigma = O_P(1)$, we have $\bar{Z}_n - \mu = O_P(n^{-1/2}) = o_P(1)$. Next, by a first order Taylor series expansion, we have

$$Y_n = g(\bar{Z}_n) = g(\mu) + g'(\bar{\mu}_n)(\bar{Z}_n - \mu),$$

where $\bar{\mu}_n = \lambda\mu + (1 - \lambda)\bar{Z}_n$ for some $\lambda \in [0, 1]$. It follows by the Slutsky theorem that

$$\sqrt{n}\,\frac{g(\bar{Z}_n) - g(\mu)}{\sigma} = g'(\bar{\mu}_n)\,\sqrt{n}\,\frac{\bar{Z}_n - \mu}{\sigma} \xrightarrow{d} N(0, [g'(\mu)]^2),$$

where $g'(\bar{\mu}_n) \xrightarrow{p} g'(\mu)$ given $\bar{\mu}_n \xrightarrow{p} \mu$. By the Slutsky theorem again, we have

$$\sqrt{n}[Y_n - g(\mu)] \xrightarrow{d} N(0, \sigma^2[g'(\mu)]^2).$$

Example: Suppose $\sqrt{n}(\bar{Z}_n - \mu)/\sigma \xrightarrow{d} N(0, 1)$, $\mu \neq 0$ and $0 < \sigma^2 < \infty$. Find the limiting distribution of $\sqrt{n}(\bar{Z}_n^{-1} - \mu^{-1})$.

Solution: Put $g(\bar{Z}_n) = \bar{Z}_n^{-1}$. Because $\mu \neq 0$, $g(\cdot)$ is continuous at $\mu$. By a first order Taylor series expansion, we have

$$g(\bar{Z}_n) = g(\mu) + g'(\bar{\mu}_n)(\bar{Z}_n - \mu),$$

or

$$\bar{Z}_n^{-1} - \mu^{-1} = (-\bar{\mu}_n^{-2})(\bar{Z}_n - \mu),$$

where $\bar{\mu}_n = \lambda\mu + (1 - \lambda)\bar{Z}_n \xrightarrow{p} \mu$ given $\bar{Z}_n \xrightarrow{p} \mu$ and $\lambda \in [0, 1]$. It follows that

$$\sqrt{n}(\bar{Z}_n^{-1} - \mu^{-1}) = -\frac{\sqrt{n}(\bar{Z}_n - \mu)}{\bar{\mu}_n^2} \xrightarrow{d} N(0, \sigma^2/\mu^4).$$

We now use these asymptotic tools to investigate the large sample behavior of the OLS estimator and related statistics in this and subsequent chapters.

Large Sample Theory for Linear Regression Models with I.I.D. Observations

4.1 Assumptions

We first state the assumptions under which we will establish the asymptotic theory for linear regression models.

Assumption 4.1 [I.I.D.]: $\{Y_t, X_t'\}'_{t=1,\ldots,n}$ is an i.i.d. random sample.

Assumption 4.2 [Linearity]:

$$Y_t = X_t'\beta^0 + \varepsilon_t, \quad t = 1, \ldots, n,$$

for some unknown $K \times 1$ parameter $\beta^0$ and some unobservable random variable $\varepsilon_t$.

Assumption 4.3 [Correct Model Specification]: $E(\varepsilon_t|X_t) = 0$ a.s. with $E(\varepsilon_t^2) = \sigma^2 < \infty$.

Assumption 4.4 [Nonsingularity]: The $K \times K$ matrix $Q = E(X_t X_t')$ is nonsingular and finite.

Assumption 4.5: The $K \times K$ matrix $V \equiv \mathrm{var}(X_t\varepsilon_t) = E(X_t X_t'\varepsilon_t^2)$ is finite and positive definite (p.d.).

Remarks: (i) The i.i.d. observations assumption implies that the asymptotic theory developed in this chapter will be applicable to cross-sectional data, but not time series data. The observations of the latter are usually correlated and will be considered in Chapter 5. Put $Z_t = (Y_t, X_t')'$. Then I.I.D.
means that $Z_t$ and $Z_s$ are independent when $t \neq s$, and the $Z_t$ have the same distribution for all $t$. The identical distribution means that the observations are generated from the same data generating process, and independence means that different observations contain new information about the data generating process.

(ii) Assumptions 4.1 and 4.3 imply that the strict exogeneity condition (Assumption 3.2) holds, because we have

$$E(\varepsilon_t|X) = E(\varepsilon_t|X_1, X_2, \ldots, X_t, \ldots, X_n) = E(\varepsilon_t|X_t) = 0 \quad \text{a.s.}$$

(iii) The most important feature of Assumptions 4.1–4.5 together is that we do not assume normality for the conditional distribution of $\varepsilon_t|X_t$, and we allow for conditional heteroskedasticity (i.e., $\mathrm{var}(\varepsilon_t|X_t) \neq \sigma^2$ a.s.). Relaxation of the normality assumption is more realistic for economic and financial data. For example, it has been well documented (Mandelbrot 1963, Fama 1965, Kon 1984) that returns on financial assets are not normally distributed. However, the I.I.D. assumption implies that $\mathrm{cov}(\varepsilon_t, \varepsilon_s) = 0$ for all $t \neq s$. That is, there exists no serial correlation in the regression disturbance.

(iv) Among other things, Assumption 4.4 implies $E(X_{jt}^2) < \infty$ for $0 \le j \le k$. By the SLLN for i.i.d. random samples, we have

$$\frac{X'X}{n} = \frac{1}{n}\sum_{t=1}^{n} X_t X_t' \xrightarrow{a.s.} E(X_t X_t') = Q$$

as $n \to \infty$. Hence, when $n$ is large, the matrix $X'X$ behaves approximately like $nQ$, whose minimum eigenvalue $\lambda_{\min}(nQ) = n\lambda_{\min}(Q) \to \infty$ at the rate of $n$. Assumption 4.4 implies Assumption 3.3.

(v) When $X_{0t} = 1$, Assumption 4.5 implies $E(\varepsilon_t^2) < \infty$, because $E(\varepsilon_t^2)$ is then the first diagonal element of $V$.

(vi) If $E(\varepsilon_t^2|X_t) = \sigma^2 < \infty$ a.s., i.e., there exists conditional homoskedasticity, then Assumption 4.5 can be ensured by Assumption 4.4.
More generally, when there exists conditional heteroskedasticity, the moment condition in Assumption 4.5 can be ensured by the moment conditions that $E(\varepsilon_t^4) < \infty$ and $E(X_{jt}^4) < \infty$ for $0 \le j \le k$, because by using the Cauchy-Schwarz inequality twice, we have

$$|E(\varepsilon_t^2 X_{jt} X_{lt})| \le [E(\varepsilon_t^4)]^{1/2}[E(X_{jt}^2 X_{lt}^2)]^{1/2} \le [E(\varepsilon_t^4)]^{1/2}[E(X_{jt}^4)E(X_{lt}^4)]^{1/4},$$

where $0 \le j, l \le k$ and $1 \le t \le n$.

We now address the following questions:

Consistency of OLS?
Asymptotic normality?
Asymptotic efficiency?
Confidence interval estimation?
Hypothesis testing?

In particular, we are interested in knowing whether the statistical properties of the OLS estimator $\hat{\beta}$ and related test statistics derived under the classical linear regression setup are still valid under the current setup, at least when $n$ is large.

4.2 Consistency of OLS

Suppose we have a random sample $\{Y_t, X_t'\}'_{t=1,\ldots,n}$. Recall the OLS estimator:

$$\hat{\beta} = (X'X)^{-1}X'Y = \left(\frac{X'X}{n}\right)^{-1}\frac{X'Y}{n} = \hat{Q}^{-1}\, n^{-1}\sum_{t=1}^{n} X_t Y_t,$$

where

$$\hat{Q} = n^{-1}\sum_{t=1}^{n} X_t X_t'.$$

Substituting $Y_t = X_t'\beta^0 + \varepsilon_t$, we obtain

$$\hat{\beta} = \beta^0 + \hat{Q}^{-1}\, n^{-1}\sum_{t=1}^{n} X_t\varepsilon_t.$$

We will consider the consistency of $\hat{\beta}$ directly.

Theorem [Consistency of OLS]: Under Assumptions 4.1–4.4, as $n \to \infty$,

$$\hat{\beta} \xrightarrow{p} \beta^0 \quad \text{or} \quad \hat{\beta} - \beta^0 = o_P(1).$$

Proof: Let $C > 0$ be some bounded constant. Also, recall $X_t = (X_{0t}, X_{1t}, \ldots, X_{kt})'$. First, the moment condition holds: for all $0 \le j \le k$,

$$E|X_{jt}\varepsilon_t| \le (E X_{jt}^2)^{1/2}(E\varepsilon_t^2)^{1/2} \le C^{1/2}C^{1/2} = C$$

by the Cauchy-Schwarz inequality, where $E(X_{jt}^2) \le C$ by Assumption 4.4, and $E(\varepsilon_t^2) \le C$ by Assumption 4.3. It follows by the WLLN (with $Z_t = X_t\varepsilon_t$) that

$$n^{-1}\sum_{t=1}^{n} X_t\varepsilon_t \xrightarrow{p} E(X_t\varepsilon_t) = 0,$$

where

$$E(X_t\varepsilon_t) = E[E(X_t\varepsilon_t|X_t)] = E[X_t E(\varepsilon_t|X_t)] = E(X_t \cdot 0) = 0$$

by the law of iterated expectations. Applying the WLLN again (with $Z_t = X_t X_t'$) and noting that

$$E|X_{jt}X_{lt}| \le [E(X_{jt}^2)E(X_{lt}^2)]^{1/2} \le C$$

by the Cauchy-Schwarz inequality for all pairs $(j, l)$, where $0 \le j, l \le k$, we have

$$\hat{Q} \xrightarrow{p} E(X_t X_t') = Q.$$

Hence, we have $\hat{Q}^{-1} \xrightarrow{p} Q^{-1}$ by continuity. It follows that

$$\hat{\beta} - \beta^0 = (X'X)^{-1}X'\varepsilon = \hat{Q}^{-1}\, n^{-1}\sum_{t=1}^{n} X_t\varepsilon_t \xrightarrow{p} Q^{-1} \cdot 0 = 0.$$

This completes the proof.
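The consistency result can be illustrated with a small simulation. This is a hedged sketch rather than anything from the original notes: the data generating process (a standard normal regressor, a skewed error with $E(\varepsilon_t|X_t) = 0$ and $\mathrm{var}(\varepsilon_t|X_t) = X_t^2$), the true coefficients $(1, 2)$, the sample sizes and the seed are assumptions chosen only to show that neither normality nor homoskedasticity is needed.

```python
import random


def ols_simple(x, y):
    """OLS intercept and slope for y_t = b0 + b1 * x_t + e_t (K = 2)."""
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1


def simulate(n, rng, beta0=1.0, beta1=2.0):
    """Draw an i.i.d. sample with E(e|x) = 0, var(e|x) = x**2 and
    non-normal errors, then return the OLS estimates."""
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # centred exponential shock: mean 0, variance 1, skewed (not normal)
    e = [xi * (rng.expovariate(1.0) - 1.0) for xi in x]
    y = [beta0 + beta1 * xi + ei for xi, ei in zip(x, e)]
    return ols_simple(x, y)


rng = random.Random(12345)
errors = {}
for n in (100, 1_000, 10_000, 100_000):
    b0, b1 = simulate(n, rng)
    errors[n] = abs(b1 - 2.0)  # distance of the slope estimate from its true value
    print(f"n = {n:>6}: intercept = {b0:.4f}, slope = {b1:.4f}")
```

The estimation error need not shrink monotonically from one sample size to the next in a single run, but it should be small for the largest $n$.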
4.3 Asymptotic Normality of OLS

Next, we derive the asymptotic distribution of $\hat{\beta}$. We first provide a multivariate CLT for I.I.D. random samples.

Lemma [Multivariate Central Limit Theorem (CLT) for I.I.D. Random Samples]: Suppose $\{Z_t\}$ is a sequence of i.i.d. random vectors with $E(Z_t) = 0$, and $\mathrm{var}(Z_t) = E(Z_t Z_t') = V$ is finite and positive definite. Define

$$\bar{Z}_n = n^{-1}\sum_{t=1}^{n} Z_t.$$

Then as $n \to \infty$,

$$\sqrt{n}\,\bar{Z}_n \xrightarrow{d} N(0, V)$$

or

$$V^{-1/2}\sqrt{n}\,\bar{Z}_n \xrightarrow{d} N(0, I).$$

Question: What is the variance-covariance matrix of $\sqrt{n}\,\bar{Z}_n$?

Answer: Noting that $E(Z_t) = 0$, we have

$$\begin{aligned}
\mathrm{var}(\sqrt{n}\,\bar{Z}_n) &= \mathrm{var}\left(n^{-1/2}\sum_{t=1}^{n} Z_t\right) \\
&= E\left[\left(n^{-1/2}\sum_{t=1}^{n} Z_t\right)\left(n^{-1/2}\sum_{s=1}^{n} Z_s\right)'\right] \\
&= n^{-1}\sum_{t=1}^{n}\sum_{s=1}^{n} E(Z_t Z_s') \\
&= n^{-1}\sum_{t=1}^{n} E(Z_t Z_t') \quad \text{(because } Z_t \text{ and } Z_s \text{ are independent for } t \neq s) \\
&= E(Z_t Z_t') = V.
\end{aligned}$$

In other words, the variance of $\sqrt{n}\,\bar{Z}_n$ is identical to the variance of each individual random vector $Z_t$.

Theorem [Asymptotic Normality of OLS]: Under Assumptions 4.1–4.5, we have

$$\sqrt{n}(\hat{\beta} - \beta^0) \xrightarrow{d} N(0, Q^{-1}VQ^{-1})$$

as $n \to \infty$, where $V \equiv \mathrm{var}(X_t\varepsilon_t) = E(X_t X_t'\varepsilon_t^2)$.

Proof: Recall that

$$\sqrt{n}(\hat{\beta} - \beta^0) = \hat{Q}^{-1}\, n^{-1/2}\sum_{t=1}^{n} X_t\varepsilon_t.$$

First, we consider the second term

$$n^{-1/2}\sum_{t=1}^{n} X_t\varepsilon_t.$$

Note that $E(X_t\varepsilon_t) = 0$ by Assumption 4.3, and $\mathrm{var}(X_t\varepsilon_t) = E(X_t X_t'\varepsilon_t^2) = V$, which is finite and p.d. by Assumption 4.5. Then, by the CLT for the i.i.d. random sequence $\{Z_t = X_t\varepsilon_t\}$, we have

$$n^{-1/2}\sum_{t=1}^{n} X_t\varepsilon_t = \sqrt{n}\left(n^{-1}\sum_{t=1}^{n} X_t\varepsilon_t\right) = \sqrt{n}\,\bar{Z}_n \xrightarrow{d} Z \sim N(0, V).$$

On the other hand, as shown earlier, we have $\hat{Q} \xrightarrow{p} Q$, and so

$$\hat{Q}^{-1} \xrightarrow{p} Q^{-1}$$

given that $Q$ is nonsingular so that the inverse function is continuous and well defined. It follows by the Slutsky Theorem that

$$\sqrt{n}(\hat{\beta} - \beta^0) = \hat{Q}^{-1}\, n^{-1/2}\sum_{t=1}^{n} X_t\varepsilon_t \xrightarrow{d} Q^{-1}Z \sim N(0, Q^{-1}VQ^{-1}).$$

This completes the proof.

Remarks: The theorem implies that:

(i) The asymptotic mean of $\sqrt{n}(\hat{\beta} - \beta^0)$ is 0. That is, the mean of $\sqrt{n}(\hat{\beta} - \beta^0)$ is approximately 0 when $n$ is large.
(ii) The asymptotic variance of $\sqrt{n}(\hat{\beta} - \beta^0)$ is $Q^{-1}VQ^{-1}$. That is, the variance of $\sqrt{n}(\hat{\beta} - \beta^0)$ is approximately $Q^{-1}VQ^{-1}$ when $n$ is large. Because the asymptotic variance is a different concept from the variance of $\sqrt{n}(\hat{\beta} - \beta^0)$, we denote the asymptotic variance of $\sqrt{n}(\hat{\beta} - \beta^0)$ as follows: $\mathrm{avar}(\sqrt{n}\hat{\beta}) = Q^{-1}VQ^{-1}$.

We now consider a special case under which we can simplify the expression of $\mathrm{avar}(\sqrt{n}\hat{\beta})$.

Special Case: Conditional Homoskedasticity

Assumption 4.6: $E(\varepsilon_t^2|X_t) = \sigma^2$ a.s.

Theorem: Suppose Assumptions 4.1–4.6 hold. Then as $n \to \infty$,

$$\sqrt{n}(\hat{\beta} - \beta^0) \xrightarrow{d} N(0, \sigma^2 Q^{-1}).$$

Remark: Under conditional homoskedasticity, the asymptotic variance of $\sqrt{n}(\hat{\beta} - \beta^0)$ is

$$\mathrm{avar}(\sqrt{n}\hat{\beta}) = \sigma^2 Q^{-1}.$$

Proof: Under Assumption 4.6, we can simplify

$$V = E(X_t X_t'\varepsilon_t^2) = E[E(X_t X_t'\varepsilon_t^2|X_t)] = E[X_t X_t' E(\varepsilon_t^2|X_t)] = \sigma^2 E(X_t X_t') = \sigma^2 Q,$$

where the second equality follows by the law of iterated expectations. The results follow immediately because

$$Q^{-1}VQ^{-1} = Q^{-1}\sigma^2 Q Q^{-1} = \sigma^2 Q^{-1}.$$

Question: Is the OLS estimator $\hat{\beta}$ the BLUE estimator asymptotically (i.e., when $n \to \infty$)?

4.4 Asymptotic Variance Estimator

To construct confidence interval estimators or hypothesis tests, we need to estimate the asymptotic variance of $\sqrt{n}(\hat{\beta} - \beta^0)$, $\mathrm{avar}(\sqrt{n}\hat{\beta})$. Because the expression of $\mathrm{avar}(\sqrt{n}\hat{\beta})$ differs under conditional homoskedasticity and conditional heteroskedasticity, we consider the estimator for $\mathrm{avar}(\sqrt{n}\hat{\beta})$ under these two cases separately.

Case I: Conditional Homoskedasticity

In this case, the asymptotic variance of $\sqrt{n}(\hat{\beta} - \beta^0)$ is

$$\mathrm{avar}(\sqrt{n}\hat{\beta}) = Q^{-1}VQ^{-1} = \sigma^2 Q^{-1}.$$

Question: How to estimate $Q$?

Lemma: Suppose Assumptions 4.1, 4.2 and 4.4 hold. Then

$$\hat{Q} = n^{-1}\sum_{t=1}^{n} X_t X_t' \xrightarrow{p} Q.$$

Question: How to estimate $\sigma^2$?

Recalling that $\sigma^2 = E(\varepsilon_t^2)$, we use the sample residual variance estimator

$$s^2 = e'e/(n - K) = \frac{1}{n - K}\sum_{t=1}^{n} e_t^2 = \frac{1}{n - K}\sum_{t=1}^{n} (Y_t - X_t'\hat{\beta})^2.$$

Is $s^2$ consistent for $\sigma^2$?

Theorem [Consistent Estimator for $\sigma^2$]: Under Assumptions 4.1–4.4,

$$s^2 \xrightarrow{p} \sigma^2.$$

Proof: Given that $s^2 = e'e/(n - K)$ and

$$e_t = Y_t - X_t'\hat{\beta} = \varepsilon_t + X_t'\beta^0 - X_t'\hat{\beta} = \varepsilon_t - X_t'(\hat{\beta} - \beta^0),$$

we have

$$\begin{aligned}
s^2 &= \frac{1}{n - K}\sum_{t=1}^{n} [\varepsilon_t - X_t'(\hat{\beta} - \beta^0)]^2 \\
&= \frac{n}{n - K}\left(n^{-1}\sum_{t=1}^{n} \varepsilon_t^2\right) + (\hat{\beta} - \beta^0)'\left[(n - K)^{-1}\sum_{t=1}^{n} X_t X_t'\right](\hat{\beta} - \beta^0) - 2(\hat{\beta} - \beta^0)'(n - K)^{-1}\sum_{t=1}^{n} X_t\varepsilon_t \\
&\xrightarrow{p} 1 \cdot \sigma^2 + 0' \cdot Q \cdot 0 - 2 \cdot 0' \cdot 0 = \sigma^2,
\end{aligned}$$

given that $K$ is a fixed number (i.e., $K$ does not grow with the sample size $n$), where we have made use of the WLLN in three places.

Remark: We can then consistently estimate $\sigma^2 Q^{-1}$ by $s^2\hat{Q}^{-1}$.

Theorem [Asymptotic Variance Estimator of $\sqrt{n}(\hat{\beta} - \beta^0)$]: Under Assumptions 4.1–4.4, we have

$$s^2\hat{Q}^{-1} \xrightarrow{p} \sigma^2 Q^{-1}.$$

Remark: The asymptotic variance estimator of $\sqrt{n}(\hat{\beta} - \beta^0)$ is $s^2\hat{Q}^{-1} = s^2(X'X/n)^{-1}$. This is equivalent to saying that the variance estimator of $\hat{\beta} - \beta^0$ is equal to $s^2\hat{Q}^{-1}/n = s^2(X'X)^{-1}$ when $n \to \infty$. Thus, when $n \to \infty$ and there exists conditional homoskedasticity, the variance estimator of $\hat{\beta} - \beta^0$ coincides with the form of the variance estimator for $\hat{\beta} - \beta^0$ in the classical regression case. Because of this, as will be seen below, the conventional t-test and F-test are still valid for large samples under conditional homoskedasticity.

Case II: Conditional Heteroskedasticity

In this case,

$$\mathrm{avar}(\sqrt{n}\hat{\beta}) = Q^{-1}VQ^{-1},$$

which cannot be simplified.

Question: We can still use $\hat{Q}$ to estimate $Q$. How to estimate $V = E(X_t X_t'\varepsilon_t^2)$?

Answer: Use its sample analog

$$\hat{V} = n^{-1}\sum_{t=1}^{n} X_t X_t' e_t^2 = \frac{X'D(e)D(e)'X}{n},$$

where $D(e) = \mathrm{diag}(e_1, e_2, \ldots, e_n)$ is an $n \times n$ diagonal matrix with diagonal elements equal to $e_t$ for $t = 1, \ldots, n$. To ensure consistency of $\hat{V}$ for $V$, we impose the following additional moment conditions.

Assumption 4.7: (i) $E(X_{jt}^4) < \infty$ for all $0 \le j \le k$; and (ii) $E(\varepsilon_t^4) < \infty$.

Lemma: Suppose Assumptions 4.1–4.5 and 4.7 hold. Then

$$\hat{V} \xrightarrow{p} V.$$

Proof: Because $e_t = \varepsilon_t - (\hat{\beta} - \beta^0)'X_t$, we have

$$\begin{aligned}
\hat{V} &= n^{-1}\sum_{t=1}^{n} X_t X_t'\varepsilon_t^2 + n^{-1}\sum_{t=1}^{n} X_t X_t'[(\hat{\beta} - \beta^0)'X_t X_t'(\hat{\beta} - \beta^0)] - 2n^{-1}\sum_{t=1}^{n} X_t X_t'[\varepsilon_t X_t'(\hat{\beta} - \beta^0)] \\
&\xrightarrow{p} V + 0 - 2 \cdot 0,
\end{aligned}$$

where for the first term, we have

$$n^{-1}\sum_{t=1}^{n} X_t X_t'\varepsilon_t^2 \xrightarrow{p} E(X_t X_t'\varepsilon_t^2) = V$$

by the WLLN and Assumption 4.7, which implies

$$E|X_{it}X_{jt}\varepsilon_t^2| \le [E(X_{it}^2 X_{jt}^2)E(\varepsilon_t^4)]^{1/2}.$$

For the second term, considering its $(i, j)$-th element, we have

$$n^{-1}\sum_{t=1}^{n} X_{it}X_{jt}(\hat{\beta} - \beta^0)'X_t X_t'(\hat{\beta} - \beta^0) = \sum_{l=0}^{k}\sum_{m=0}^{k} (\hat{\beta}_l - \beta_l^0)(\hat{\beta}_m - \beta_m^0)\left(n^{-1}\sum_{t=1}^{n} X_{it}X_{jt}X_{lt}X_{mt}\right) \xrightarrow{p} 0,$$

given $\hat{\beta} - \beta^0 \xrightarrow{p} 0$, and

$$n^{-1}\sum_{t=1}^{n} X_{it}X_{jt}X_{lt}X_{mt} \xrightarrow{p} E(X_{it}X_{jt}X_{lt}X_{mt}) = O(1)$$

by the WLLN and Assumption 4.7. Similarly, for the last term, we have

$$n^{-1}\sum_{t=1}^{n} X_{it}X_{jt}\varepsilon_t X_t'(\hat{\beta} - \beta^0) = \sum_{l=0}^{k} (\hat{\beta}_l - \beta_l^0)\left(n^{-1}\sum_{t=1}^{n} X_{it}X_{jt}X_{lt}\varepsilon_t\right) \xrightarrow{p} 0,$$

given $\hat{\beta} - \beta^0 \xrightarrow{p} 0$, and

$$n^{-1}\sum_{t=1}^{n} X_{it}X_{jt}X_{lt}\varepsilon_t \xrightarrow{p} E(X_{it}X_{jt}X_{lt}\varepsilon_t) = 0$$

by the WLLN and Assumption 4.7. This completes the proof.

We now construct a consistent estimator for $\mathrm{avar}(\sqrt{n}\hat{\beta})$ under conditional heteroskedasticity.

Theorem [Asymptotic variance estimator for $\sqrt{n}(\hat{\beta} - \beta^0)$]: Under Assumptions 4.1–4.5 and 4.7, we have

$$\hat{Q}^{-1}\hat{V}\hat{Q}^{-1} \xrightarrow{p} Q^{-1}VQ^{-1}.$$

Remark: This is the so-called White’s (1980) heteroskedasticity-consistent variance-covariance matrix of the estimator $\sqrt{n}(\hat{\beta} - \beta^0)$. It follows that when there exists conditional heteroskedasticity, the estimator for the variance of $\hat{\beta} - \beta^0$ is

$$(X'X/n)^{-1}\hat{V}(X'X/n)^{-1}/n = (X'X)^{-1}X'D(e)D(e)'X(X'X)^{-1},$$

which differs from the estimator $s^2(X'X)^{-1}$ in the case of conditional homoskedasticity.

Question: What happens if we use $s^2\hat{Q}^{-1}$ as an estimator for $\mathrm{avar}[\sqrt{n}(\hat{\beta} - \beta^0)]$ while there exists conditional heteroskedasticity?

Answer: Observe that

$$V \equiv E(X_t X_t'\varepsilon_t^2) = \sigma^2 Q + \mathrm{cov}(X_t X_t', \varepsilon_t^2) = \sigma^2 Q + \mathrm{cov}[X_t X_t', \sigma^2(X_t)],$$

where $\sigma^2 = E(\varepsilon_t^2)$, $\sigma^2(X_t) = E(\varepsilon_t^2|X_t)$, and the last equality follows by the law of iterated expectations. Thus, if $\sigma^2(X_t)$ is positively correlated with $X_t X_t'$, $\sigma^2 Q$ will underestimate the true variance-covariance $E(X_t X_t'\varepsilon_t^2)$ in the sense that $V - \sigma^2 Q$ is a positive definite matrix. Consequently, the standard t-test and F-test will overreject the correct null hypothesis at any given significance level. There will exist substantial Type I errors.
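To make the comparison between $s^2\hat{Q}^{-1}$ and $\hat{Q}^{-1}\hat{V}\hat{Q}^{-1}$ concrete, here is a minimal sketch for a regression with an intercept and one regressor, so that $X_t = (1, x_t)'$ and $K = 2$. The data generating process is an assumption made for illustration only: $x_t \sim N(0, 1)$ and $\varepsilon_t = x_t u_t$ with $u_t \sim N(0, 1)$ independent of $x_t$, so $\sigma^2(X_t) = x_t^2$. Under this assumed design $Q = I$, $\sigma^2 = 1$ and $V = \mathrm{diag}(1, 3)$, so the slope element of $\sigma^2 Q^{-1}$ is 1 while that of $Q^{-1}VQ^{-1}$ is 3. The helper names are hypothetical.

```python
import random


def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul2(p, q):
    """Product of two 2x2 matrices."""
    return [[sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def avar_estimates(x, y):
    """Return (s^2 * Qhat^{-1}, Qhat^{-1} Vhat Qhat^{-1}) for X_t = (1, x_t)'."""
    n = len(x)
    rows = [(1.0, xi) for xi in x]
    q_hat = [[sum(r[i] * r[j] for r in rows) / n for j in range(2)]
             for i in range(2)]
    q_inv = inv2(q_hat)
    xy = [sum(r[i] * yi for r, yi in zip(rows, y)) / n for i in range(2)]
    beta = [q_inv[i][0] * xy[0] + q_inv[i][1] * xy[1] for i in range(2)]
    e = [yi - beta[0] - beta[1] * xi for xi, yi in zip(x, y)]  # OLS residuals
    s2 = sum(ei ** 2 for ei in e) / (n - 2)
    # Vhat = n^{-1} sum_t X_t X_t' e_t^2
    v_hat = [[sum(r[i] * r[j] * ei ** 2 for r, ei in zip(rows, e)) / n
              for j in range(2)] for i in range(2)]
    conventional = [[s2 * q_inv[i][j] for j in range(2)] for i in range(2)]
    robust = matmul2(matmul2(q_inv, v_hat), q_inv)
    return conventional, robust


rng = random.Random(42)
n = 50_000
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
y = [1.0 + 2.0 * xi + xi * rng.gauss(0.0, 1.0) for xi in x]  # var(e|x) = x^2
conventional, robust = avar_estimates(x, y)
print(f"slope avar, s^2 Qhat^-1          : {conventional[1][1]:.3f}")
print(f"slope avar, Qhat^-1 Vhat Qhat^-1 : {robust[1][1]:.3f}")
```

In this assumed design the conventional estimator understates the slope variance by roughly a factor of three, which is the mechanism behind the overrejection described above.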
Question: What happens if one uses the asymptotic variance estimator $\hat{Q}^{-1}\hat{V}\hat{Q}^{-1}$ but there exists conditional homoskedasticity?

Answer: The asymptotic variance estimator is asymptotically valid, but it will not perform as well as the estimator $s^2\hat{Q}^{-1}$ in finite samples, because the latter exploits the information of conditional homoskedasticity.

4.5 Hypothesis Testing

Question: How to construct a test statistic for the null hypothesis

$$H_0: R\beta^0 = r,$$

where $R$ is a $J \times K$ constant matrix, and $r$ is a $J \times 1$ constant vector?

The test procedures will differ depending on whether there exists conditional heteroskedasticity. We first consider the case of conditional homoskedasticity.

Case I: Conditional Homoskedasticity

Theorem [Asymptotic $\chi^2$ Test]: Suppose Assumptions 4.1–4.4 and 4.6 hold. Then under $H_0$,

$$J \cdot F = (R\hat{\beta} - r)'\left[s^2 R(X'X)^{-1}R'\right]^{-1}(R\hat{\beta} - r) \xrightarrow{d} \chi^2_J$$

as $n \to \infty$.

Proof: Consider

$$R\hat{\beta} - r = R(\hat{\beta} - \beta^0) + R\beta^0 - r.$$

Note that under conditional homoskedasticity, we have

$$\sqrt{n}(\hat{\beta} - \beta^0) \xrightarrow{d} N(0, \sigma^2 Q^{-1}).$$

Thus, under $H_0: R\beta^0 = r$, we have

$$\sqrt{n}(R\hat{\beta} - r) = R\sqrt{n}(\hat{\beta} - \beta^0) \xrightarrow{d} N(0, \sigma^2 RQ^{-1}R').$$

It follows that the quadratic form

$$\sqrt{n}(R\hat{\beta} - r)'\left[\sigma^2 RQ^{-1}R'\right]^{-1}\sqrt{n}(R\hat{\beta} - r) \xrightarrow{d} \chi^2_J.$$

Also, because $s^2\hat{Q}^{-1} \xrightarrow{p} \sigma^2 Q^{-1}$, we have by the Slutsky theorem

$$\sqrt{n}(R\hat{\beta} - r)'\left[s^2 R\hat{Q}^{-1}R'\right]^{-1}\sqrt{n}(R\hat{\beta} - r) \xrightarrow{d} \chi^2_J,$$

or equivalently

$$J \cdot \frac{(R\hat{\beta} - r)'[R(X'X)^{-1}R']^{-1}(R\hat{\beta} - r)/J}{s^2} = J \cdot F \xrightarrow{d} \chi^2_J,$$

namely $J \cdot F \xrightarrow{d} \chi^2_J$.

Remarks: When $\{\varepsilon_t\}$ is not i.i.d. $N(0, \sigma^2)$ conditional on $X_t$, we cannot use the F-distribution, but we can still compute the F-statistic, and the appropriate test statistic is $J$ times the F-statistic, which is asymptotically $\chi^2_J$. That is,

$$J \cdot F = \frac{\tilde{e}'\tilde{e} - e'e}{e'e/(n - K)} \xrightarrow{d} \chi^2_J.$$

An Alternative Interpretation: Because $J \cdot F_{J,n-K}$ approaches $\chi^2_J$ as $n \to \infty$, we may interpret the above theorem in the following way: the classical results for the F-test are still approximately valid under conditional homoskedasticity when $n$ is large.
When the null hypothesis is that all slope coefficients except the intercept are jointly zero, we can use a test statistic based on $R^2$.

A Special Case: Testing for Joint Significance of All Economic Variables

Theorem [$(n - K)R^2$ Test]: Suppose Assumptions 4.1–4.6 hold, and we are interested in testing the null hypothesis that

$$H_0: \beta_1^0 = \beta_2^0 = \cdots = \beta_k^0 = 0,$$

where the $\beta_j^0$ are the regression coefficients from

$$Y_t = \beta_0^0 + \beta_1^0 X_{1t} + \cdots + \beta_k^0 X_{kt} + \varepsilon_t.$$

Let $R^2$ be the coefficient of determination from the unrestricted regression model

$$Y_t = X_t'\beta^0 + \varepsilon_t.$$

Then under $H_0$,

$$(n - K)R^2 \xrightarrow{d} \chi^2_k,$$

where $K = k + 1$.

Proof: First, recall that in this special case we have

$$F = \frac{R^2/k}{(1 - R^2)/(n - k - 1)} = \frac{R^2/k}{(1 - R^2)/(n - K)}.$$

By the above theorem and noting $J = k$, we have

$$k \cdot F = \frac{(n - K)R^2}{1 - R^2} \xrightarrow{d} \chi^2_k$$

under $H_0$. This implies that $k \cdot F$ is bounded in probability; that is,

$$\frac{(n - K)R^2}{1 - R^2} = O_P(1).$$

Consequently, given that $k$ is a fixed integer,

$$\frac{R^2}{1 - R^2} = O_P(n^{-1}) = o_P(1),$$

or $R^2 \xrightarrow{p} 0$. Therefore, $1 - R^2 \xrightarrow{p} 1$. By the Slutsky theorem, we have

$$(n - K)R^2 = \frac{(n - K)R^2}{1 - R^2}(1 - R^2) = (k \cdot F)(1 - R^2) \xrightarrow{d} \chi^2_k.$$

This completes the proof.

Question: Do we have $nR^2 \xrightarrow{d} \chi^2_k$?

Answer: Yes, we have

$$nR^2 = \frac{n}{n - K}(n - K)R^2 \quad \text{and} \quad \frac{n}{n - K} \to 1.$$

Case II: Conditional Heteroskedasticity

Recall that under $H_0$,

$$\sqrt{n}(R\hat{\beta} - r) = R\sqrt{n}(\hat{\beta} - \beta^0) + \sqrt{n}(R\beta^0 - r) = R\sqrt{n}(\hat{\beta} - \beta^0) \xrightarrow{d} N(0, RQ^{-1}VQ^{-1}R'),$$

where $V = E(X_t X_t'\varepsilon_t^2)$. It follows that a robust (infeasible) Wald test statistic

$$W = \sqrt{n}(R\hat{\beta} - r)'[RQ^{-1}VQ^{-1}R']^{-1}\sqrt{n}(R\hat{\beta} - r) \xrightarrow{d} \chi^2_J.$$

Because $\hat{Q} \xrightarrow{p} Q$ and $\hat{V} \xrightarrow{p} V$, we have the feasible Wald test statistic

$$W = \sqrt{n}(R\hat{\beta} - r)'[R\hat{Q}^{-1}\hat{V}\hat{Q}^{-1}R']^{-1}\sqrt{n}(R\hat{\beta} - r) \xrightarrow{d} \chi^2_J$$

by the Slutsky theorem. By robustness, we mean that $W$ is valid no matter whether there exists conditional heteroskedasticity.
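The difference between the robust Wald statistic $W$ and $J \cdot F$ can be seen in a small Monte Carlo sketch. Everything specific here is an assumption for illustration: a single-regressor model with intercept, $H_0$: slope $= 2$ (true in the simulated data), so $R = (0, 1)$ and $J = 1$; errors $\varepsilon_t = x_t u_t$ with $x_t, u_t$ independent $N(0, 1)$; $n = 400$; 500 replications; a fixed seed; and the 5% critical value 3.841 of $\chi^2_1$. For this $R$, $R\hat{Q}^{-1}X_t = (x_t - \bar{x})/s_{xx}$ with $s_{xx} = n^{-1}\sum_t (x_t - \bar{x})^2$, which gives the scalar formulas used in the code.

```python
import random

CHI2_1_CRIT_5PCT = 3.841  # 95% quantile of the chi-square(1) distribution


def slope_tests(x, y, r):
    """Return (W, J*F) for H0: slope = r in y_t = b0 + b1 * x_t + e_t (J = 1)."""
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    s_xx = sum((xi - xbar) ** 2 for xi in x) / n  # equals 1 / [Qhat^{-1}]_{11}
    b1 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / (n * s_xx)
    b0 = ybar - b1 * xbar
    e = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
    s2 = sum(ei ** 2 for ei in e) / (n - 2)
    # R Qhat^{-1} Vhat Qhat^{-1} R' = mean((x_t - xbar)^2 e_t^2) / s_xx^2
    robust_avar = sum(((xi - xbar) * ei) ** 2 for xi, ei in zip(x, e)) / n / s_xx ** 2
    # s^2 R Qhat^{-1} R' = s^2 / s_xx
    conventional_avar = s2 / s_xx
    wald = n * (b1 - r) ** 2 / robust_avar
    jf = n * (b1 - r) ** 2 / conventional_avar
    return wald, jf


def rejection_rates(n=400, reps=500, seed=2024):
    """Share of replications in which each statistic exceeds the 5% critical value."""
    rng = random.Random(seed)
    rej_w = rej_jf = 0
    for _ in range(reps):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # H0 (slope = 2) holds; errors are conditionally heteroskedastic
        y = [1.0 + 2.0 * xi + xi * rng.gauss(0.0, 1.0) for xi in x]
        wald, jf = slope_tests(x, y, r=2.0)
        rej_w += wald > CHI2_1_CRIT_5PCT
        rej_jf += jf > CHI2_1_CRIT_5PCT
    return rej_w / reps, rej_jf / reps


rate_w, rate_jf = rejection_rates()
print(f"rejection rate of robust Wald W : {rate_w:.3f}")
print(f"rejection rate of J*F           : {rate_jf:.3f}")
```

Under this assumed design the rejection rate of $W$ should be near the nominal 5%, while $J \cdot F$ rejects the true null far more often, because its variance estimate converges to $\sigma^2 Q^{-1}$ rather than $Q^{-1}VQ^{-1}$.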
We can write $W$ equivalently as follows:

$$W = (R\hat{\beta} - r)'[R(X'X)^{-1}X'D(e)D(e)'X(X'X)^{-1}R']^{-1}(R\hat{\beta} - r),$$

where we have used the fact that

$$\hat{V} = \frac{1}{n}\sum_{t=1}^{n} X_t e_t e_t X_t' = \frac{X'D(e)D(e)'X}{n},$$

where $D(e) = \mathrm{diag}(e_1, e_2, \ldots, e_n)$.

Remarks: (i) Under conditional heteroskedasticity, the test statistics $J \cdot F$ and $(n - K)R^2$ cannot be used.

Question: What happens if there exists conditional heteroskedasticity but $J \cdot F$ or $(n - K)R^2$ is used?

Answer: There will exist Type I errors whose probability does not match the nominal significance level, even in large samples.

(ii) Although the general form of the Wald test statistic developed here can be used no matter whether there exists conditional homoskedasticity, this general form of test statistic may perform poorly in small samples. Thus, if one has information that the error term is conditionally homoskedastic, one should use the test statistics derived under conditional homoskedasticity, which will perform better in small samples. For this reason, it is important to test whether conditional homoskedasticity holds.

4.6 Testing Conditional Homoskedasticity

We now introduce a method to test conditional homoskedasticity.

Question: How to test conditional homoskedasticity for $\{\varepsilon_t\}$ in a linear regression model?

There have been many tests for conditional homoskedasticity. Here, we introduce a popular one due to White (1980).

White’s (1980) test

The null hypothesis is

$$H_0: E(\varepsilon_t^2|X_t) = E(\varepsilon_t^2),$$

where $\varepsilon_t$ is the regression error in the linear regression model

$$Y_t = X_t'\beta^0 + \varepsilon_t.$$

First, suppose $\varepsilon_t$ were observed, and we consider the auxiliary regression

$$\varepsilon_t^2 = \gamma_0 + \sum_{j=1}^{k} \gamma_j X_{jt} + \sum_{1 \le j \le l \le k} \gamma_{jl} X_{jt}X_{lt} + v_t = \gamma'\mathrm{vech}(X_t X_t') + v_t = \gamma' U_t + v_t,$$

where $\mathrm{vech}(X_t X_t')$ is an operator that stacks all lower triangular elements of the matrix $X_t X_t'$ into a $\frac{K(K+1)}{2} \times 1$ column vector. There is a total of $\frac{K(K+1)}{2}$ regressors in the auxiliary regression. This is essentially regressing $\varepsilon_t^2$ on the intercept, $X_t$, and the quadratic terms and cross-product terms of $X_t$. Under $H_0$, all coefficients except the intercept are jointly zero.
Any nonzero coefficients will indicate the existence of conditional heteroskedasticity. Thus, we can test $H_0$ by checking whether all coefficients except the intercept are jointly zero. Assuming that $E(\varepsilon_t^4 | X_t) = \mu_4$ (which implies $E(v_t^2 | X_t) = \sigma_v^2$ under $H_0$), we can run an OLS regression and construct an $R^2$-based test statistic. Under $H_0$, we can obtain
$$(n - J - 1)\tilde R^2 \xrightarrow{d} \chi^2_J,$$
where $J = \frac{K(K+1)}{2} - 1$ is the number of regressors other than the intercept.

Unfortunately, $\varepsilon_t$ is not observable. However, we can replace $\varepsilon_t$ with $e_t = Y_t - X_t'\hat\beta$, and run the following feasible auxiliary regression
$$e_t^2 = \gamma_0 + \sum_{j=1}^{k} \gamma_j X_{jt} + \sum_{1 \le j \le l \le k} \gamma_{jl} X_{jt} X_{lt} + \tilde v_t = \gamma'\,\mathrm{vech}(X_t X_t') + \tilde v_t;$$
the resulting test statistic satisfies
$$(n - J - 1)R^2 \xrightarrow{d} \chi^2_J.$$
It can be shown that the replacement of $\varepsilon_t^2$ by $e_t^2$ has no impact on the asymptotic $\chi^2_J$ distribution of $(n-J-1)R^2$. The proof, however, is rather tedious. For the details of the proof, see White (1980). Below, we provide some intuition.

Question: Why does the use of $e_t^2$ in place of $\varepsilon_t^2$ have no impact on the asymptotic distribution of $(n-J-1)R^2$?
Answer: Put $U_t = \mathrm{vech}(X_t X_t')$. Then the infeasible auxiliary regression is
$$\varepsilon_t^2 = U_t'\gamma^0 + v_t.$$
We have
$$\sqrt{n}(\tilde\gamma - \gamma^0) \xrightarrow{d} N(0, \sigma_v^2 Q_{uu}^{-1}),$$
and under $H_0: R\gamma^0 = r$ (where $R$ selects the $J$ coefficients other than the intercept and $r = 0$), we have
$$\sqrt{n}(R\tilde\gamma - r) \xrightarrow{d} N(0, \sigma_v^2 R Q_{uu}^{-1} R'),$$
where $\tilde\gamma$ is the OLS estimator and $\sigma_v^2 = E(v_t^2)$. This implies $R\tilde\gamma - r = O_P(n^{-1/2})$, which vanishes to zero in probability at rate $n^{-1/2}$. It is this term that yields the asymptotic $\chi^2_J$ distribution for $(n-J-1)\tilde R^2$, which is asymptotically equivalent to the test statistic
$$\sqrt{n}(R\tilde\gamma - r)'\,[s_v^2 R \hat Q_{uu}^{-1} R']^{-1}\,\sqrt{n}(R\tilde\gamma - r).$$
Now suppose we replace $\varepsilon_t^2$ with $e_t^2$, and consider the auxiliary regression
$$e_t^2 = U_t'\gamma^0 + \tilde v_t.$$
Denote the OLS estimator by $\hat\gamma$. We decompose
$$e_t^2 = \left[\varepsilon_t - X_t'(\hat\beta - \beta^0)\right]^2 = \varepsilon_t^2 + (\hat\beta - \beta^0)'X_t X_t'(\hat\beta - \beta^0) - 2(\hat\beta - \beta^0)'X_t\varepsilon_t = \gamma^{0\prime} U_t + \tilde v_t.$$
Thus, $\hat\gamma$ can be written as follows:
$$\hat\gamma = \tilde\gamma + \hat\delta + \hat\eta,$$
where $\tilde\gamma$ is the OLS estimator of the infeasible auxiliary regression, $\hat\delta$ is the effect of the second term, and $\hat\eta$ is the effect of the third term.

For the third term, $X_t\varepsilon_t$ is uncorrelated with $U_t$ given $E(\varepsilon_t | X_t) = 0$. Therefore, this term, after being scaled by the factor $\hat\beta - \beta^0$, which itself vanishes to zero in probability at rate $n^{-1/2}$, will vanish to zero in probability at rate $n^{-1}$; that is, $\hat\eta = O_P(n^{-1})$. This is expected to have negligible impact on the asymptotic distribution of the test statistic.

For the second term, $X_t X_t'$ is perfectly correlated with $U_t$. However, it is scaled by a factor of $\|\hat\beta - \beta^0\|^2$ rather than by $\|\hat\beta - \beta^0\|$ only. As a consequence, the regression coefficient of $(\hat\beta - \beta^0)'X_t X_t'(\hat\beta - \beta^0)$ on $U_t$ will also vanish to zero at rate $n^{-1}$; that is, $\hat\delta = O_P(n^{-1})$. Therefore, it also has negligible impact on the asymptotic distribution of $(n-J-1)R^2$.

Question: How to test conditional homoskedasticity if $E(\varepsilon_t^4 | X_t)$ is not a constant (i.e., $E(\varepsilon_t^4 | X_t) \ne \mu_4$ for any constant $\mu_4$ under $H_0$)? This corresponds to the case when $v_t$ displays conditional heteroskedasticity.

Hint: Consider a robust Wald test statistic. Please try it.
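The feasible version of White's test described in this section can be sketched as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: NumPy is assumed available, the function name `white_test` is hypothetical, the first column of `X` is assumed to be the intercept, and the columns of $U_t = \mathrm{vech}(X_t X_t')$ are assumed not to be perfectly collinear (for example, no dummy regressors whose squares duplicate themselves).

```python
import numpy as np

def white_test(X, y):
    """(n - J - 1) R^2 statistic from regressing e_t^2 on vech(X_t X_t').

    Illustrative helper (not from the text). X is n x K with a column of
    ones first; y has length n.
    """
    n, K = X.shape
    # OLS residuals from the original regression, squared
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    e2 = (y - X @ beta_hat) ** 2
    # U_t = vech(X_t X_t'): products X_jt * X_lt over the lower triangle;
    # the (0, 0) element is the intercept because X_0t = 1
    rows, cols = np.tril_indices(K)
    U = X[:, rows] * X[:, cols]
    J = U.shape[1] - 1  # regressors other than the intercept
    # Feasible auxiliary regression of e_t^2 on U_t
    gamma_hat, *_ = np.linalg.lstsq(U, e2, rcond=None)
    v = e2 - U @ gamma_hat
    # Centered R^2 of the auxiliary regression
    r2 = 1.0 - (v @ v) / np.sum((e2 - e2.mean()) ** 2)
    stat = (n - J - 1) * r2
    return stat, J, r2
```

Under $H_0$ and the constant conditional fourth moment assumption, `stat` would be compared with a $\chi^2_J$ critical value; a large value points to conditional heteroskedasticity.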
4.7 Empirical Applications

4.8 Summary and Conclusion

In this chapter, within the context of i.i.d. observations, we have relaxed some key assumptions of the classical linear regression model. In particular, we do not assume conditional normality for $\varepsilon_t$ and allow for conditional heteroskedasticity. Because the exact finite sample distribution of the OLS estimator is generally unknown, we have relied on asymptotic analysis. It is found that for large samples, the results for the OLS estimator $\hat\beta$ and related test statistics (e.g., the t-test statistic and F-test statistic) are still applicable under conditional homoskedasticity. Under conditional heteroskedasticity, however, the statistical properties of $\hat\beta$ are different from those of $\hat\beta$ under conditional homoskedasticity, and as a consequence, the conventional t-test and F-test are invalid even when the sample size $n \to \infty$. One has to use White's (1980) heteroskedasticity-consistent variance estimator for the OLS estimator $\hat\beta$ and use it to construct robust test statistics. A direct test for conditional heteroskedasticity, due to White (1980), is described.

References

Hong, Y. (2006), Probability Theory and Statistics for Economists.
White, H. (1980), Econometrica.
White, H. (1999), Asymptotic Theory for Econometricians, 2nd Edition.
Hayashi, F. (2000), Econometrics, Ch.2.

ADVANCED ECONOMETRICS PROBLEM SET 04

1. Suppose a stochastic process $\{Y_t, X_t'\}'$ satisfies the following assumptions:

Assumption 1.1 [Linearity]: $\{Y_t, X_t'\}'$ is an i.i.d. process with
$$Y_t = X_t'\beta^0 + \varepsilon_t, \quad t = 1, \ldots, n,$$
for some unknown parameter $\beta^0$ and some unobservable disturbance $\varepsilon_t$;

Assumption 1.2 [i.i.d.]: The $K \times K$ matrix $E(X_t X_t') = Q$ is nonsingular and finite;

Assumption 1.3 [conditional heteroskedasticity]:
(i) $E(X_t \varepsilon_t) = 0$;
(ii) $E(\varepsilon_t^2 | X_t) \ne \sigma^2$;
(iii) $E(X_{jt}^4) \le C$ for all $0 \le j \le k$, and $E(\varepsilon_t^4) \le C$ for some $C < \infty$.

(a) Show that $\hat\beta \xrightarrow{p} \beta^0$.
(b) Show that $\sqrt{n}(\hat\beta - \beta^0) \xrightarrow{d} N(0, \Omega)$, where $\Omega = Q^{-1}VQ^{-1}$, and $V = E(X_t X_t' \varepsilon_t^2)$.

(c) Show that the asymptotic variance estimator
$$\hat\Omega = \hat Q^{-1}\hat V\hat Q^{-1} \xrightarrow{p} \Omega,$$
where $\hat Q = n^{-1}\sum_{t=1}^{n} X_t X_t'$ and $\hat V = n^{-1}\sum_{t=1}^{n} X_t X_t' e_t^2$. This is called White's (1980) heteroskedasticity-consistent variance-covariance matrix estimator.

(d) Consider a test for the hypothesis $H_0: R\beta^0 = r$. Do we have $J \cdot F \xrightarrow{d} \chi^2_J$, where
$$F = \frac{(R\hat\beta - r)'[R(X'X)^{-1}R']^{-1}(R\hat\beta - r)/J}{s^2}$$
is the usual F-test statistic? If it holds, give the reasoning. If it does not, provide an alternative test statistic that converges in distribution to $\chi^2_J$.

2. Suppose the following assumptions hold:

Assumption 2.1: $\{Y_t, X_t'\}'$ is an i.i.d. random sample with
$$Y_t = X_t'\beta^0 + \varepsilon_t$$
for some unknown parameter $\beta^0$ and unobservable random disturbance $\varepsilon_t$.

Assumption 2.2: $E(\varepsilon_t | X_t) = 0$ a.s.

Assumption 2.3:
(i) $W_t = W(X_t)$ is a positive function of $X_t$;
(ii) The $K \times K$ matrix $E(X_t W_t X_t') = Q_w$ is finite and nonsingular;
(iii) $E(W_t^8) \le C < \infty$, $E(X_{jt}^8) \le C < \infty$ for all $0 \le j \le k$, and $E(\varepsilon_t^4) \le C$.

Assumption 2.4: $V_w = E(X_t W_t^2 X_t' \varepsilon_t^2)$ is finite and nonsingular.

We consider the so-called weighted least squares (WLS) estimator for $\beta^0$:
$$\hat\beta_w = \left(n^{-1}\sum_{t=1}^{n} X_t W_t X_t'\right)^{-1} n^{-1}\sum_{t=1}^{n} X_t W_t Y_t.$$

(a) Show that $\hat\beta_w$ is the solution to the following problem:
$$\min_{\beta} \sum_{t=1}^{n} W_t (Y_t - X_t'\beta)^2.$$

(b) Show that $\hat\beta_w$ is consistent for $\beta^0$.

(c) Show that $\sqrt{n}(\hat\beta_w - \beta^0) \xrightarrow{d} N(0, \Omega_w)$ for some $K \times K$ finite and positive definite matrix $\Omega_w$. Obtain the expression of $\Omega_w$ under (i) conditional homoskedasticity $E(\varepsilon_t^2 | X_t) = \sigma^2$ a.s. and (ii) conditional heteroskedasticity $E(\varepsilon_t^2 | X_t) \ne \sigma^2$.

(d) Propose an estimator $\hat\Omega_w$ for $\Omega_w$, and show that $\hat\Omega_w$ is consistent for $\Omega_w$ under conditional homoskedasticity and conditional heteroskedasticity respectively.

(e) Construct a test statistic for $H_0: R\beta^0 = r$, where $R$ is a $J \times K$ matrix and $r$ is a $J \times 1$ vector, under conditional homoskedasticity and under conditional heteroskedasticity respectively.
Derive the asymptotic distribution of the test statistic under $H_0$ in each case.

(f) Suppose $E(\varepsilon_t^2 | X_t) = \sigma^2(X_t)$ is known, and we set $W_t = \sigma^{-1}(X_t)$. Construct a test statistic for $H_0: R\beta^0 = r$, where $R$ is a $J \times K$ matrix and $r$ is a $J \times 1$ vector. Derive the asymptotic distribution of the test statistic under $H_0$.

3. Consider the problem of testing conditional homoskedasticity ($H_0: E(\varepsilon_t^2 | X_t) = \sigma^2$) for a linear regression model
$$Y_t = X_t'\beta^0 + \varepsilon_t,$$
where $X_t$ is a $K \times 1$ vector consisting of an intercept and explanatory variables. To test conditional homoskedasticity, we consider the auxiliary regression
$$\varepsilon_t^2 = \mathrm{vech}(X_t X_t')'\gamma + v_t = U_t'\gamma + v_t.$$
Suppose Assumptions 4.1, 4.2, 4.3, 4.4, 4.7 hold, and $E(\varepsilon_t^4 | X_t) \ne \mu_4$. That is, $E(\varepsilon_t^4 | X_t)$ is a function of $X_t$.

(a) Show that $\mathrm{var}(v_t | X_t) \ne \sigma_v^2$ under $H_0$. That is, the disturbance $v_t$ in the auxiliary regression model displays conditional heteroskedasticity.

(b) Suppose $\varepsilon_t$ is directly observable. Construct an asymptotically valid test for the null hypothesis $H_0$ of conditional homoskedasticity. Justify your reasoning and test statistic.