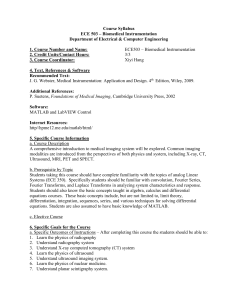

Signal Processing Overview of Ultrasound

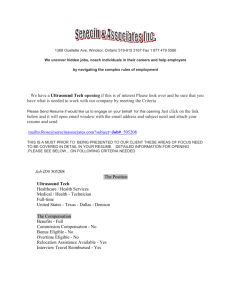
