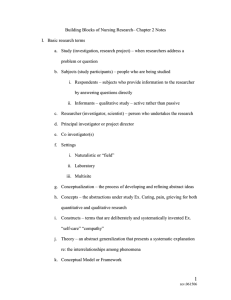

Using Contextual Constructs Model to Frame Doctoral Research

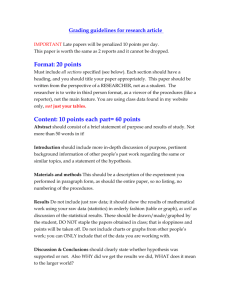
