3. Hypothesis tests and confidence intervals in multiple regression

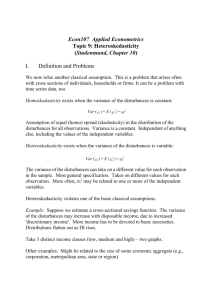

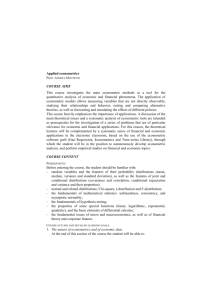

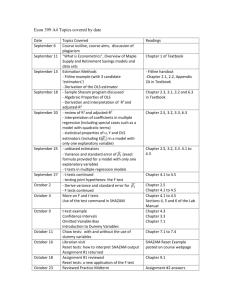

[Slide 39]
Contents of previous section:
• Definition of the multiple regression model
• OLS estimation of the coefficients
• Measures-of-fit (based on estimation results)
• Some problems in the regression model (omitted-variable bias, multicollinearity)

Now:
• Statistical inference based on OLS estimation (hypothesis tests, confidence intervals)

[Slide 40]
3.1. Standard errors for the OLS estimators

Recall:
• OLS estimators are subject to sampling uncertainty
• Given the OLS assumptions on Slide 18, the OLS estimators are normally distributed in large samples, that is,

$$\hat{\beta}_j \sim N\left(\beta_j, \sigma^2_{\hat{\beta}_j}\right) \quad \text{for } j = 0, \ldots, k$$

Now:
• How can we estimate the (unknown) variance $\sigma^2_{\hat{\beta}_j}$ of the OLS estimator and its standard deviation $\sigma_{\hat{\beta}_j} \equiv \sqrt{\sigma^2_{\hat{\beta}_j}}$?

[Slide 41]
Definition 3.1: (Standard error)
We call an appropriately defined estimator of the standard deviation $\sigma_{\hat{\beta}_j}$ the standard error of $\hat{\beta}_j$ and denote it by $SE(\hat{\beta}_j)$.

Natural question:
• What constitutes a good estimator of $\sigma_{\hat{\beta}_j}$?

Answer:
• The analytical formula of a good estimator crucially hinges on whether the errors $u_i$ are homoskedastic or heteroskedastic (see Definition 2.2 on Slide 8)

[Slide 42]
3.1.1. Homoskedasticity / heteroskedasticity

Important notes:
• The way we defined the terms 'homoskedasticity' and 'heteroskedasticity' in Definition 2.2 on Slide 8 implies that 'homoskedasticity' is a special case of 'heteroskedasticity' ('heteroskedasticity' is more general than 'homoskedasticity')
• Since the OLS assumptions on Slide 18 place no restrictions on the conditional variance of the error terms $u_i$, they apply to both the general case of 'heteroskedasticity' and the special case of 'homoskedasticity'
→ Theorem 2.4 on Slide 19 is valid under both concepts

[Slide 43]
Corollary 3.2: (To Theorem 2.4, Slide 19)
Given the OLS assumptions on Slide 18, the OLS estimators are unbiased, consistent, and normally distributed in large samples (asymptotically normal) irrespective of whether the error terms are heteroskedastic or homoskedastic.

Classical econometrics:
• In classical econometrics the default assumption is that the error terms are homoskedastic
• Given our OLS assumptions plus homoskedasticity, the OLS estimators are efficient (optimal) among all alternative linear and unbiased estimators of the regression coefficients $\beta_0, \ldots, \beta_k$ (Gauss-Markov theorem)

[Slide 44]
Classical econometrics: [continued]
• Under heteroskedasticity there are more efficient estimators than OLS, namely the so-called (feasible) Generalized Least Squares (GLS) estimators (see the lectures Econometrics I+II)

Mathematical aspects:
• There exist specific formulas for the standard errors $SE(\hat{\beta}_j)$ both under heteroskedasticity and under homoskedasticity
• Since homoskedasticity is a special case of heteroskedasticity, the standard errors under homoskedasticity have a simpler structural form (homoskedasticity-only standard errors)

[Slide 45]
Mathematical aspects: [continued]
• The homoskedasticity-only standard errors are valid only under homoskedasticity and lead to invalid statistical inference under heteroskedasticity
• The more general standard errors under heteroskedasticity were proposed by Eicker (1967), Huber (1967), and White (1980) (Eicker-Huber-White standard errors)
• The Eicker-Huber-White standard errors produce valid statistical inference irrespective of whether the error terms are heteroskedastic or homoskedastic (heteroskedasticity-robust standard errors)

[Slide 46]
Homoskedasticity-only and heteroskedasticity-robust standard errors for the house-prices dataset

Homoskedasticity-only standard errors
Dependent Variable: SALEPRICE. Method: Least Squares. Date: 07/02/12, Time: 16:50. Sample: 1 546. Included observations: 546.

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -4009.550 | 3603.109 | -1.112803 | 0.2663 |
| LOTSIZE | 5.429174 | 0.369250 | 14.70325 | 0.0000 |
| BEDROOMS | 2824.614 | 1214.808 | 2.325153 | 0.0204 |
| BATHROOMS | 17105.17 | 1734.434 | 9.862107 | 0.0000 |
| STOREYS | 7634.897 | 1007.974 | 7.574494 | 0.0000 |

Heteroskedasticity-robust standard errors
Dependent Variable: SALEPRICE. Method: Least Squares. Date: 28/02/12, Time: 09:43. Sample: 1 546. Included observations: 546. White heteroskedasticity-consistent standard errors & covariance.

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -4009.550 | 3668.048 | -1.093102 | 0.2748 |
| LOTSIZE | 5.429174 | 0.459424 | 11.81735 | 0.0000 |
| BEDROOMS | 2824.614 | 1262.593 | 2.237153 | 0.0257 |
| BATHROOMS | 17105.17 | 2263.281 | 7.557690 | 0.0000 |
| STOREYS | 7634.897 | 917.6527 | 8.320029 | 0.0000 |

Summary statistics (identical in both outputs)

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.535547 | Mean dependent var | 68121.60 |
| Adjusted R-squared | 0.532113 | S.D. dependent var | 26702.67 |
| S.E. of regression | 18265.23 | Akaike info criterion | 22.47250 |
| Sum squared resid | 1.80E+11 | Schwarz criterion | 22.51190 |
| Log likelihood | -6129.993 | Hannan-Quinn criter. | 22.48790 |
| F-statistic | 155.9529 | Durbin-Watson stat | 1.482942 |
| Prob(F-statistic) | 0.000000 | | |

[Slide 47]
Practical issues:
• Heteroskedasticity arises in many econometric applications
→ It is prudent to assume heteroskedastic errors unless you have compelling reasons to believe otherwise
• Rule of thumb: to be on the safe side, always use heteroskedasticity-robust standard errors
• Many software packages (like EViews) report homoskedasticity-only standard errors as their default setting
• It is up to the user to activate the option of heteroskedasticity-robust standard errors (in EViews: use the command ls(cov=white))

[Slide 48]
3.1.2. Autocorrelated errors

Problem:
• Particularly in time-series regressions (that is, when the index $i$ represents distinct points in time) we often encounter autocorrelated error terms:

$$\mathrm{Corr}(u_i, u_j) \neq 0 \quad \text{for some } i \neq j$$

• Under autocorrelation the OLS coefficient estimators are still consistent, but the usual OLS standard errors become inconsistent
→ Statistical inference based on the usual OLS standard errors becomes invalid

[Slide 49]
Solution:
• Standard errors should be computed using a heteroskedasticity- and autocorrelation-consistent (HAC) estimator of the variance
• Such HAC standard errors become relevant in Sections 6 and 10
• A well-known (special) HAC estimator is the so-called Newey-West variance estimator (see Newey and West, 1987)
• For a more formal discussion of HAC estimators see Stock and Watson (2011, Section 15.4)

[Slide 50]
3.2. Hypothesis tests and confidence intervals for a single coefficient

Testing problem:
• Consider one of the $k$ regressors, say $X_j$, and the corresponding regression coefficient $\beta_j$
• We aim at testing the two-sided problem that the unknown $\beta_j$ takes on some specific value $\beta_{j,0}$
• In technical terms: $H_0: \beta_j = \beta_{j,0}$ vs.
$H_1: \beta_j \neq \beta_{j,0}$

[Slide 51]
Testing procedure:
• Compute the standard error of $\hat{\beta}_j$, $SE(\hat{\beta}_j)$
• Compute the so-called t-statistic:

$$t = \frac{\hat{\beta}_j - \beta_{j,0}}{SE(\hat{\beta}_j)} \qquad (3.1)$$

• Compute the p-value:

$$p\text{-value} = 2 \cdot \Phi\left(-\left|t^{act}\right|\right), \qquad (3.2)$$

where $t^{act}$ is the value of the t-statistic actually computed and $\Phi(\cdot)$ is the cdf of the standard normal distribution
• Reject $H_0$ at the 5% significance level if p-value < 0.05 (or, equivalently, if $|t^{act}| > 1.96$)

[Slide 52]
Remarks:
• Our testing procedure makes use of the result that the sampling distribution of the OLS estimator $\hat{\beta}_j$ is approximately normal for moderate and large sample sizes
• Under $H_0$ the mean of this distribution is $\beta_{j,0}$
→ The t-statistic (3.1) is approximately $N(0, 1)$ distributed
• The name 't-statistic' stems from the fact that for finite sample sizes and under some additional (classical) assumptions on the multiple regression model the t-statistic (3.1) follows the t-distribution with $n - k - 1$ degrees of freedom
• Given these restrictive assumptions, the p-values should be computed from the quantiles of the $t_{n-k-1}$-distribution

[Slide 53]
Remarks: [continued]
• Since these additional assumptions are rarely met in real-world applications and since sample sizes are typically moderate or even large, we base inference on the p-values (3.2) computed from the normal distribution

Attention:
• Many software packages (like EViews) assume the validity of the classical assumptions and report p-values based on the $t_{n-k-1}$-distribution in the default setting
→ p-values should be corrected manually (see the following example)

[Slide 54]
p-values for the house-prices dataset based on the t- and the normal distribution, respectively

Dependent Variable: SALEPRICE. Method: Least Squares. Date: 28/02/12, Time: 09:43. Sample: 1 546. Included observations: 546. White heteroskedasticity-consistent standard errors & covariance.

| Variable | Coefficient | Std. Error | t-Statistic | Prob. (t-distribution) | Prob. (normal distribution) |
|---|---|---|---|---|---|
| C | -4009.550 | 3668.048 | -1.093102 | 0.2748 | 0.2743 |
| LOTSIZE | 5.429174 | 0.459424 | 11.81735 | 0.0000 | 0.0000 |
| BEDROOMS | 2824.614 | 1262.593 | 2.237153 | 0.0257 | 0.0253 |
| BATHROOMS | 17105.17 | 2263.281 | 7.557690 | 0.0000 | 0.0000 |
| STOREYS | 7634.897 | 917.6527 | 8.320029 | 0.0000 | 0.0000 |

Summary statistics (identical in both outputs)

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.535547 | Mean dependent var | 68121.60 |
| Adjusted R-squared | 0.532113 | S.D. dependent var | 26702.67 |
| S.E. of regression | 18265.23 | Akaike info criterion | 22.47250 |
| Sum squared resid | 1.80E+11 | Schwarz criterion | 22.51190 |
| Log likelihood | -6129.993 | Hannan-Quinn criter. | 22.48790 |
| F-statistic | 155.9529 | Durbin-Watson stat | 1.482942 |
| Prob(F-statistic) | 0.000000 | | |

[Slide 55]
Remarks: [continued]
• A $(1 - \alpha)$ two-sided confidence interval for the coefficient $\beta_j$ is an interval that contains the true value of $\beta_j$ with probability $(1 - \alpha)$
• It contains the true value of $\beta_j$ in $100 \cdot (1 - \alpha)$% of all possible randomly drawn samples
• Equivalently, it is the set of values of $\beta_{j,0}$ that cannot be rejected by an $\alpha$-level hypothesis test $H_0: \beta_j = \beta_{j,0}$ vs. $H_1: \beta_j \neq \beta_{j,0}$
• When the sample size is large, we approximate the $(1 - \alpha)$ confidence interval for $\beta_j$ by

$$\left[\hat{\beta}_j - u_{1-\alpha/2} \cdot SE(\hat{\beta}_j),\; \hat{\beta}_j + u_{1-\alpha/2} \cdot SE(\hat{\beta}_j)\right], \qquad (3.3)$$

where $u_\alpha$ denotes the $\alpha$-quantile of the $N(0, 1)$-distribution

[Slide 56]
3.3. Tests of joint hypotheses

Now:
• Testing hypotheses on two or more regression coefficients (joint hypotheses)
• Example: $H_0: \beta_1 = 0$ and $\beta_2 = 0$ vs.
$H_1: \beta_1 \neq 0$ and/or $\beta_2 \neq 0$ (two restrictions)

[Slide 57]
General form of joint hypothesis:
• Consider the $k + 1$ regression coefficients $\beta_0, \beta_1, \ldots, \beta_k$ and $k + 1$ prespecified real numbers $\beta_{0,0}, \beta_{1,0}, \ldots, \beta_{k,0}$
• For $q$ out of the $k + 1$ coefficients we consider joint null and alternative hypotheses of the form

$H_0: \beta_j = \beta_{j,0},\ \beta_m = \beta_{m,0},\ \ldots$ for a total of $q$ restrictions,
$H_1$: one or more of the $q$ restrictions under $H_0$ does not hold

[Slide 58]
Remarks:
• A special case is given by considering the $k$ restrictions with $\beta_{1,0} = 0, \beta_{2,0} = 0, \ldots, \beta_{k,0} = 0$, that is

$H_0: \beta_1 = 0, \ldots, \beta_k = 0$
$H_1$: at least one of the $\beta_m$ is nonzero for $m = 1, \ldots, k$

(overall-significance test of the regression model)
• It is tempting to conduct the test by using the usual t-statistics to test the $k$ restrictions one at a time:

Test #1: $H_0: \beta_1 = 0$ vs. $H_1: \beta_1 \neq 0$
Test #2: $H_0: \beta_2 = 0$ vs. $H_1: \beta_2 \neq 0$
...
Test #k: $H_0: \beta_k = 0$ vs. $H_1: \beta_k \neq 0$

[Slide 59]
Remarks: [continued]
• This approach is unreliable:
"... testing a series of single hypotheses is not equivalent to testing those same hypotheses jointly. The intuitive reason for this is that in a joint test of several hypotheses any single hypothesis is 'affected' by the information in the other hypotheses." (Gujarati and Porter, 2009, p. 238)

Solution:
• Joint-hypotheses testing on the basis of the F-statistic
• EViews example: overall-significance test for the house-prices dataset

[Slide 60]
F-test (overall-significance test) for the house-prices dataset

Dependent Variable: SALEPRICE. Method: Least Squares. Date: 28/02/12, Time: 09:43. Sample: 1 546. Included observations: 546. White heteroskedasticity-consistent standard errors & covariance.

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -4009.550 | 3668.048 | -1.093102 | 0.2743 |
| LOTSIZE | 5.429174 | 0.459424 | 11.81735 | 0.0000 |
| BEDROOMS | 2824.614 | 1262.593 | 2.237153 | 0.0253 |
| BATHROOMS | 17105.17 | 2263.281 | 7.557690 | 0.0000 |
| STOREYS | 7634.897 | 917.6527 | 8.320029 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.535547 | Mean dependent var | 68121.60 |
| Adjusted R-squared | 0.532113 | S.D. dependent var | 26702.67 |
| S.E. of regression | 18265.23 | Akaike info criterion | 22.47250 |
| Sum squared resid | 1.80E+11 | Schwarz criterion | 22.51190 |
| Log likelihood | -6129.993 | Hannan-Quinn criter. | 22.48790 |
| F-statistic | 155.9529 | Durbin-Watson stat | 1.482942 |
| Prob(F-statistic) | 0.000000 | | |

[Slide 61]
Form and null-distribution of the F-statistic:
• The exact formula of the F-statistic used for testing the (general) problem of $q$ restrictions given on Slide 57 depends on the specific assumptions imposed on the multiple regression model
• The exact null-distribution of the F-statistic (F-distribution with exactly specified degrees-of-freedom parameters $n_1$ and $n_2$) also depends on these assumptions
• In EViews, the F-statistic and its null-distribution are computed under the classical assumptions of (1) normally distributed and (2) homoskedastic error terms $u_i$
• In contrast, Stock and Watson (2011) do not assume normally distributed errors $u_i$ and consider a heteroskedasticity-robust F-statistic (Stock and Watson, 2011, pp. 748-749)

[Slide 62]
3.4. Testing single restrictions involving multiple coefficients

General F-testing:
• Consider again the multiple regression model:

$$Y_i = \beta_0 + \beta_1 \cdot X_{1i} + \beta_2 \cdot X_{2i} + \ldots + \beta_k \cdot X_{ki} + u_i \qquad (3.4)$$

• We aim at testing hypotheses involving some linear restrictions on the parameters of the $k$-variable model such as

$H_0: \beta_2 = \beta_3$ vs. $H_1: \beta_2 \neq \beta_3$
$H_0: \beta_3 + \beta_4 + \beta_5 = 3$ vs.
$H_1: \beta_3 + \beta_4 + \beta_5 \neq 3$

[Slide 63]
General F-testing: [continued]
• All these hypotheses can be tested using a general F-statistic
• This general testing strategy distinguishes sharply between the so-called unrestricted regression model (3.4) and the restricted regression obtained from plugging the restriction specified under $H_0$ into the unrestricted regression (3.4)
• The general F-statistic then compares the sum of squared residuals obtained from the unrestricted regression (3.4), denoted by $SSR_{UR}$, with the sum of squared residuals obtained from the restricted regression, denoted by $SSR_R$ (see Gujarati and Porter, 2009, pp. 249-254)

[Slide 64]
General F-testing: [continued]
• As in Section 3.3, the exact null-distribution of this general F-statistic (F-distribution with exactly specified degrees-of-freedom parameters) again depends on the assumptions imposed on the multiple regression model (in particular on the normality/nonnormality and the homoskedasticity/heteroskedasticity of the error terms $u_i$)
• EViews provides a fully-fledged framework for performing these general F-tests under the classical assumptions of normally distributed and homoskedastic error terms
→ A thorough discussion will be given in the class

[Slide 65]
3.5. Confidence sets for multiple coefficients

Definition 3.3: (Confidence set)
A 95% confidence set for two or more coefficients is the set of numbers that contains the true population values of these coefficients in 95% of randomly drawn samples.

Remarks:
• A confidence set is the generalization to two or more coefficients of a confidence interval for a single coefficient
• Recall Formula (3.3) on Slide 55 for constructing a confidence interval for the single coefficient $\beta_j$

[Slide 66]
Remarks: [continued]
• Instead of using Formula (3.3), an equivalent way of constructing, say, a 95% confidence interval for the single coefficient $\beta_j$ consists in determining the set of all values $\beta_{j,0}$ that cannot be rejected by a two-sided hypothesis test $H_0: \beta_j = \beta_{j,0}$ vs.
$H_1: \beta_j \neq \beta_{j,0}$ at the 5% significance level based on the t-statistic (3.1) on Slide 51
• This approach can be extended to the case of multiple coefficients using the general F-testing approach described in Section 3.3

[Slide 67]
Example:
• Suppose you are interested in constructing a confidence set for the two coefficients $\beta_j$ and $\beta_m$ (for $j, m = 0, \ldots, k$, $j \neq m$)
• In line with Slide 57, consider testing a joint null hypothesis with the 2 restrictions $H_0: \beta_j = \beta_{j,0},\ \beta_m = \beta_{m,0}$ at the 5% level using the appropriate F-statistic
• The set of all pairs $(\beta_{j,0}, \beta_{m,0})$ for which you cannot reject $H_0$ at the 5% level constitutes a 95% confidence set for $\beta_j$ and $\beta_m$

[Slide 68]
Remarks:
• In line with the confidence-interval formula (3.3), there are also analytical formulas for constructing confidence sets for multiple coefficients (not to be discussed here)
• EViews provides a fully-fledged framework for constructing confidence intervals and confidence sets (see class for details)

Example:
• Confidence intervals and two-dimensional confidence sets for the house-prices dataset in EViews

[Slide 69]
Single-coefficient confidence intervals for the house-prices dataset

Coefficient Confidence Intervals. Date: 20/03/12, Time: 12:39. Sample: 1 546. Included observations: 546.

| Variable | Coefficient | 90% CI Low | 90% CI High | 95% CI Low | 95% CI High | 99% CI Low | 99% CI High |
|---|---|---|---|---|---|---|---|
| C | -4009.550 | -10053.30 | 2034.202 | -11214.91 | 3195.812 | -13491.26 | 5472.162 |
| LOTSIZE | 5.429174 | 4.672192 | 6.186155 | 4.526700 | 6.331647 | 4.241586 | 6.616761 |
| BEDROOMS | 2824.614 | 744.2712 | 4904.956 | 344.4288 | 5304.799 | -439.1222 | 6088.350 |
| BATHROOMS | 17105.17 | 13376.02 | 20834.33 | 12659.28 | 21551.07 | 11254.71 | 22955.64 |
| STOREYS | 7634.897 | 6122.903 | 9146.891 | 5832.298 | 9437.496 | 5262.813 | 10006.98 |

[Slide 70]
95% two-dimensional confidence sets for the house-prices dataset

[Figure: matrix of 95% confidence ellipses for pairs of coefficients. Vertical axes: LOTSIZE, BEDROOMS, BATHROOMS, STOREYS. Horizontal axes: C, LOTSIZE, BEDROOMS, BATHROOMS.]

[Slide 71]
Remarks:
• The vertical and horizontal dotted lines show the corresponding 95% confidence intervals for the single coefficients $\beta_j$, $\beta_m$
• The orientation of the ellipse indicates the estimated correlation between the OLS estimators $\hat{\beta}_j$ and $\hat{\beta}_m$
• If the OLS estimators $\hat{\beta}_j$ and $\hat{\beta}_m$ were uncorrelated, the principal axes of the ellipses would be parallel to the coordinate axes (the ellipses would appear as exact circles if, in addition, both axes were scaled in units of the respective standard errors)
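
Worked example (not part of the original slides): the single-coefficient procedure of Section 3.2 and the overall-significance F-statistic of Section 3.3 can be retraced numerically with the house-prices output. The following is a minimal Python sketch under stated assumptions. The function names are hypothetical and chosen for illustration only. The expression used for the F-statistic is the standard textbook formula based on R-squared under the classical (homoskedasticity-only) assumptions; the slides do not state it explicitly, so treat it as an assumption about what EViews reports. All input numbers are copied from the tables above.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution N(0, 1)


def t_statistic(beta_hat, beta_null, se):
    """t-statistic as in formula (3.1): (estimate - hypothesized value) / SE."""
    return (beta_hat - beta_null) / se


def p_value_normal(t_act):
    """Two-sided p-value as in formula (3.2): 2 * Phi(-|t_act|)."""
    return 2.0 * STD_NORMAL.cdf(-abs(t_act))


def conf_int_normal(beta_hat, se, alpha=0.05):
    """Large-sample (1 - alpha) confidence interval as in formula (3.3)."""
    u = STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)  # (1 - alpha/2)-quantile of N(0, 1)
    return beta_hat - u * se, beta_hat + u * se


def overall_f_from_r2(r2, n, k):
    """Overall-significance F-statistic from R-squared.

    Assumption: textbook homoskedasticity-only formula with k restrictions
    and n - k - 1 residual degrees of freedom (not given in the slides).
    """
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


if __name__ == "__main__":
    # BEDROOMS coefficient and heteroskedasticity-robust SE from the tables
    beta_hat, se = 2824.614, 1262.593
    t_act = t_statistic(beta_hat, 0.0, se)
    print("t-statistic:", round(t_act, 6))
    print("p-value (normal):", round(p_value_normal(t_act), 4))
    print("95% CI (normal):", conf_int_normal(beta_hat, se))
    # R-squared, sample size and number of regressors from the EViews output
    print("overall F:", round(overall_f_from_r2(0.535547, 546, 4), 4))
```

The 95% interval computed this way is slightly narrower than the one in the EViews confidence-interval table on Slide 69. This is consistent with the remark on Slide 53 that EViews works with the $t_{n-k-1}$-distribution by default, whereas formula (3.3) uses the quantiles of the normal distribution.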