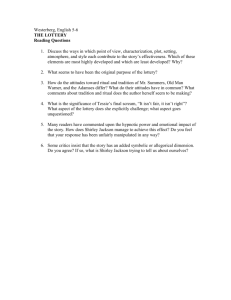

Slides 2


Probability theory and assessment

Probability basics

Probability theory offers a mathematical language for describing uncertainties:

p(disease d) = 0.35

In a medical problem, a probability p(disease d) = 0.35 for a patient can be interpreted in two ways:

• in a population of 1000 similar patients, 350 have the disease d;

• the attending physician assesses the likelihood that the patient has the disease d at 0.35.

A frequentist’s probabilities

• in theory, a probability Pr(d) is the relative frequency with which d occurs in an infinitely repeated experiment;

• in practice, the probability is estimated from (sufficient) experimental data.

Example

In a clinical study, 10000 men over 40 years of age have been examined for hypertension:

hypertension: 1983    no hypertension: 8017

The probability of hypertension in a man aged 45 is estimated to be

p(hypertension) = 1983 / 10000 ≈ 0.20

Sources of information

Sources of probabilistic information are:

• (experimental) data;

• literature;

• human experts.

The first two sources are the most reliable.
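The hypertension estimate is a plain relative frequency. Below is a minimal Python sketch of that estimate. The helper name `relative_frequency` is illustrative and does not come from the slides.

```python
def relative_frequency(count: int, total: int) -> float:
    """Frequentist estimate of a probability: occurrences divided by trials."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= count <= total:
        raise ValueError("count must lie between 0 and total")
    return count / total


# Counts from the clinical study: 1983 men with and 8017 without hypertension.
with_hypertension, without_hypertension = 1983, 8017
p_hypertension = relative_frequency(
    with_hypertension, with_hypertension + without_hypertension
)
print(f"p(hypertension) = {p_hypertension:.4f}, i.e. about {p_hypertension:.2f}")
```

Running it prints `p(hypertension) = 0.1983, i.e. about 0.20`.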

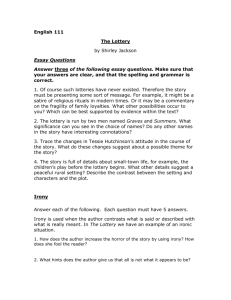

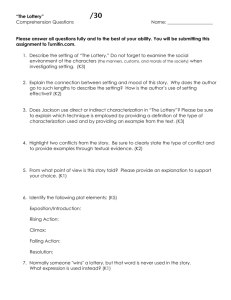

Subjective probabilities

A subjective probability is based upon personal knowledge and experience.

For assessing probabilities with experts, various tools are available:

• probability scales;

• probability wheels;

• betting models;

• lottery models.

Probability scales — an example

For their new soda, an expert from Colaco has to assess the probability that the soda will turn out a national success:

• the expert is asked, using mathematical notation, to assess:

p(national success) = ?

• the expert is asked to indicate the probability on a scale from 0 to 100% certainty:

100: (almost) certain

85: probable

75: expected

50: fifty-fifty

25: uncertain

15: improbable

0: (almost) impossible

Probability wheels

A probability wheel is composed of two coloured faces and a hand.

The expert is asked to adjust the area of the red face so that the probability of the hand stopping there equals the probability of interest.

Betting models — an example

For their new soda, an expert from Colaco is asked to assess the probability of a national success n:

• the expert is offered two bets:

bet d: national success → x euro; national failure → −y euro

bet d̄: national success → −x euro; national failure → y euro

• if the expert is indifferent between d and d̄, then

x · Pr(n) − y · (1 − Pr(n)) = y · (1 − Pr(n)) − x · Pr(n)

from which we find Pr(n) = y / (x + y).
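The indifference condition of the betting model can be checked numerically. Below is a minimal sketch, assuming positive stakes x and y. The function names and the stakes x = 30, y = 70 are illustrative. Bet d̄ is exactly the reverse of bet d, so at indifference both bets also have expected value zero.

```python
def expected_value_bet_d(p: float, x: float, y: float) -> float:
    """Bet d: win x euro on national success (probability p), lose y euro otherwise."""
    return x * p - y * (1 - p)


def expected_value_bet_d_bar(p: float, x: float, y: float) -> float:
    """Bet d-bar: lose x euro on national success, win y euro otherwise."""
    return -x * p + y * (1 - p)


def probability_from_bets(x: float, y: float) -> float:
    """Pr(n) at which the expert is indifferent between d and d-bar: y / (x + y)."""
    if x <= 0 or y <= 0:
        raise ValueError("stakes x and y must be positive")
    return y / (x + y)


# Hypothetical stakes at which the expert reports indifference.
p = probability_from_bets(30, 70)
print(p)  # 0.7
print(expected_value_bet_d(p, 30, 70), expected_value_bet_d_bar(p, 30, 70))
```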

Lottery models — an example

For their new soda, an expert from Colaco is asked to assess the probability of a national success n:

• the expert is offered two lotteries:

lottery d: national success → Hawaiian trip; national failure → chocolate bar

lottery d̄: with probability p(outcome) → Hawaiian trip; with probability p(not outcome) → chocolate bar

• if the expert is indifferent between d and d̄, then Pr(n) = p(outcome);

• the second lottery is termed the reference lottery.

Coherence and calibration

• subjective probabilities are coherent if they adhere to the postulates of probability theory;

• subjective probabilities are well calibrated if they reflect true frequencies.

Overconfidence and underconfidence

• a human expert is an overconfident assessor if, compared with the true frequencies, his subjective probabilities show a tendency towards the extremes;

• a human expert is an underconfident assessor if, compared with the true frequencies, his subjective probabilities show a tendency away from the extremes.

Heuristics

When assessing probabilities, people tend to use simple cognitive heuristics:

• representativeness: the probability of an outcome is based upon the similarity with a stereotype outcome;

• availability: the probability of an outcome is based upon the ease with which similar outcomes are recalled;

• anchoring-and-adjusting: the probability of an outcome is assessed by adjusting an initially chosen anchor probability.

Pitfalls

Using the representativeness heuristic when assessing probabilities can introduce biases:

• the prior probabilities, or base rates, are insufficiently taken into account;

• the assessments are based upon insufficient samples;

• the weights of the characteristics of the stereotype outcome are insufficiently taken into consideration;

• ...

Pitfalls — cntd.

Using the availability heuristic when assessing probabilities can introduce biases:

• the ease of recall from memory is influenced by recency, rareness, and the past consequences for the assessor;

• the ease of recall is further influenced by external stimuli:

Example

• ...

Pitfalls — cntd.

Using the anchoring-and-adjusting heuristic when assessing probabilities can introduce biases:

• the assessor does not choose an appropriate anchor;

• the assessor does not adjust the anchor to a sufficient extent:

Example

• ...

Continuous chance variables

In decision trees, chance variables are discrete:

• a discrete variable C takes a single value from a non-empty finite set of values {c1, ..., cn}, n ≥ 2;

• the distribution associated with C is a probability mass function, which assigns a probability to each value ci of C.

In reality, chance variables can also be continuous:

• a continuous variable C takes a single value within a non-empty range of values [a, b], a < b;

• the distribution associated with C is a probability distribution function, which defines a probability for any interval [x, y] ⊆ [a, b].

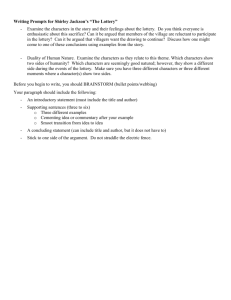

Using continuous chance variables

The following procedure provides for modelling a continuous variable C in a decision tree:

1. construct a cumulative distribution function for C:

• pivoting on the values of C, or

• pivoting on the cumulative probabilities for C;

2. approximate the probability distribution function for C by a probability mass function for a discrete chance variable C′:

• using the Pearson-Tukey method, or

• using bracket medians.

Pivoting on values

For a real-estate agency, the demand for luxury apartments is a continuous chance variable A:

[Figure: probability density of A; horizontal axis: no. of apartments, 50 to 130; vertical axis: 0 to 0.35]

A cumulative distribution function is constructed by

• assessing the cumulative probabilities for a number of values of A:

Pr(A ≤ 65) = 0.08    Pr(A ≤ 90) = 0.90

Pr(A ≤ 80) = 0.65    Pr(A ≤ 110) = 0.97

• and drawing a curve through them:

[Figure: cumulative distribution of A; horizontal axis: no. of apartments, 50 to 130; vertical axis: cum. distribution, 0 to 1]
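Drawing a curve through the assessed points can be approximated in code. Below is a minimal sketch built on three assumptions:

- It interpolates with straight lines between the four assessed points of the example. The slides draw a smooth curve, so values between the points will differ somewhat.
- It does not extrapolate outside 65 to 110 apartments.
- The names `cdf` and `fractile` are illustrative.

```python
from bisect import bisect_right

# (value of A, Pr(A <= value)) as assessed by pivoting on values of A.
ASSESSED = [(65, 0.08), (80, 0.65), (90, 0.90), (110, 0.97)]


def cdf(a: float, points=ASSESSED) -> float:
    """Piecewise-linear approximation of Pr(A <= a) within the assessed range."""
    xs = [x for x, _ in points]
    if not xs[0] <= a <= xs[-1]:
        raise ValueError("a lies outside the assessed range")
    i = bisect_right(xs, a) - 1          # index of the last assessed value <= a
    if i == len(points) - 1:             # a equals the largest assessed value
        return points[-1][1]
    (x0, p0), (x1, p1) = points[i], points[i + 1]
    return p0 + (p1 - p0) * (a - x0) / (x1 - x0)


def fractile(q: float, points=ASSESSED) -> float:
    """Inverse of cdf: the value a with Pr(A <= a) = q, by linear interpolation."""
    if not points[0][1] <= q <= points[-1][1]:
        raise ValueError("q lies outside the assessed probability range")
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        if p0 <= q <= p1:
            return x0 + (x1 - x0) * (q - p0) / (p1 - p0)
    return points[-1][0]  # not reached for valid input; kept for completeness


print(cdf(85))        # 0.65 + 0.25 * 5/10
print(fractile(0.5))  # value of A with Pr(A <= a) = 0.5 under this approximation
```

Under these assumptions the median comes out near 76 apartments. That is close to the value 77 that is assessed directly when pivoting on cumulative probabilities.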

Pivoting on values — cntd.

For assessing cumulative probabilities by pivoting on the values of the variable under study, lottery models can be used.

Example

lottery d: A ≤ 90 apartments → Hawaiian trip; A > 90 apartments → chocolate bar

lottery d̄: p(outcome) → Hawaiian trip; p(not outcome) → chocolate bar

Other elicitation tools can be used as well.

Pivoting on cumulative probabilities

Reconsider the demand for luxury apartments, modelled by a continuous chance variable A:

[Figure: probability density of A; horizontal axis: no. of apartments, 50 to 130; vertical axis: 0 to 0.35]

A cumulative distribution function is constructed by

• finding the values for A that give a number of cumulative probabilities:

Pr(A ≤ a1) = 0.05    Pr(A ≤ a3) = 0.50

Pr(A ≤ a2) = 0.35    Pr(A ≤ a4) = 0.95

• and drawing a curve through them:

[Figure: cumulative distribution of A; horizontal axis: no. of apartments, 50 to 130; vertical axis: cum. distribution, 0 to 1]

Pivoting on cumulative probabilities — cntd.

For assessing cumulative probabilities by pivoting on these cumulative probabilities, lottery models can be used.

Example

lottery d: A ≤ x apartments → Hawaiian trip; A > x apartments → chocolate bar

lottery d̄: p(outcome) = 0.35 → Hawaiian trip; p(not outcome) = 0.65 → chocolate bar

The value x is adjusted until the expert is indifferent between d and d̄, so that Pr(A ≤ x) = 0.35.

Other elicitation tools can be used as well.

The Pearson-Tukey method

The Pearson-Tukey method approximates the distribution function for a continuous variable C by a probability mass function over a discrete variable C′:

• find the values c1, c2, c3 for which

Pr(C ≤ c1) = 0.05    Pr(C ≤ c2) = 0.50    Pr(C ≤ c3) = 0.95

• construct the discrete chance variable C′ with values c1, c2, c3 and probabilities

Pr(C′ = c1) = 0.185    Pr(C′ = c2) = 0.63    Pr(C′ = c3) = 0.185
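Below is a minimal Python sketch of the Pearson-Tukey approximation. It is applied to the fractiles 62, 77 and 99 that the apartment-demand example finds for A, and the function names are illustrative. The three weights sum to one. The mean of A′ comes out at 78.295, which corresponds to the expected demand of about 78 apartments quoted for the agency's tree.

```python
# Weights attached to the 0.05, 0.50 and 0.95 fractiles by the Pearson-Tukey method.
PEARSON_TUKEY_WEIGHTS = (0.185, 0.63, 0.185)


def pearson_tukey(c05: float, c50: float, c95: float) -> list[tuple[float, float]]:
    """Three-point pmf, as (value, probability) pairs, for the discrete variable C'."""
    if not c05 <= c50 <= c95:
        raise ValueError("fractiles must be non-decreasing")
    return list(zip((c05, c50, c95), PEARSON_TUKEY_WEIGHTS))


def expected_value(pmf: list[tuple[float, float]]) -> float:
    """Mean of a discrete distribution given as (value, probability) pairs."""
    return sum(value * prob for value, prob in pmf)


# Fractiles found for the apartment demand A: a1 = 62, a2 = 77, a3 = 99.
a_prime = pearson_tukey(62, 77, 99)
print(a_prime)                  # [(62, 0.185), (77, 0.63), (99, 0.185)]
print(expected_value(a_prime))  # about 78.3 apartments
```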

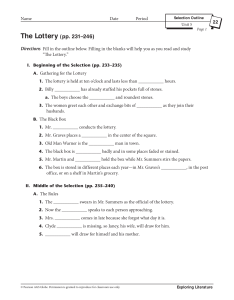

The Pearson-Tukey method — an example

For the real-estate agency, the demand for luxury apartments is a continuous chance variable A, for which probabilities need to be assessed:

• for the cumulative probabilities

Pr(A ≤ a1) = 0.05    Pr(A ≤ a2) = 0.50    Pr(A ≤ a3) = 0.95

we have found a1 = 62, a2 = 77, a3 = 99;

• the variable A′ is constructed with

Pr(A′ = 62) = 0.185    Pr(A′ = 77) = 0.63    Pr(A′ = 99) = 0.185

Bracket medians (Clemen)

The method of bracket medians approximates the distribution function for a continuous variable C by a probability mass function over a discrete variable C′:

1. for a number of equally likely intervals, for example five, find the values of C for which

Pr(C ≤ c1) = 0       Pr(C ≤ c2) = 0.20    Pr(C ≤ c3) = 0.40

Pr(C ≤ c4) = 0.60    Pr(C ≤ c5) = 0.80    Pr(C ≤ c6) = 1.00

2. for each interval [c_i, c_{i+1}], i = 1, ..., 5, establish the bracket median m_i such that

Pr(c_i ≤ C ≤ m_i) = Pr(m_i ≤ C ≤ c_{i+1})

3. construct the discrete chance variable C′ with

Pr(C′ = m1) = Pr(C′ = m2) = Pr(C′ = m3) = Pr(C′ = m4) = Pr(C′ = m5) = 0.20

Bracket medians – more general and practical

With the bracket medians method, using n equally likely intervals, steps 1 and 2 basically provide for finding values mi, i = 1, ..., n, of the chance variable C, such that

$$\Pr(C \le m_{k+1}) = \frac{1}{2n} + k \cdot \frac{1}{n}, \qquad k = 0, \ldots, n-1,$$

to construct, in step 3, the discrete chance variable C′ with

$$\Pr(C' = m_i) = \frac{1}{n}, \qquad i = 1, \ldots, n.$$

Bracket medians — an example

For the real-estate agency, the demand for luxury apartments is a continuous chance variable A, for which probabilities need to be assessed:

• for the five cumulative probabilities

Pr(A ≤ m1) = 0.10    Pr(A ≤ m2) = 0.30    Pr(A ≤ m3) = 0.50

Pr(A ≤ m4) = 0.70    Pr(A ≤ m5) = 0.90

we have found m1 = 65, m2 = 73, m3 = 77, m4 = 81, m5 = 89;

• the variable A′ is constructed with

Pr(A′ = 65) = Pr(A′ = 73) = Pr(A′ = 77) = Pr(A′ = 81) = Pr(A′ = 89) = 0.20
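The general bracket-medians formula is easy to tabulate. Below is a minimal sketch with illustrative function names. For n = 5 it computes the cumulative probabilities 1/(2n) + k/n. It also computes the mean of the resulting A′ for the apartment example, which matches the expected demand of 77 apartments quoted for the agency's tree.

```python
def bracket_median_levels(n: int) -> list[float]:
    """Cumulative probabilities 1/(2n) + k/n, k = 0..n-1, at which the m_i lie."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [(2 * k + 1) / (2 * n) for k in range(n)]


def bracket_median_pmf(medians: list[float]) -> list[tuple[float, float]]:
    """Discrete C' that puts probability 1/n on each of the n bracket medians."""
    n = len(medians)
    return [(m, 1 / n) for m in medians]


def expected_value(pmf: list[tuple[float, float]]) -> float:
    """Mean of a discrete distribution given as (value, probability) pairs."""
    return sum(value * prob for value, prob in pmf)


levels = bracket_median_levels(5)
print(levels)  # [0.1, 0.3, 0.5, 0.7, 0.9]

# Bracket medians found for the apartment demand A.
a_prime = bracket_median_pmf([65, 73, 77, 81, 89])
print(expected_value(a_prime))  # about 77 apartments
```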

The real-estate agency revisited

Reconsider the decision problem for the real-estate agency:

• with the Pearson-Tukey method, the agency’s decision tree includes:

Pr(A′ = 62) = 0.185    Pr(A′ = 77) = 0.63    Pr(A′ = 99) = 0.185

the expected demand is 78.3, or about 78 apartments;

• using bracket medians, the agency’s tree includes:

Pr(A′ = 65) = Pr(A′ = 73) = Pr(A′ = 77) = Pr(A′ = 81) = Pr(A′ = 89) = 0.20

the expected demand is 77 apartments.

M.C. Airport: probability assessments Ia

For each of the identified chance variables, the outcome will depend on the chosen strategy, that is, on activity and site in 1975, 1985 and 1995.

It is assumed that the chance variables are all probabilistically independent of each other, therefore

$$\Pr(C) = \Pr(C_1 \wedge C_2 \wedge C_3 \wedge C_4 \wedge C_5 \wedge C_6) = \prod_{i=1}^{6} \Pr(C_i)$$

Probabilities are to be assessed for

• the outcome of each Ci, i = 1, ..., 6, for each of the ∼100 decision alternatives of D (where D captures the sequence D75, D85, D95).

M.C. Airport: probability assessments Ib

Alternatively, probabilities can be assessed for

• the outcome of each Ci, i = 1, ..., 6, for the 16 decision alternatives of each Dj, j = 75, 85, 95.

This second option

• requires fewer assessments;

• requires easier assessments (Cij vs Ci);

• assumes that Cik is independent of Cij given Dk, j = 75, 85, k = 85, 95;

• requires that for some functions fi, i = 1, ..., 6,

Pr(Ci) = fi(Pr(Ci75), Pr(Ci85), Pr(Ci95)).

M.C. Airport: probability assessments IIa

The required probabilities were established from

• information from previous studies;

• government administrators (group consensus).

For each of the Cij (i = 1, ..., 6, j = 75, 85, 95), cumulative distributions were assessed using

• the fractile method, and

• consistency checks.

Distributions for Ci were derived from Cij by defining

$$C_i \equiv \frac{C_i^{75} + C_i^{85} + C_i^{95}}{3}$$

M.C. Airport: probability assessments IIb

Example

Consider the 1975 noise impact of the ‘all activity at Texcoco’ alternative. To establish Pr(C675 | D75 = T-IDMG), the following numbers are assessed:

min #people = ?    (assessed: 400,000)

max #people = ?    (assessed: 800,000)

Pr(#people ≤ a1) = 0.5 ⇒ a1 = ?    (assessed: 640,000)

Pr(#people ≤ a2) = 0.25 ⇒ a2 = ?    (assessed: 540,000)

Pr(#people ≤ a3) = 0.75 ⇒ a3 = ?    (assessed: 700,000)

etc.

Introduction to utility theory and assessment

The appraisal of consequences

For various decision problems, the fundamental objectives have

a natural numerical scale:

• money;

• percentages;

• length of life;

• ...

For other decision problems, not all objectives have such a

scale:

• reputation;

• attractiveness;

• quality of life;

• ...

For such objectives, a proxy scale may be used, or a new numerical scale needs to be designed.

The gangrene problem

A 68-year-old man is suffering from diabetic gangrene in an injured foot. The attending physician has to choose between two decision alternatives:

• to amputate the leg below the knee,

which involves a small risk of death;

• to wait:

• if untreated, the gangrene may cure;

• if the gangrene expands, an amputation above the

knee becomes necessary, which involves a larger risk

of death.


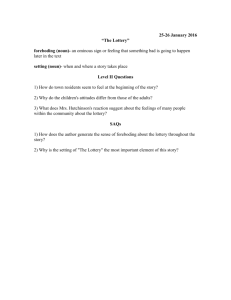

The gangrene problem — continued

The elements of the gangrene problem are organised in the following decision tree:

• amputation below knee:
    survive (p = 0.99) → amputated below knee
    die (p = 0.01) → death

• wait:
    recover (p = 0.70) → cured
    worsen (p = 0.30) → amputation above knee:
        survive (p = 0.90) → amputated above knee
        die (p = 0.10) → death

Before the decision tree can be evaluated, the various consequences need to be assigned numerical appraisals.

The gangrene problem — continued

Reconsider the gangrene problem and compare the following appraisals of the consequences (the values in parentheses at the decision and chance nodes are the expected appraisals of the subtrees):

• amputation below knee (0.89):
    survive (p = 0.99) → amputated below knee: 0.9
    die (p = 0.01) → death: 0

• wait (0.92):
    recover (p = 0.70) → cured: 1.0
    worsen (p = 0.30) → amputation above knee (0.72):
        survive (p = 0.90) → amputated above knee: 0.8
        die (p = 0.10) → death: 0
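The appraisals at the inner nodes of the second tree can be reproduced by rolling back the tree. Chance nodes take the probability-weighted average of their branches, and the decision node takes the best alternative. Below is a minimal sketch. The nested-tuple encoding of the tree is a choice made here for illustration, not the slides' notation.

```python
def rollback(node):
    """Return (expected appraisal, best alternative or None) for a tree node.

    A node is ("value", number), ("chance", [(probability, node), ...])
    or ("decision", {alternative name: node, ...}).
    """
    kind, body = node
    if kind == "value":
        return body, None
    if kind == "chance":
        return sum(p * rollback(child)[0] for p, child in body), None
    if kind == "decision":
        values = {name: rollback(child)[0] for name, child in body.items()}
        best = max(values, key=values.get)
        return values[best], best
    raise ValueError(f"unknown node type: {kind!r}")


gangrene = ("decision", {
    "amputation below knee": ("chance", [
        (0.99, ("value", 0.9)),          # survive: amputated below knee
        (0.01, ("value", 0.0)),          # die: death
    ]),
    "wait": ("chance", [
        (0.70, ("value", 1.0)),          # recover: cured
        (0.30, ("chance", [              # worsen: amputation above knee
            (0.90, ("value", 0.8)),      # survive: amputated above knee
            (0.10, ("value", 0.0)),      # die: death
        ])),
    ]),
})

value, choice = rollback(gangrene)
print(choice, round(value, 3))  # wait 0.916
```

The rolled-back values 0.891, 0.72 and 0.916 round to the 0.89, 0.72 and 0.92 shown in the tree.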

Lotteries

DEFINITION

A lottery is simply a probability distribution over a known, finite set of outcomes, that is, it is a pair L = (R, Pr) with

• R = {r1, ..., rn}, n ≥ 2, a set of rewards;

• Pr a probability distribution over R, with $\sum_{i=1}^{n} \Pr(r_i) = 1$.

A lottery L is commonly denoted

L = [p1, r1; ...; pi, ri; ...; pn, rn]

where pi = Pr(ri), i = 1, ..., n; occasionally, we write [R] for short.

A lottery is graphically represented as a chance node with branches p1, ..., pi, ..., pn leading to the rewards r1, ..., ri, ..., rn.

Types of lottery

There exist different types of lottery:

• a lottery is termed a certain lottery if it has a single reward r with Pr(r) = 1;

• a lottery is called a simple lottery if all its rewards are certain lotteries;

• a lottery is coined a compound lottery if at least one of its rewards is not a certain lottery.

The gangrene problem revisited

Reconsider the gangrene problem. In the problem, several types of lottery occur:

• the certain lottery [1.0, amputated above knee];

• the simple lottery [0.99, amputated below knee; 0.01, death];

• the compound lottery [0.7, cured; 0.3, [0.9, amputated above knee; 0.1, death]].

A preference ordering

DEFINITION

Let L be a set of lotteries. A binary relation ≽ is termed a preference ordering on L if ≽ adheres to the following properties:

• for all Li, Lj ∈ L, we have that Li ≽ Lj or Lj ≽ Li;

• for all Li, Lj, Lk ∈ L, if Li ≽ Lj and Lj ≽ Lk, then Li ≽ Lk.

If Li ≽ Lj, we say that Li is (weakly) preferred over Lj. If Li ≽ Lj but not Lj ≽ Li, Li is strictly preferred over Lj; we then write Li ≻ Lj.

If Li ≽ Lj and Lj ≽ Li, we say that Li and Lj are equivalent; we then write Li ∼ Lj.

The gangrene problem revisited

Reconsider the gangrene problem:

• a preference ordering on the set of all lotteries of the problem specifies a total ordering on the four certain lotteries:

L1 = amputated below knee    L2 = death    L3 = cured    L4 = amputated above knee

it seems evident that L3 ≻ L1 ≻ L4 ≻ L2;

• the ordering also specifies a total ordering on the more complex lotteries:

L5 = [0.99, amputated below knee; 0.01, death]

L6 = [0.90, amputated above knee; 0.10, death]

it seems evident that L5 ≻ L6.

The gangrene problem — continued

Reconsider the gangrene problem, with L3 ≻ L1 ≻ L4 ≻ L2:

L7 = [0.99, amputated below knee; 0.01, death]

L8 = [0.70, cured; 0.30, [0.90, amputated above knee; 0.10, death]]

Does L7 ≻ L8 hold, or is L8 ≻ L7?

The continuity axiom (aka Archimedean axiom)

Let L be a set of lotteries and let ≽ be a preference ordering on L. Then, the continuity axiom asserts:

for all Li, Lj, Lk ∈ L with Li ≽ Lj ≽ Lk, there is a probability p such that [p, Li; (1 − p), Lk] ∼ Lj

Consider three (certain) lotteries Li ≽ Lj ≽ Lk with rewards ri, rj, rk. The axiom states that there exists a probability p such that

[1.0, rj] ∼ [p, ri; (1 − p), rk]

• p is termed the calibration probability for Lj and [p, Li; (1 − p), Lk];

• Lj is termed the certainty equivalent for [p, Li; (1 − p), Lk].

The independence axiom (or: substitutability)

Let L be a set of lotteries and let ≽ be a preference ordering on L. Then, the independence axiom asserts:

for all Li, Lj, Lk ∈ L with Li ∼ Lj and for each probability p, we have that [p, Li; (1 − p), Lk] ∼ [p, Lj; (1 − p), Lk]

Consider three lotteries Li, Lj, Lk. The independence axiom states that if Li and Lj are equivalent, then so are [p, Li; (1 − p), Lk] and [p, Lj; (1 − p), Lk], for all probabilities p.

The unequal probability axiom (or: monotonicity)

Let L be a set of lotteries and let ≽ be a preference ordering on L. Then, the unequal probability axiom asserts:

for all Li, Lj ∈ L with Li ≻ Lj and for all probabilities p, p′ with p ≥ p′, we have

[p, Li; (1 − p), Lj] ≽ [p′, Li; (1 − p′), Lj]

Consider two lotteries Li, Lj with Li ≻ Lj. The unequal probability axiom states that, of two lotteries over Li and Lj, the one giving the preferred Li with the higher probability is at least as preferred: [p, Li; (1 − p), Lj] ≽ [p′, Li; (1 − p′), Lj] for all probabilities p ≥ p′.

The compound lottery axiom

Let L be a set of lotteries and let ≽ be a preference ordering on L. Then, the compound lottery axiom asserts:

for all Li, Lj ∈ L, Lj = [q, Lm; (1 − q), Ln], Lm, Ln ∈ L, 0 ≤ q ≤ 1, and for each probability p, we have

[p, Li; (1 − p), Lj] ∼ [p, Li; (1 − p) · q, Lm; (1 − p) · (1 − q), Ln].

Consider two lotteries Li, Lk with Lk = [q, Li; (1 − q), Lj], Lj ∈ L, 0 ≤ q ≤ 1. The compound lottery axiom states that

[p, Li; (1 − p), Lk] ∼ [p + (1 − p) · q, Li; (1 − p) · (1 − q), Lj]

for all probabilities p. The axiom is also termed “no fun in gambling”.
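The compound lottery axiom lets any compound lottery be reduced to a simple one: multiply the probabilities along each path and add up the results per reward. Below is a minimal sketch, assuming lotteries are encoded as nested lists of (probability, outcome) pairs. That encoding is chosen here for illustration only.

```python
from collections import defaultdict


def flatten(lottery):
    """Reduce a (possibly compound) lottery to a simple lottery over rewards.

    A lottery is a list of (probability, outcome) pairs, where an outcome is
    either a reward (any hashable value that is not a list) or a nested lottery.
    Probabilities along a path are multiplied, as in the compound lottery axiom.
    """
    simple = defaultdict(float)
    for p, outcome in lottery:
        if isinstance(outcome, list):
            for reward, q in flatten(outcome).items():
                simple[reward] += p * q
        else:
            simple[outcome] += p
    return dict(simple)


# L8 from the gangrene problem: [0.70, cured; 0.30, [0.90, amputated above knee; 0.10, death]]
L8 = [(0.70, "cured"), (0.30, [(0.90, "amputated above knee"), (0.10, "death")])]
print(flatten(L8))  # cured 0.7, amputated above knee about 0.27, death about 0.03

# The axiom's own example: [p, Li; (1 - p), [q, Li; (1 - q), Lj]]
p, q = 0.4, 0.5
compound = [(p, "Li"), (1 - p, [(q, "Li"), (1 - q, "Lj")])]
print(flatten(compound))  # Li: p + (1 - p) * q = 0.7, Lj: (1 - p) * (1 - q) = 0.3
```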

A rational preference ordering

DEFINITION

Let L be a set of lotteries. A preference ordering ≽ on L is a rational preference ordering if it adheres to the Von Neumann–Morgenstern utility axioms:

• the continuity axiom;

• the independence axiom;

• the unequal probability axiom;

• the compound lottery axiom.

The assumption of finiteness

Consider the following decision problem:

[Figure: decision tree with a gamble (p = 0.99999 and p = 0.00001); branch and consequence labels: lose all your money, win billions, everything bad, bankruptcy, riches, infinite misery]

The Bayes criterion for choosing between decision alternatives does not help much if the problem involves consequences with an infinite appraisal: such a consequence dominates the expected appraisal, however small its probability.

The main theorem of utility theory

THEOREM

Let L be a set of lotteries and let ≽ be a rational preference ordering on L. Then, there exists a real function u on L such that

• for all Li, Lj ∈ L, we have that Li ≽ Lj iff u(Li) ≥ u(Lj);

• for each [p1, L1; ...; pn, Ln] ∈ L, we have that

$$u([p_1, L_1; \ldots; p_n, L_n]) = \sum_{i=1}^{n} p_i \cdot u(L_i).$$

The function u is termed a utility function on L.

The main theorem — a sketchy proof

Consider two lotteries L and L′ with the rewards r1 ≽ ··· ≽ rn, n ≥ 1:

L = [p1, r1; ...; pn, rn]    L′ = [p′1, r1; ...; p′n, rn]

For each reward ri we can find (by the continuity axiom) a value 0 ≤ ui ≤ 1 such that

[ui, r1; (1 − ui), rn] ∼ ri

Substituting each ri by this equivalent lottery (independence axiom) and collapsing the result (compound lottery axiom), we then have for the two lotteries that

$$L \sim \left[\sum_{i=1}^{n} p_i \cdot u_i,\ r_1;\ \left(1 - \sum_{i=1}^{n} p_i \cdot u_i\right),\ r_n\right]$$

$$L' \sim \left[\sum_{i=1}^{n} p'_i \cdot u_i,\ r_1;\ \left(1 - \sum_{i=1}^{n} p'_i \cdot u_i\right),\ r_n\right]$$

So, by the unequal probability axiom, L ≽ L′ if and only if

$$\sum_{i=1}^{n} p_i \cdot u_i \ge \sum_{i=1}^{n} p'_i \cdot u_i.$$

Some notes

The main theorem of utility theory implies that:

• a lottery with highest utility is a most preferred lottery;

• any rational preference ordering on a set of lotteries is encoded uniquely by the utilities of its certain lotteries.

The main theorem does not imply that:

• a lottery with highest expected reward is a most preferred lottery.

Utility versus expected reward

Example

Consider the set of euro rewards R = {0, 10, 20, 50}, and the following two lotteries over R:

L1 = [0.0, 0; 1.0, 20]    and    L2 = [0.7, 10; 0.3, 50]

Although E_r(L2) = 22 > E_r(L1) = 20, the decision maker may express the preference L1 ≻ L2 without being irrational! Consider two possible utility functions over the rewards, expressing that more money is preferred to less:

u1(0) = 0, u1(10) = 0.2, u1(20) = 0.4, u1(50) = 1

u2(0) = 0, u2(10) = 0.1, u2(20) = 0.6, u2(50) = 1

Note that u1(L1) = 0.40 < u1(L2) = 0.44, but u2(L1) = 0.60 > u2(L2) = 0.37.

Note: even if objectives have a natural numerical scale, preferences (over lotteries) may be such that a utility function is required!
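The comparison in the example can be recomputed directly. Below is a minimal sketch in which `expectation` is an illustrative helper that averages either the reward itself or its utility over a lottery.

```python
def expectation(lottery, f=lambda reward: reward):
    """Probability-weighted average of f(reward) over a simple lottery."""
    return sum(p * f(reward) for p, reward in lottery)


L1 = [(0.0, 0), (1.0, 20)]
L2 = [(0.7, 10), (0.3, 50)]
u1 = {0: 0.0, 10: 0.2, 20: 0.4, 50: 1.0}
u2 = {0: 0.0, 10: 0.1, 20: 0.6, 50: 1.0}

for name, f in [("expected reward", lambda r: r), ("u1", u1.get), ("u2", u2.get)]:
    a, b = expectation(L1, f), expectation(L2, f)
    verdict = "L1 preferred" if a > b else "L2 preferred" if b > a else "indifferent"
    print(f"{name}: L1 = {a:.2f}, L2 = {b:.2f} -> {verdict}")
```

The expected reward and u1 both favour L2 (22.00 against 20.00 and 0.44 against 0.40). u2 favours L1 (0.60 against 0.37).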

Strategic equivalence

DEFINITION

Let L be a set of lotteries. Two utility functions ui, uj on L are strategically equivalent, written ui ∼ uj, if they imply the same preference ordering on L.

Example: Consider the following two utility functions for the gangrene problem:

u1(amputated below knee) = 0.9    u1(death) = 0    u1(cured) = 1.0    u1(amputated above knee) = 0.8

and

u2(amputated below knee) = 2.98    u2(death) = 1    u2(cured) = 3.2    u2(amputated above knee) = 2.76

The functions u1 and u2 are strategically equivalent: u2 = 2.2 · u1 + 1.

A linear transformation of utilities

THEOREM

Let L be a set of lotteries and let ui, uj be two utility functions on L; then

ui ∼ uj  ⟺  uj = a · ui + b, for some constants a, b with a > 0.

A utility function is unique up to a positive linear transformation.
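Strategic equivalence can be checked mechanically in three steps:

1. Fit a and b on the best and the worst consequence.
2. Verify that the same line fits all other consequences.
3. Require a > 0, as the theorem demands.

Below is a minimal sketch with illustrative names. It enters L8 already reduced to the simple lottery [0.70, cured; 0.27, amputated above knee; 0.03, death].

```python
def linear_map(ui: dict, uj: dict, tol: float = 1e-9):
    """Return (a, b) with uj(c) = a * ui(c) + b for every consequence c, else None."""
    worst = min(ui, key=ui.get)
    best = max(ui, key=ui.get)
    if ui[best] == ui[worst]:
        return None
    a = (uj[best] - uj[worst]) / (ui[best] - ui[worst])
    b = uj[worst] - a * ui[worst]
    if all(abs(uj[c] - (a * ui[c] + b)) <= tol for c in ui):
        return a, b
    return None


def strategically_equivalent(ui: dict, uj: dict) -> bool:
    """True if uj is a positive linear transformation of ui."""
    fit = linear_map(ui, uj)
    return fit is not None and fit[0] > 0


def expected_utility(lottery, u):
    """Expected utility of a simple lottery given as (probability, consequence) pairs."""
    return sum(p * u[c] for p, c in lottery)


u1 = {"amputated below knee": 0.9, "death": 0.0, "cured": 1.0, "amputated above knee": 0.8}
u2 = {"amputated below knee": 2.98, "death": 1.0, "cured": 3.2, "amputated above knee": 2.76}

a, b = linear_map(u1, u2)
print(f"u2 = {a:.1f} * u1 + {b:.1f}")  # u2 = 2.2 * u1 + 1.0

lotteries = {
    "L5": [(0.99, "amputated below knee"), (0.01, "death")],
    "L6": [(0.90, "amputated above knee"), (0.10, "death")],
    "L8": [(0.70, "cured"), (0.27, "amputated above knee"), (0.03, "death")],
}
for u in (u1, u2):  # both utility functions rank the lotteries the same way
    print(sorted(lotteries, key=lambda name: expected_utility(lotteries[name], u),
                 reverse=True))
```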

Normalisation

Let X be an attribute with values x1 ... xn, n > 1, ordered from least to most preferred.

Often, utility functions are normalised such that, for example,

u(x1) = 0 and u(xn) = 1

These values only set the origin of u(X) and the unit of measurement.

If we decide to consider, for example, xi ≺ x1 or xj ≻ xn, then u(xi) < 0 and u(xj) > 1, respectively.

The gangrene problem revisited

Reconsider the gangrene problem with

u(amputated below knee) = 0.9    u(death) = 0    u(cured) = 1.0    u(amputated above knee) = 0.8

For the two lotteries

L5 = [0.99, amputated below knee; 0.01, death]

L6 = [0.90, amputated above knee; 0.10, death]

we have that

u(L5) = 0.99 · u(amputated below knee) + 0.01 · u(death) = 0.99 · 0.9 ≈ 0.89

u(L6) = 0.90 · u(amputated above knee) + 0.10 · u(death) = 0.90 · 0.8 = 0.72

So, L5 ≻ L6.
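Normalisation is itself a positive linear transformation. Below is a minimal sketch with an illustrative `normalise` helper. Applied to the strategically equivalent utility function u2 of the gangrene problem (death = 1, cured = 3.2), it recovers the values 0.9, 0, 1.0 and 0.8 used for the gangrene problem.

```python
def normalise(u: dict) -> dict:
    """Rescale utilities so the least preferred value gets 0 and the most preferred 1.

    (v - lo) / (hi - lo) is a positive linear transformation, so the result is
    strategically equivalent to u.
    """
    lo, hi = min(u.values()), max(u.values())
    if hi == lo:
        raise ValueError("utilities must not all be equal")
    return {x: (v - lo) / (hi - lo) for x, v in u.items()}


u2 = {"amputated below knee": 2.98, "death": 1.0, "cured": 3.2, "amputated above knee": 2.76}
print({x: round(v, 2) for x, v in normalise(u2).items()})
```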

The gangrene problem — continued

Reconsider the gangrene problem with

u(amputated below knee) = 0.9    u(death) = 0    u(cured) = 1.0    u(amputated above knee) = 0.8

For the two lotteries

L7 = [0.99, amputated below knee; 0.01, death]

L8 = [0.70, cured; 0.30, [0.90, amputated above knee; 0.10, death]]

we have that

u(L7) = 0.99 · 0.9 ≈ 0.89

u(L8) = 0.70 · 1.0 + 0.30 · 0.90 · 0.8 ≈ 0.92

So, L8 ≻ L7.

Utility assessment

• subjective assessment

    • direct methods

        • magnitude estimation/production

        • ratio estimation/production

    • indirect (behavioural) methods

        • based on reference gambles

• "objective" assessment

    • choose a mathematical function

Direct methods

Magnitude estimation or production can be done using a utility scale:

[Figure: utility scale from 0 through 0.5 to 1.0]

Example

Reconsider the diabetic gangrene treatment example. To assess the utilities for the different treatment consequences, a patient is asked one of the following types of question:

• "How do you value life after an above-knee amputation?" (estimation);

• "Which consequence do you associate with a utility of 0.2?" (production).

Direct methods

Ratio estimation or production:

Example

My sister is interested in buying a new car. To assess the utilities for the different car options, she is asked one of the following types of question:

• "How much more do you value a Volvo than a Fiat?" (estimation);

• "Which car seems to you twice as valuable as a Fiat?" (production).

This method was used to assess the empirical utility of money (Galanter, 1962):

• empirical utility function for monetary gain: ∼ √x

• empirical utility function for monetary loss: ∼ −x²

Reference gamble

Consider a set of consequences for which utilities are to be assessed. Let ci, cj, ck be consequences from that set such that ci ≻ cj ≻ ck.

A reference gamble is a choice between two lotteries:

1. the certain lottery L = [1.0, cj];

2. the simple lottery L′ = [p, ci; (1 − p), ck].

• L′ is the reference lottery of the "gamble"; if u(ci) = 1.0 and u(ck) = 0, then L′ is called a standard reference lottery;

• for fixed ci, cj, and ck, the probability p for which L ∼ L′ is the indifference probability for L and L′;

• for fixed p and ci, ck, the consequence cj for which L ∼ L′ is the certainty equivalent, or indifference point, for L′.

Assessment using certainty equivalents

The utilities of a decision maker for the possible consequences in a decision problem can be assessed using several certainty equivalents:

1. elicit a preference order on consequences from most preferred (c+) to least preferred (c−);

2. assign the first two points of the utility function: u(c+) = 1 and u(c−) = 0;

3. create a standard reference gamble with p = 0.5;

4. elicit the certainty equivalent cCE for the reference lottery;

5. compute the utility of this indifference point;

6. create two reference gambles with p = 0.5 and consequences c+ and cCE, and cCE and c−, respectively;

7. repeat steps 4–6 until enough points are found to draw the utility curve.
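Steps 3 to 7 can be simulated to see how the points of the utility curve accumulate. Below is a minimal sketch under hypothetical assumptions:

- The decision maker is replaced by a function `hypothetical_certainty_equivalent`. It answers as if their hidden utility for a gain of x euro in [0, 100] were √(x/100).
- Step 1 is taken as given: more money is preferred.

In a real assessment, step 4 is a question put to the decision maker.

```python
import math


def hypothetical_certainty_equivalent(best: float, worst: float) -> float:
    """HYPOTHETICAL stand-in for step 4: answers as if v(x) = sqrt(x / 100)."""
    v_target = 0.5 * math.sqrt(best / 100) + 0.5 * math.sqrt(worst / 100)
    return 100 * v_target ** 2  # inverse of v


def assess_by_certainty_equivalents(c_plus, c_minus, ask_ce, rounds):
    """Return {consequence: utility} found with 50-50 reference gambles."""
    points = {c_plus: 1.0, c_minus: 0.0}                  # step 2

    def refine(best, worst, remaining):
        if remaining == 0:                                # step 7: stop repeating
            return
        c_ce = ask_ce(best, worst)                        # steps 3/6 and 4
        points[c_ce] = 0.5 * points[best] + 0.5 * points[worst]  # step 5
        refine(best, c_ce, remaining - 1)                 # step 6: upper gamble
        refine(c_ce, worst, remaining - 1)                # step 6: lower gamble

    refine(c_plus, c_minus, rounds)
    return dict(sorted(points.items()))


print(assess_by_certainty_equivalents(100, 0, hypothetical_certainty_equivalent, 2))
# {0: 0.0, 6.25: 0.25, 25.0: 0.5, 56.25: 0.75, 100: 1.0}
```

Two rounds of step 6 yield five points. Each one lies on the hidden curve, which is what the procedure should recover.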

Computing a utility

Consider the lotteries L and L′ of a reference gamble:

L = [1.0, cj] and L′ = [p, ci; (1 − p), ck]

where ci ≻ cj ≻ ck. Assume that the utilities u(ci) and u(ck) for consequences ci and ck are known.

Question: How do we compute u(cj) for consequence cj?

Answer: If either p is the indifference probability for L and L′, or cj is the certainty equivalent of L′, then L ∼ L′ and consequently

1.0 · u(cj) = p · u(ci) + (1 − p) · u(ck).

From this equation we can solve u(cj).

An example

Suppose you have an old computer for which components are bound to need replacement in the near future. You have a number of replacement alternatives that will cost you between €50 and €500. What is your utility function?

Two points can be fixed:

u(€500) = 0 and u(€50) = 1

You are then presented with the following gamble: the certain lottery [1.0, cCE] versus the reference lottery [0.5, €50; 0.5, €500].

Suppose that cCE = €200 is the indifference point for this gamble. We then have that

1.0 · u(€200) = 0.5 · u(€50) + 0.5 · u(€500) = 0.5
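Solving for u(cj) takes a single line. Below is a minimal sketch with an illustrative function name. It reproduces the computer example and also covers probability equivalents.

```python
def utility_from_reference_gamble(p: float, u_ci: float, u_ck: float) -> float:
    """u(cj) from L ~ L': 1.0 * u(cj) = p * u(ci) + (1 - p) * u(ck)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return p * u_ci + (1 - p) * u_ck


# Computer example: u(€50) = 1, u(€500) = 0, and €200 is the certainty
# equivalent of the reference lottery [0.5, €50; 0.5, €500].
u = {50: 1.0, 500: 0.0}
u[200] = utility_from_reference_gamble(0.5, u[50], u[500])
print(u[200])  # 0.5

# Probability-equivalent use (gangrene): u(cured) = 1, u(death) = 0, indifference at p = 0.9.
u_below_knee = utility_from_reference_gamble(0.9, 1.0, 0.0)
```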

Assessment using probability equivalents

The utilities of a decision maker for the possible consequences in a decision problem can be assessed using several probability equivalents:

1. elicit a preference order on consequences from most preferred (c+) to least preferred (c−);

2. assign the first two points of the utility function: u(c+) = 1 and u(c−) = 0;

3. create a standard reference gamble for enough intermediate outcomes;

4. elicit the indifference probability for the lotteries in each of the gambles;

5. compute the utility of an intermediate outcome using this indifference probability.

An example

Reconsider the decision problem for treatment of diabetic gangrene. The possible consequences of treatment are:

cured: 1.0

amputated below knee: ?

amputated above knee: ?

death: 0.0

The patient is given the following gamble: the certain lottery [1.0, amputated below knee] versus the reference lottery [p, cured; (1 − p), death].

Suppose that p = 0.9 is the indifference probability for the lotteries. We then have that

u(amputated below knee) = 0.9 · u(cured) + 0.1 · u(death) = 0.9

Direct vs indirect methods

direct:

• roots in psychophysics

• inferior in both validity & reliability

• easily applied to risky tasks with complex consequences

indirect:

• roots in utility axioms

• time consuming

• irrelevant "gaming" effect

• distasteful / unethical

• unsuitable for measuring very small or very large utilities

Example: extreme utilities

For the diabetic gangrene decision problem, we could use the following gambles:

• [1.0, amputated above knee] versus [p, amputated below knee; (1 − p), death];

• [1.0, amputated below knee] versus [q, cured; (1 − q), amputated above knee].

Subjective utility assessments

• can change over time

• are required for unknown / not yet experienced outcomes

• are influenced by framing and certainty effects

• are influenced by third parties

• are not comparable from person to person

• ...

Risk attitudes

Risk-neutral preferences

Let X be an attribute with values x1 ... xn, n ≥ 2, measured in some unit. Let L = [p, xi; (1 − p), xj] be a lottery over X.

A decision maker is risk-neutral if each additional unit of X is valued with the same increase in utility.

DEFINITION

A decision maker is risk-neutral if

u(p · xi + (1 − p) · xj) = p · u(xi) + (1 − p) · u(xj),

that is, the utility function is linear. Note that u″(X) = 0.

An Example

Suppose you are given the choice between the following two "games":

[0.5, €5; 0.5, −€1]    and    [0.5, €6; 0.5, −€2]

Do you have a clear preference for playing one or the other?

Risk-averse preferences

Let X be an attribute with values x1 ... xn, n ≥ 2, measured in some unit. Let L = [p, xi; (1 − p), xj] be a lottery over X.

A decision maker is risk-averse if each additional unit of X is valued with a smaller increase in utility.

DEFINITION

A decision maker is risk-averse if

u(p · xi + (1 − p) · xj) > p · u(xi) + (1 − p) · u(xj)

(for 0 < p < 1 and xi ≠ xj), that is, the utility function is concave. Note that u″(X) < 0.

An Example

Suppose you are given the choice between the following two "games":

[0.5, €52; 0.5, −€2]    and    [0.5, €5000; 0.5, −€4950]

Which game would you prefer to play?
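In both pairs of example games the expected amounts are equal and only the spread differs. That is what makes them probes of risk attitude. Below is a short sketch that computes the mean and the standard deviation per game. The standard deviation as a spread measure is an addition made here; the slides do not use it.

```python
import math


def expected_value(lottery):
    """Mean euro amount of a lottery given as (probability, amount) pairs."""
    return sum(p * x for p, x in lottery)


def standard_deviation(lottery):
    """Spread of the euro amounts around the mean."""
    mean = expected_value(lottery)
    return math.sqrt(sum(p * (x - mean) ** 2 for p, x in lottery))


games = {
    "risk-neutral example, game 1": [(0.5, 5), (0.5, -1)],
    "risk-neutral example, game 2": [(0.5, 6), (0.5, -2)],
    "risk-averse example, game 1": [(0.5, 52), (0.5, -2)],
    "risk-averse example, game 2": [(0.5, 5000), (0.5, -4950)],
}
for name, lottery in games.items():
    print(f"{name}: mean {expected_value(lottery):.2f}, "
          f"spread {standard_deviation(lottery):.2f}")
```

A risk-neutral decision maker looks only at the mean, so they are indifferent within each pair. A risk-averse decision maker would pick the game with the smaller spread.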

Risk-prone preferences

Let $X$ be an attribute with values $x_1, \ldots, x_n$, $n \geq 2$, measured in some unit. Let $L = [p, x_i; (1-p), x_j]$ be a lottery over $X$.

A decision maker is risk-prone, or risk-seeking, if each additional unit of $X$ is valued with a larger increase in utility than the previous unit.

DEFINITION

A decision maker is risk-prone if, for all $0 < p < 1$ and $x_i \neq x_j$,

$$u(p \cdot x_i + (1-p) \cdot x_j) < p \cdot u(x_i) + (1-p) \cdot u(x_j),$$

that is, the utility function is convex. Note that $u''(X) > 0$.

An Example

Suppose you are given the choice between the following two "games":

[Figure: two games, with branch probabilities 0.2, 0.8, 0.8 and 0.2 and outcomes €10, €0.10, €2.50 and €1.]

Which game would you prefer to play?
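The three definitions differ only in how $u(p \cdot x_i + (1-p) \cdot x_j)$ compares with $p \cdot u(x_i) + (1-p) \cdot u(x_j)$. The sketch below checks that comparison for a single lottery. The helper name `attitude_on_lottery`, the tolerance `tol` and the three test utilities are hypothetical choices. Note that one lottery can only suggest an attitude, since the definitions require the inequality for all nondegenerate lotteries.

```python
import math

def attitude_on_lottery(u, p, x_i, x_j, tol=1e-12):
    """Compare u(p*x_i + (1-p)*x_j) with p*u(x_i) + (1-p)*u(x_j) for one lottery."""
    lhs = u(p * x_i + (1 - p) * x_j)     # utility of the expected value
    rhs = p * u(x_i) + (1 - p) * u(x_j)  # expected utility of the lottery
    if abs(lhs - rhs) <= tol:            # tolerance absorbs floating-point noise
        return "neutral"
    return "averse" if lhs > rhs else "prone"

# Lottery [0.5, 0; 0.5, 10] with a linear, a concave and a convex utility.
print(attitude_on_lottery(lambda x: 3 * x + 1, 0.5, 0.0, 10.0))            # neutral
print(attitude_on_lottery(lambda x: -math.exp(-0.2 * x), 0.5, 0.0, 10.0))  # averse
print(attitude_on_lottery(lambda x: math.exp(0.2 * x), 0.5, 0.0, 10.0))    # prone
```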

Risk attitudes

The zero-illusion curve:

[Figure: two plots of $u(X)$ against $X$, with parts of the curves labelled RA (risk averse) and RS (risk seeking).]

Discounting

• the process of translating future rewards to their present value;

• necessary to perform "cost-benefit" analyses now.

Examples

• the time value of money:

  – the value of a euro depends on when it is available;

  – euros can be invested to yield more euros;

• the time value of life:

  – life years in the future are less valuable than life years today (?);

  – life years are valued relative to money.
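As a small illustration of translating a future amount to its present value, the sketch below uses continuous discounting with weight $e^{-\delta \cdot t}$, the same weight that appears in the length-of-life utility on the next slide. The rate $\delta = 0.02$ and the €100 amount are assumed numbers, not data, and `present_value` is a hypothetical helper.

```python
import math

def present_value(amount, years, delta):
    """Value today of `amount` received after `years`, discounted continuously at rate delta."""
    return amount * math.exp(-delta * years)

# Assumed figures: EUR 100 received in 10 years, delta = 0.02 per year.
print(round(present_value(100.0, 10, 0.02), 2))               # 81.87

# Discount weights e^(-delta*t) at t = 0, 10 and 25 years.
print([round(math.exp(-0.02 * t), 3) for t in (0, 10, 25)])   # [1.0, 0.819, 0.607]
```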

A discounting factor

A discounting factor indicates how much less a patient values each successive year of life, compared to the previous year. The utility function for length of life with a constant discounting factor $\delta$ is approximated by

$$u(x) = \int_{t=0}^{x} e^{-\delta \cdot t}\, dt$$

for life expectancy $x$.

An example

Consider the following 'standard reference gamble', in which a sure life expectancy of $x$ years is compared with a lottery over 25 and 0 years:

$$[1.0, x \text{ years}] \sim [0.50, 25 \text{ years}; 0.50, 0 \text{ years}]$$

For a utility function for length of life with a constant discounting factor $\delta = 0.02$, we find:

$$u(25 \text{ years}) = \int_{t=0}^{25} e^{-0.02 \cdot t}\, dt = 19.67 \quad \text{and} \quad u(0 \text{ years}) = \int_{t=0}^{0} e^{-0.02 \cdot t}\, dt = 0$$

The patient should be indifferent between the two lotteries for a life expectancy $x$ for which

$$u(x) = 0.5 \cdot 19.67 + 0.5 \cdot 0 = 9.835$$

From $\int_{t=0}^{x} e^{-0.02 \cdot t}\, dt = 9.835$ we find that $x \approx 11$ years.
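The numbers in this example can be reproduced with a short Python sketch. It relies on the closed form $\int_{0}^{x} e^{-\delta t}\, dt = (1 - e^{-\delta x})/\delta$ for $\delta > 0$, cross-checks it with a trapezoidal rule, and inverts it as $x = -\ln(1 - \delta \cdot u)/\delta$. The function names and the number of integration steps are our own choices. Without the intermediate rounding to 19.67, the indifference point comes out at about 10.95 years, consistent with $x \approx 11$.

```python
import math

def u_length_of_life(x, delta):
    """Closed form of the integral of e^(-delta*t) from 0 to x (assumes delta > 0)."""
    return (1.0 - math.exp(-delta * x)) / delta

def u_numeric(x, delta, steps=10_000):
    """Trapezoidal approximation of the same integral, as a cross-check."""
    h = x / steps
    total = 0.5 * (1.0 + math.exp(-delta * x))  # endpoint weights e^0 and e^(-delta*x)
    total += sum(math.exp(-delta * k * h) for k in range(1, steps))
    return total * h

def inverse_u(value, delta):
    """Solve u(x) = value for x; requires delta > 0 and delta * value < 1."""
    return -math.log(1.0 - delta * value) / delta

delta = 0.02
u25 = u_length_of_life(25, delta)
target = 0.5 * u25 + 0.5 * u_length_of_life(0, delta)  # expected utility of the gamble
x = inverse_u(target, delta)
print(round(u25, 2), round(u_numeric(25, delta), 2), round(x, 2))  # 19.67 19.67 10.95
```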

The risk-premium

Let $X$ be an attribute with values $x_1, \ldots, x_n$, $n \geq 2$, measured in some unit. Let $u(X)$ be a utility function over $X$.

Consider a lottery $L = [p, x_i; (1-p), x_j]$ over $X$. Let $x_C$ be the certainty equivalent of lottery $L$ and $x_E$ the expected value of $L$.

The risk premium $RP$ of $L$ is defined as:

$$RP = \begin{cases} x_E - x_C & \text{if } u(X) \text{ is increasing} \\ x_C - x_E & \text{if } u(X) \text{ is decreasing} \end{cases}$$

An example

Let $u(x) = -e^{-0.2x}$ for all $x \in [0, 50]$. Consider the lottery $L = [0.5, 0; 0.5, 10]$. Compute the risk premium for this lottery.

The expected value $x_E$ of the lottery is $0.5 \cdot 0 + 0.5 \cdot 10 = 5$.

The certainty equivalent $x_C$ of the lottery is determined from

$$u(x_C) = 0.5 \cdot u(0) + 0.5 \cdot u(10) = -0.5 - 0.5 \cdot e^{-2} \approx -0.57,$$

which gives $x_C = -\frac{1}{0.2} \ln(0.5 + 0.5 \cdot e^{-2}) \approx 2.83$.

As $u(x)$ is an increasing function, the risk premium for $L$ is $RP = 5 - 2.83 = 2.17$.

Exercise

Let $u(x) = -\log(x + 30)$ for all $x > -30$. Consider the lottery $L = [0.5, -20; 0.5, -10]$. What is the lottery's risk premium? Is the decision maker risk averse or risk prone?
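A sketch of the risk-premium definition in Python, under the assumption that $u$ is continuous and strictly monotone on the range of the lottery's outcomes, so that the certainty equivalent lies between the smallest and largest outcome and can be found by bisection. The function names are hypothetical. Applied to the example it reproduces $x_C \approx 2.83$ and $RP \approx 2.17$; because it handles decreasing utilities as well, the same code can be used to check an answer to the exercise.

```python
import math

def is_increasing(lottery, u):
    # Compare u at the extreme outcomes; assumes u is strictly monotone on that range.
    lo = min(x for _, x in lottery)
    hi = max(x for _, x in lottery)
    return u(hi) > u(lo)

def certainty_equivalent(lottery, u, iters=200):
    """Bisection for x_C with u(x_C) equal to the lottery's expected utility."""
    target = sum(p * u(x) for p, x in lottery)
    lo = min(x for _, x in lottery)
    hi = max(x for _, x in lottery)
    increasing = is_increasing(lottery, u)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        # For increasing u, u(mid) < target means x_C lies above mid;
        # for decreasing u, it means x_C lies below mid.
        if (u(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def risk_premium(lottery, u):
    x_e = sum(p * x for p, x in lottery)
    x_c = certainty_equivalent(lottery, u)
    # RP = x_E - x_C for increasing u, and x_C - x_E for decreasing u.
    return x_e - x_c if is_increasing(lottery, u) else x_c - x_e

L = [(0.5, 0.0), (0.5, 10.0)]
u = lambda x: -math.exp(-0.2 * x)
print(round(certainty_equivalent(L, u), 2), round(risk_premium(L, u), 2))  # 2.83 2.17
```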

Risk averseness & risk premium

A decision maker is

• risk averse iff his/her risk premium is positive for all nondegenerate lotteries;

• decreasingly risk averse iff he/she is risk averse and his/her risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ decreases (↓ 0) as $x$ increases;

• increasingly risk averse iff he/she is risk averse and his/her risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ increases (↑ ∞) as $x$ increases;

• constantly risk averse iff he/she is risk averse and his/her risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ remains constant for all $x$.

Risk proneness & risk premium

A decision maker is

• risk prone iff his/her risk premium is negative for all nondegenerate lotteries;

• decreasingly risk prone iff he/she is risk prone and his/her risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ increases (↑ 0) as $x$ increases;

• increasingly risk prone iff he/she is risk prone and his/her risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ decreases (↓ −∞) as $x$ increases;

• constantly risk prone iff he/she is risk prone and his/her risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ remains constant for all $x$.
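The constant and decreasing cases of risk aversion can be illustrated with two utilities whose certainty equivalents for $[0.5, x-h; 0.5, x+h]$ have closed forms. Both utilities are assumptions chosen for illustration: $u(x) = -e^{-ax}$ with $a > 0$, and $u(x) = \ln x$. Both are increasing, so the sketch computes the risk premium as $x_E - x_C$ with $x_E = x$. For the exponential utility the premium stays at about 2.17 for every $x$ (with $a = 0.2$ and $h = 5$, the case $x = 5$ is the lottery of the earlier example), while for $\ln x$ it shrinks towards 0 as $x$ grows.

```python
import math

def rp_exponential(x, h, a):
    # u(x) = -e^(-a*x), a > 0 (assumed). neg_eu is minus the expected utility,
    # so x_C solves e^(-a*x_C) = neg_eu.
    neg_eu = 0.5 * math.exp(-a * (x - h)) + 0.5 * math.exp(-a * (x + h))
    x_c = -math.log(neg_eu) / a
    return x - x_c

def rp_log(x, h):
    # u(x) = ln(x), x > h (assumed). x_C is the geometric mean of x - h and x + h.
    x_c = math.sqrt((x - h) * (x + h))
    return x - x_c

print([round(rp_exponential(x, 5.0, 0.2), 4) for x in (5.0, 20.0, 100.0)])  # [2.1689, 2.1689, 2.1689]
print([round(rp_log(x, 5.0), 3) for x in (10.0, 20.0, 100.0)])              # [1.34, 0.635, 0.125]
```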

The degree of risk aversion / proneness – Introduction

Information regarding a decision maker's risk attitude is given by

RP: its sign indicates aversion vs proneness; its magnitude captures the degree of this behaviour, for one specific lottery!

$u''(X)$: its sign indicates aversion vs proneness; its magnitude conveys no relevant information, since strategically equivalent functions capture the same risk attitude:

[Figure: plots of $u(x) = -3e^{-x}$ and $u(x) = 1 - e^{-x}$ against $X$.]

The risk-aversion function

DEFINITION

Consider a utility function $u(X)$, and let $\sigma \in \{+, -\}$ denote the sign of $u'(x)$. The risk-aversion function $R(X)$ for $u(X)$ is then defined by

$$R(X) = -\sigma \frac{u''(X)}{u'(X)}$$

• if $R(X) > 0$ then the decision maker is risk averse;

• if $R(X) < 0$ then the decision maker is risk prone;

• if $R(X) = 0$ then the decision maker is risk neutral.

THEOREM

$R(X)$ is increasing (decreasing, constant) iff the decision maker's risk premium for any lottery $[0.5, x-h; 0.5, x+h]$ is increasing (decreasing, constant) for increasing $x$.

[Figure: two plots along the $X$ axis marking $x - h$, $x$ ($x_E$), $x + h$, the certainty equivalent and the risk premium RP.]
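A short worked derivation for the two strategically equivalent utilities of the introduction: their second derivatives differ by a factor 3, but the risk-aversion function is the same. Both utilities are increasing, so $\sigma = +$; indeed $u_2 = 1 + \tfrac{1}{3} u_1$ is a positive affine transformation of $u_1$.

```latex
\begin{align*}
u_1(x) &= -3e^{-x}, & u_1'(x) &= 3e^{-x} > 0, & u_1''(x) &= -3e^{-x}, & R_1(x) &= -\frac{-3e^{-x}}{3e^{-x}} = 1,\\
u_2(x) &= 1 - e^{-x}, & u_2'(x) &= e^{-x} > 0, & u_2''(x) &= -e^{-x}, & R_2(x) &= -\frac{-e^{-x}}{e^{-x}} = 1.
\end{align*}
```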

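An example

Let $u(x) = 1 - e^{-x/900}$, $x > 0$, be a utility function. Find the risk-aversion function for $X$.

We have that

$$u'(x) = \frac{1}{900} \cdot e^{-x/900}, \quad x > 0$$

and

$$u''(x) = \frac{-1}{900^2} \cdot e^{-x/900}, \quad x > 0$$

Since $u(x)$ is an increasing function in $x$ ($u'(x) > 0$ for all $x > 0$), the risk-aversion function is defined as

$$R(X) = -\frac{u''(X)}{u'(X)}$$

Substituting the derivatives gives

$$R(X) = -\frac{-\frac{1}{900^2} \cdot e^{-X/900}}{\frac{1}{900} \cdot e^{-X/900}} = \frac{1}{900}$$

We conclude that $R(X) = \frac{1}{900}$, a constant, and that $u(X)$ models constant risk aversion.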
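The result can be sanity-checked numerically. The sketch below estimates $R(x) = -\sigma \, u''(x)/u'(x)$ with central finite differences. The step $h = 1$ is an assumption chosen relative to the scale 900 of this utility, and `risk_aversion` is a hypothetical helper, not a library function. The estimate multiplied by 900 equals 1 (to six decimals) at every $x$ tried, as expected for constant risk aversion.

```python
import math

def u(x):
    # Utility from the example: u(x) = 1 - e^(-x/900), x > 0
    return 1.0 - math.exp(-x / 900.0)

def risk_aversion(u, x, h=1.0):
    """Finite-difference estimate of R(x) = -sigma * u''(x) / u'(x)."""
    d1 = (u(x + h) - u(x - h)) / (2 * h)            # central estimate of u'(x)
    d2 = (u(x + h) - 2 * u(x) + u(x - h)) / (h * h)  # central estimate of u''(x)
    sigma = math.copysign(1.0, d1)                   # sign of u'(x)
    return -sigma * d2 / d1

for x in (10.0, 300.0, 2000.0):
    print(x, round(900 * risk_aversion(u, x), 6))   # 1.0 for each x
```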