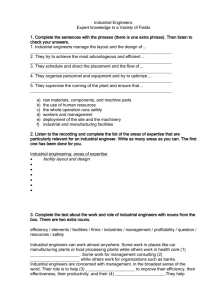

System Engineering Competency

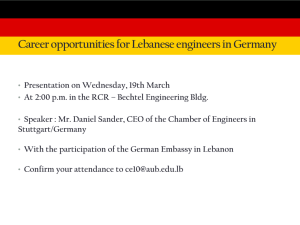

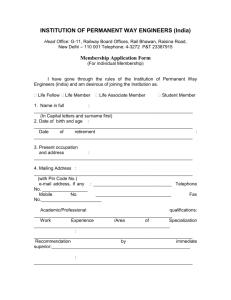
