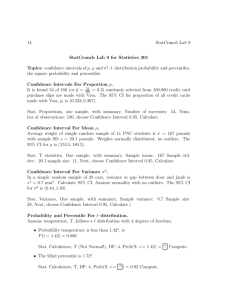

Chapter 7 Solutions
