lecture slides (single page) - School of Informatics


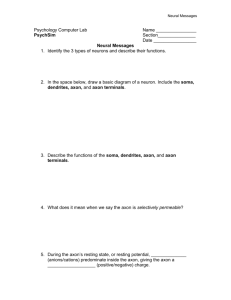

Neural Information Processing: Introduction

Mark van Rossum, School of Informatics, University of Edinburgh, January 2015

Course Introduction

- Welcome
- Administration
- Books, papers
- Class papers
- Assignments + exam
- Tutor
- Maths level

Course goal and outline

1. Computational methods to gain better insight into neural coding and computation, because:
   - the neural code is complex: distributed and high-dimensional
   - data collection is becoming better
2. Biologically inspired algorithms and hardware.

More concretely:

- Neural coding: encoding and decoding.
- Statistical models: modelling neural activity and neuro-inspired machine learning.
- Unconventional computing: dynamics and attractors.

Relationships between courses

- NC: wider introduction, but less mathematical than NIP (van Rossum, first term)
- CCN: cognition and coding (Series)
- PMR: pure machine-learning perspective (Storkey)

Real Neurons

The fundamental unit of all nervous system tissue is the neuron.

[Figure: diagram of a neuron labelling the cell body (soma), nucleus, dendrites, axon, axonal arborization, synapses, and an axon arriving from another cell. Source: Russell and Norvig, 1995]

A neuron consists of:

- a soma, the cell body, which contains the cell nucleus
- dendrites: input fibres which branch out from the cell body
- an axon: a single long (output) fibre which branches out over a distance that can vary between 1 cm and 1 m
- synapses: connecting junctions between the axon and other cells

How neurons signal:

- Each neuron can form synapses with anywhere between 10 and 10^5 other neurons.
- Signals are propagated at the synapse through the release of chemical transmitters, which raise or lower the electrical potential of the cell.
- When the potential reaches a threshold value, an action potential is sent down the axon.
- The action potential eventually reaches the synapses, which release transmitters that affect subsequent neurons.
- Synapses can be inhibitory (lower the post-synaptic potential) or excitatory (raise the post-synaptic potential).
- Synapses can also exhibit long-term changes of strength (plasticity) in response to the pattern of stimulation.

Assumptions

- We will not consider the biophysics of neurons.
- We will ignore non-linear interactions between inputs.
- Spikes can be modelled as rate-modulated random processes.
- We will ignore the biophysical details of plasticity.

Recent developments: Neurobiology technique [Stevenson and Kording, 2011]

- Recordings from many neurons at once: the number of simultaneously recorded neurons grows exponentially, analogous to Moore's law.

Recent developments: Computing Hardware [Furber et al., 2014]

- The speed limit of a single CPU has been reached.
- Renewed call for parallel hardware and algorithms, including brain-inspired ones (slow, noisy, energy-efficient).

Recent developments: (Deep) Machine Learning [Le et al., 2012]

- Neural network algorithms, developed 20 years ago, were considered superseded.
- Thanks to a few tricks and very large-scale training, these algorithms are suddenly top performers in vision, audition and natural language processing.

References

- Furber, S. B., Galluppi, F., Temple, S., and Plana, L. A. (2014). The SpiNNaker project. Proceedings of the IEEE, 102(5):652–665.
- Le, Q. V., Ranzato, M., Monga, R., Devin, M., Chen, K., Corrado, G. S., Dean, J., and Ng, A. Y. (2012). Building high-level features using large scale unsupervised learning. In ICML 2012: 29th International Conference on Machine Learning, Edinburgh, Scotland, June 2012.
- Stevenson, I. H. and Kording, K. P. (2011). How advances in neural recording affect data analysis. Nat Neurosci, 14(2):139–142.
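The Assumptions slide states that spikes can be modelled as rate-modulated random processes. One common concrete choice, assumed here rather than taken from the slides, is the inhomogeneous Poisson process, in which spikes occur independently with an instantaneous rate r(t). The minimal Python sketch below generates such a spike train by thinning: candidate events are drawn from a homogeneous Poisson process at an upper-bound rate `rate_max`, and each candidate at time t is kept with probability r(t)/`rate_max`. The function name `rate_modulated_spikes` and the sinusoidal example rate are hypothetical illustrations.

```python
import math
import random


def rate_modulated_spikes(rate, rate_max, t_max, seed=None):
    """Return spike times (s) on [0, t_max) from an inhomogeneous Poisson process.

    rate:     function of time t (s) returning the firing rate in Hz;
              must satisfy 0 <= rate(t) <= rate_max on [0, t_max).
    rate_max: upper bound on the rate (Hz), used for thinning.
    """
    if rate_max <= 0:
        raise ValueError("rate_max must be positive")
    rng = random.Random(seed)
    spikes = []
    t = 0.0
    while True:
        # Candidate event from a homogeneous Poisson process at rate_max:
        # inter-event intervals are exponential with mean 1 / rate_max.
        t += rng.expovariate(rate_max)
        if t >= t_max:
            break
        r = rate(t)
        if not 0.0 <= r <= rate_max:
            raise ValueError(f"rate({t:.4f}) = {r} is outside [0, rate_max]")
        # Thinning: keep the candidate with probability rate(t) / rate_max.
        if rng.random() < r / rate_max:
            spikes.append(t)
    return spikes


if __name__ == "__main__":
    # Hypothetical stimulus-driven rate: 20 Hz baseline, modulated at 2 Hz.
    stim_rate = lambda t: 20.0 + 15.0 * math.sin(2.0 * math.pi * 2.0 * t)
    train = rate_modulated_spikes(stim_rate, rate_max=35.0, t_max=10.0, seed=1)
    print(len(train), "spikes; expected about 200")
```

For this example rate over 10 s, the expected spike count is the integral of the rate, 200 spikes; individual runs fluctuate around that value with a Poisson standard deviation of about 14. A simpler alternative is to divide time into small bins of width Δt and emit a spike with probability r(t)Δt in each bin, which is accurate only when r(t)Δt is much smaller than 1.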