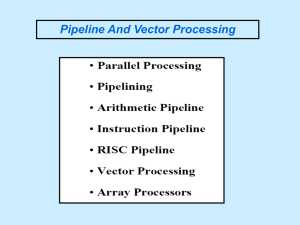

What Is A Pipeline?


RT and Embedded Systems
Lecture 6: ARM cores, part 2
Wrocław 2013

Pipelining

What Is A Pipeline?
• Pipelining is used by virtually all modern microprocessors to enhance performance by overlapping the execution of instructions.
• Within a CPU pipeline, each instruction is broken up into different stages.
• Ideally, if the stages are balanced (all stages are ready to start at the same time and take an equal amount of time to execute), the time taken per instruction (pipelined) is:
  Time per instruction (unpipelined) / Number of stages
• If the stages of a pipeline are not balanced and one stage is slower than another, the throughput of the entire pipeline is affected.
• In a CPU, pipelining reduces the average instruction time and therefore the average CPI (Clocks per Instruction).
• Example: if each instruction in a microprocessor takes 5 clock cycles (unpipelined) and we have a 4-stage pipeline, the ideal average CPI with the pipeline will be 1.25.

Classical 5-stage pipeline
• Usually we have a 5-cycle-deep pipeline:
  – Instruction Fetch Cycle
  – Instruction Decode/Register Fetch Cycle
  – Execution Cycle
  – Memory Access Cycle
  – Write-Back Cycle

Instruction Fetch (IF) Cycle
• During the IF cycle the instruction decoder fetches an instruction from instruction memory.

Instruction Decode (ID)/Register Fetch Cycle
• The instruction is decoded and, at the same time, the values of the registers involved are read.
• As the registers are being read, the equality test is done in case the instruction decodes as a branch or jump.
• The instruction can be decoded in parallel with reading the registers because the register addresses are at fixed locations.

Execution (EX)/Effective Address Cycle
• If a branch or jump did not occur in the previous cycle, the arithmetic logic unit (ALU) can execute the instruction.
• At this point the instruction falls into one of three types:
  – Memory Reference: the ALU adds the base register and the offset to form the effective address.
  – Register-Register: the ALU performs the arithmetic, logical, etc. operation specified by the opcode.
  – Register-Immediate: the ALU performs the operation on the register and the (sign-extended) immediate value.

Memory Access (MEM) Cycle
• For a load, the effective address computed in the previous cycle is referenced and the memory is read. The actual data transfer to the register does not occur until the next cycle.
• For a store, the data from the register is written to the effective address in memory.

Write-Back (WB) Cycle
• Occurs with Register-Register ALU instructions or load instructions.
• A simple operation: whether the instruction is a register-register operation or a memory load, the resulting data is written to the appropriate register.

Problems With The Previous Figure
• The memory is accessed twice during each clock cycle. This problem is avoided by using separate data and instruction caches.
• It is important to note that if the clock period is the same for a pipelined processor and a non-pipelined processor, the memory must work five times faster.
• Another problem is that the registers are accessed twice every clock cycle. To avoid a resource conflict, we perform the register write in the first half of the cycle and the read in the second half of the cycle.
• We write in the first half so that the written value can be read in the same cycle by another instruction further down the pipeline.
• A third problem arises from the interaction of the pipeline with the PC. We use an adder to increment the PC by the end of IF. Within ID we may branch and modify the PC. How does this affect the pipeline?

Pipeline Hazards
• The performance gain from using pipelining occurs because we can start the execution of a new instruction each clock cycle.
In a real implementation this is not always possible.
• Another important note is that in a pipelined processor, a particular instruction still takes at least as long to execute as in a non-pipelined one.
• Pipeline hazards prevent the execution of the next instruction in its designated clock cycle.

Types Of Hazards
• There are three types of hazards in a pipeline:
  – Structural Hazards: created when the data path hardware in the pipeline cannot support all of the overlapped instructions in the pipeline.
  – Data Hazards: occur when an instruction in the pipeline affects the result of another instruction in the pipeline.
  – Control Hazards: arise from the pipelining of branches and other instructions that change the PC.

A Hazard Will Cause A Pipeline Stall
• We can look at pipeline performance in terms of a faster clock cycle time as well:

$$\text{Speedup} = \frac{\text{CPI}_{\text{unpipelined}}}{\text{CPI}_{\text{pipelined}}} \times \frac{\text{Clock cycle time}_{\text{unpipelined}}}{\text{Clock cycle time}_{\text{pipelined}}}$$

$$\text{Clock cycle time}_{\text{pipelined}} = \frac{\text{Clock cycle time}_{\text{unpipelined}}}{\text{Pipeline depth}}$$

$$\text{Speedup} = \frac{1}{1 + \text{Pipeline stalls per instruction}} \times \text{Pipeline depth}$$

Dealing With Structural Hazards
• Structural hazards result from the CPU data path not having enough resources to service all the overlapping instructions.
• Suppose a processor can either read or write the registers in one clock cycle, but not both. This would cause a conflict between the ID and WB stages.
• Assume that there are no separate instruction and data caches, and only one memory access can occur during one clock cycle. A hazard would arise between the IF and MEM cycles.
• A structural hazard is dealt with by inserting a stall, or pipeline bubble, into the pipeline. This means that for that clock cycle, nothing happens for that instruction. This effectively “slides” that instruction, and subsequent instructions, by one clock cycle.
• This effectively increases the average CPI.
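The stall-based speedup relation can be checked numerically. The following is a minimal Python sketch; the function name `pipeline_speedup` and the sample stall rates are illustrative assumptions, not part of the lecture. It assumes an ideal CPI of 1 and perfectly balanced stages, as the formula does.

```python
def pipeline_speedup(depth, stalls_per_instruction=0.0):
    """Speedup = pipeline depth / (1 + pipeline stalls per instruction).

    Assumes balanced stages and an ideal (stall-free) CPI of 1.
    """
    if depth < 1 or stalls_per_instruction < 0:
        raise ValueError("depth must be >= 1 and stalls must be >= 0")
    return depth / (1.0 + stalls_per_instruction)


if __name__ == "__main__":
    # Hypothetical stall rates for a 5-stage pipeline.
    for stalls in (0.0, 0.25, 1.0):
        print(f"stalls/instr = {stalls:4.2f} -> speedup = "
              f"{pipeline_speedup(5, stalls):.2f}")
```

With no stalls the speedup equals the pipeline depth; every stall cycle per instruction added to the denominator pulls it back toward 1.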
Dealing With Structural Hazards (cont’d)

$$\text{Speedup} = \frac{\text{CPI}_{\text{no haz}}}{\text{CPI}_{\text{haz}}} \times \frac{\text{Clock cycle time}_{\text{no haz}}}{\text{Clock cycle time}_{\text{haz}}}$$

$$\text{Speedup} = \frac{1}{1 + 0.4 \times 1} \times \frac{1}{1/1.05} = 0.75$$

• We can see that even though the clock speed of the processor with the hazard is a little faster, the speedup is still less than 1.
• Therefore the hazard has quite an effect on the performance.
• Sometimes computer architects will opt to design a processor that exhibits a structural hazard. Why?
  – A: The improvement to the processor data path is too costly.
  – B: The hazard occurs rarely enough that the processor will still perform to specifications.

Data Hazards (A Programming Problem?)
• We haven’t looked at assembly programming in detail at this point.
• Consider the following operations:
    DADD R1, R2, R3
    DSUB R4, R1, R5
    AND  R6, R1, R7
    OR   R8, R1, R9
    XOR  R10, R1, R11

Pipeline Registers
What are the problems?

Data Hazard Avoidance
• In this trivial example, the programmer cannot be expected to reorder the operations, assuming this is the only code we want to execute.
• Data forwarding can be used to solve this problem.
• To implement data forwarding we need to bypass the pipeline register flow:
  – Output from the EX/MEM and MEM/WB stages must be fed back into the ALU input.
  – We need routing hardware that detects when the next instruction depends on the write of a previous instruction.

General Data Forwarding
• It is easy to see how data forwarding can be used by drawing out the pipelined execution of each instruction.
• Now consider the following instructions:
    DADD R1, R2, R3
    LD   R4, 0(R1)
    SD   R4, 12(R1)

Problems
• Can data forwarding prevent all data hazards?
• NO!
• The following operations will still cause a data hazard. This happens because the further down the pipeline we get, the less we can use forwarding.
    LD   R1, 0(R2)
    DSUB R4, R1, R5
    AND  R6, R1, R7
    OR   R8, R1, R9
• We can avoid the hazard by using a pipeline interlock.
• The pipeline interlock will detect when data forwarding will not be able to get the data to the next instruction in time.
• A stall is introduced until the instruction can get the appropriate data from the previous instruction.

Control Hazards
• Control hazards are caused by branches in the code.
• Remember that during the IF stage the PC is incremented by 4 in preparation for the IF cycle of the next instruction.
• What happens if a branch is performed and we aren’t simply incrementing the PC by 4?
• The easiest way to deal with the occurrence of a branch is to perform the IF stage again once the branch occurs.

Performing IF Twice
• We take a big performance hit by repeating the instruction fetch whenever a branch occurs. Note that this happens whether the branch is taken or not. This guarantees that the PC will get the correct value.
    branch  IF ID EX MEM WB
            IF ID EX MEM WB
            IF IF ID EX MEM WB
• This method will work, but as always in computer architecture we should try to make the most common operation fast and efficient.
• By performing IF twice we will encounter a performance hit of between 10% and 30%.
• Next class we will look at some methods for dealing with Control Hazards.

Control Hazards (other solutions)
• What if every branch is treated as “not taken”? Recall that during ID not only are the registers read, but an equality test is also performed to determine whether a branch is needed.
• The performance can be improved by assuming that the branch will not be taken.
• The complexity arises when the branch is evaluated and we end up needing to actually take the branch.
• If the branch is actually taken, then the pipeline needs to be cleared of any instructions loaded from the “not-taken” path.
• Likewise, it can be assumed that the branch is always taken.
• The next method for dealing with a control hazard is to implement a “delayed” branch scheme.
• In this scheme an instruction is inserted into the pipeline that is useful and not dependent on whether the branch is taken or not. It is the job of the compiler to determine the delayed branch instruction.

How To Implement a Pipeline

Multi-clock Operations
• Sometimes operations require more than one clock cycle to complete. Examples are:
  – Floating Point Multiply
  – Floating Point Divide
  – Floating Point Add

Dependences and Hazards
• Types of data hazards:
  – RAW: read after write
  – WAW: write after write
  – WAR: write after read
• The RAW hazard was already shown. WAW hazards occur due to output dependence.
• WAR hazards do not usually occur because of the amount of time between the read cycle and the write cycle in a pipeline.

Dynamic Scheduling
• In the statically scheduled pipeline the instructions are fetched and then issued. If the user’s code has a data or control dependence, it is hidden by forwarding.
• If the dependence cannot be hidden, a stall occurs.
• Dynamic Scheduling is an important technique in which both the dataflow and the exception behavior of the program are maintained.
• Data dependence can cause stalling in a pipeline that has “long” execution times for instructions that have dependencies.
• EX: Let’s consider this code (.D is floating point):
    DIV.D F0, F2, F4
    ADD.D F10, F0, F8
    SUB.D F12, F8, F14
• Longer execution times of certain floating point operations give the possibility of WAW and WAR hazards. EX:
    DIV.D F0, F2, F4
    ADD.D F6, F0, F8
    SUB.D F8, F10, F14
    MUL.D F6, F10, F8
• If we want to execute instructions out of order in hardware (if they are not dependent, etc.), we need to modify the ID stage of the 5-stage pipeline.
• Split ID into the following stages:
  – Issue: decode instructions, check for structural hazards.
  – Read Operands: wait until there are no data hazards, then read operands.
• IF still precedes ID and will store the instruction into a register or queue.
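The three dependence types (RAW, WAW, WAR) can be found mechanically by comparing the destination and source registers of each pair of instructions. The following is a minimal Python sketch applied to the four-instruction floating-point sequence from the slides; the tuple encoding and the function name `find_dependences` are assumptions made for illustration. It compares every earlier/later pair and deliberately ignores whether an intervening write would hide a dependence, which a real issue stage would have to consider.

```python
from itertools import combinations

# (opcode, destination register, source registers), in program order.
PROGRAM = [
    ("DIV.D", "F0", ("F2", "F4")),
    ("ADD.D", "F6", ("F0", "F8")),
    ("SUB.D", "F8", ("F10", "F14")),
    ("MUL.D", "F6", ("F10", "F8")),
]


def find_dependences(program):
    """Return (kind, earlier_index, later_index, register) tuples."""
    found = []
    for (i, earlier), (j, later) in combinations(enumerate(program), 2):
        _, e_dst, e_src = earlier
        _, l_dst, l_src = later
        if e_dst in l_src:      # later reads what earlier writes
            found.append(("RAW", i, j, e_dst))
        if l_dst in e_src:      # later writes what earlier reads
            found.append(("WAR", i, j, l_dst))
        if l_dst == e_dst:      # both write the same register
            found.append(("WAW", i, j, l_dst))
    return found


if __name__ == "__main__":
    for kind, i, j, reg in find_dependences(PROGRAM):
        print(f"{kind}: {PROGRAM[j][0]} depends on {PROGRAM[i][0]} via {reg}")
```

For this sequence the sketch reports a RAW on F0 (DIV.D to ADD.D), a WAR on F8 (ADD.D to SUB.D), a WAW on F6 (ADD.D to MUL.D) and a RAW on F8 (SUB.D to MUL.D).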
Branch Prediction In Hardware
• Data hazards can be overcome by dynamic hardware scheduling; control hazards also need to be addressed.
• Branch prediction is extremely useful for repetitive branches, such as loops.
• A simple branch predictor can be implemented using a small amount of memory indexed by the lower-order bits of the address of the branch instruction.
• Each entry only needs to contain one bit, representing whether the branch was taken or not.
• If the branch is taken, the bit is set to 1. The next time the branch instruction is fetched we will know that the branch occurred and we can assume that the branch will be taken.
• This scheme adds some “history” to our previous discussion of “branch taken” and “branch not taken” control hazard avoidance.

2-bit Prediction Scheme
• This method is more reliable than using a single bit to represent whether the branch was recently taken or not.
• The use of a 2-bit predictor allows branches that favor taken (or not taken) to be mispredicted less often than in the one-bit case.

Branch Predictors
• Increasing the size of the branch predictor memory will only increase its effectiveness so much.
• We also need to address the effectiveness of the scheme used. Just increasing the number of bits in the predictor doesn’t do very much either.
• Some other predictors include:
  – Correlating Predictors
  – Tournament Predictors
• Correlating predictors use the history of a local branch AND some overall information on how branches are executing to decide whether the branch will be taken.
• Tournament predictors are even more sophisticated in that they use multiple predictors, local and global, and choose between them with a selector to improve accuracy.
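The difference between the one-bit and 2-bit schemes is easy to see on a loop branch. The following is a minimal Python sketch of a table of saturating counters indexed by the low-order bits of the branch address; the table size, the branch address `0x400`, the initial counter value of 0 and the loop pattern are all illustrative assumptions, not taken from the lecture.

```python
def count_mispredictions(outcomes, bits, pc=0x400, table_size=16):
    """Simulate an n-bit saturating-counter predictor for one branch.

    bits=1 gives the one-bit scheme, bits=2 the 2-bit scheme.
    The counter is selected by low-order bits of the branch address.
    """
    max_count = (1 << bits) - 1
    threshold = 1 << (bits - 1)        # predict taken at or above this
    table = [0] * table_size           # all counters start at "not taken"
    index = (pc >> 2) % table_size     # drop the 2 byte-offset bits
    mispredictions = 0
    for taken in outcomes:
        predicted_taken = table[index] >= threshold
        if predicted_taken != taken:
            mispredictions += 1
        if taken:
            table[index] = min(table[index] + 1, max_count)
        else:
            table[index] = max(table[index] - 1, 0)
    return mispredictions


if __name__ == "__main__":
    # A loop branch: taken 9 times, then falls through; run 10 times.
    pattern = ([True] * 9 + [False]) * 10
    print("1-bit:", count_mispredictions(pattern, bits=1))
    print("2-bit:", count_mispredictions(pattern, bits=2))
```

On this pattern the one-bit predictor mispredicts twice per loop execution (on exit and again on re-entry), while the 2-bit counter mispredicts only on exit once it has warmed up.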
ARM cores, part 2

Plan
• ARM9
• AMBA
• Cortex-M
• Cortex-R

ARM9
Source: [2]

ARM9 - features
• Over 5 billion ARM9 processors have been shipped so far
• The ARM9 family is the most popular ARM processor family ever
• 250+ silicon licensees
• 100+ licensees of the ARM926EJ-S processor
• ARM9 processors continue to be successfully deployed across a wide range of products and applications.
• The ARM9 family offers proven, low-risk and easy-to-use designs which reduce costs and enable rapid time to market.
• The ARM9 family consists of three processors: ARM926EJ-S, ARM946E-S and ARM968E-S

ARM9 – family features
• Main features
  – Based on ARMv5TE architecture
  – Efficient 5-stage pipeline for faster throughput and system performance
    – Fetch/Decode/Execute/Memory/Writeback
  – Supports both ARM and Thumb® instruction sets
    – Efficient ARM-Thumb interworking allows optimal mix of performance and code density
  – Harvard architecture: separate Instruction & Data memory interfaces
    – Increased available memory bandwidth
    – Simultaneous access to I & D memory
    – Improved performance
  – 31 x 32-bit registers
  – 32-bit ALU & barrel shifter
  – Enhanced 32-bit MAC block

ARM9 – DSP enhancements
• Single cycle 32x16 multiplier implementation
  – Speeds up all multiply instructions
  – Pipelined design allows one 16x16 or 32x16 to start each cycle
• New 32x16 and 16x16 multiply instructions
  – Allow independent access to 16-bit halves of registers
  – Gives efficient use of 32-bit bandwidth for packed 16-bit operands
  – ARM ISA provides 32x32 multiply instructions
• Efficient fractional saturating arithmetic
  – QADD, QSUB, QDADD, QDSUB
• Count leading zeros instruction
  – CLZ for faster normalisation and division
Source: [3]

ARM9 – features comparison

AMBA

AMBA - Advanced Microcontroller Bus Architecture
• AMBA – on-chip communications standard for designing high-performance embedded microcontrollers, introduced by ARM in 1996
• Several bus protocols:
  – AHB (Advanced High-performance Bus)
  – ASB (Advanced System Bus)
  – APB (Advanced Peripheral Bus)
  – AXI

AMBA – first specification
• Buses defined:
  – Advanced System Bus (ASB)
  – Advanced Peripheral Bus (APB)

AMBA 2 – specification
• Buses defined:
  – Advanced High-performance Bus (AHB) - widely used on ARM7, ARM9 and ARM Cortex-M based designs
  – Advanced System Bus (ASB)
  – Advanced Peripheral Bus (APB2 or APB)

AMBA 3 – specification
• Buses defined:
  – Advanced eXtensible Interface (AXI3 or AXI v1.0) - widely used on ARM Cortex-A processors including Cortex-A9
  – Advanced High-performance Bus Lite (AHB-Lite v1.0)
  – Advanced Peripheral Bus (APB3 v1.0)
  – Advanced Trace Bus (ATB v1.0)

AMBA 4 – specification
• Buses defined:
  – AXI Coherency Extensions (ACE) - widely used on the latest ARM Cortex-A processors including Cortex-A7 and Cortex-A15
  – AXI Coherency Extensions Lite (ACE-Lite)
  – Advanced eXtensible Interface 4 (AXI4)
  – Advanced eXtensible Interface 4 Lite (AXI4-Lite)
  – Advanced eXtensible Interface 4 Stream (AXI4-Stream v1.0)
  – Advanced Trace Bus (ATB v1.1)
  – Advanced Peripheral Bus (APB4 v2.0)

APB
• APB – designed for low-power system modules, for example register interfaces on system peripherals
• optimized for minimal power consumption and reduced interface complexity to support peripheral functions
• It has to support 32-bit, 66 MHz signals.
ASB
• ASB – designed for high-performance system modules
• alternative system bus suitable for use where the high-performance features of AHB are not required
• also supports the efficient connection of:
  – processors,
  – on-chip memories,
  – off-chip external memory interfaces
  with low-power peripheral macrocell functions

AHB
• AHB – designed for high-performance, high clock frequency system modules
• acts as the high-performance system backbone bus
• supports the efficient connection of:
  – processors,
  – on-chip memories,
  – off-chip external memory interfaces
  with low-power peripheral macrocell functions
• Features:
  – single edge clock protocol
  – split transactions
  – several bus masters
  – burst transfers
  – pipelined operations
  – single-cycle bus master handover
  – non-tristate implementation
  – large bus-widths (64/128 bit).

AHB-Lite
• AHB-Lite is a subset of AHB
• This subset simplifies the design for a bus with a single master

AXI
• AXI – designed for high-performance, high clock frequency system modules with low latency
• enables high-frequency operation without using complex bridges
• provides flexibility in the implementation of interconnect architectures
• is backward-compatible with existing AHB and APB interfaces.
• Features:
  – separate address/control and data phases
  – support for unaligned data transfers using byte strobes
  – burst-based transactions with only the start address issued
  – issuing of multiple outstanding addresses with out-of-order responses
  – easy addition of register stages to provide timing closure.
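The byte-strobe mechanism behind AXI unaligned transfers can be illustrated with a little arithmetic: on the first beat of a write that starts at an unaligned address, only the byte lanes at or above the start address carry valid data. The following is a minimal Python sketch under simplifying assumptions: the function name `first_beat_strobes` is hypothetical, each beat is assumed to be as wide as the data bus, and bit n of the returned mask stands for the strobe of byte lane n.

```python
def first_beat_strobes(start_addr, bus_bytes=4):
    """Return the byte-lane strobe mask for the first beat of a write.

    Bit n of the result enables byte lane n. Lanes below the unaligned
    start address are left disabled. Assumes the beat is bus-wide.
    """
    if bus_bytes <= 0 or bus_bytes & (bus_bytes - 1):
        raise ValueError("bus width in bytes must be a power of two")
    first_lane = start_addr % bus_bytes
    all_lanes = (1 << bus_bytes) - 1
    return all_lanes & ~((1 << first_lane) - 1)


if __name__ == "__main__":
    for addr in (0x1000, 0x1001, 0x1003):
        print(f"addr {addr:#06x}: strobes = {first_beat_strobes(addr):04b}")
```

An aligned start address enables every lane, so the unaligned case costs nothing extra in the address phase; only the strobes differ.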
Typical AMBA system

Cortex-M
Source: [2]

Cortex family
• The Cortex family is currently being actively introduced to the market by ARM
• The Cortex family consists of three subfamilies:
  – Cortex-M – cores for microcontrollers and cost-sensitive applications; Thumb-2 instructions supported
  – Cortex-R – cores for real-time system applications; ARM, Thumb and Thumb-2 instructions supported
  – Cortex-A – the most complex and the most powerful cores, for multimedia devices and application processors; ARM, Thumb and Thumb-2 instructions supported
Source: [4]

Cortex-M
• Main features:
  – 32-bit processor
  – 3-stage pipelining
  – Thumb-2 instruction set – concise and efficient code
  – Many power saving modes and domains
  – Nested Vectored Interrupt Controller – well-defined times and methods of invoking interrupts
  – RTOS support
  – Debugger support (JTAG, SWD – Serial Wire Debug)
Source: [2]

Cortex-M0/M0+
Source: [2]

Cortex-M0
• Main features:
  – The smallest of the ARM cores
  – The most power-saving of the ARM cores – only 85 µW/MHz
  – Upward compatibility with Cortex-M3
  – Only 12000 gates
  – Only 56 C-optimized instructions
  – Support for low power wireless communication: Bluetooth Low Energy (BLE), ZigBee, etc.
  – Performance 0.9 DMIPS/MHz
  – Single cycle 32x32 multiply instructions
  – Interrupt execution delay: 16 cycles
Source: [2]

Cortex-M0
• Processor modes:
  – Thread mode: used to execute application software. The processor enters Thread mode when it comes out of reset.
  – Handler mode: used to handle exceptions. The processor returns to Thread mode when it has finished all exception processing.
Cortex-M0 – core registers

Cortex-M0 – memory map

Cortex-M0 – vector table

Cortex-M0 – register stacking
Source: [2]

Cortex-M1
• Core destined for FPGA applications
• Support for Actel, Altera and Xilinx chips
• Easy migration from FPGA (development) to ASIC (production)
Source: [2]

Cortex-M1
• Main features:
  – A general-purpose 32-bit microprocessor, which executes the ARMv6-M subset of the Thumb-2 instruction set and offers high performance operation and small size in FPGAs.
  – It has:
    • a three-stage pipeline
    • a three-cycle hardware multiplier
    • little-endian format for accessing all memory.
  – A system control block containing memory-mapped control registers.
  – An integrated Operating System (OS) extensions system timer.
  – An integrated Nested Vectored Interrupt Controller (NVIC) for low-latency interrupt processing.
  – A memory model that supports accesses to both memory and peripheral registers.
  – Integrated and configurable Tightly Coupled Memories (TCMs)
  – Optional debug support.
Source: [2]

Cortex-M1
• Processor modes as in Cortex-M0
Source: [2]

Cortex-M1 – Memory Map
Source: [2]

Cortex-M3
• Main features:
  – Introduced to the market in 2004
  – Destined for the most demanding microcontrollers
  – High performance and many additional features
  – Low power consumption (12.5 DMIPS/mW)
  – Up to 240 interrupt sources
  – Support for many serial protocols
  – Performance of 1.25 DMIPS/MHz
  – Support for bit operations
  – Single cycle 32x32-bit multiply; 2-12 cycle division
  – Three-stage pipelining with branch prediction
  – Memory Protection Unit (MPU)
  – Max speed: up to 275 MHz / 340 DMIPS

Cortex-M3
• Core features:
  – Thumb instruction set (ARMv7)
  – Banked Stack Pointer
  – Hardware integer divide instructions
  – Automatic processor state saving and restoration for low latency Interrupt Service Routine (ISR) entry and exit.
Cortex-M3
• NVIC (Nested Vectored Interrupt Controller) features:
  – External interrupts, configurable from 1 to 240.
  – Bits of priority, configurable from 3 to 8.
  – Dynamic reprioritization of interrupts.
  – Priority grouping - selection of preempting interrupt levels and non-preempting interrupt levels.
  – Support for tail-chaining and late arrival of interrupts. This enables back-to-back interrupt processing without the overhead of state saving and restoration between interrupts.
  – Processor state automatically saved on interrupt entry, and restored on interrupt exit, with no instruction overhead.
  – Optional Wake-up Interrupt Controller (WIC), providing ultra-low power sleep mode support.

Cortex-M3
• MPU features:
  – Eight memory regions.
  – Sub Region Disable (SRD), enabling efficient use of memory regions.
  – The ability to enable a background region that implements the default memory map attributes.

Cortex-M3
• Bus interfaces:
  – Three Advanced High-performance Bus-Lite (AHB-Lite) interfaces: ICode, DCode, and System bus interfaces.
  – Private Peripheral Bus (PPB) based on Advanced Peripheral Bus (APB) interface.
  – Bit-band support that includes atomic bit-band write and read operations.
  – Memory access alignment.
  – Write buffer for buffering of write data.
  – Exclusive access transfers for multiprocessor systems.

Cortex-M3
• The processor supports two modes of operation, Thread mode and Handler mode:
  – The processor enters Thread mode on Reset, or as a result of an exception return. Privileged and Unprivileged code can run in Thread mode.
  – The processor enters Handler mode as a result of an exception. All code is privileged in Handler mode.

Cortex-M3
• The processor can operate in one of two operating states:
  – Thumb state. This is normal execution running 16-bit and 32-bit halfword-aligned Thumb instructions.
  – Debug state. This is the state when the processor is in halting debug.
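The bit-band support listed among the bus interfaces maps every bit of a bit-band region to its own word in an alias region, so a single word write changes exactly one bit. The following is a minimal Python sketch of that address calculation. The region base addresses are the values given in ARM's Cortex-M3 documentation, not in these slides, and the function name `bit_band_alias` is an assumption made for illustration.

```python
# (bit-band region base, alias region base), per ARM's Cortex-M3 documentation.
BIT_BAND_REGIONS = [
    (0x20000000, 0x22000000),  # SRAM
    (0x40000000, 0x42000000),  # peripherals
]
BIT_BAND_REGION_SIZE = 0x00100000  # each bit-band region covers 1 MB


def bit_band_alias(byte_addr, bit):
    """Return the alias word address for one bit of a bit-band byte.

    alias = alias_base + (byte offset * 32) + (bit number * 4)
    """
    if not 0 <= bit <= 7:
        raise ValueError("bit must be in the range 0..7")
    for region_base, alias_base in BIT_BAND_REGIONS:
        if region_base <= byte_addr < region_base + BIT_BAND_REGION_SIZE:
            return alias_base + (byte_addr - region_base) * 32 + bit * 4
    raise ValueError("address is outside the bit-band regions")


if __name__ == "__main__":
    print(hex(bit_band_alias(0x20000000, 7)))  # bit 7 of the first SRAM byte
    print(hex(bit_band_alias(0x40000004, 2)))  # bit 2 of a peripheral byte
```

Each byte occupies 32 bytes of alias space (eight bits times one 4-byte word per bit), which is why the 1 MB bit-band region needs a 32 MB alias region.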
Cortex-M3 – bit band mapping
Source: [2]

Cortex-M4
• Main features:
  – The richest version of the Cortex-M subfamily
  – Destined for low power digital signal applications
  – Integrated 32-bit CPU and DSP
  – Single precision FPU unit
  – Other features as in Cortex-M3
  – DSP instructions
  – Max speed: up to 300 MHz / 375 DMIPS

Cortex-M4
• FPU features:
  – 32-bit instructions for single-precision (C float) data-processing operations.
  – Combined Multiply and Accumulate instructions for increased precision (Fused MAC).
  – Hardware support for conversion, addition, subtraction, multiplication with optional accumulate, division, and square-root.
  – Hardware support for denormals and all IEEE rounding modes.
  – 32 dedicated 32-bit single precision registers, also addressable as 16 double-word registers.
  – Decoupled three-stage pipeline.

Cortex-M4 - FPU
• FPU registers:
  – sixteen 64-bit doubleword registers, D0-D15
  – or thirty-two 32-bit single-word registers, S0-S31
Source: [2]

Cortex-R4
• Main features:
  – A mid-range processor for use in deeply-embedded, real-time systems
  – Includes Thumb-2 technology for optimum code density and processing throughput
  – Integrated 32-bit CPU and DSP
  – Single precision FPU unit (in R4F versions)
  – ARM and Thumb instructions
  – Tightly-Coupled Memory (TCM) ports for low-latency and deterministic accesses to local RAM, in addition to caches for higher performance to general memory
  – High-speed Advanced Microprocessor Bus Architecture (AMBA) Advanced eXtensible Interfaces (AXI) for master and slave interfaces
  – Dynamic branch prediction with a global history buffer, and a 4-entry return stack
  – The ability to implement and use redundant core logic, for example, in fault detection
  – ECC (Error Correcting Codes) - optional single-bit error correction and two-bit error detection for cache and/or TCM memories with ECC bits
  – A Harvard L1 memory system with:
    • optional Tightly-Coupled Memory (TCM) interfaces with support for error correction or parity checking memories
    • optional caches with support for optional error correction schemes
    • optional ARMv7-R architecture Memory Protection Unit (MPU)
    • optional parity and Error Checking and Correction (ECC) on all RAM blocks.
  – An L2 memory interface:
    • single 64-bit master AXI interface
    • 64-bit slave AXI interface to TCM RAM blocks and cache RAM blocks.

Cortex-R4
• Operating modes:
  – User (USR) mode - the usual mode for the execution of ARM or Thumb programs.
  – Fast interrupt (FIQ) mode - entered on taking a fast interrupt.
  – Interrupt (IRQ) mode - entered on taking a normal interrupt.
  – Supervisor (SVC) mode - a protected mode for the operating system, entered on taking a Supervisor Call (SVC), formerly SWI.
  – Abort (ABT) mode - entered after a data or instruction abort.
  – System (SYS) mode - a privileged user mode for the operating system.
  – Undefined (UND) mode - entered when an Undefined instruction exception occurs.

Cortex-R4 – register set

Cortex-R4 – status register
Source: [2]

Cortex-R5
• Main features:
  – Improved (extended) version of the Cortex-R4 processor
  – Added hardware Accelerator Coherency Port (ACP) to reduce the requirement for slow software cache maintenance operations when sharing memory with other masters
  – Added Vector Floating-Point v3
  – Added Multiprocessing Extensions for multiprocessing functionality
  – Added Low Latency Peripheral Port for integration of latency-sensitive peripherals with the processor

Cortex-R5
• Implementation example:

Cortex-R5
• VFP v3-D16:
  – The FPU fully supports single-precision and double-precision add, subtract, multiply, divide, multiply and accumulate, and square root operations
  – provides conversions between fixed-point and floating-point data formats, and floating-point constant instructions
  – includes 16 double-precision registers

Cortex-R5
• Vector instructions:
Source: [2]

Cortex-R7
• Main features:
  – The highest performing Cortex-R processor
  – On a 40 nm G process the Cortex-R7 processor can be implemented to run at well over 1 GHz, at which it delivers over 2700 Dhrystone MIPS
  – On a 28 nm process the performance is estimated to reach 4600 Dhrystone MIPS
  – Eleven-stage pipeline with instruction prefetch, branch prediction, superscalar and out-of-order execution
  – Divide and floating-point
  – 2.53 Dhrystone MIPS/MHz
  – Added LLRAM – low latency memory port designed specifically to connect to local memory (64-bit)

Thank you for your attention

References
[1] ARM7TDMI core documentation; www.arm.com
[2] www.arm.com
[3] ARM9 family documentation; www.arm.com
[4] Cortex family documentation; www.arm.com
[5] Pipelining: Basic and Intermediate Concepts; http://www.engr.mun.ca/~venky/Pipelining.ppt