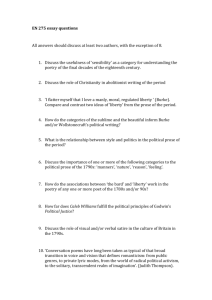

The temporal structure of spoken language understanding
