Natural Language Processing: Linguistic Structure

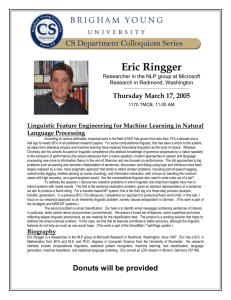

advertisement

NLP

Natural Language Processing

Linguistic Structure

Ian Lewin

Rebholz Group

EBI


Hard Times

“Now, what I want is, Facts” (not documents)

Mr. Gradgrind, in Hard Times by Charles Dickens


Learning Objectives

To understand . . .

key concepts in linguistic analysis

the variety of options in linguistic processing

their use in recent information extraction (IE) systems, and their potential

The PPI (protein-protein interaction) task: given a full-text article, identify pairs of interacting proteins


Contents

1 Introduction

2 Linguistic Structure and Analysis

Varieties of syntactic rules

Syntactic categories

Ambiguity & Parse Selection

Dependency analyses

3 Use of syntax in selected IE Systems

4 Summary


Introduction

Reprise of Part One

Documents are “bags of words” and named entities


A document

Dickkopf-1 (Dkk-1) is a potent head inducer in Xenopus. This effect can be attributed to its capability to specifically inhibit Wnt/beta-catenin signalling. Recent data point to a crucial role for Dkk-1 in the control of programmed cell death during vertebrate limb development. In this paper, we present a comparative expression analysis of Dkk-1, Bmp-4 and Sox-9 as well as data on the regulation of Dkk-1 by Wnt. Finally, we summarize the current knowledge of its potential function in the developing limb and present a model how the interplay of the Bmp, Fgf and Wnt signalling pathways might differentially regulate programmed cell death versus chondrogenic differentiation in limb mesodermal cells.


A Bag of words

word: count

dkk-1: 4

wnt: 3

limb: 3

data: 2

present: 2

death: 2

cell: 2

. . .
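A bag of words like the one above can be sketched in a few lines of Python. This is a minimal sketch under assumptions that are ours, not the slides': tokens are lower-cased runs of letters and digits (with hyphenated parts kept together, so "Dkk-1" stays one token), and a small hypothetical stop-word list removes function words such as "the" and "of", which the counts above ignore.

```python
import re
from collections import Counter

ABSTRACT = """Dickkopf-1 (Dkk-1) is a potent head inducer in Xenopus.
This effect can be attributed to its capability to specifically inhibit
Wnt/beta-catenin signalling. Recent data point to a crucial role for Dkk-1
in the control of programmed cell death during vertebrate limb development.
In this paper, we present a comparative expression analysis of Dkk-1, Bmp-4
and Sox-9 as well as data on the regulation of Dkk-1 by Wnt. Finally, we
summarize the current knowledge of its potential function in the developing
limb and present a model how the interplay of the Bmp, Fgf and Wnt signalling
pathways might differentially regulate programmed cell death versus
chondrogenic differentiation in limb mesodermal cells."""

# Hypothetical stop-word list: just enough function words for this example.
STOP_WORDS = {"a", "an", "and", "as", "by", "can", "for", "how", "in", "is",
              "its", "of", "on", "the", "this", "to", "we", "well"}

# A token is a run of letters/digits, optionally joined by hyphens (dkk-1).
TOKEN = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")

def bag_of_words(text):
    """Count lower-cased tokens, ignoring word order and stop words."""
    tokens = TOKEN.findall(text.lower())
    return Counter(t for t in tokens if t not in STOP_WORDS)

bag = bag_of_words(ABSTRACT)
for word in ["dkk-1", "wnt", "limb", "data", "present", "death", "cell"]:
    print(word, bag[word])
```

With this tokenization the counts agree with the table; note that "cells" is counted separately from "cell", since no stemming is done.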


Linguistic Structure and Analysis

Linguistic structure

Documents are not just bags of words.

Documents have structure

Sentences have structure

Words have structure


Traditional Components of Language

Phonetics

Phonology

Morphology

Lexicon

Syntax

Semantics

Pragmatics and Discourse


Example: Arithmetic grammar

1 + 2∗3

E → identifier

E → E + E

E → (E)

E → E ∗ E

[Figure: a parse tree for 1 + 2∗3 built from these rules, with the identifiers 1, 2 and 3 at the leaves]


Grammars and structure

1 + 2∗3

[Figure: two different parse trees for 1 + 2∗3 under the same grammar, one grouping it as (1 + 2)∗3 and the other as 1 + (2∗3)]
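The ambiguity can be made explicit by enumerating every analysis of a token sequence under the four rules. This Python sketch is our own illustration: it brackets each analysis fully, writes ∗ as *, and treats any token other than +, *, ( and ) as an identifier.

```python
from functools import lru_cache

OPERATORS = {"+", "*"}
BRACKETS = {"(", ")"}

def all_parses(tokens):
    """Return every analysis of tokens as an E under the ambiguous grammar
    E -> identifier | E + E | E * E | ( E ), each shown fully bracketed."""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def parse(i, j):
        # All analyses of the span tokens[i:j] as an E.
        results = []
        if j - i == 1 and tokens[i] not in OPERATORS | BRACKETS:
            results.append(tokens[i])                     # E -> identifier
        if j - i >= 3 and tokens[i] == "(" and tokens[j - 1] == ")":
            results.extend(parse(i + 1, j - 1))           # E -> ( E )
        for k in range(i + 1, j - 1):                     # E -> E op E
            if tokens[k] in OPERATORS:
                for left in parse(i, k):
                    for right in parse(k + 1, j):
                        results.append(f"({left} {tokens[k]} {right})")
        return tuple(results)

    return list(parse(0, len(tokens)))

print(all_parses(["1", "+", "2", "*", "3"]))
# ['(1 + (2 * 3))', '((1 + 2) * 3)']
```

Adding explicit brackets to the input, as in ( 1 + 2 ) * 3, leaves only one analysis; the grammar itself says nothing about operator precedence.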

Varieties of syntactic rules

There are several varieties of syntax

They have different properties

You can take an engineering perspective or a theoretical perspective


Regular Expressions

Regular expressions are one way to capture patterns in language

a matches “a” and ab matches “ab”

a(b|c)d matches “abd” and “acd”

(ab)+ matches “ab”, “abab”, “ababab”, . . .

a(b?)c matches “ac” and “abc”

if a and b are expressions, so is ab

if a and b are expressions, so is a|b

if a is an expression, so is a∗, a?, a+ . . .
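The examples above can be checked with Python's standard `re` module, one of the many tools that support regular expressions (the choice of Python is ours). `re.fullmatch` is used so that a pattern must cover the whole string, and the non-capturing group `(?:...)` is used when building larger expressions from smaller ones.

```python
import re

# The slide's patterns, each with strings it should match in full.
EXAMPLES = {
    r"a": ["a"],
    r"ab": ["ab"],
    r"a(b|c)d": ["abd", "acd"],
    r"(ab)+": ["ab", "abab", "ababab"],
    r"a(b?)c": ["ac", "abc"],
}

def matches(pattern, text):
    """True only if the whole text matches (re.search would accept substrings)."""
    return re.fullmatch(pattern, text) is not None

for pattern, strings in EXAMPLES.items():
    for s in strings:
        print(f"{pattern:10} {s:8} {matches(pattern, s)}")

# Composing expressions, as in the inductive rules: ab, a|b and a*.
a, b = "x", "y"
concatenation = f"(?:{a})(?:{b})"     # ab
alternation = f"(?:{a})|(?:{b})"      # a|b
star = f"(?:{a})*"                    # a*
print(matches(concatenation, "xy"), matches(alternation, "y"), matches(star, ""))
```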


Tools

Lots of tools support regular expressions

java.util.regex

Perl, Python, Ruby, . . .

Emacs, Microsoft Word, . . .

monq


The Chomsky family: Symbol rewrite grammars

a set of rules: $X_0 \ldots X_i \rightarrow Y_0 \ldots Y_j$

over a set of non-terminal symbols (NT)

and a set of terminal symbols (T)

a distinguished non-terminal symbol (start symbol)

a notion of derivation

Different restrictions on rules give different types of language


Chomsky’s family

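Type 3: $X \rightarrow Y_0$ or $X \rightarrow Y_0\, Y_1$, where $X \in NT$, $Y_0 \in T$, $Y_1 \in NT$

Type 2: $X \rightarrow Y_0 \ldots Y_n$, where $X \in NT$ and $Y_i \in T \cup NT$

Type 1: $X_0\, A\, X_1 \rightarrow X_0\, B_0 \ldots B_n\, X_1$, where $A \in NT$ and $B_i \in T \cup NT$

Type 0: any symbol rewrite grammar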


Generative power and Computation

Different notations have different properties, just as different programming languages do, including

Generative Power

Recognizability

Computational Efficiency; Availability of Tools

Descriptive elegance


Properties of Type 3

Regular expressions (Type 3 grammars) are recognizable by finite-state machines

Fast!

Strings in the language are recognized in linear time

Decidable whether a string is in the language

Well-studied algorithms for manipulating them

Supported in lots of software

Least expressive (no centre embedding)

. . . but not always descriptively elegant
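The linear-time claim can be seen in a hand-built deterministic finite-state machine for (ab)+, one of the regular-expression examples. The transition table below is our own construction: each input character costs one table lookup, and a missing transition rejects immediately.

```python
# Hand-built DFA for the regular expression (ab)+ (our own construction).
# States: 0 = start, 1 = just read 'a', 2 = just read 'b' (accepting).
TRANSITIONS = {(0, "a"): 1, (1, "b"): 2, (2, "a"): 1}
ACCEPTING = {2}

def accepts(text, start=0):
    """Scan text once, one table lookup per character: O(n) time."""
    state = start
    for ch in text:
        state = TRANSITIONS.get((state, ch))
        if state is None:          # no transition defined: reject at once
            return False
    return state in ACCEPTING

for s in ["ab", "abab", "aba", "ba", ""]:
    print(repr(s), accepts(s))
```

The machine needs no memory beyond its current state, which is also why it cannot track unbounded centre embedding.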


Type 3 Usage

used extensively in phonology and morphology

and elsewhere, e.g. sentence boundary detection

very good at simple pattern matching (e.g. codes of various sorts)
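Sentence boundary detection with a single regular expression might look like the following naive Python sketch (our illustration, not a method from the slides): split after ".", "!" or "?" when whitespace and then a capital letter or digit follow.

```python
import re

# Naive rule (an assumption): a sentence ends at '.', '!' or '?' when it is
# followed by whitespace and then an upper-case letter or a digit.
BOUNDARY = re.compile(r"(?<=[.!?])\s+(?=[A-Z0-9])")

def split_sentences(text):
    """Split text at every position matched by the naive boundary rule."""
    return [s for s in BOUNDARY.split(text.strip()) if s]

text = ("Dickkopf-1 (Dkk-1) is a potent head inducer in Xenopus. This effect "
        "can be attributed to its capability to specifically inhibit "
        "Wnt/beta-catenin signalling. Recent data point to a crucial role for Dkk-1.")
for sentence in split_sentences(text):
    print(sentence)
```

The rule is fast but brittle: “Mr. Gradgrind wants facts.” is split after “Mr.”, which is why practical splitters add abbreviation lists or statistical models on top.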


Context Free Grammars

Context-free grammars (CFGs) are Type 2 in the Chomsky hierarchy.

Each rule takes the form $X \rightarrow Y_0 \ldots Y_n$, with a single non-terminal X on the left-hand side


Simple CFG example

S → NP VP

VP → Vi

VP → Vt NP

VP → VP PP

NP → DT NN

NP → NP PP

PP → P NP

Vi → stopped

Vt → inhibited

NN → process

NN → reaction

NN → drug

DT → the

P → with

P → in

The process stopped

The drug inhibited the reaction

The reaction inhibited the process with the drug

* The drug inhibited
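To make the notion of derivation concrete for this toy grammar, a small Python sketch can enumerate every sentence derivable within a fixed derivation depth. The depth bound is our addition: it is needed because VP → VP PP and NP → NP PP make the language infinite. Sentences come out in lower case, as in the lexical rules.

```python
from itertools import product

# The toy grammar from the slide: non-terminal -> list of right-hand sides.
GRAMMAR = {
    "S":  [["NP", "VP"]],
    "VP": [["Vi"], ["Vt", "NP"], ["VP", "PP"]],
    "NP": [["DT", "NN"], ["NP", "PP"]],
    "PP": [["P", "NP"]],
    "Vi": [["stopped"]],
    "Vt": [["inhibited"]],
    "NN": [["process"], ["reaction"], ["drug"]],
    "DT": [["the"]],
    "P":  [["with"], ["in"]],
}

def expand(symbol, depth):
    """Yield every word sequence derivable from symbol in a derivation tree
    with at most `depth` levels of rule applications."""
    if symbol not in GRAMMAR:          # terminal: derives only itself
        yield [symbol]
        return
    if depth == 0:                     # no budget left for a non-terminal
        return
    for rhs in GRAMMAR[symbol]:
        options = [list(expand(child, depth - 1)) for child in rhs]
        for parts in product(*options):
            yield [word for part in parts for word in part]

def language(depth=5):
    """All sentences derivable from S within the depth bound."""
    return {" ".join(words) for words in expand("S", depth)}

sentences = language(5)
print(len(sentences), sorted(sentences)[:3])
```

“the drug inhibited” is never produced at any depth, since Vt only appears in VP → Vt NP.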


Properties of CFGs

CFGs are also well understood mathematically

a string can be recognized in $O(n^3)$ time (CYK algorithm)

the union of two context-free languages is context-free (but their intersection need not be)

given two CFGs A and B, whether L(A) = L(B) is undecidable

used extensively in programming language definitions

have lots of supporting tools
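The $O(n^3)$ bound can be seen in a CYK chart for the toy grammar from the simple CFG example, extended here to count parses rather than only accept. A minimal sketch under our assumptions: that grammar is in Chomsky normal form apart from the unary rule VP → Vi, which is handled with one extra step per chart cell, and sentences are lower-cased.

```python
from collections import defaultdict

# Binary rules (A, B, C) for A -> B C, the one unary rule, and the lexicon
# of the toy grammar.
BINARY = [
    ("S", "NP", "VP"), ("VP", "Vt", "NP"), ("VP", "VP", "PP"),
    ("NP", "DT", "NN"), ("NP", "NP", "PP"), ("PP", "P", "NP"),
]
UNARY = [("VP", "Vi")]
LEXICON = {
    "stopped": ["Vi"], "inhibited": ["Vt"], "process": ["NN"],
    "reaction": ["NN"], "drug": ["NN"], "the": ["DT"], "with": ["P"], "in": ["P"],
}

def apply_unary(cell):
    # One pass suffices: the only unary rule is VP -> Vi (no unary chains).
    for parent, child in UNARY:
        cell[parent] += cell[child]

def count_parses(words, start="S"):
    n = len(words)
    # chart[i][j][X] = number of distinct trees rooted in X over words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):
        for cat in LEXICON.get(w, []):
            chart[i][i + 1][cat] += 1
        apply_unary(chart[i][i + 1])
    for length in range(2, n + 1):            # span length
        for i in range(n - length + 1):       # span start
            j = i + length
            for k in range(i + 1, j):         # split point: three nested loops
                left, right = chart[i][k], chart[k][j]
                for parent, b, c in BINARY:
                    if left[b] and right[c]:
                        chart[i][j][parent] += left[b] * right[c]
            apply_unary(chart[i][j])
    return chart[0][n][start]

for sentence in ["the process stopped",
                 "the drug inhibited the reaction",
                 "the reaction inhibited the process with the drug",
                 "the drug inhibited"]:
    print(count_parses(sentence.split()), sentence)
```

The PP-attachment sentence gets a count of 2 (one tree attaching “with the drug” to the VP, one to the NP), and “the drug inhibited” gets 0.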


CFG Descriptive Inadequacies

$X \rightarrow Y_0 \ldots Y_n$

The X and $Y_i$ are atomic symbols, quite unrelated to each other.

Lexical Features

but singular, plural, first-person, mass . . . nouns are all nouns

Phrasal categories

the fact that the heads of NP, VP, PP . . . are N, V, P . . . is no accident

Coordination

How can a CFG express the fact that like coordinates with like?


Beyond CFG

Replace unstructured names by structured ones

np[case = acc, per = 3] → him

np[case = nom, per = 3] → he

np[per = 3] → John

np[per = A] → John

S:[] -> NP:[per=P num=N] VP:[per=P num=N]

NP:[per=P num=N pronom=yes] -> N:[per=P num=N pronom=yes]

VP:[per=P num=N] -> V:[per=P num=N val=intrans]

VP:[per=P num=N] -> V:[per=P num=N val=trans] NP:[]


Beyond CFG: Computational Implications

These grammars often have a context-free backbone

but add an information-merging operation (unification)

“Unification” grammars are at least as powerful as Type 1

and, in the worst case, recognition may be undecidable

but, in practice, they seem “ok”

but you might not want to parse Medline too often
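The information-merging operation can be illustrated with flat feature structures held in Python dicts: unification succeeds by merging the two, and fails on conflicting values. This is a deliberately minimal sketch (no nested structures and no shared variables such as P and N in the rules above), not how any particular unification grammar system implements it; the subject requirement is a hypothetical example.

```python
def unify(f, g):
    """Merge two flat feature structures (dicts); return None on a clash.

    Minimal sketch: real unification grammars use nested structures and
    shared variables, which this function does not model."""
    result = dict(f)
    for feature, value in g.items():
        if feature in result and result[feature] != value:
            return None            # e.g. case=nom vs case=acc: unification fails
        result[feature] = value
    return result

he = {"case": "nom", "per": 3}
him = {"case": "acc", "per": 3}
subject_slot = {"case": "nom"}     # hypothetical requirement on a subject NP
print(unify(subject_slot, he))     # {'case': 'nom', 'per': 3}
print(unify(subject_slot, him))    # None
```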


Syntactic categories

Grammatical Categories

What are the categories?

Linguists explain the distribution of sequences of words by appealing to the notion of category

Engineers (e.g. programming language designers) can design their own


Parts of Speech: Word-level categories

Major word-level categories are N (noun), V (verb), A (adjective) and P (preposition)

Traditionally, nouns denote things, verbs actions . . .


Parts of Speech: Word level categories

Linguists prefer other criteria, especially distributional ones

Identify a frame such as: “They/it can ___”

Claim: only items of category X (here, verbs in base form) can fill the frame


Nouns

N: “___ can be a pain in the neck”

Dogs/John/Linguistics can be a pain in the neck

*happily/swam/under can be a pain in the neck


Adjective/Adverb

Adj: “He is very ___”

He is very green/tall/large.

*He is very the/dogs/swam

Adv: “She treats him ___”

She treats him badly/politely/happily

*She treats him green/swam/dogs


Phrase-level categories

Major phrasal categories are NP, VP, PP and AP

Dogs/John/Linguistics can be a pain in the neck

The mixture/Two alternative splicings/The protein bound at position 5/... can be a pain in the neck

Each phrase has a key, obligatory element called its “head”: the head of an NP is an N, the head of a VP is a V, and so forth.


How to Diagnose a Constituent

substitution

movement

insertion

omission

coordination


Examples 1

KPT inhibits the nociceptive effect of IL-1 in mice


Examples 2

Blocking of dickkopf-1 counteracts limb truncations and microphthalmia

dickkopf-1 is a negative regulator of normal bone homeostasis

Conjunction, noun compounding, and PP-attachment are pervasive sources of ambiguity
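How quickly noun compounding multiplies analyses can be sketched by enumerating every binary bracketing of a word sequence. This treats every bracketing as possible, which is an assumption: a real grammar or real semantics would rule some out. The number of bracketings of n words follows the Catalan numbers (1, 1, 2, 5, 14, 42, ...).

```python
from functools import lru_cache

def bracketings(words):
    """All binary bracketings of a word sequence, e.g. a noun compound."""
    words = tuple(words)

    @lru_cache(maxsize=None)
    def go(i, j):
        # Every way to build a binary tree over words[i:j].
        if j - i == 1:
            return (words[i],)
        out = []
        for k in range(i + 1, j):             # split into words[i:k] + words[k:j]
            for left in go(i, k):
                for right in go(k, j):
                    out.append(f"({left} {right})")
        return tuple(out)

    return list(go(0, len(words)))

print(bracketings("normal bone homeostasis".split()))
print([len(bracketings(["w"] * n)) for n in range(1, 7)])
```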


Ambiguity & Parse Selection

Ambiguity is pervasive

Ambiguity is pervasive and a good source of humour . . .

1 Children make tasty snacks

2 Police Help Dog Bite Victim

3 British Left Waffles on Falkland Islands

4 Prostitutes appeal to the Pope

5 Attenborough writes book on penguins

6 New vaccine may contain rabies

7 Prime Minister Used to Be a Woman


Example of the state of the art

Enju is a parser based on a unification grammar with a context-free backbone

http://www-tsujii.is.s.u-tokyo.ac.jp/enju/

Intravenous interleukin-8 inhibits granulocyte emigration from rabbit mesenteric venules without altering L-selectin expression or leukocyte rolling.

Ley K et al., J Immunol. 1993 Dec 1


Choosing the most likely parse

Can we add probabilities to our grammars to aid parse selection?

S → NP VP 1.0

VP → Vi 0.4

VP → Vt NP 0.4

VP → VP PP 0.2

NP → DT NN 0.3

NP → NP PP 0.7

PP → P NP 1.0

Vi → stopped 1.0

Vt → inhibited 1.0

NN → process 0.7

NN → reaction 0.2

NN → drug 0.1

DT → the 1.0

P → with 0.5

P → in 0.5


The probability of a sentence

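The probability of a derivation D is the product of the probabilities of the rules used in it:

$$p(D) = \prod_{X \rightarrow Y \in D} p(X \rightarrow Y \mid X)$$

The probability of a sentence is then the sum of p(D) over all of its derivations.

There’s even a reasonably efficient method (Viterbi) for picking the most probable tree without actually enumerating them all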
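The Viterbi idea can be sketched as a CKY-style chart that keeps, for every span and category, only the highest-probability analysis plus a back-pointer to it. This Python sketch hard-codes the rule probabilities of the toy PCFG given earlier in this section; handling VP → Vi with a single unary step per cell is our simplification.

```python
from collections import defaultdict

# The toy PCFG: binary rules, the one unary rule, and lexical rules.
BINARY = {("S", "NP", "VP"): 1.0, ("VP", "Vt", "NP"): 0.4,
          ("VP", "VP", "PP"): 0.2, ("NP", "DT", "NN"): 0.3,
          ("NP", "NP", "PP"): 0.7, ("PP", "P", "NP"): 1.0}
UNARY = {("VP", "Vi"): 0.4}
LEXICAL = {("Vi", "stopped"): 1.0, ("Vt", "inhibited"): 1.0,
           ("NN", "process"): 0.7, ("NN", "reaction"): 0.2,
           ("NN", "drug"): 0.1, ("DT", "the"): 1.0,
           ("P", "with"): 0.5, ("P", "in"): 0.5}

def viterbi_parse(words, start="S"):
    """Return (probability, tree) of the most probable parse, or (0.0, None)."""
    n = len(words)
    best = defaultdict(float)   # (i, j, X) -> best probability of X over words[i:j]
    back = {}                   # (i, j, X) -> the tree achieving that probability

    def unary_closure(i, j):
        # Only one unary rule (VP -> Vi), so a single pass is enough.
        for (parent, child), p in UNARY.items():
            score = p * best[i, j, child]
            if score > best[i, j, parent]:
                best[i, j, parent] = score
                back[i, j, parent] = (parent, back[i, j, child])

    for i, w in enumerate(words):
        for (tag, word), p in LEXICAL.items():
            if word == w:
                best[i, i + 1, tag] = p
                back[i, i + 1, tag] = (tag, w)
        unary_closure(i, i + 1)
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for (parent, left, right), p in BINARY.items():
                    score = p * best[i, k, left] * best[k, j, right]
                    if score > best[i, j, parent]:   # keep only the best analysis
                        best[i, j, parent] = score
                        back[i, j, parent] = (parent, back[i, k, left], back[k, j, right])
            unary_closure(i, j)
    return best[0, n, start], back.get((0, n, start))

prob, tree = viterbi_parse("the reaction inhibited the process with the drug".split())
print(f"{prob:.4g}", tree)
```

On this sentence the winning tree attaches “with the drug” to the NP “the process”, with probability about 5.29e-05; the VP-attachment tree scores about 1.51e-05. All other rules being shared, the difference is NP → NP PP (0.7) against VP → VP PP (0.2), a purely structural preference that ignores the words themselves.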


Parameter Estimation

Where do the probabilities in the grammar come from?

If we have a syntactically annotated (parsed) corpus, then we can count rule uses in the corpus


Example

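[Figure: three example parse trees; between them they contain five NPs, three expanded as NP → Det N and two as NP → PN]

$p(\mathrm{NP} \rightarrow \mathrm{Det\ N} \mid \mathrm{NP}) = 3/5 = 0.6$

$p(\mathrm{NP} \rightarrow \mathrm{PN} \mid \mathrm{NP}) = 2/5 = 0.4$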
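Counting in a treebank amounts to relative-frequency estimation, $p(X \rightarrow Y \mid X) = \mathrm{count}(X \rightarrow Y) / \mathrm{count}(X)$. In this Python sketch the three small trees are hypothetical, chosen only so that NP → Det N occurs three times and NP → PN twice, which reproduces the 0.6 and 0.4 of the example; lexical rules are skipped for brevity.

```python
from collections import Counter

# Trees as nested tuples (label, child, ...); a leaf is a plain string.
# Hypothetical mini-treebank: 3 x NP -> Det N and 2 x NP -> PN.
TREEBANK = [
    ("S", ("NP", ("Det", "the"), ("N", "cell")),
          ("VP", ("V", "binds"), ("NP", ("Det", "the"), ("N", "protein")))),
    ("S", ("NP", ("Det", "the"), ("N", "drug")), ("VP", ("V", "works"))),
    ("S", ("NP", ("PN", "Dkk-1")), ("VP", ("V", "inhibits"), ("NP", ("PN", "Wnt")))),
]

def rules(tree):
    """Yield (parent, children) for every non-lexical rule used in tree."""
    if isinstance(tree, str):
        return
    label, *children = tree
    if all(isinstance(c, tuple) for c in children):
        yield label, tuple(c[0] for c in children)
    for child in children:
        yield from rules(child)

def estimate(treebank):
    """Relative-frequency estimate: count(X -> Y) / count(X)."""
    rule_counts = Counter(r for t in treebank for r in rules(t))
    parent_counts = Counter()
    for (parent, _), c in rule_counts.items():
        parent_counts[parent] += c
    return {rule: c / parent_counts[rule[0]] for rule, c in rule_counts.items()}

probs = estimate(TREEBANK)
print(probs[("NP", ("Det", "N"))], probs[("NP", ("PN",))])   # 0.6 0.4
```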


Bias

PCFGs do give a notion of parse probability

They enable you to rank parses

They do have a built-in bias

shorter derivations are more probable, ceteris paribus

probabilities are based on only a small amount of structural information

probabilities don’t take account of lexical dependencies


Lexical influences

[Figure: two bracketings of “lung inflammatory response”: lung (inflammatory response) and (lung inflammatory) response]

An SCFG assigns the same probability to these two trees, but inflammatory response is a likely lexical association


Lexical influences

[Figure: two bracketings of “fresh gas-flow rates”: (fresh gas-flow) rates and fresh (gas-flow rates)]

An SCFG assigns the same probability to these two trees, but fresh gas-flow is a likely lexical association


Lexical influences

HIV gag-1 modulates protease activity by a cleavage site

[Figure: parse tree in which the PP “by . . .” attaches to the VP “modulates protease activity” rather than to the NP “protease activity”]

modulate . . . by is a likely lexical association


Extending the probabilistic conditioning

PCFGs are poor because the only conditioning is on the left-hand side: $p(X \rightarrow Y \mid X)$

So . . . condition on more!

e.g. more ancestors than the immediate parent

e.g. lexical material
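One common way to condition on more than the immediate parent is parent annotation: each phrasal label X is rewritten as X^parent before rule probabilities are estimated, so a subject NP (NP^S) and an object NP (NP^VP) get separate rule distributions. A minimal Python sketch, assuming trees encoded as nested tuples (label, child, ...) with words as strings; the example tree is hypothetical, and leaving pre-terminals unannotated is our choice.

```python
def parent_annotate(tree, parent="ROOT"):
    """Return a copy of tree in which every phrasal label X becomes X^parent.

    Trees are nested tuples (label, child, ...); leaves are strings.
    Pre-terminals (a label over a single word) are left unannotated."""
    if isinstance(tree, str):
        return tree
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return tree                                   # pre-terminal: keep as is
    return (f"{label}^{parent}",) + tuple(parent_annotate(c, label) for c in children)

tree = ("S", ("NP", ("PN", "Dkk-1")),
             ("VP", ("V", "inhibits"), ("NP", ("PN", "Wnt"))))
print(parent_annotate(tree))
# ('S^ROOT', ('NP^S', ('PN', 'Dkk-1')), ('VP^S', ('V', 'inhibits'), ('NP^VP', ('PN', 'Wnt'))))
```

The price is the one noted below: more distinct symbols means fewer training examples per rule.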


Going beyond PCFGs

The “best” form of statistical parser is an active research issue

They all need training material, which is expensive to come by

The more features one adds, the sparser the data . . .

Dependency analyses

Sentence structure: binary asymmetric relations between words

Each relation has a head and a modifier

Grammatical relations include

subject-of

object-of


Dependency Example


Example Parse

In the absence of stg, these cells are inappropriately recruited.

(|dobj| |In:1_II| |absence:3_NN1|)

(|det| |absence:3_NN1| |the:2_AT|)

(|iobj| |absence:3_NN1| |of:4_IO|)

(|dobj| |of:4_IO| |stg:5_NP1|)

(|det| |cell+s:8_NN2| |these:7_DD2|)

(|ncmod| _ |recruit+ed:11_VVN| |In:1_II|)

(|ncsubj| |recruit+ed:11_VVN| |cell+s:8_NN2| _)

(|aux| |recruit+ed:11_VVN| |be+:9_VBR|)

(|passive| |recruit+ed:11_VVN|)


Advantages of Dependency representations

Dependency links are closer to semantics.

subject-of . . . is (nearly) the right vocabulary

No need to read them off a tree structure

May facilitate comparison of different analysers

Later processors only need to search in a flat list of bi-lexical relations
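Searching a flat list of bi-lexical relations can be illustrated on the example parse of “In the absence of stg, these cells are inappropriately recruited.” This Python sketch reads each line of that output into a (relation, head, dependent) triple. Treating `_` as an empty slot and taking the first two remaining arguments as head and dependent is our simplification: it fits these nine lines but not necessarily every relation type.

```python
import re

GRS = """\
(|dobj| |In:1_II| |absence:3_NN1|)
(|det| |absence:3_NN1| |the:2_AT|)
(|iobj| |absence:3_NN1| |of:4_IO|)
(|dobj| |of:4_IO| |stg:5_NP1|)
(|det| |cell+s:8_NN2| |these:7_DD2|)
(|ncmod| _ |recruit+ed:11_VVN| |In:1_II|)
(|ncsubj| |recruit+ed:11_VVN| |cell+s:8_NN2| _)
(|aux| |recruit+ed:11_VVN| |be+:9_VBR|)
(|passive| |recruit+ed:11_VVN|)"""

# A field is either |...| or the placeholder _.
FIELD = re.compile(r"\|([^|]*)\||(_)")

def parse_grs(text):
    """Turn each line into (relation, head, dependent-or-None)."""
    triples = []
    for line in text.splitlines():
        fields = [bar if bar else underscore for bar, underscore in FIELD.findall(line)]
        relation, *args = fields
        args = [a for a in args if a != "_"]          # drop empty slots
        dependent = args[1] if len(args) > 1 else None
        triples.append((relation, args[0], dependent))
    return triples

grs = parse_grs(GRS)
# Who was recruited? Look up the subject of "recruit" in the flat list.
subjects = [dep for rel, head, dep in grs
            if rel == "ncsubj" and head.startswith("recruit")]
print(subjects)   # ['cell+s:8_NN2']
```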


Dependency Parsing

There are specialist dependency parsers

Or, there are translators from trees to grammatical relations

A search engine that exploits grammatical relations:

http://www-tsujii.is.s.u-tokyo.ac.jp/medie/


Use of syntax in selected IE Systems

IE in bioinformatics

Motto: Get the facts, not the documents

BioCreative 2 (2007) is the most recent competitive evaluation of work in this field

The PPI Task: Given a full-text article, identify pairs of interacting proteins

identify strings that refer to proteins

identify proteins referred to by those strings

identify pairs that are claimed to be interacting


Some bounds

OntoGene in BioCreative II (Rinaldi et al., Genome Biology, 2008)

generate all pairs of proteins in the abstract?

recall of 35% but precision < 1%

generate all pairs in the same sentence?

recall of 21%; precision 8%
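The two co-occurrence baselines can be sketched as follows, together with the precision and recall used to score them. The protein lists and gold pairs are hypothetical toy data, not BioCreative data; they only show why pairing across the whole document raises recall and lowers precision relative to pairing within sentences.

```python
from itertools import combinations

def cooccurrence_pairs(sentences):
    """Every unordered pair of distinct proteins sharing a sentence.
    Each sentence is given as the list of protein names found in it."""
    pairs = set()
    for proteins in sentences:
        for a, b in combinations(sorted(set(proteins)), 2):
            pairs.add(frozenset((a, b)))
    return pairs

def precision_recall(predicted, gold):
    tp = len(predicted & gold)                      # correctly proposed pairs
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    return precision, recall

# Hypothetical toy document: proteins found in each of three sentences.
SENTENCES = [["A", "B"], ["B", "C", "D"], []]
GOLD = {frozenset(("A", "B")), frozenset(("A", "D"))}

same_sentence = cooccurrence_pairs(SENTENCES)
whole_document = cooccurrence_pairs([sum(SENTENCES, [])])   # one big "sentence"
print("same sentence:", precision_recall(same_sentence, GOLD))
print("whole document:", precision_recall(whole_document, GOLD))
```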


Ontogene approach

Hand-code rules over dependency parses

X interacts with Y if

1 subj(X, H) & prep-obj(Y, H) & prot(X) & prot(Y)

2 subj(X, H) & prep-obj(Z, H) & conj(Y, Z) & prot(X) & prot(Y)

recall of 25%; precision 26%
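The two rules can be read as joins over dependency facts. A minimal Python sketch, assuming each relation is available as a set of (dependent, head) pairs, conj as (Y, Z) pairs, and prot as the set of words already recognised as proteins; the example sentence and its dependency facts are hypothetical, and the direction of conj in practice depends on the parser.

```python
def interactions(subj, prep_obj, conj, prot):
    """Return (X, Y) pairs licensed by the two hand-coded rules.

    subj and prep_obj hold (dependent, head) pairs, conj holds (Y, Z) pairs,
    prot is the set of words recognised as proteins (all assumptions)."""
    found = set()
    for x, h in subj:
        if x not in prot:
            continue
        for z, h2 in prep_obj:
            if h2 != h:
                continue
            if z in prot:                       # rule 1 (with Y = Z)
                found.add((x, z))
            for y, z2 in conj:                  # rule 2: Y conjoined with Z
                if z2 == z and y in prot:
                    found.add((x, y))
    return found

# Hypothetical analysis of "ProtA interacts with ProtB and ProtC".
subj = {("ProtA", "interacts")}
prep_obj = {("ProtB", "interacts")}
conj = {("ProtC", "ProtB")}
prot = {"ProtA", "ProtB", "ProtC"}
print(sorted(interactions(subj, prep_obj, conj, prot)))
# [('ProtA', 'ProtB'), ('ProtA', 'ProtC')]
```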


Protein corral

a web application that combines IR and IE over Medline

retrieves Medline abstracts similarly to PubMed

analyzes each and summarizes protein associations

PPI: NLP pattern matching

Co3: tri-co-occurrence (2 proteins and a verb)

Co: bi-co-occurrence (2 proteins only)

http://www.ebi.ac.uk/Rebholz-srv/pcorral/


Akane approach

AKANE system: protein-protein interaction pairs in the BioCreative2 challenge, PPI-IPS subtask (Saetre et al., Proc. BioCreative II, 2007)

Assume any sentence mentioning two proteins known to interact actually describes that interaction

Parse with Enju

Extract the smallest tree covering both proteins

Use this as a pattern if its overall accuracy on training data is “ok”

Remove pairs that don’t share a species

recall of 19%; precision 11%


Colorado system: a specialist “semantic parser”

Some example rules

NUCLEUS := nucleus, nuclei, nuclear

PROTEIN-TRANSPORT := ([TRANSPORTED-ENTITY dep:x] _ [ACTION-TRANSPORT head:x])
@ (from {det}? [TRANSPORT-ORIGIN])]
@ (to {det}? [TRANSPORT-DESTINATION]);

recall of 31%; precision 39%


Edinburgh TXM system

Adapting a relation extraction pipeline for the BioCreative II Task (Grover et al., Proc. BioCreative II, 2007)

propose ALL pairs of proteins within n sentences of each other

Filter using a MaxEnt classifier with features such as. . .

the two words surrounding each protein name

the distance between the protein names

the interaction word

the distance from the interaction word to the nearest protein

various pattern matches including . . .

PROT WORD{0,2} INTERACTION WORD{0,2} PROT

PROT WORD{0,1} PROT WORD{0,2} “complex”
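Pattern features of this kind can be approximated by encoding each token as a single symbol and running an ordinary regular expression over the encoded string. A sketch under stated assumptions: the protein and interaction-word lists are hypothetical, tokens are whitespace-separated, and WORD is taken to mean any token that is not a protein name. This is not the TXM implementation.

```python
import re

# Hypothetical word lists, for illustration only.
PROTEINS = {"ProtA", "ProtB"}
INTERACTION_WORDS = {"interacts", "binds", "associates"}

def encode(tokens):
    """Map each token to one symbol: P = protein, I = interaction word,
    C = the word 'complex', W = any other word."""
    out = []
    for t in tokens:
        if t in PROTEINS:
            out.append("P")
        elif t in INTERACTION_WORDS:
            out.append("I")
        elif t.lower() == "complex":
            out.append("C")
        else:
            out.append("W")
    return "".join(out)

# WORD = any non-protein token (our assumption), i.e. one of W, I, C.
PATTERNS = {
    "interaction": re.compile(r"P[WIC]{0,2}I[WIC]{0,2}P"),   # PROT WORD{0,2} INTERACTION WORD{0,2} PROT
    "complex": re.compile(r"P[WIC]{0,1}P[WIC]{0,2}C"),       # PROT WORD{0,1} PROT WORD{0,2} "complex"
}

def pattern_features(sentence):
    """Binary features: does each pattern match anywhere in the sentence?"""
    code = encode(sentence.split())
    return {name: bool(p.search(code)) for name, p in PATTERNS.items()}

print(pattern_features("ProtA directly interacts with ProtB"))
print(pattern_features("ProtA and ProtB form a complex"))
```

In a pipeline like the one described, such booleans would be only some of the features passed to the classifier, not decisions in themselves.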


Edinburgh TXM system: Document filter

Filter again using document level features such as

frequency of interaction mentions in the document

whether most interaction mentions are extra-sentential

whether the title contains a mention

whether the species of both proteins are the same

recall of 30%; precision 28%

Summary

Documents are more than bags of words

Syntactic engines vary

regular expression engines

context free engines

“advanced linguistic” engines

special purpose (“semantic”) engines

IE applications exploit, variously,

different syntactic engines

patterns over engine outputs

statistics

machine learning

The future?